'Apple Glass' & iPhone combo may react to gestures like nodding and shaking your head

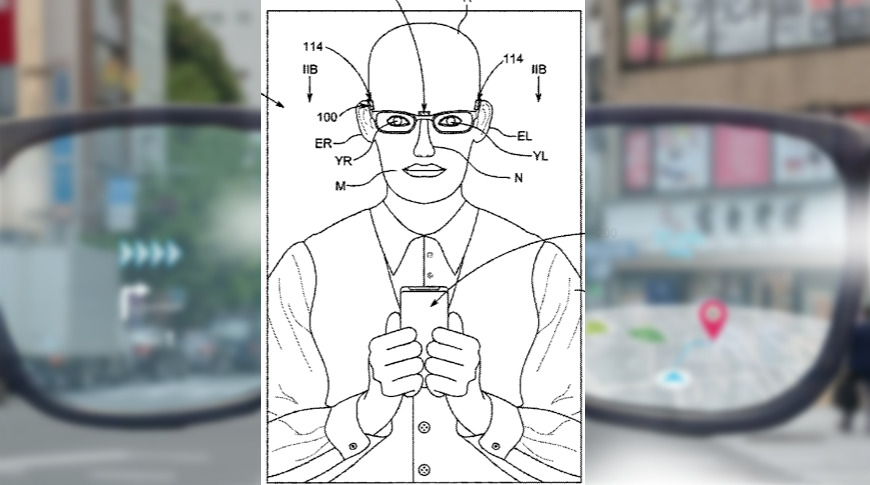

Where a user is wearing "Apple Glass" or other head-mounted device, their iPhone may be able to recognize and respond to head gestures.

It's not that long since Steve Jobs showed us the Multi-Touch iPhone screen we're all so familiar with — and it's truly not very much time at all since Apple brought Siri to the iPhone. Yet in a newly revealed patent, Apple suggests both touch and voice are now too old-fashioned, and what we really need is for our iPhones to recognize when we're shaking our heads at them.

"[Current device control] often requires the user to interact with the sensing components actively, such as via touch or speech," says Apple's "Monitoring a user of a head-wearable electronic device" patent. If it doesn't go so far as to sound sniffy, it is definitely dismissive of talking or typing, and says that detecting changing light patterns is the future.

That sounds like Face ID, but it's more as if Face ID were reversed. Instead of the iPhone having the TrueDepth technology that powers Face ID, and scanning a user's face, Apple proposes that "Apple Glass" detect facial expressions or movement, and transmit that to the iPhone.

"Apple Glass," or any other head-worn device such as "headphones... a mask... earmuffs or any combination thereof," can contain light sensors. The sensors "detect light reflected by and/or transmitted through a portion of the user's head (e.g., using photoplethysmography ("PPG"))."

The patent proposes that sensors be placed at different points across or over the head-mounted device, and says that "due to such positioning, the sensor data from the light sensors can capture movement of anatomical features in the tissue of the head of the user and can be used to determine any suitable gestures of the user."

At its most basic, if you're shaking your head vigorously, the light sensors will detect that movement. However, Apple's proposal is much finer than that. "[Sensor] data from the photodiodes can capture expansion, contraction, and/or any other suitable movement in the tissue of the user during a head gesture."

That does mean shaking or nodding of the head, but also "chewing, blinking, winking, smiling, eyebrow raising, jaw motioning (e.g., jaw protrusion, jaw retrusion, lateral jaw excursion, jaw depression, jaw elevation, etc.), mouth opening, and/or the like."

The patent's 35,000 words go into elaborate detail over every combination of facial feature or head gesture that might be detected, plus multiple ways of using different types of light sensor to determine it.

What Apple's patent is not particularly concerned with is what an iPhone or other device would actually do with the information. Nonetheless, there are situations where it would be of obvious benefit. Say you're dictating into Siri on your iPhone and you pause.

By seeing that your expression shows you're thinking, Siri could know that it should wait. Alternatively, if your expression is telling the iPhone to please get on with it, then Siri could reasonably infer that you'd finished dictating and stop listening.

This patent is credited to three inventors, including Joel S. Armstrong-Muntner. His previous patents include a related one on optical sensing mechanisms for input devices.

Separately, Apple has also now been granted a patent on a related principle to do with how to transmit data between devices, such as "Apple Glass" and iPhones. An Apple AR device is likely to be generating a lot of data and perhaps also receiving it, too, so there is an issue over how quickly that can be done.

One of Apple's proposed solutions is to minimize just how much data is transmitted. "Low bandwidth transmission of event data" is a newly granted patent by Ian M. Richter.

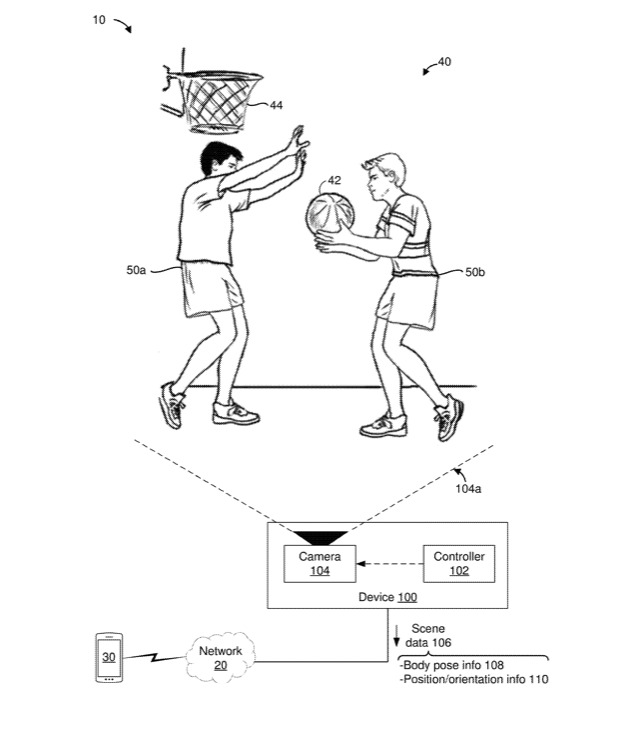

This is specifically about video data and Apple's patent covers all forms of "capturing images and transmitting the images over a network," whether that's between devices, or to "broadcast the video via a television channel." In each case, though, the issue is to do with how these transmissions "tend to require a significant amount of bandwidth."

Just as the head-mounted display patent deals with detecting head and facial movements, this one is concerned with the similar issues of detecting "body poses." With the ability to recognize a person's gait, systems could transmit the information describing that instead of relaying each pixel of video.
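To get a feel for why describing a pose could be so much cheaper than sending pixels, here is a rough back-of-envelope sketch in Python. The frame size, the 17-joint skeleton, the 32-bit float encoding, and the function names are all illustrative assumptions, not details from the patent.

```python
import struct

# Assumed values for illustration only; none of these come from the patent.
FRAME_WIDTH = 1920
FRAME_HEIGHT = 1080
BYTES_PER_PIXEL = 3      # uncompressed 8-bit RGB
NUM_JOINTS = 17          # hypothetical skeleton size


def raw_frame_bytes(width=FRAME_WIDTH, height=FRAME_HEIGHT, bpp=BYTES_PER_PIXEL):
    """Size of one uncompressed video frame in bytes."""
    return width * height * bpp


def encode_pose(joints):
    """Pack a list of (x, y) joint positions as little-endian 32-bit floats."""
    flat = [coord for joint in joints for coord in joint]
    return struct.pack(f"<{len(flat)}f", *flat)


def decode_pose(payload):
    """Inverse of encode_pose: recover the list of (x, y) tuples."""
    count = len(payload) // 4
    flat = struct.unpack(f"<{count}f", payload)
    return list(zip(flat[0::2], flat[1::2]))


# A made-up pose: one (x, y) pair per joint.
pose = [(float(i), float(i) * 2.0) for i in range(NUM_JOINTS)]
payload = encode_pose(pose)
print(raw_frame_bytes())   # bytes in one raw frame
print(len(payload))        # bytes in one pose message
```

Under these assumptions, one raw frame is about 6.2MB while the pose message is 136 bytes. Real video is compressed before it is sent, so the gap in practice would be smaller, but the direction of the saving is the same.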

Video compression such as MPEG relies on how a background may change far less than a human being moving through it. If a system knows where the person has moved, it can then know which parts of the background to redraw, rather than having to receive the entire background image again.
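The general idea of only resending what has changed can be sketched in a few lines of Python. This is a deliberately simplified illustration, not how MPEG or Apple's patent actually works: the `changed_blocks` function, the tile size, and the tiny 4x4 "frames" are all hypothetical.

```python
def changed_blocks(previous, current, block=2):
    """Return (row, col) origins of block x block tiles that differ between frames.

    Frames are equal-sized lists of rows of pixel values.
    """
    changed = []
    for top in range(0, len(current), block):
        for left in range(0, len(current[0]), block):
            # Cut the same tile out of both frames and compare them.
            old = [row[left:left + block] for row in previous[top:top + block]]
            new = [row[left:left + block] for row in current[top:top + block]]
            if old != new:
                changed.append((top, left))
    return changed


background = [[0] * 4 for _ in range(4)]
moved = [row[:] for row in background]
moved[3][3] = 9   # a single pixel changes in the bottom-right tile
print(changed_blocks(background, moved))   # prints [(2, 2)]
```

Only the one tile that changed would need to be sent again; the other three could be reused from the previous frame.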

"[A] representation of a physical environment may be transformed by graphically modifying (e.g., enlarging) portions thereof, such that the modified portion may be representative but not photorealistic versions of the originally captured images," says Apple's patent. "As a further example, a representation of a physical environment may be transformed by graphically eliminating or obfuscating portions thereof."

William Gallagher

1 Comment

Serious question here... will Siri know when I give it the finger then?