Future Apple AR devices, such as "Apple Glass," may include holograms to make moving through virtual environments feel more natural.

Apple already has a great many patents and patent applications concerning how information could be displayed on "Apple Glass," or similar devices. For instance, when you hold up two items in an Apple Store, the glasses could display a bullet list of their differences and similarities.

The company has also investigated a more 3D approach with realistic surround audio effects. Now, among the sea of Apple AR research, there's a newly granted patent concerned with improving visual 3D experiences.

"Scene camera," by UK-based inventors Richard J. Topliss and Michael David Simmonds, proposes supplementing images on "Apple Glass" with holographic ones. The aim is to make wearers feel "as if they were physically in that environment."

"For example, virtual reality systems may display stereoscopic scenes to users in order to create an illusion of depth," says the patent, "and a computer may adjust the scene content in real-time to provide the illusion of the user moving within the scene."

"When the user views images through a virtual reality system, the user may thus feel as if they are moving within the scenes from a first-person point of view," it continues. "Mixed reality (MR) covers a spectrum from augmented reality (AR) systems that combine computer generated information (referred to as virtual content) with views of the real world to augment, or add virtual content to, a user's view of their real environment..."

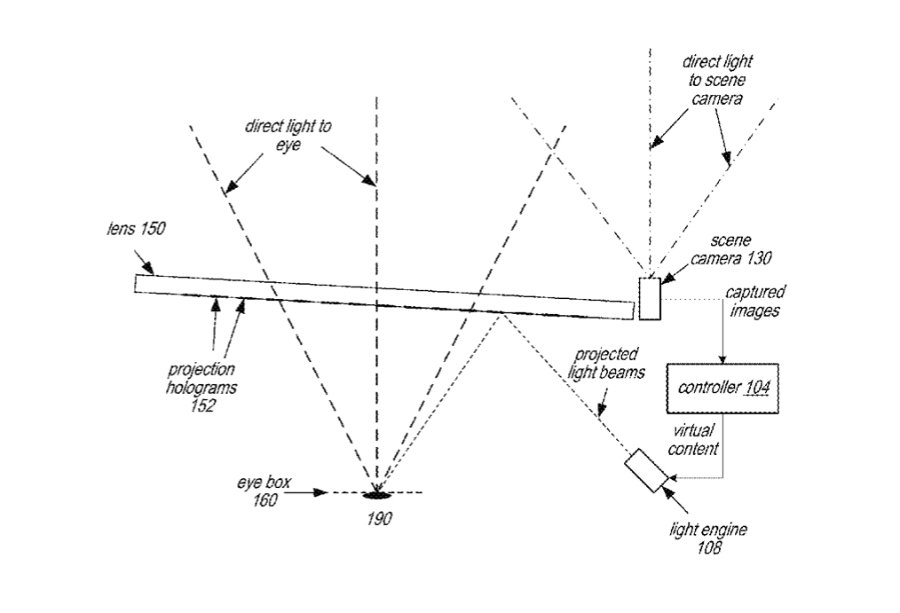

The patent doesn't specify (nor does it need to) how other "Apple Glass" systems will present virtual objects, so it's not clear how this holographic approach would fit in with previous designs. This particular proposal, though, places a camera on the side of the glasses, which Apple refers to as a "scene camera."

"To achieve a more accurate representation of the perspective of the user, the scene camera is located on the side of the MR headset and facing the inside surface of the lens," says Apple. "The lens includes a holographic medium recorded with one or more transmission holograms that diffract a portion of the light from the scene that is directed to the user's eye to the scene camera. Thus, the scene camera captures images of the environment from substantially the same perspective as the user's eye."

The scene camera captures the real world as the user sees it, so that virtual images can then be presented in a way that makes them look as if they are present in that world. As the user moves, the captured view changes with them, letting the system update the virtual content to better mimic a real 3D environment.

"The images may, for example, be analyzed to locate edges and objects in the scene," continues the patent. "In some embodiments, the images may also be analyzed to determine depth information for the scene. The information obtained from the analysis may, for example, be used to place virtual content in appropriate locations in the mixed view of reality provided by the direct retinal projector system."

Most of the patent is concerned with solving potential problems caused by having projectors, or other devices, mounted at the side of the glasses.

"To stop unwanted direct light from reaching the scene camera," says Apple for instance, "a band-pass filter, tuned to the transmission hologram wavelength, may be used to block all direct view wavelengths other than the transmission hologram operating wavelength."

This is not the first patent or patent application from Apple regarding 3D space. It has previously been granted a patent concerning sourcing 3D mapping data from an iPhone.

William Gallagher

Andrew Orr

Malcolm Owen

2 Comments

Uses fixed holograms, yes. Uses dynamic holography or is holographic, no. When Microsoft introduced its HoloLens product, the press totally screwed up that distinction.

Virtual 3D objects and scenes can be generated to a viewer by means of a stereographic method (a slightly different image to each eye, like how 3D movies work)...so the images themselves are not likely true holograms. My guess is that it is a Holographic Optical Element (HOE) that is the "projection hologram" used to direct the images to each eye.

Combine this with eye-tracking and dynamically focussing (or even de-focussing) both the actual scene and the virtual objects depending on where the user is looking, as well as incorporating actual 3D scene detection, I can imagine a very convincing integration of real and virtual imagery would be possible.