At Google I/O, Google introduced LaMDA, a model for generating dynamic responses to queries that could give Google Assistant better conversational capabilities.

Revealed at Google I/O, LaMDA is a language model for dialogue applications. Citing the improvements in search and Google Assistant results, Google CEO Sundar Pichai mentioned that sometimes the results are not as natural as they could be.

"Sensible responses keep conversations going," said Pichai, before a demonstration of LaMDA itself.

The idea is a continuation of Google's BERT system for understanding the context of terms in a search query. By learning concepts about a subject and about language itself, LaMDA can provide more natural-sounding responses that flow into a conversation.

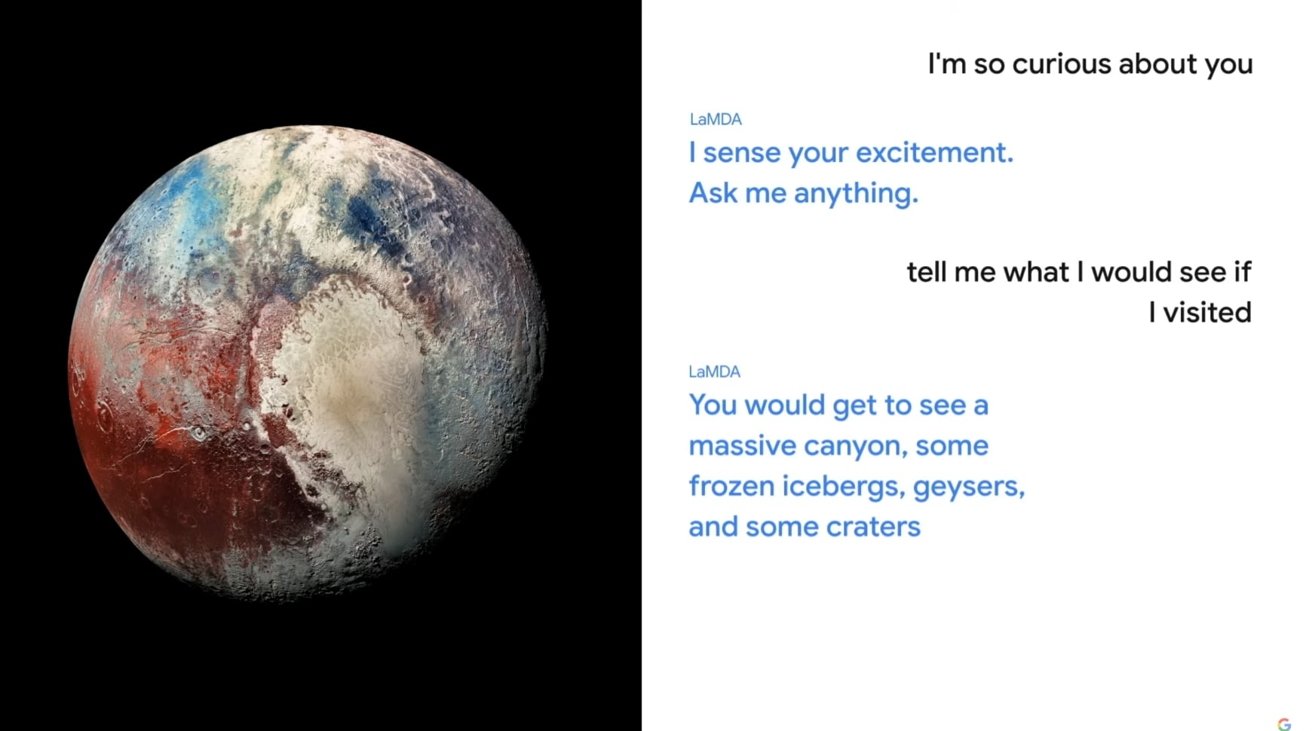

In the demonstrations, LaMDA held conversations as Pluto and as a paper airplane. Each LaMDA model held its own part of a conversation with a user. An innocuous question such as "Tell me what I would see if I visited" resulted in a description of the landscape and icebergs on Pluto's surface.

Google is still developing LaMDA, and it is "still early research," Pichai admits, as it currently works only on text. Google intends to make it multi-modal, so that it can understand images and video alongside text.

Eventually, this could lead to Google Assistant becoming more conversational for its users. The research may also drive Apple towards further advancements with Siri, bringing its virtual assistant up to speed with the search company's version and making it more chatty for users.


Malcolm Owen

3 Comments

Apple has had this tech for about 5 years but has been dragging its feet, allowing Siri wannabes to take the market. This is sad.

This is Siri's 10th anniversary year. Perhaps Apple has something up its sleeve for WWDC that increases Siri's functionality for its 10th birthday. Hey, a gal can dream.

I just wish that Apple was more like Google and Amazon in trying to improve its voice assistant. I get trying to maintain privacy, but there has to be a middle ground that would still allow Apple to improve its backend models. The thing I like about Google search is that it remembers what I've done in the past. It knows where I've gone via Google Maps, and which restaurants I like to go to, so it gives me a heads up on new restaurants that are opening that I might like. It knows what websites I've bought stuff from, so it knows new things to recommend to me. If Apple was willing to use the data it had about its users in this way, do you have any idea how much more useful their products would be? And to be able to have a more natural conversation with Siri? I mean, there's a reason I don't remember the last time I even wanted to use Siri for anything more than "set a timer" or "when's sunset?" Siri could be SO much better.