Apple SVP of software engineering Craig Federighi and VP of product marketing Greg Joswiak have continued their tradition of sitting down with John Gruber in 2021, discussing what Apple launched during WWDC 2021, privacy, on-device Siri processing, and the creation of Universal Control.

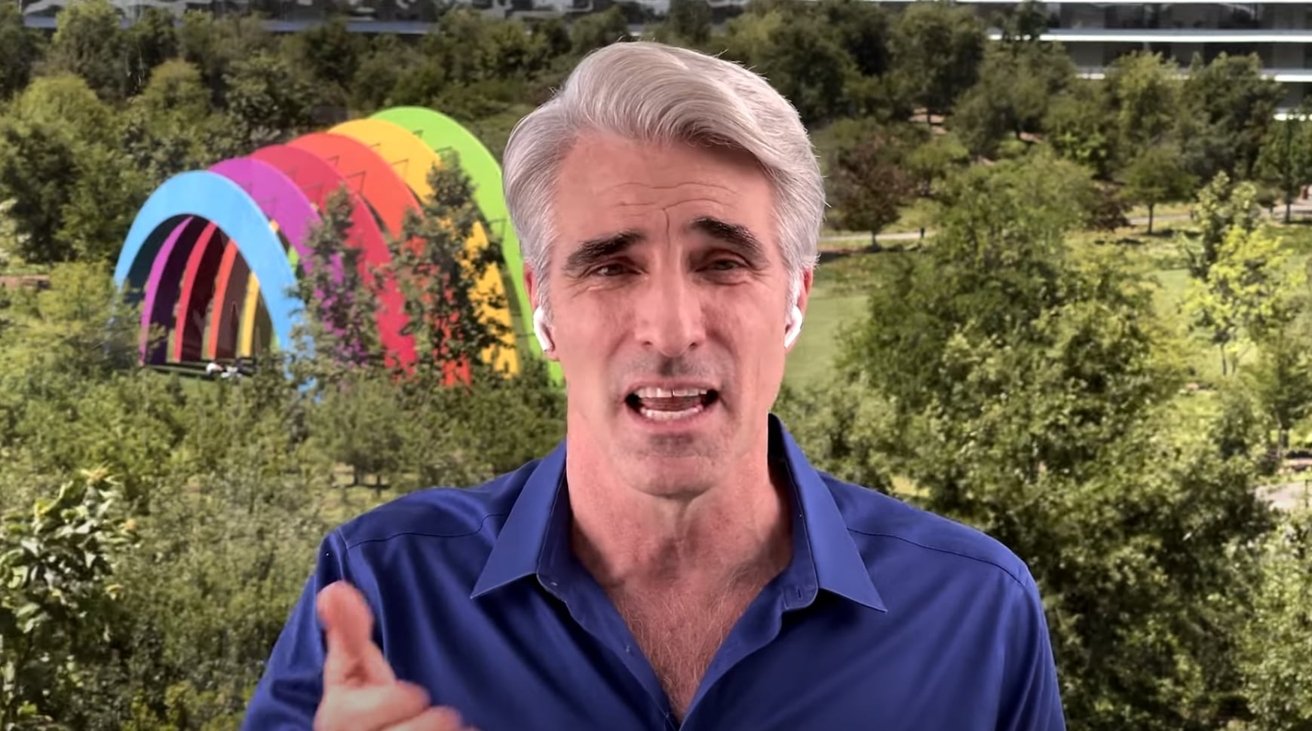

In a special edition of Daring Fireball's "The Talk Show," the conversation takes place remotely, with Federighi and Joswiak located at Apple Park. While other interviews are quite tightly controlled, this interview with the executives is considerably looser in structure and much longer, allowing the pair to enthuse about the announced features and their development.

Uploaded to YouTube on Friday, the hour-and-a-half show has the two executives covering many different topics brought up during the keynote and throughout WWDC week.

Privacy

The first subject brought up was privacy, which is a major focal point for Apple's work. After bringing up how Apple has spent decades putting design first and being proven right with high sales to consumers while the rest of the industry didn't have the same focus, Gruber asks if the privacy push by Apple ahead of the rest of the industry is the same sort of thing.

Reiterating a position the company has stated before, Joswiak said, "[Apple] has been saying for quite some time that privacy is a fundamental human right, and we have been building it into our products, we have been designing it into our architectures long before it was popular, because it was the right thing to do."

He continued, "This wasn't something that we just, you know, scotch tape on the side. This is deeply woven into our systems and our architectures, and now that privacy happens to be more important to customers, well that's great. I mean, we encourage that. Customers should care about their privacy, but this is something for us that's much bigger than trying to be popular."

On-device Siri

On-device Siri processing is more than a privacy feature. Handling the digital assistant's requests locally also brings speed and other benefits compared with sending them to a server.

"The speed is awesome," starts Federighi. "We're all living on it, obviously, and it is remarkable. Yeah, it's, not only do you not have to care if you're on the network for those kinds of requests, but the responsiveness is a huge win."

"And that's generally true with on-device machine learning," Federighi adds. "You don't want to deal with is my network flaky, how's my connection right now, you want it to work wherever. These are mobile devices, right, you want them to work well wherever you are, and getting Siri to be local in this way is fantastic for the user experience."

Federighi points to the progress made over the last two years, saying "if you listen to Siri's neural text to speech like this incredibly high quality voice. It felt like a moon shot a couple years ago to do that locally. To not have to send what you wanted to say to a server to synthesize it to that quality level."

Moving to using on-device processing to deal with the front-end of a request has "been just an incredible leap forward in the last year. So it's really exciting where we're going with on-device learning for sure."

Universal Control

In discussions on iPadOS 15, the standout feature of Universal Control was raised, which allows a Mac's keyboard and mouse to control a nearby iPad and to handle the movement of files between devices as seamlessly as possible.

On the subject of the length of its development, Federighi starts by offering, "I do think it's one of those things where, for some extent we have been working on it for 10 years, because the foundation that we've been building, that it's built on, all those pieces, a lot of threads got pulled together to then finally realize that experience."

Federighi continued, "With all the Continuity experiences, the fact that our devices generally are signed into your account, know about each other, that they're, via Bluetooth low energy can talk to each other. The fact with peer-to-peer Wi-Fi we can quickly establish a high-speed Wi-Fi link between your devices. You know, this is something we did for AirDrop years ago, and had that as a foundation."

"But then you need the iPad, of course, to actually support a trackpad in order to make this meaningful, right, so that set the stage. Once we had all of those pieces, we knew that we wanted it to be a zero setup experience, right, that if you're going to do something like this, if there was this friction where 'let me bring up System Preferences and lay out my stuff,' you know, then you're probably not going to do it a lot of the time."

Apple evaluated "lots of crazy ideas" about using ultrasonics to locate where devices were, but it was an "intuitive leap" according to the SVP to realize the user already knows where the devices are relative to each other. "You're going to move the cursor towards the iPad. You're telling us something when you do that. Can we take advantage of that and go 'Okay' and then give you this indication that says 'we think you might be trying to do this, are you?' and take advantage of the visual language we built in iPad to reflect that and to make a really natural way to say, like, pull it through."

To Federighi, this results in something that seems like "the most natural way to perform the operation in the first place."

Malcolm Owen

2 Comments

Siri on device. What a concept. Just like how it used to work when Apple bought that tech. And honestly it was more responsive back on my iPhone 4 too… never got “working on that” and other bullshit back then. Used to also be much better at finding music on my device to play it. This whole do it on the network, then find a slightly different track than the one I have on my phone in the cloud and expect to stream it instead is not nearly as nice.