Future iPhone cameras that capture the direction light is traveling may provide greater 3D detail that will improve Apple AR walk-through experiences.

Current iPhone cameras capture the intensity of light in a scene, but future ones may do more. Using light field photography, the camera system could capture the direction light is traveling, plus record the positions of different objects in view.

That's the idea at the heart of "Panoramic light field capture, processing, and display," a newly granted patent.

It's concerned with how a device such as an iPhone could be used to capture images and build data for an AR experience as a user walks around freely.

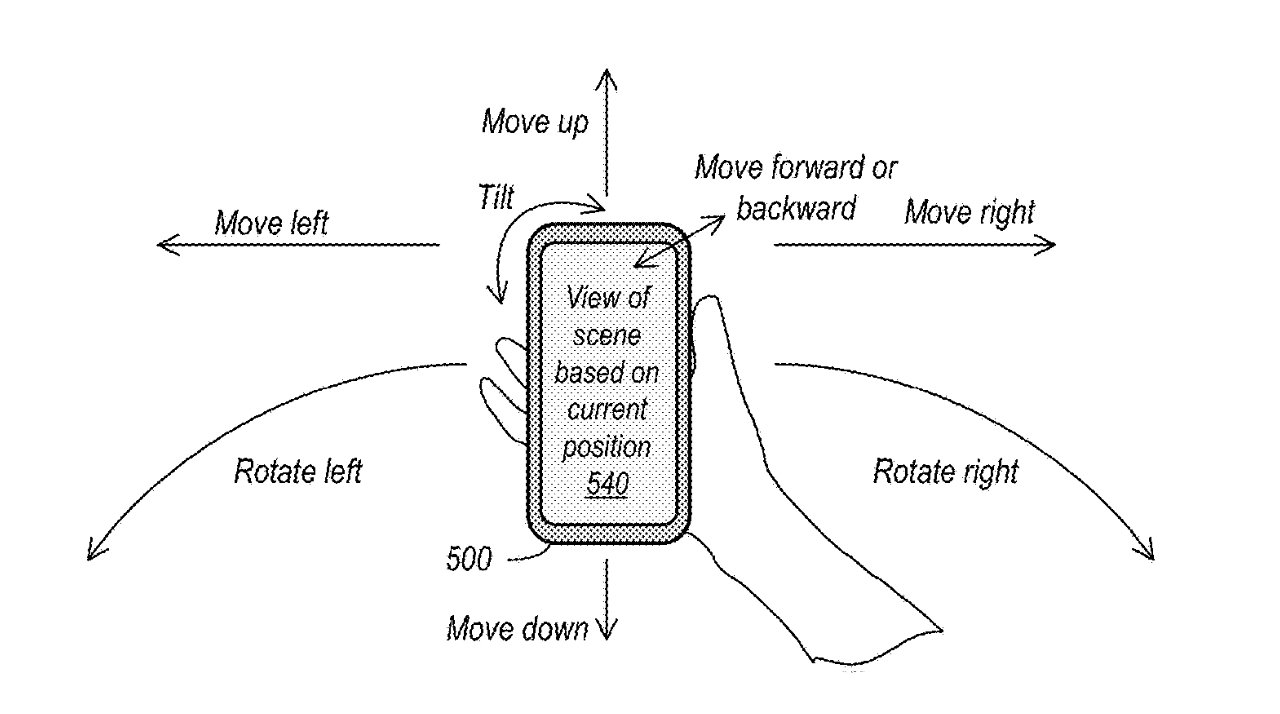

"[The patent details a] light field panorama system in which a user holding a mobile device performs a gesture to capture images of a scene from different positions," says the patent. "Additional information, for example position and orientation information, may also be captured."

"The images and information may be processed to determine metadata including the relative positions of the images and depth information for the images," it continues. "The light field panorama may be processed by a rendering engine to render different 3D views of the scene to allow a viewer to explore the scene from different positions and angles with six degrees of freedom."

You may have seen 3D images where your position is fixed: however much you can turn around or look up and down, you cannot step forward or walk around objects. For AR to be more immersive, Apple needs the ability to record more information, and then display it, too.

"Using a rendering and viewing system such as a mobile device or head-mounted display," says Apple, "the viewer may see behind or over objects in the scene, zoom in or out on the scene, or view different parts of the scene."

Edited detail from the patent showing how gestures can capture information that is then later relayed for display as AR

The intended result is that the "captured wide angle content of a scene" is "parallax aware." So "when the image is rendered in virtual reality, objects in the scene will move properly according to their position in the world and the viewer's relative position to them."

"In addition," continues Apple, "the image content appears photographically realistic compared to renderings of computer generated content that are typically viewed in virtual reality systems."

This patent is credited to six inventors, including Ricardo J. Motta and Gary L. Vondran, Jr. Their previous work includes a granted patent regarding light field capture for AR.

As is normal for patents, this one concentrates on the key technologies and doesn't focus much on either use cases or potential issues. In this case, a key issue is that the device capturing the 3D detail needs to be powerful, perhaps more powerful than a phone.

Capturing images to a base station

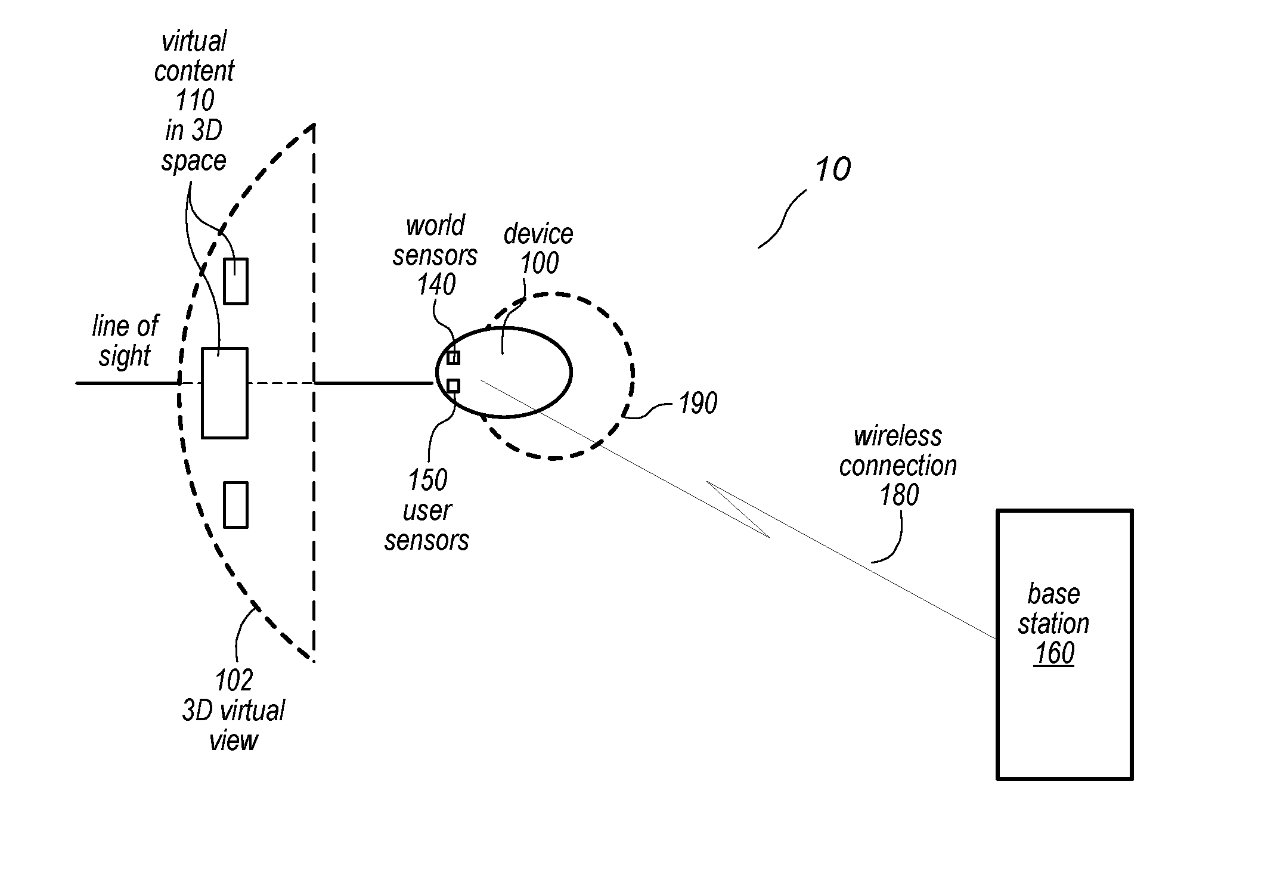

Consequently, there is a separate patent, also newly granted, that addresses exactly this. "Video pipeline" is concerned with how a device like an iPhone or HMD could capture image data that is then wirelessly sent to another device, such as a base station, for processing.

"The base station may provide more computing power than conventional stand-alone systems," says Apple, "and the wireless connection does not tether the device to the base station as in conventional tethered systems."

It's about striking a compromise between portability and processing power.

"Stand-alone systems allow users freedom of movement," continues Apple, "however, because of restraints including size, weight, batteries, and heat, stand-alone devices are generally limited in terms of computing power and thus limited in the quality of content that can be rendered."

"The base stations of tethered systems may provide more computing power and thus higher quality rendering than stand-alone devices," says Apple, "however, the physical cable tethers the device to the base station and thus constrains the movements of the user."

So Apple's proposal is for a wireless system. However, the patent is concerned with more than just receiving data from capturing devices; it's also about displaying the results back on them.

"The [capture] device may include sensors that collect information about the user's environment and about the user," says Apple. "The information collected by the sensors may be transmitted to the base station via the wireless connection."

"[Then the] base station renders frames or slices based at least in part on the sensor information received from the device, encodes the frames or slices, and transmits the compressed frames or slices to the device for decoding and display," continues Apple. "The system may implement methods and apparatus to maintain a target frame rate through the wireless link and to minimize latency in frame rendering, transmittal, and display."

This patent is credited to 13 inventors. They include Avi Bar-Zeev, who has worked on spatial audio navigation, and Geoffrey Stahl, who has previously worked on AR/VR.



William Gallagher

