Apple's VR or AR headset could move an avatar of the user based on monitoring the user's body movements, while its battery life could be lengthened by some clever data transmission techniques.

Apple is believed to be developing a virtual reality or augmented reality headset, as well as AR smart glasses known as "Apple Glass." As it enters the slowly growing field of head-mounted human-computer interaction, Apple is also trying to engineer solutions to a variety of problems so that its products stand out from other head-mounted systems.

In a pair of patents granted by the U.S. Patent and Trademark Office on Tuesday, Apple proposes ways to improve its headset, covering both how it interacts with the user and how it communicates with a host device.

Body monitoring

The first patent, "Generating body pose information," covers the system's ability to track a user's movements and then use that data to perform other related actions.

Apple reckons that some immersive computer-generated reality experiences require knowing the body pose of the user. In some experiences, the VR or AR application may make changes to what it presents, based on the user's pose or movements, such as a guard in a game responding differently to a user's stance.

More explicitly, Apple suggests that knowing the body pose could be used to control an avatar of the user. This could be useful in apps such as the popular online social experience VRChat, which can use motion data from other hardware to drive the movements of a user's avatar.

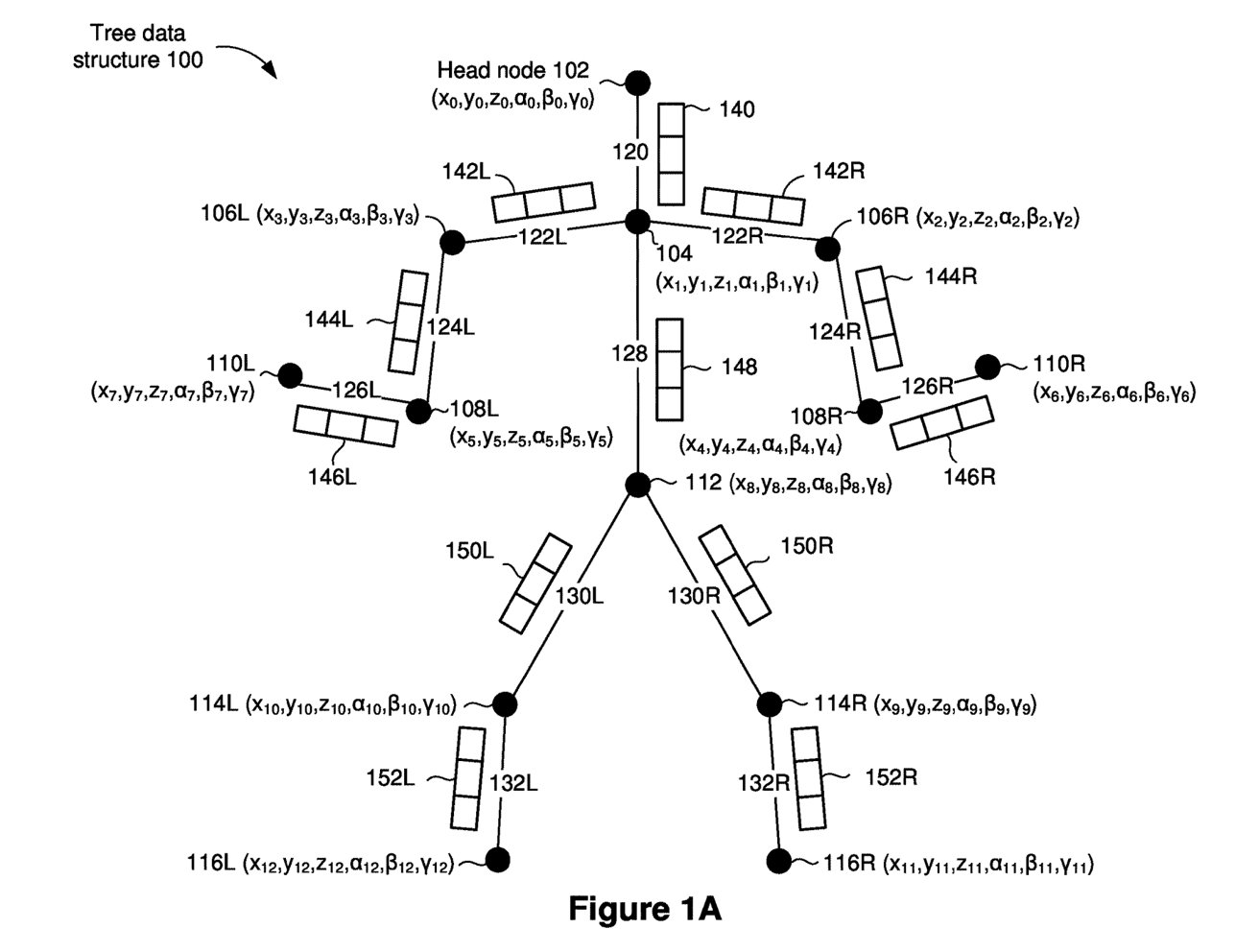

Multiple neural networks can monitor individual joints on a user, working together to create a full body model.

According to the patent, the pose could be learned using a variety of cameras and neural networks, with multiple networks working together to model individual body joints. Each network works independently, but their outputs are combined to create the whole-body model.

Training would take place on more than one level: the networks would learn not only how to interpret data from the cameras, but also how to interact with each other. This includes "determining respective topologies of the branched plurality of neural network systems."

As for how much information the system can gather about a person's pose, one claim states that the monitored joints include the "collar region, shoulder joints, elbow joints, wrist joints, pelvic joint, knee joints, ankle joints, and knuckles." Edges, the connections between joints, are also determined by the system.

The list covers all of the essentials needed to create a model of a user's body, though not at a high level of detail. For example, while "knuckles" are mentioned, most likely as a key element for gestures, toes or foot movements seemingly aren't covered to the same degree.
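To make the joints-and-edges description more concrete, here is a minimal Python sketch of how estimates from several independent branches might be merged into a single skeleton. The joint names follow the claim's list, but splitting them into left and right pairs, the edge list, the `merge_branch_outputs` and `skeleton_edges` helpers, and the "keep the most confident estimate" rule are all hypothetical choices for illustration, not Apple's method.

```python
from typing import Dict, List, Tuple

Position = Tuple[float, float, float]  # x, y, z (units are an assumption)
Estimate = Tuple[Position, float]      # (position, confidence)

# Joint names follow the patent claim's list; the left/right split is assumed.
JOINTS: List[str] = [
    "collar", "pelvis",
    "l_shoulder", "r_shoulder", "l_elbow", "r_elbow",
    "l_wrist", "r_wrist", "l_knuckles", "r_knuckles",
    "l_knee", "r_knee", "l_ankle", "r_ankle",
]

# Hypothetical edges ("bones") connecting pairs of joints.
EDGES: List[Tuple[str, str]] = [
    ("collar", "l_shoulder"), ("collar", "r_shoulder"), ("collar", "pelvis"),
    ("l_shoulder", "l_elbow"), ("l_elbow", "l_wrist"), ("l_wrist", "l_knuckles"),
    ("r_shoulder", "r_elbow"), ("r_elbow", "r_wrist"), ("r_wrist", "r_knuckles"),
    ("pelvis", "l_knee"), ("l_knee", "l_ankle"),
    ("pelvis", "r_knee"), ("r_knee", "r_ankle"),
]


def merge_branch_outputs(branch_outputs: List[Dict[str, Estimate]]) -> Dict[str, Position]:
    """Combine per-branch joint estimates into one whole-body pose.

    Where two branches report the same joint, keep the more confident
    estimate (a hypothetical merge rule, not the patent's).
    """
    best: Dict[str, Estimate] = {}
    for output in branch_outputs:
        for joint, (pos, conf) in output.items():
            if joint not in best or conf > best[joint][1]:
                best[joint] = (pos, conf)
    return {joint: pos for joint, (pos, _) in best.items()}


def skeleton_edges(pose: Dict[str, Position]) -> List[Tuple[str, str]]:
    """Return only the edges whose two endpoint joints were both detected."""
    return [(a, b) for a, b in EDGES if a in pose and b in pose]


if __name__ == "__main__":
    # Two hypothetical branches: one tracking the left arm, one the torso.
    arm = {"l_shoulder": ((0.20, 1.40, 0.0), 0.9), "l_elbow": ((0.40, 1.10, 0.0), 0.8)}
    torso = {"collar": ((0.0, 1.50, 0.0), 0.95), "l_shoulder": ((0.21, 1.41, 0.0), 0.6)}
    pose = merge_branch_outputs([arm, torso])
    print(pose["l_shoulder"])  # the arm branch wins: it reported higher confidence
    print(skeleton_edges(pose))
```

The sketch treats the body as a small graph, which is one common way to represent a skeleton; a joint missing from every branch simply drops its edges rather than breaking the model.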

The patent lists its inventors as Andreas N. Bigontina, Behrooz Mahasseni, Gutemberg B. Guerra Filho, Saumil B. Patel, and Stefan Auer. It was originally filed on September 23, 2019.

Wireless communications

The second VR-related patent, "Adaptive wireless transmission schemes," takes aim at handling communications between a headset and a host computer.

All-in-one systems like the Oculus Quest exist, but the onboard hardware adds weight to the headset. Tethered setups can reduce that weight, but the cable itself can be a problem. One answer is a wireless link to a host computer, which reduces the weight and eliminates the cable.

However, even wireless systems have drawbacks. For example, transmitting data consumes power, and wireless links typically offer less bandwidth than a cable. There are also the inherent issues of interference and other disruptions.

Apple's patent proposes a wireless system that cuts down the amount of video data transmitted at once. Instead of sending two full streams simultaneously, one for each eye, Apple wants to halve the bandwidth being used.

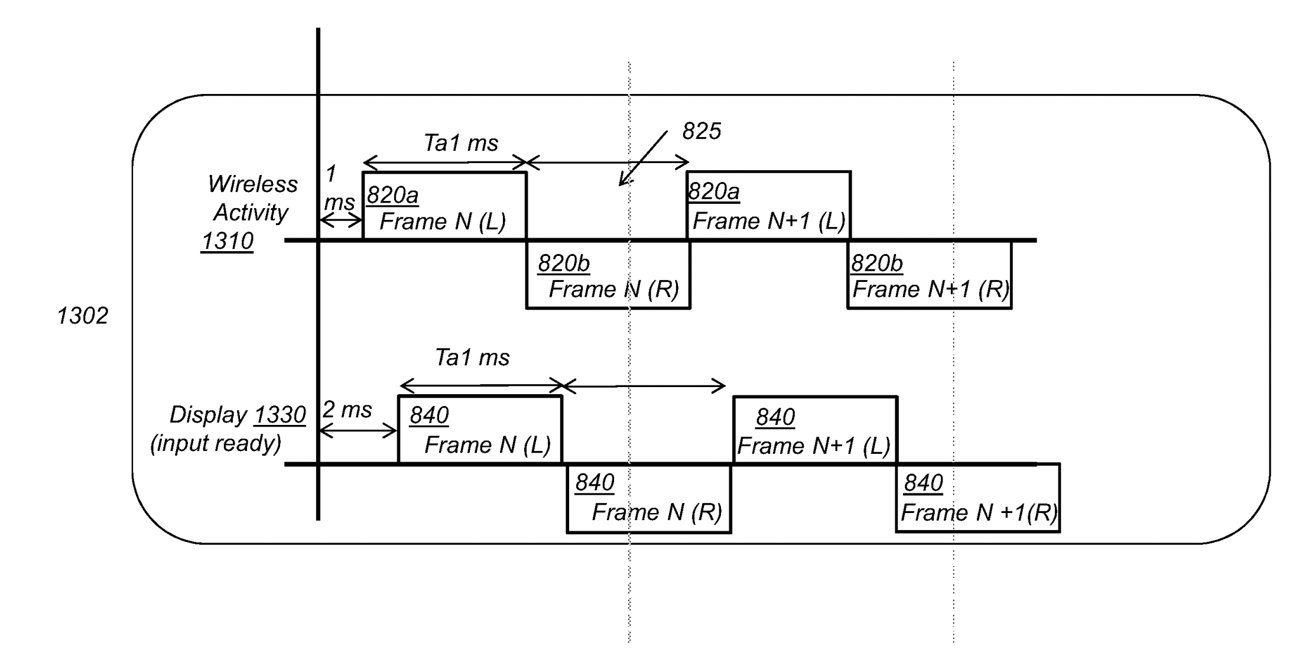

It plans to do this by using interleaved frame transmissions for the left eye data and the right eye data. Once frame data for both eyes have been received, the system displays them to the user.
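The interleaving idea can be sketched in a few lines of Python. This assumes each eye's frame is sent as a separate unit tagged with a shared frame index, so only one eye's frame is in flight at a time; the `EyeFrame`, `interleave`, and `StereoReceiver` names are hypothetical, and real frames would be compressed video rather than short byte strings.

```python
from dataclasses import dataclass
from typing import Dict, Iterator, List, Optional, Tuple


@dataclass
class EyeFrame:
    index: int     # frame number shared by a left/right pair
    eye: str       # "L" or "R"
    pixels: bytes  # stand-in for encoded image data


def interleave(left: List[bytes], right: List[bytes]) -> Iterator[EyeFrame]:
    """Sender: transmit one eye's frame at a time, alternating L, R, L, R..."""
    for i, (l_px, r_px) in enumerate(zip(left, right)):
        yield EyeFrame(i, "L", l_px)
        yield EyeFrame(i, "R", r_px)


class StereoReceiver:
    """Receiver: buffer eye frames and release a pair once both eyes arrive."""

    def __init__(self) -> None:
        self.pending: Dict[int, Dict[str, bytes]] = {}

    def receive(self, frame: EyeFrame) -> Optional[Tuple[bytes, bytes]]:
        slot = self.pending.setdefault(frame.index, {})
        slot[frame.eye] = frame.pixels
        if "L" in slot and "R" in slot:
            del self.pending[frame.index]
            return slot["L"], slot["R"]  # both eyes present: ready to display
        return None  # still waiting for the other eye


if __name__ == "__main__":
    rx = StereoReceiver()
    for frame in interleave([b"L0", b"L1"], [b"R0", b"R1"]):
        pair = rx.receive(frame)
        if pair is not None:
            print("display", pair)
```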

To further reduce the amount of data transmitted, each frame doesn't have to be complete. Apple suggests sending partial frames, potentially covering only elements that need to be updated urgently, while reusing parts of earlier frames for sections that haven't changed.
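A minimal sketch of partial frame updates, assuming the screen is divided into a grid of tiles and only tiles that changed are sent. The `partial_update` and `apply_update` helpers and the tile layout are hypothetical, and a real system would decide what "changed" means far more cleverly than comparing bytes.

```python
from typing import Dict, Tuple

Tile = Tuple[int, int]     # (row, column) of a screen region
Frame = Dict[Tile, bytes]  # tile -> encoded pixels (stand-in)


def partial_update(prev: Frame, curr: Frame) -> Frame:
    """Sender: include only the tiles that differ from the previous frame."""
    return {tile: px for tile, px in curr.items() if prev.get(tile) != px}


def apply_update(prev: Frame, update: Frame) -> Frame:
    """Receiver: reuse unchanged tiles from the earlier frame, overwrite the rest."""
    frame = dict(prev)  # copy so the previous frame stays intact
    frame.update(update)
    return frame


if __name__ == "__main__":
    prev = {(0, 0): b"sky", (0, 1): b"sky", (1, 0): b"hand", (1, 1): b"floor"}
    curr = {(0, 0): b"sky", (0, 1): b"sky", (1, 0): b"hand-moved", (1, 1): b"floor"}
    update = partial_update(prev, curr)
    print(update)                              # only the changed tile is sent
    print(apply_update(prev, update) == curr)  # receiver rebuilds the full frame
```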

There is also mention of gaze tracking, which can also play into the system. By knowing where the user is looking, the system can prioritize updates for those areas, while foveated display techniques could reduce the detail needed for parts of the screen that aren't being looked at, again saving data.
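Gaze-driven prioritization and foveation can be sketched the same way, again treating the screen as a grid of tiles. The `prioritize` and `detail_scale` helpers, the two radii, and the 1.0 / 0.5 / 0.25 detail levels are illustrative assumptions, not values from the patent.

```python
import math
from typing import List, Tuple

Tile = Tuple[int, int]  # (row, column) of a screen region


def prioritize(tiles: List[Tile], gaze: Tuple[float, float]) -> List[Tile]:
    """Order tiles so the ones nearest the gaze point are updated first."""
    return sorted(tiles, key=lambda t: math.dist(t, gaze))


def detail_scale(tile: Tile, gaze: Tuple[float, float],
                 fovea_radius: float = 1.5, mid_radius: float = 3.0) -> float:
    """Foveation: full detail near the gaze point, less detail further out.

    The radii and scale factors are hypothetical values for illustration.
    """
    d = math.dist(tile, gaze)
    if d <= fovea_radius:
        return 1.0
    if d <= mid_radius:
        return 0.5
    return 0.25


if __name__ == "__main__":
    tiles = [(r, c) for r in range(6) for c in range(6)]
    gaze = (1.0, 1.0)
    print(prioritize(tiles, gaze)[:3])
    print([detail_scale(t, gaze) for t in [(1, 1), (3, 3), (5, 5)]])
```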

Such a system could potentially offer extremely high frame rates of "at least 100 frames per second using a rolling shutter," though this figure isn't quite what it seems. One claim has the frames received at "less than 60 frames per second," but since the frames are displayed to alternating eyes, the display can update at more than 100 frames per second.
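Taking both figures at face value, and assuming the sub-60 rate applies to each eye's stream while the display alternates between eyes, the arithmetic works out as follows (the 55 fps value is purely illustrative):

```latex
f_{\text{display}} = f_{\text{left}} + f_{\text{right}} = 2\,f_{\text{eye}}

2\,f_{\text{eye}} > 100 \quad\text{and}\quad f_{\text{eye}} < 60
\;\Longrightarrow\; 50 < f_{\text{eye}} < 60

\text{e.g. } f_{\text{eye}} = 55 \;\Rightarrow\; f_{\text{display}} = 2 \times 55 = 110 \text{ fps}
```

Under that reading, neither eye is refreshed more than 60 times per second; the "over 100" figure counts left-eye and right-eye updates together.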

Other elements include monitoring the wireless links, using multiple connections to maintain high bandwidth, and managing the timing of data transmissions.

The patent was originally filed on May 14, 2019, and was invented by Aleksandr M. Movshovich, Arthur Y. Zhang, Hao Pan, Holly E. Gerhard, Jim C. Chou, Moinul H. Khan, Paul V. Johnson, Sorin C. Cismas, Sreeraman Anantharaman, and William W. Sprague.

Apple files numerous patent applications on a weekly basis, but while the existence of patents indicates areas of interest for its research and development teams, it doesn't guarantee they will appear in a future product or service.

Previous patent filings

Apple has numerous patent filings in the field of VR and AR, and there is some crossover with the latest two patents.

For example, it has looked at foveated displays in the past, including in a 2019 patent where a system could offer high headset refresh rates. In 2020, it explored a similar system that used gaze detection to decide which sections of a screen to devote resources to.

Gesture recognition has surfaced a few times before, with one patent from 2018 covering how cameras below a headset could monitor a user's hands to manage 3D documents. In 2015, Apple suggested machine vision could recognize human hand gestures at a distance.


Malcolm Owen
