A pair of Princeton researchers claim that Apple's CSAM detection system is dangerous because they explored and warned against similar technology, but the two systems are far from identical.

Jonathan Mayer and Anunay Kulshrestha recently penned an op-ed for The Washington Post detailing some of their concerns about Apple's CSAM detection system. The security researchers wrote that they had built a similar system two years prior, only to warn against the technology after realizing its security and privacy pitfalls.

For example, Mayer and Kulshrestha claim that the service could be easily repurposed for surveillance by authoritarian governments. They also suggest that the system could suffer from false positives, and could be vulnerable to bad actors subjecting innocent users to false flags.

However, the system that the two security researchers developed doesn't contain the same privacy or security safeguards that Apple baked into its own CSAM detection technology.

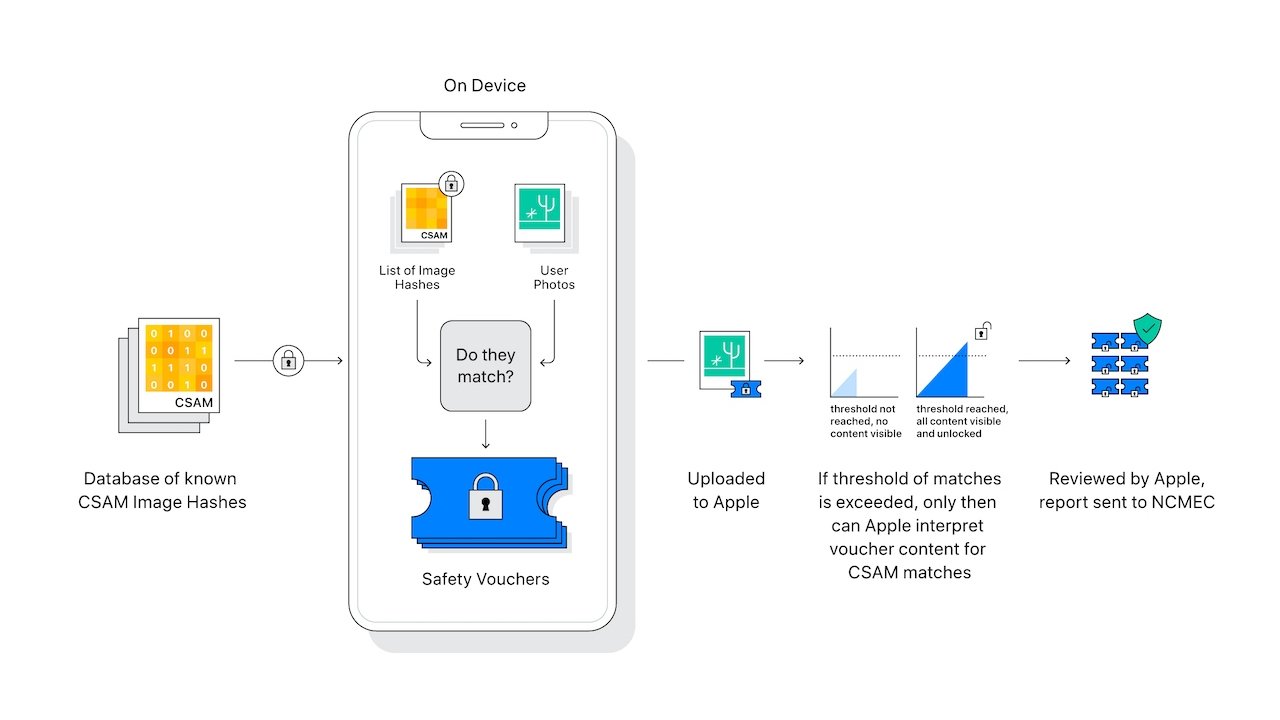

Additionally, concerns that a government could easily swap in a database full of dissident speech don't pan out. Apple's database contains hashes sourced from at least two child safety organizations in completely different jurisdictions. This provides protection against a single government corrupting a child safety organization.
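To illustrate why sourcing from two organizations helps, here is a minimal sketch. It assumes the shipped database keeps only hashes that both organizations supplied independently; the function name, the organization lists, and the hash strings are hypothetical placeholders, not Apple's actual code or data.

```python
def build_shipped_database(org_a_hashes, org_b_hashes):
    """Keep only hashes that BOTH organizations supplied (assumed behavior)."""
    return set(org_a_hashes) & set(org_b_hashes)


# Hypothetical hash lists from two organizations in different jurisdictions.
org_a = {"hash-1", "hash-2", "hash-3", "planted-non-csam-hash"}
org_b = {"hash-1", "hash-2", "hash-3"}

shipped = build_shipped_database(org_a, org_b)

# The entry planted in only one organization's list does not survive.
print("planted-non-csam-hash" in shipped)
```

Under that assumption, an entry slipped into one organization's list never reaches devices unless the second organization's list contains it too.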

Such a scheme would also require Apple's human auditors to be complicit. Apple says flagged accounts are checked for false positives by human auditors. If Apple's team found accounts flagged for non-CSAM material, Apple says it would suspect something was amiss and would stop sourcing the database from those organizations.

Apple also says false positives are extremely rare. The company puts the chance of falsely flagging a given account at one in a trillion per year. Even if an account is falsely flagged, the presence of CSAM must be confirmed before any report is made to child safety organizations.

There are also protections against a bad actor sending CSAM to an innocent person. The Apple system only detects collections of CSAM in iCloud. Unless a user saves CSAM to iCloud themselves, or their Apple account is hacked by a sophisticated threat actor, there's little chance of such a scam working out.

Users will also be able to verify that the database of known CSAM hashes stored locally on their devices matches the one maintained by Apple. Nor can the database be targeted at particular users: it applies to all users in a specific country.

Apple's system also relies on client-side scanning and local on-device intelligence. There's a threshold, too: an account needs to pass a set number of CSAM matches to get flagged at all.
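The threshold idea can be sketched in a few lines. This is a simplified illustration only: the function name, the hash strings, and the threshold value of 3 are hypothetical, and the sketch ignores the cryptography Apple's system uses, counting plain matches instead.

```python
def account_is_flagged(photo_hashes, known_hashes, threshold):
    """Flag an account only once its match count reaches the threshold."""
    matches = sum(1 for h in photo_hashes if h in known_hashes)
    return matches >= threshold


# Hypothetical database and an illustrative threshold (not Apple's real value).
known = {"hash-1", "hash-2", "hash-3", "hash-4"}
ILLUSTRATIVE_THRESHOLD = 3

# One stray match stays below the threshold, so nothing is flagged.
single_match = account_is_flagged(
    ["hash-1", "vacation", "cat"], known, ILLUSTRATIVE_THRESHOLD
)

# A collection of matches reaches the threshold and is flagged.
collection = account_is_flagged(
    ["hash-1", "hash-2", "hash-3"], known, ILLUSTRATIVE_THRESHOLD
)

print(single_match, collection)
```

In this simplified model, a single matching image on its own never triggers a flag; only an accumulation of matches does.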

In other words, the two security researchers built a system that only vaguely resembles Apple's. Although there might be valid arguments to be made about "mission creep" and privacy, Apple's system was built from the ground up to address at least some of those concerns.

Mike Peterson


46 Comments

LISTEN TO THE EXPERTS.

They have been down this road.

They know what they are talking about.

They abandoned this line of development because they saw what a massively bad idea it was.

It can and will be used by governments to crack down on dissent. It’s not an if but a when. It will produce false positives; it’s not an if but a when. Apple’s privacy safeguards are a fig leaf that will be ripped off by the first government that wants to. Worst of all, it will destroy the reputation Apple has crafted over the last twenty years of being on the individual user’s side when it comes to privacy and security. Once they lose that, in the minds of a huge number of consumers they will be no better than Google.

The silly exculpatory listing of differences in the systems is useless.

Did Apple leave the Russian market when Russia demanded the installation of Russian government-approved apps? Did Apple leave the Russian and Chinese markets when Russia and China demanded that iCloud servers be located in their countries, where the government has physical access? Did Apple leave the Chinese market when it was asked to remove VPN apps from the Chinese App Store? Did Apple comply when Russia demanded that Telegram be removed from the Russian App Store? Did Apple leave the UAE when VoIP apps were outlawed there?

NO, NO, NO, NO, NO, and NO!

And NO will be the answer if these countries require additional databases, direct notification (instead of Apple reviewing the cases), etc.

Once this is baked into the OS, Apple has no leg to stand on when “lawful” requests from governments start coming.