Apple has published a new document that provides more detail about the security and privacy of its new child safety features, including how the system is designed to prevent misuse.

For one, Apple says in the document that the system will be auditable by third parties such as security researchers or nonprofit groups. The company says it will publish a Knowledge Base article containing the root hash of the encrypted CSAM hash database used for iCloud photo scanning.

The Cupertino tech giant says that "users will be able to inspect the root hash of the encrypted database present on their device, and compare it to the expected root hash in the Knowledge Base article." Apple added that the accuracy of the database will be reviewable by security researchers.
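The comparison Apple describes can be illustrated with a minimal sketch. The article does not say how the root hash is computed, so the SHA-256 Merkle tree below is an assumption chosen for illustration, and the names `merkle_root` and `matches_published` are hypothetical rather than part of any Apple API.

```python
import hashlib
import hmac


def merkle_root(entries: list[bytes]) -> str:
    """Return a hex root hash over the entries (assumed SHA-256 Merkle tree)."""
    if not entries:
        raise ValueError("database must contain at least one entry")
    # Hash every entry to form the leaf level.
    level = [hashlib.sha256(e).digest() for e in entries]
    while len(level) > 1:
        # Duplicate the last node when a level has an odd number of nodes.
        if len(level) % 2:
            level.append(level[-1])
        # Hash adjacent pairs to form the next level up.
        level = [
            hashlib.sha256(level[i] + level[i + 1]).digest()
            for i in range(0, len(level), 2)
        ]
    return level[0].hex()


def matches_published(entries: list[bytes], published_root_hex: str) -> bool:
    """Compare the locally computed root with the published one."""
    return hmac.compare_digest(merkle_root(entries), published_root_hex.lower())
```

Under this assumed construction, changing, adding, or removing any single entry produces a different root, which is why one short published value can stand in for the whole database.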

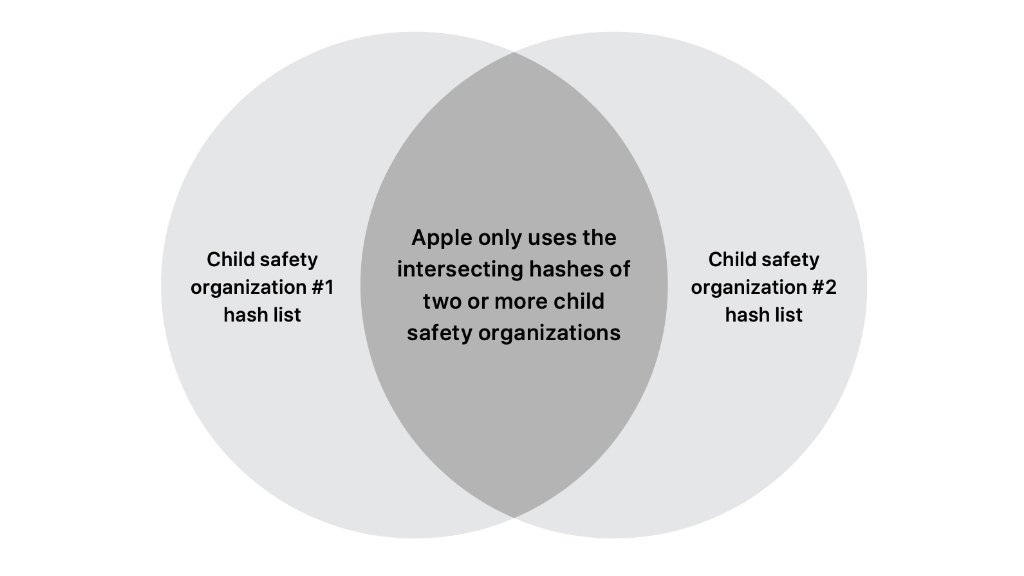

"This approach enables third-party technical audits: an auditor can confirm that for any given root hash of the encrypted CSAM database in the Knowledge Base article or on a device, the database was generated only from an intersection of hashes from participating child safety organizations, with no additions, removals, or changes," Apple wrote.

Additionally, there are mechanisms to prevent abuse by specific child safety organizations or governments. Apple says that it's working with at least two child safety organizations to generate its CSAM hash database. It's also ensuring that the two organizations are not under the jurisdiction of the same government.
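The intersection rule and the jurisdiction requirement can be sketched in a few lines. This is an illustration under stated assumptions, not Apple's implementation: `HashSource` and `build_database` are hypothetical names, hashes are modeled as plain strings, and "not under the same government" is interpreted as requiring at least two distinct jurisdictions among the sources.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HashSource:
    """A participating child safety organization (hypothetical model)."""
    name: str
    jurisdiction: str
    hashes: frozenset[str]


def build_database(sources: list[HashSource]) -> frozenset[str]:
    """Keep only the hashes that every source independently submitted."""
    if len(sources) < 2:
        raise ValueError("at least two organizations are required")
    # Assumption: sources must span at least two distinct jurisdictions.
    if len({s.jurisdiction for s in sources}) < 2:
        raise ValueError("organizations must not share a single jurisdiction")
    return frozenset.intersection(*(s.hashes for s in sources))
```

A hash submitted by only one organization never reaches the final set, so a single organization or government cannot insert an entry on its own.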

If multiple governments and child safety organizations somehow collaborate and include non-CSAM hashes in the database, Apple says there's a protection for that, too. The company's human review team will realize that an account was flagged for something other than CSAM, and will respond accordingly.

Separately on Friday, it was revealed that the threshold for a CSAM collection that would trigger an alert is 30 pieces of abuse material. Apple says the number is flexible, however, and it has committed to that figure only at launch.
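As a rough illustration of the threshold rule, the sketch below flags an account for human review only once its match count reaches the launch figure. The names are hypothetical, and treating the threshold as "30 or more" rather than "more than 30" is an assumption, since the article does not specify which applies.

```python
# Launch value reported in the article; Apple says it may change.
LAUNCH_THRESHOLD = 30


def needs_human_review(match_count: int, threshold: int = LAUNCH_THRESHOLD) -> bool:
    """Return True once an account's matches reach the threshold (assumed inclusive)."""
    if match_count < 0:
        raise ValueError("match_count cannot be negative")
    return match_count >= threshold
```

Keeping the threshold as a parameter reflects Apple's statement that the number is flexible after launch.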

Bracing for an expected onslaught of questions from customers, the company instructed retail personnel to use a recently published FAQ as a resource when discussing the topic, according to an internal memo seen by Bloomberg. The memo also said Apple plans to hire an independent auditor to review the system.

Apple's child safety features have stirred controversy among privacy and security experts, as well as regular users. On Friday, Apple software chief Craig Federighi admitted that the messaging has been "jumbled" and "misunderstood."

Mike Peterson

71 Comments

In this brave new world where facts are irrelevant and perception and spin is all that matters the horse is already out of the barn and nothing Apple says or does will change the perception being spun in the news media and tech blogs.

Shame on Apple. They are not the police!!

User data should only be used to improve that user's experience, not to share with other orgs. No matter what the scenario is.

They don't get it!!! We DO NOT WANT THIS. I don't give a rat's ass on their "white paper".

I am still wondering why Apple developed this feature? I don't see any other reason than a requirement/request from the government.