Following widespread criticism, Apple has announced that it will not be launching its child protection features as planned, and will instead "take additional time" to consult.

In an email sent to AppleInsider and other publications, Apple says that it has taken the decision to postpone its features following the reaction to its original announcement.

"Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material," said Apple in a statement.

"Based on feedback from customers, advocacy groups, researchers and others," it continues, "we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features."

Apple has given no further details of how it will consult to "collect input," or of whom it will be working with.

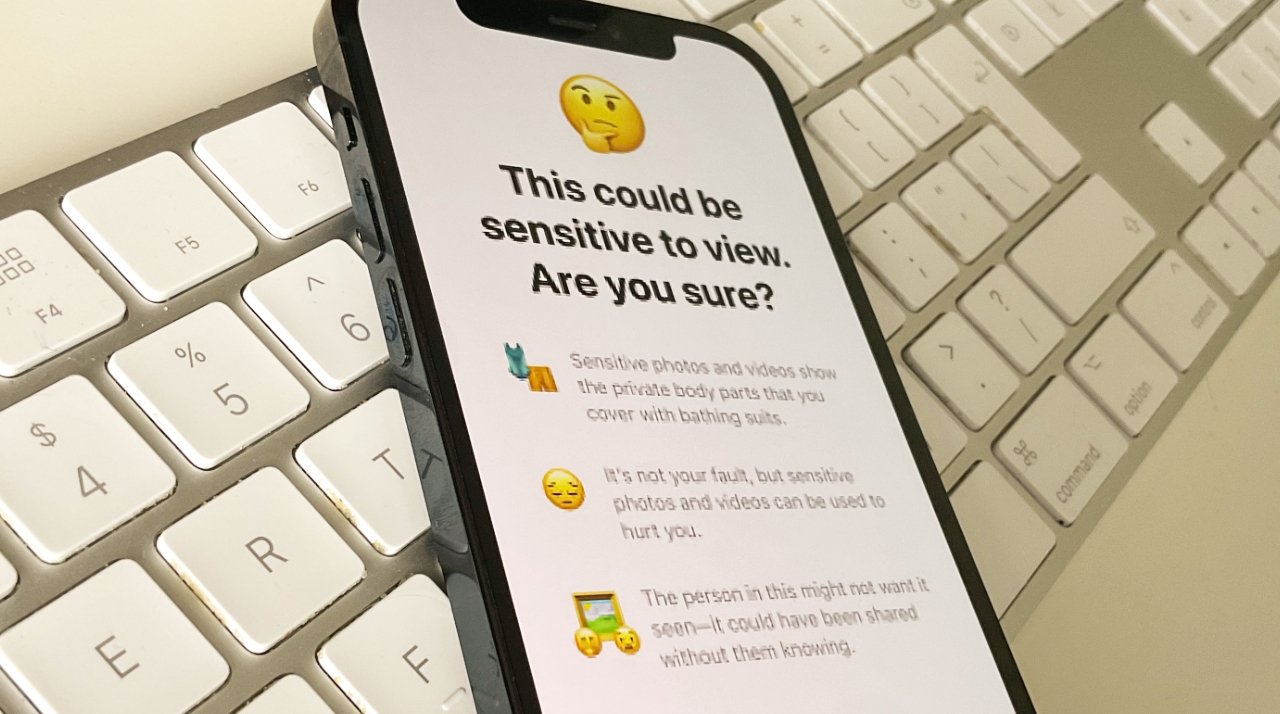

Apple originally announced its CSAM features on August 5, 2021, saying they would debut later in 2021. The features include detecting child sexual abuse images stored in iCloud Photos, and, separately, blocking potentially harmful Messages sent to children.

Industry experts and high-profile names such as Edward Snowden responded with an open letter asking Apple not to implement these features. The objection was that the features could be used for surveillance.

AppleInsider issued an explanatory article covering both what Apple actually planned and why it was being seen as an issue. Apple then published a clarification in the form of a document detailing its intentions and broadly describing how the features are to work.

However, complaints, both informed and not, continued. Apple's Craig Federighi eventually said publicly that Apple had misjudged how it announced the new features.

"We wish that this had come out a little more clearly for everyone because we feel very positive and strongly about what we're doing, and we can see that it's been widely misunderstood," said Federighi.

"I grant you, in hindsight, introducing these two features at the same time was a recipe for this kind of confusion," he continued. "It's really clear a lot of messages got jumbled up pretty badly. I do believe the soundbite that got out early was, 'oh my god, Apple is scanning my phone for images.' This is not what is happening."

William Gallagher

157 Comments

🥳🥳🥳🥳🥳🥳🥳🥳🥳🥳

I hope this turns into an indefinite postponement. I think Apple meant well with trying to implement these features, but I’m glad they’re standing down for now, and hopefully this will be like that AirPower pad that never materialized.

Hollow victory.

As soon as the uproar has died down and Apple thinks it is safe, they will be back. They will frame what they put forth next time as "look what we have done to protect you," when in reality it will likely just be cosmetic.

Good! Kudos to Apple for trying to do the right thing, then backtracking when they realised it wasn't the right way to do it. My respect for Apple has been restored.