Security researchers say that Apple's CSAM prevention plans, and the EU's similar proposals, represent "dangerous technology" that expands the "surveillance powers of the state."

Apple has not yet announced when it intends to introduce its child protection features, after postponing them because of concerns from security experts. Now a team of researchers has published a report saying that similar plans from both Apple and the European Union represent a national security issue.

According to the New York Times, the report comes from more than a dozen cybersecurity researchers. The group began its study before Apple's initial announcement, and says it is publishing despite Apple's delay in order to warn the EU about this "dangerous technology."

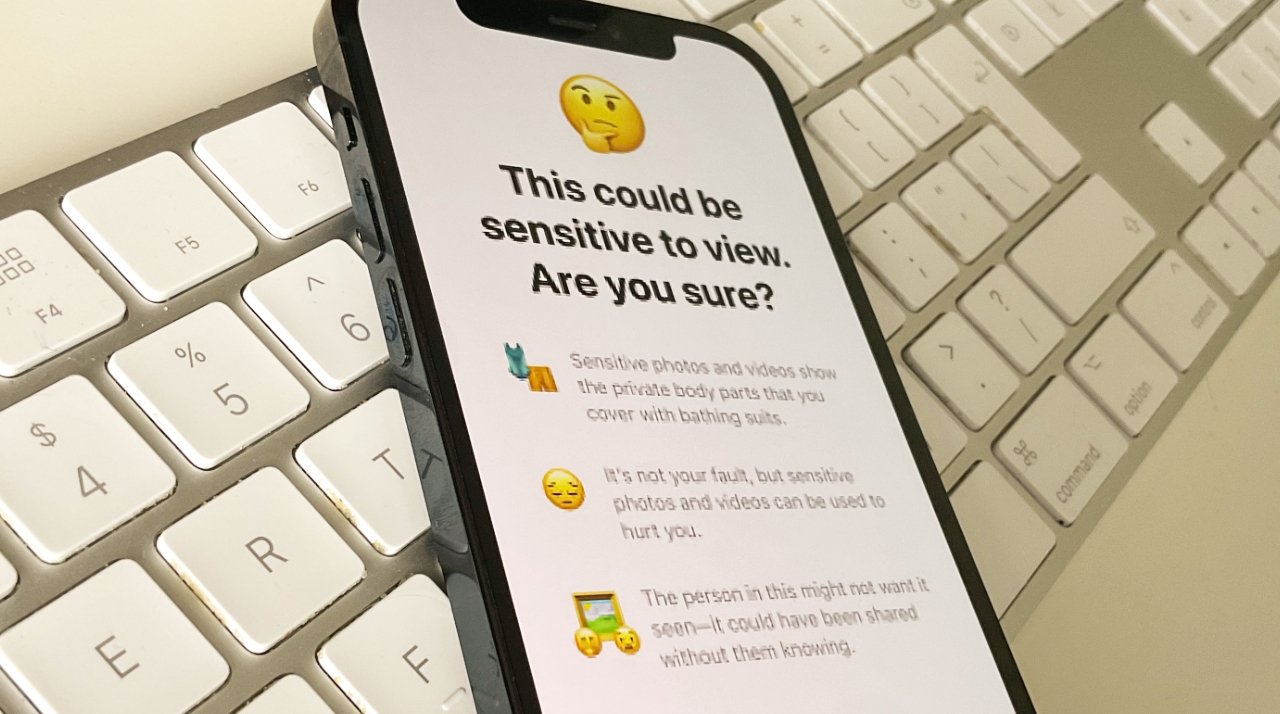

Apple's plan was for a suite of tools intended to protect children and limit the spread of child sexual abuse material (CSAM). One part would block suspected harmful images from being seen in Messages, and another would automatically scan images stored in iCloud.

The latter is similar to how Google has already been scanning Gmail for such images since 2008. However, privacy groups believed Apple's plan could lead to governments demanding that the firm scan images for political purposes.

"We wish that this had come out a little more clearly for everyone because we feel very positive and strongly about what we're doing, and we can see that it's been widely misunderstood," Apple's Craig Federighi said. "I grant you, in hindsight, introducing these two features at the same time was a recipe for this kind of confusion."

"It's really clear a lot of messages got jumbled up pretty badly," he continued. "I do believe the soundbite that got out early was, 'oh my god, Apple is scanning my phone for images.' This is not what is happening."

There was considerable confusion, which AppleInsider addressed, as well as strong objections from privacy organizations and governments.

The authors of the new report believe that the EU plans a similar system to Apple's in how it would scan for images of child sexual abuse. The EU's plan goes further in that it also looks for organized crime and terrorist activity.

"It should be a national-security priority to resist attempts to spy on and influence law-abiding citizens," the researchers wrote in the report seen by the New York Times.

"It's allowing scanning of a personal private device without any probable cause for anything illegitimate being done," said the group's Susan Landau, professor of cybersecurity and policy at Tufts University. "It's extraordinarily dangerous. It's dangerous for business, national security, for public safety and for privacy."

Apple has not commented on the report.

William Gallagher


21 Comments

"Confusion" was not the issue. Apple of course would say it is, because to do otherwise would be an admission that the feature was toxic and entirely contradictory to their public privacy stance. Privacy organisations and governments weren't "confused", they could foresee the potential privacy consequences. Apple knows full well the pushback was due to their public "what happens on your phone stays on your phone" stance, the polar opposite to scanning phones for CSAM - and the potential for further encroachment on privacy.

Expanding on the article just a bit:

... now the EU knows Apple possesses this capability – it might simply pass a law requiring the iPhone maker to expand the scope of its scanning. Why reinvent the wheel when a few strokes of a pen can get the job done in 27 countries?

“It should be a national-security priority to resist attempts to spy on and influence law-abiding citizens,” the researchers wrote ...

For those who want more details on what the EU may propose, read this report commissioned by the Council of the European Union and the recommended Council Resolution for Encryption. https://data.consilium.europa.eu/doc/document/ST-13084-2020-REV-1/en/pdf CSAM scanning as proposed by Apple is a horrible idea IMO.

Anyone saying "oh it's no different than Google does in the cloud" or "anyone that doesn't want it just don't use the Photos app" needs to read this.

Hopefully this technology will never be installed on users' devices!

Prediction: the CSAM horse is dead as a Monty Python parrot — no need to keep beating it. Yes, we know Apple says it’s only resting, and if we believe that, well…

How Apple didn’t see these privacy and security threats to their users and to society is mind-boggling.