Apple and Microsoft have provided details of their methods for detecting or preventing the distribution of child sexual abuse material (CSAM), and an Australian regulator has found their efforts lacking.

The Australian eSafety Commissioner demanded that major tech firms, including Apple, Facebook, Snapchat, and Microsoft, detail their methods for preventing child abuse and exploitation on their platforms. The demand was made on August 30, and the companies had 29 days to comply or face fines.

Apple and Microsoft are the first companies to receive scrutiny in this review, and according to Reuters, the regulator found their efforts insufficient. Neither company proactively scans user files on iCloud or OneDrive for CSAM, and neither uses algorithmic detection on FaceTime or Skype.

Commissioner Julie Inman Grant called the companies' responses "alarming." She said there was "clearly inadequate and inconsistent use of widely available technology to detect child abuse material and grooming."

Apple recently announced that it had abandoned its plans to scan photos uploaded to iCloud for CSAM. That approach would have become less effective anyway, since users now have access to fully end-to-end encrypted photo storage and backups.

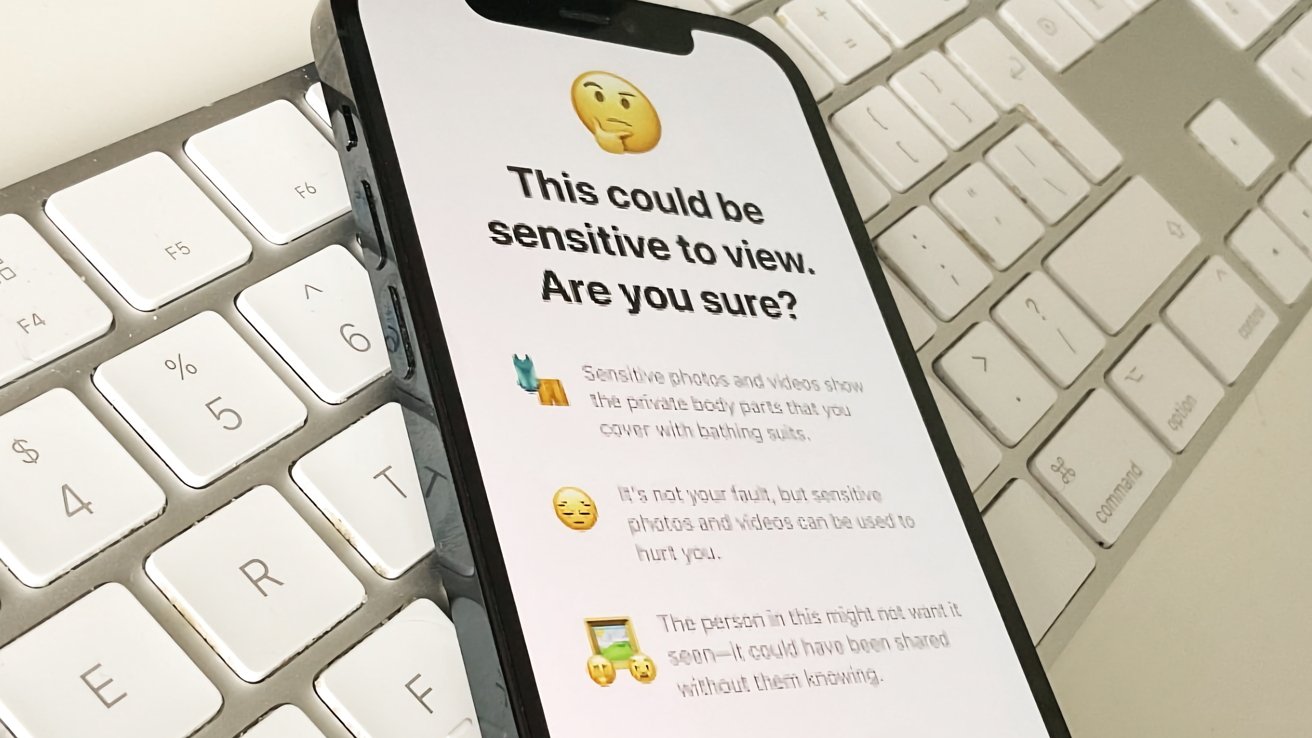

Instead of scanning for existing content being stored or distributed, Apple has decided to take a different approach that will evolve over time. Currently, devices used by children can be configured by parents to alert the child if nudity is detected in photos being sent over iMessage.

Apple plans to expand this capability to detect nudity in videos, then bring the detection and warning system to FaceTime and other Apple apps. Eventually, the company hopes to offer an API that would let developers use the detection system in their own apps.

Abandoning CSAM detection in iCloud has been celebrated by privacy advocates. At the same time, it has been condemned by child safety groups, law enforcement, and government officials.

Wesley Hilliard

Comments

Classic case of damned if you do and damned if you don’t. Are any of you critics even beginning to see the predicament companies like Apple are in? No, I guess not. All you do is threaten to boycott if they don’t comply with YOUR position. I always guffaw when I read the “I have purchased my last Apple product” rants, be it China, CSAM, unionization, or some other sociopolitical cause du jour. And yes, I have even succumbed to that rage myself recently. It accomplishes nothing.

So if they do it they’re horrible spies and if they don’t they’re in league with child perverts. Governments need to shut the f up.

Under the new Advanced Data Protection feature, which will be an opt-in feature for users (starting now in the USA, but likely to expand to other countries), Apple won't even have the ability to scan iCloud images because iCloud photos will be encrypted with keys that only the end user has access to. So is Australia going to ban the use of this new security feature in Australia?

https://appleinsider.com/articles/22/12/13/apples-advanced-data-protection-feature-is-here---what-you-need-to-know

"Apple's new Advanced Data Protection feature goes a step further and allows you to encrypt additional information in iCloud with a few new layers of security.

Data encrypted by enabling Advanced Data Protection

Apple notes that the only major iCloud data categories that aren't covered are Calendar, Contacts, and iCloud Mail, as these features need to interoperate with global systems."

I thought people got mad at Apple because they WANTED to start doing it, and the backlash was so bad that they backed off. Now people are mad about that? Sheesh, people, figure it out!