While Apple's controversial plan to hunt down child sexual abuse material with on-iPhone scanning has been abandoned, the company has other plans in mind to stop it at the source.

Apple announced two initiatives in late 2021 that aimed to protect children from abuse. One, which is already in effect today, warns minors before they send or receive photos with nude content. It uses algorithmic detection of nudity and warns only the kids; the parents aren't notified.

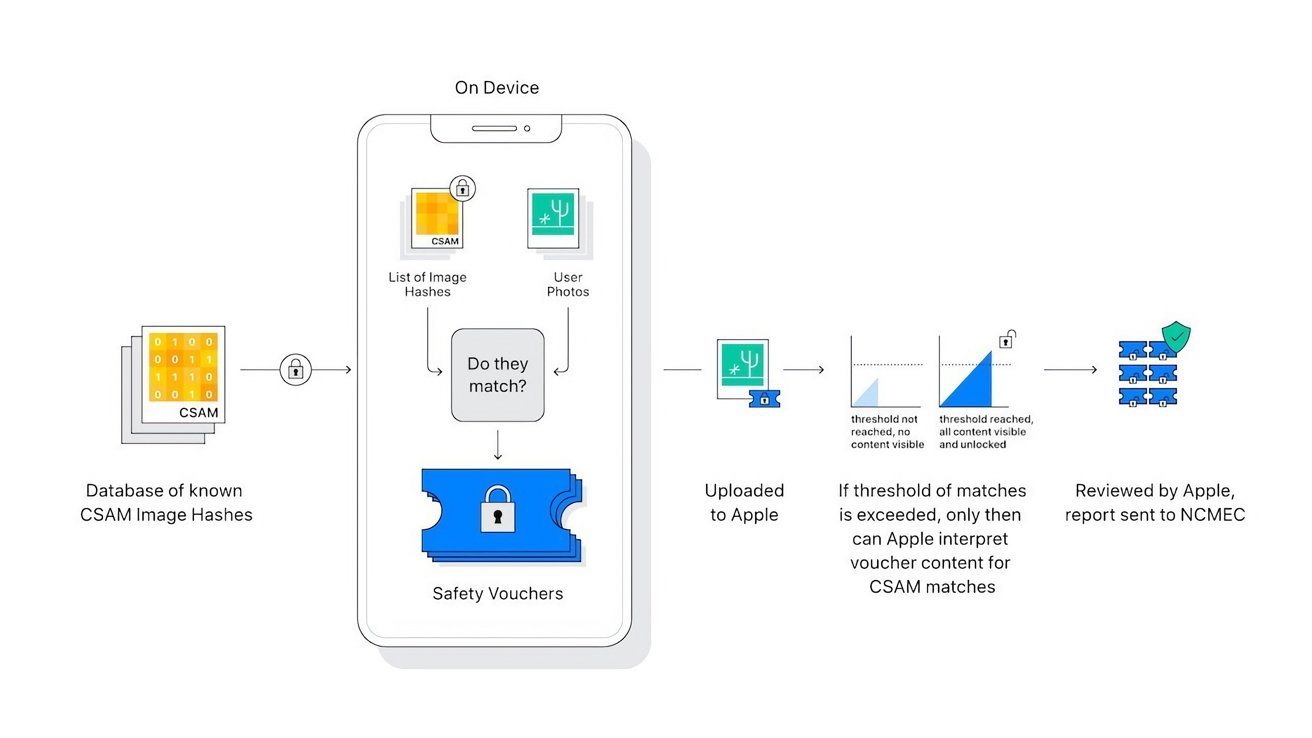

The second, and much more controversial, feature would have checked a user's photos for known CSAM content as they were being uploaded to iCloud. The analysis would have been performed locally, on the user's iPhone, using a hashing system.
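To make the idea of checking photos against hashes of known images more concrete, here is a minimal Python sketch. It is not Apple's method: Apple's published design relied on its own perceptual hash (NeuralHash) and on cryptographic safeguards that are not reproduced here. The toy `average_hash` function, the `max_distance` tolerance, and the `count_matches` helper are all hypothetical names chosen for illustration.

```python
from typing import Iterable, List

# A tiny stand-in for an image: rows of grayscale values (0-255).
Pixels = List[List[int]]


def average_hash(pixels: Pixels) -> int:
    """Toy perceptual hash (an assumption, not NeuralHash).

    Produces one bit per pixel, set when the pixel is brighter than the
    image's mean, so small uniform brightness changes keep the same hash.
    """
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches_known(image_hash: int, known_hashes: Iterable[int],
                  max_distance: int = 0) -> bool:
    """True if the hash is within max_distance bits of any known hash."""
    return any(hamming_distance(image_hash, known) <= max_distance
               for known in known_hashes)


def count_matches(images: Iterable[Pixels], known_hashes: Iterable[int],
                  max_distance: int = 0) -> int:
    """Count how many images match the known set (hypothetical helper)."""
    known = list(known_hashes)
    return sum(matches_known(average_hash(image), known, max_distance)
               for image in images)
```

A comparison like this only recognizes hashes that are already in the known set, so an image that is not in the database produces no match.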

After backlash from privacy experts, child safety groups, and governments, Apple paused the feature indefinitely for review. On Wednesday, Apple released a statement to AppleInsider and other venues explaining that it has abandoned the feature altogether.

"After extensive consultation with experts to gather feedback on child protection initiatives we proposed last year, we are deepening our investment in the Communication Safety feature that we first made available in December 2021.""We have further decided to not move forward with our previously proposed CSAM detection tool for iCloud Photos. Children can be protected without companies combing through personal data, and we will continue working with governments, child advocates, and other companies to help protect young people, preserve their right to privacy, and make the internet a safer place for children and for us all."

The statement comes moments after Apple announced new features that would end-to-end encrypt even more iCloud data, including iMessage content and photos. These strengthened protections would have made the server-side flag system impossible, which was a primary part of Apple's CSAM detection feature.

Taking a different approach

Amazon, Google, Microsoft, and others perform server-side scanning as a requirement by law, but end-to-end encryption will prevent Apple from doing so.

Instead, Apple hopes to address the issue at its source — creation and distribution. Rather than target those who hoard content in cloud servers, Apple hopes to educate users and prevent the content from being created and sent in the first place.

Apple provided extra details about this initiative to Wired. While there isn't a timeline for the features, it would start with expanding algorithmic nudity detection to video for the Communication Safety feature. Apple then plans to expand these protections to its other communication tools and, after that, to give developers access as well.

"Potential child exploitation can be interrupted before it happens by providing opt-in tools for parents to help protect their children from unsafe communications," Apple also said in a statement. "Apple is dedicated to developing innovative privacy-preserving solutions to combat Child Sexual Abuse Material and protect children, while addressing the unique privacy needs of personal communications and data storage."

Other on-device protections exist in Siri, Safari, and Spotlight to detect when users search for CSAM. This redirects the search to resources that provide help to the individual.

Features that educate users while preserving privacy have been Apple's goal for decades. All the existing implementations for child safety seek to inform, and Apple never learns when the safety feature is triggered.

Wesley Hilliard


15 Comments

As I have said before, it does not make sense unless you go after the sources, not the end pervert. Plus, if the image is not in the database to compare the hash against, you are not going to catch the new sources. Glad Apple saw the error of its altruistic ways. Yes, they thought they were well-meaning and doing it for the right reason, but it came at a cost to everyone.

I am amazed that Apple backtracked on what it claimed was such a noble and virtuous cause by eventually accepting it could also end up being used for another kind of evil itself.

Unless that’s what “they” want us to think! Bwaahahhhaha!

A noble idea that was doomed to fail. Glad Apple was willing to admit their mistake and backtrack.

That is not true. In the US, there are no laws that require companies like Amazon, Google, Microsoft or others to perform CSAM searches on the data stored on their servers. Our US Constitution would forbid the government from passing such laws. Such laws would violate the 4th Amendment's protection against unreasonable and unwarranted search without probable cause.

The only law that exists is that companies must report to law enforcement any instances of child porn that they find on their servers. But no law exists that says they must actively search for it.

However, Amazon, Google, Microsoft and others can voluntarily perform CSAM scanning on the data stored on their servers at the request of foundations against child exploitation, even if no law exists that requires them to do it.

The gray area is that many foundations against child exploitation often pressure companies to perform CSAM scanning or otherwise face public shame for not performing it. And many of these foundations are government funded and might be construed as actors working for the government, and thus subject to the US Constitution.

https://crsreports.congress.gov/product/pdf/LSB/LSB10713

>Currently, n

Citizens in the EU, on the other hand, have no Constitution protecting them from unreasonable and unwarranted search by the government, and thus might be (or soon will be) subject to mandatory CSAM searches on the data they keep on a third-party server, even if it's supposed to be encrypted end to end.

https://techcrunch.com/2022/05/11/eu-csam-detection-plan/?guccounter=1&guce_referrer=aHR0cHM6Ly93d3cuZ29vZ2xlLmNvbS91cmw_c2E9dCZyY3Q9aiZxPSZlc3JjPXMmc291cmNlPXdlYiZjZD0mdmVkPTJhaFVLRXdpVXc5cjBfZWo3QWhWb01EUUlIY2J2Q2FBUUZub0VDQW9RQVEmdXJsPWh0dHBzJTNBJTJGJTJGdGVjaGNydW5jaC5jb20lMkYyMDIyJTJGMDUlMkYxMSUyRmV1LWNzYW0tZGV0ZWN0aW9uLXBsYW4lMkYmdXNnPUFPdlZhdzFjYkw5RlF3Y0tKQ0ZLQnhFYTFickg&guce_referrer_sig=AQAAAAa00vwPWJ8hov6dKhTHkWFDKxkOOna5JP7CvrJUxLg47DVnrCVqYY0W3UQgoZnFKub-eQ4VwMUcUGzxE8g2rpl_SwL_Ooqx8PNCJZTGn5BCHk_tOBTD_MGHqc0mxVRWhoGHSyGYPqgrqabbyiczcl7ZIE6uWKf7Rjm3p2-fkWxi

https://9to5mac.com/2022/05/12/eu-csam-scanning-law/

In the US, citizens' privacy is better protected from government intrusion because of the US Constitution, but laws protecting our privacy from third parties tend to be lax. In the EU, citizens' privacy is better protected from third-party intrusion by strict privacy laws, while laws protecting their privacy from government intrusion tend to be lax.

And the FBI was quick to start crying “tHiNk AbOuT tHe ChIlDrEn”