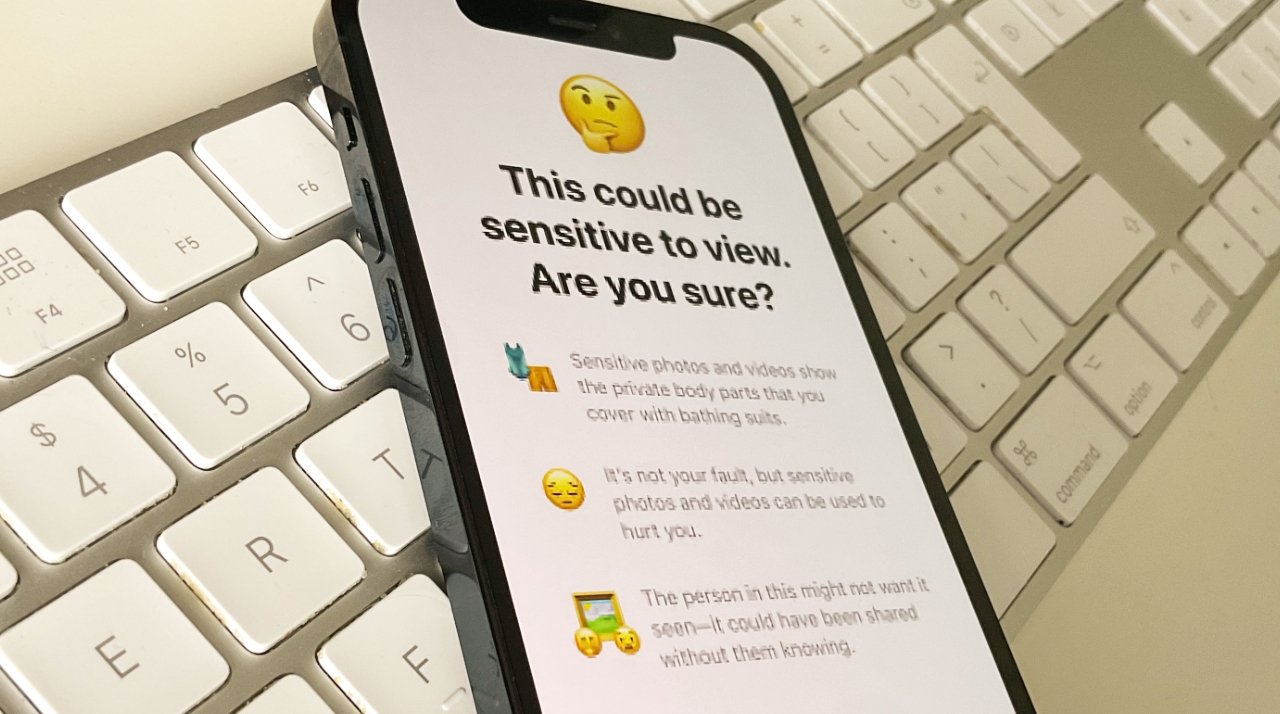

More than 80 civil rights groups have sent an open letter to Apple, asking the company to abandon its child safety plans in Messages and Photos, fearing that governments will expand the technology's use.

Following the German government's description of Apple's Child Sexual Abuse Material plans as surveillance, 85 organizations around the world have joined the protest. The groups, including 28 based in the US, have written to CEO Tim Cook.

"Though these capabilities are intended to protect children and to reduce the spread of child sexual abuse material (CSAM)," says the full letter, "we are concerned that they will be used to censor protected speech, threaten the privacy and security of people around the world, and have disastrous consequences for many children."

"Once this capability is built into Apple products, the company and its competitors will face enormous pressure — and potentially legal requirements — from governments around the world to scan photos not just for CSAM, but also for other images a government finds objectionable," it continues.

"Those images may be of human rights abuses, political protests, images companies have tagged as 'terrorist' or violent extremist content, or even unflattering images of the very politicians who will pressure the company to scan for them," says the letter.

"And that pressure could extend to all images stored on the device, not just those uploaded to iCloud. Thus, Apple will have laid the foundation for censorship, surveillance and persecution on a global basis."

Signatories to the letter, including the Electronic Frontier Foundation, have separately been promoting its criticisms.

Apple's latest feature is intended to protect children from abuse, but the company doesn't seem to have considered the ways in which it could enable abuse https://t.co/FjIJN8bKaL

— EFF (@EFF) August 18, 2021

The letter concludes by urging Apple to abandon the new features. It also urges "Apple to more regularly consult with civil society groups" in the future.

Apple has not responded to the letter. However, Apple's Craig Federighi has previously said that the company's child protection message was "jumbled" and "misunderstood."

William Gallagher

48 Comments

A little late for that now. Apple already opened that can of worms (if it is actually a problem) just by announcing it. I'd still kind of like to see this implemented. I think Apple has addressed this as best it can, and governments can pass laws mandating that companies scan for this stuff regardless of any prior implementation. Tech has made it easy to share these CSAM photos, so I'd like to see it be part of the solution too.

FYI to EFF: totalitarian governments already have their populations under surveillance. You're not thwarting totalitarianism by having Apple remove the CSAM hash scanning capability. Citizens of China, Russia, Turkey etc. use smartphones and are still under totalitarian control regardless.

Apple can do no wrong in the eyes of many, but this new feature Apple has developed is wrong. It’s a bad capability put to good use. The objective of reducing the transmission of CSAM is good, but this is like plugging leaks in the proverbial dike. It makes transmitting illicit content more difficult, but if implemented it will just force the use of other pathways to move the content about.

However, the byproduct of this action, the scanning of content on people’s devices, will be disastrous. Now that governments know Apple has the ability to interrogate the content on people’s devices, it won’t be long before they require Apple to perform other types of content scanning on devices. Governments routinely require Apple to divulge iCloud content, and that content is not encrypted. Users had the option of keeping content secure from government eyes by keeping it on their devices and out of iCloud. This capability will mark the beginning of the end of that security.

This capability is totally at odds with Apple’s heretofore emphasis on the privacy and security of content on its devices. The law of unintended consequences is going to have a significant impact if it is implemented. This is an example of the old Ben Franklin adage about giving up some freedom for better security and ending up with neither. I’m surprised that Apple leadership hasn’t thought this decision through better, and I’m fairly sure the marketing department at Apple somehow sees this as beneficial to the company and its revenues, which I think is decidedly wrong.