A new research paper shows that Apple has practical solutions to technical AI issues that other firms appear to be ignoring, specifically how to run massive large language models on lower-memory devices like the iPhone.

Despite claims that Apple is behind the industry on generative AI, the company has twice now revealed that it is continuing its longer-term planning rather than racing to release a ChatGPT clone. The first sign was a research paper that proposed an AI system called HUGS, which generates digital avatars of humans.

Now, as spotted by VentureBeat, a second research paper proposes solutions for deploying enormous large language models (LLMs) on devices with limited RAM, such as iPhones.

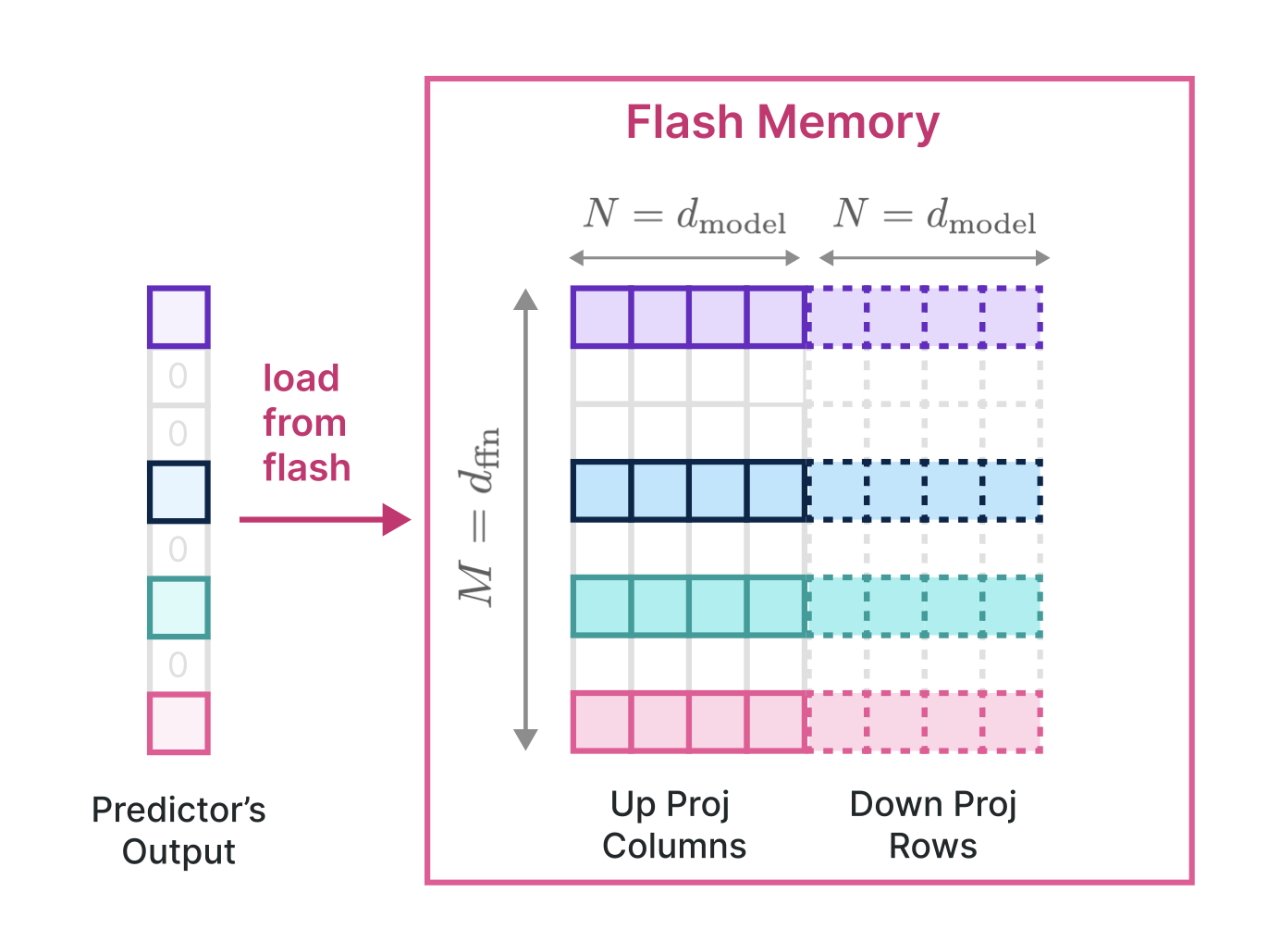

The new paper is called "LLM in a flash: Efficient Large Language Model Inference with Limited Memory." Apple says that it "tackles the challenge of efficiently running LLMs that exceed the available DRAM capacity by storing the model parameters on flash memory but bringing them on demand to DRAM."

So the whole LLM still needs to be stored on the device, but not all of it has to sit in RAM at once. Flash storage acts as a kind of virtual memory, with parameters moved into RAM as they are needed, not unlike how macOS handles memory-intensive tasks.
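To make the general idea concrete, here is a minimal Python sketch of on-demand loading. It is not Apple's implementation: a weight matrix lives in a file standing in for flash storage, and a small least-recently-used (LRU) cache stands in for DRAM, so only the rows actually requested are read from "flash". The names `write_matrix` and `FlashBackedMatrix`, and the LRU eviction policy, are illustrative assumptions; the paper itself is more selective about what it loads.

```python
import os
import struct
import tempfile
from collections import OrderedDict

FLOAT_SIZE = struct.calcsize("<f")  # 4 bytes per little-endian float32


def write_matrix(path, rows):
    """Write a row-major float32 matrix to a file that stands in for flash storage."""
    with open(path, "wb") as f:
        for r in rows:
            f.write(struct.pack(f"<{len(r)}f", *r))


class FlashBackedMatrix:
    """Hypothetical sketch: parameters stay in a file ("flash"); an LRU cache plays DRAM."""

    def __init__(self, path, n_rows, n_cols, cache_rows):
        self.path = path
        self.n_rows = n_rows
        self.n_cols = n_cols
        self.row_bytes = n_cols * FLOAT_SIZE
        self.cache_rows = cache_rows   # how many rows fit in our pretend DRAM budget
        self.cache = OrderedDict()     # row index -> list of floats, oldest first
        self.flash_reads = 0           # number of rows fetched from the file

    def row(self, i):
        if not 0 <= i < self.n_rows:
            raise IndexError(i)
        if i in self.cache:
            self.cache.move_to_end(i)  # cache hit: mark as most recently used
            return self.cache[i]
        # Cache miss: seek straight to row i and read only that row from "flash".
        with open(self.path, "rb") as f:
            f.seek(i * self.row_bytes)
            data = f.read(self.row_bytes)
        self.flash_reads += 1
        values = list(struct.unpack(f"<{self.n_cols}f", data))
        self.cache[i] = values
        if len(self.cache) > self.cache_rows:
            self.cache.popitem(last=False)  # evict the least recently used row
        return values


# Demo: a 100-row matrix on "flash", with room for only 8 rows in "DRAM".
path = os.path.join(tempfile.mkdtemp(), "weights.bin")
write_matrix(path, [[float(i)] * 4 for i in range(100)])
m = FlashBackedMatrix(path, n_rows=100, n_cols=4, cache_rows=8)
for i in [3, 7, 3, 7, 42]:
    m.row(i)
print(m.flash_reads)  # 3: the repeat requests for rows 3 and 7 hit the cache
```

Only three of the five requests touch the file. A real system also has to decide what to fetch and how to read it efficiently, which is what the paper's two techniques address.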

"Within this flash memory-informed framework, we introduce two principal techniques," says the research paper. "First, 'windowing' strategically reduces data transfer by reusing previously activated neurons... and second, 'row-column bundling,' tailored to the sequential data access strengths of flash memory, increases the size of data chunks read from flash memory."
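Both techniques can be sketched in a few lines of Python, again as an illustration under stated assumptions rather than Apple's code; the names `bundle` and `SlidingWindowNeuronCache` are hypothetical. "Windowing" here keeps in memory the feed-forward neurons used by the last few tokens and loads only newly active ones. "Row-column bundling" stores the i-th column of the up-projection next to the i-th row of the down-projection, so one neuron's weights come from flash in a single larger read. Which neurons are active is simply taken as given; the paper predicts this, and that step is not modelled here.

```python
from collections import deque


def bundle(up_cols, down_rows):
    """Row-column bundling (sketch): concatenate column i of the up-projection with
    row i of the down-projection, so neuron i's weights form one contiguous chunk
    that can be fetched with a single larger read instead of two small ones."""
    return [up_cols[i] + down_rows[i] for i in range(len(up_cols))]


class SlidingWindowNeuronCache:
    """Windowing (hypothetical sketch): keep resident the neurons that any of the
    last `window` tokens activated, and load only neurons that are newly active."""

    def __init__(self, window):
        self.window = window
        self.history = deque()  # one set of active neuron ids per recent token
        self.resident = {}      # neuron id -> bundled weights held in "DRAM"

    def step(self, active, load_bundle):
        # Reuse neurons that are already resident; fetch only the missing ones.
        new = [n for n in active if n not in self.resident]
        for n in new:
            self.resident[n] = load_bundle(n)
        # Slide the window forward by one token.
        self.history.append(set(active))
        if len(self.history) > self.window:
            self.history.popleft()
        # Drop neurons that no token in the current window needs any more.
        still_needed = set().union(*self.history)
        for n in list(self.resident):
            if n not in still_needed:
                del self.resident[n]
        return new


# Demo with tiny made-up weights: d_model = 2, four feed-forward neurons.
up_cols = [[float(i), float(i)] for i in range(4)]      # column i of the up-projection
down_rows = [[-float(i), -float(i)] for i in range(4)]  # row i of the down-projection
bundles = bundle(up_cols, down_rows)

cache = SlidingWindowNeuronCache(window=2)
loads = [len(cache.step(active, lambda n: bundles[n])) for active in ([0, 1], [1, 2], [2, 3])]
print(loads)                   # [2, 1, 1]
print(sorted(cache.resident))  # [1, 2, 3]
```

With a window of two tokens, the three steps load 2, 1 and 1 bundles instead of 6, and neuron 0 is dropped once no token in the window uses it.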

What this ultimately means is that LLMs larger than a device's available RAM can still be deployed on devices with limited memory. That means Apple can leverage AI features across more devices, and therefore in more ways.

"The practical outcomes of our research are noteworthy," claims the research paper. "We have demonstrated the ability to run LLMs up to twice the size of available DRAM, achieving an acceleration in inference speed by 4-5x compared to traditional loading methods in CPU, and 20-25x in GPU."

"This breakthrough is particularly crucial for deploying advanced LLMs in resource-limited environments," it continues, "thereby expanding their applicability and accessibility."

Apple has made this research public, as it did with the HUGS paper. So instead of being behind, it is actually working to improve AI capabilities for the whole industry.

This fits with the view of analysts who believe that, given the size of its user base, Apple will benefit the most as AI goes further mainstream.

William Gallagher

33 Comments

This article carefully steps around the fact that this improvement will make zero difference to Apple's most visible and most deficient use of AI: Siri.

Siri is the primary and by far most visible use of "AI" at Apple. People quite rightly have a problem with Siri being thick as a brick. Siri has numerous problems which are not going to be solved by improving the creation of useless avatars for a niche device no one yet owns outside of the developer space. This research specifically does nothing to help Siri for several reasons:

This article does nothing to convince anyone that Apple is not far, far behind the AI curve. Making a clockwork timepiece 10% more efficient is irrelevant when everyone else has long since moved to battery. Apple needs exactly what this article claims it doesn't: a clone of ChatGPT. Siri was supposed to be conversational more than a decade ago, when Apple introduced it on the iPhone 4S. It has arguably got worse since then.

However, Apple's AI in other areas seems pretty good: Photos, for example, is good at recognising objects, people and scenes, and that's on-device. But claiming that Apple isn't behind the AI curve when its most visible use of AI is a disaster is pure fanboyism.

I think it's fair to say that Apple is behind in this area.

Objectively, this year has been about ChatGPT-style usage, and Apple hasn't brought anything to market while others have.

Apple is also recruiting for specific roles in AI. So far, most of the talk has been just that: talk.

Talking about ML, as they made a point of doing, is stating the obvious here. Who isn't using ML?

In the case of LLMs on resource-strapped devices, again, some manufacturers are already using them.

A Pangu LLM underpins Huawei's Celia voice assistant on its latest phones.

I believe Xiaomi is also using LLMs on some of its phones too (although I don't know in which areas).

The notion of trying to do more with less is an industry constant. Research never stops in that area, and routers in particular have been a persistent research target, being ridiculously low on spare memory and CPU power. I remember, many years ago, doing some external work for the Early Bird project, where the entire goal was efficient, real-time detection of worm signatures in data streams without impacting the router's performance.

Now, AI is key to real-time detection of threats in network traffic and storage (ransomware in the case of storage, which is another resource-strapped area).

LLMs have to be run wherever the need dictates. In some cases there will be no issue with carrying out tasks in the cloud or at the edge. In other cases you might want them running more locally, maybe even in your earbuds (for voice recognition or Bone Voice ID purposes, etc.).

Or in your TV, or, even better, across multiple devices at the same time: resource pooling.

I use Google quite a bit to look up facts and figures. But as much as I'd love to have a conversational AI at which I could throw random questions and get answers, I simply don't trust most of today's generative LLMs to give me accurate answers to the questions posed.

The more conversational the AI, the more likely it is to hallucinate and give me answers that seem perfectly accurate... when in fact they're anything but.

If I were Apple, that's a problem I'd want to solve.

At best you can say that Apple is behind but it is attempting to catch up by looking to the future. But if it fails to do that, then it will remain behind. Way behind.