Apple has filed patent applications for many technologies that don't seem that interesting on their face. Together, they paint a picture of a world where Apple Vision Pro and augmented reality experiences could be available anywhere.

In the movie Free Guy, Ryan Reynolds stars as Guy, a Non-Playable Character (NPC) in a video game world. He is destined to relive the same day over and over as a nondescript teller in a nondescript bank that is robbed again and again by criminals wearing sunglasses.

In the movie's opening scene, Guy explains that the sunglass wearers can do anything they want and are seen as omnipotent heroes. Unbeknownst to him, these powerful people are avatars of the real-world players of the console game he lives inside.

During one of the daily bank heists, instead of meekly following the sunglass-wearing robber's orders, Guy fights back and takes his sunglasses. When he puts them on after leaving the bank, Guy can suddenly see the world around him as the players do.

It's a Fortnite-inspired mashup where player health bars are visible. A floating first-aid kit restores Guy's health, and clothing and weapon upgrades are for sale wherever he looks.

Reading patents doesn't normally remind me of movies. Still, in a recent review of Apple's weekly patent updates, I found a few related applications that, taken together, hint at Apple's plans to make this movie come true, at least to some degree.

Apple's AR-changing patents

The two most interesting applications are "System for determining position both indoor and outdoor" and "Notification of augmented reality content on electronic device."

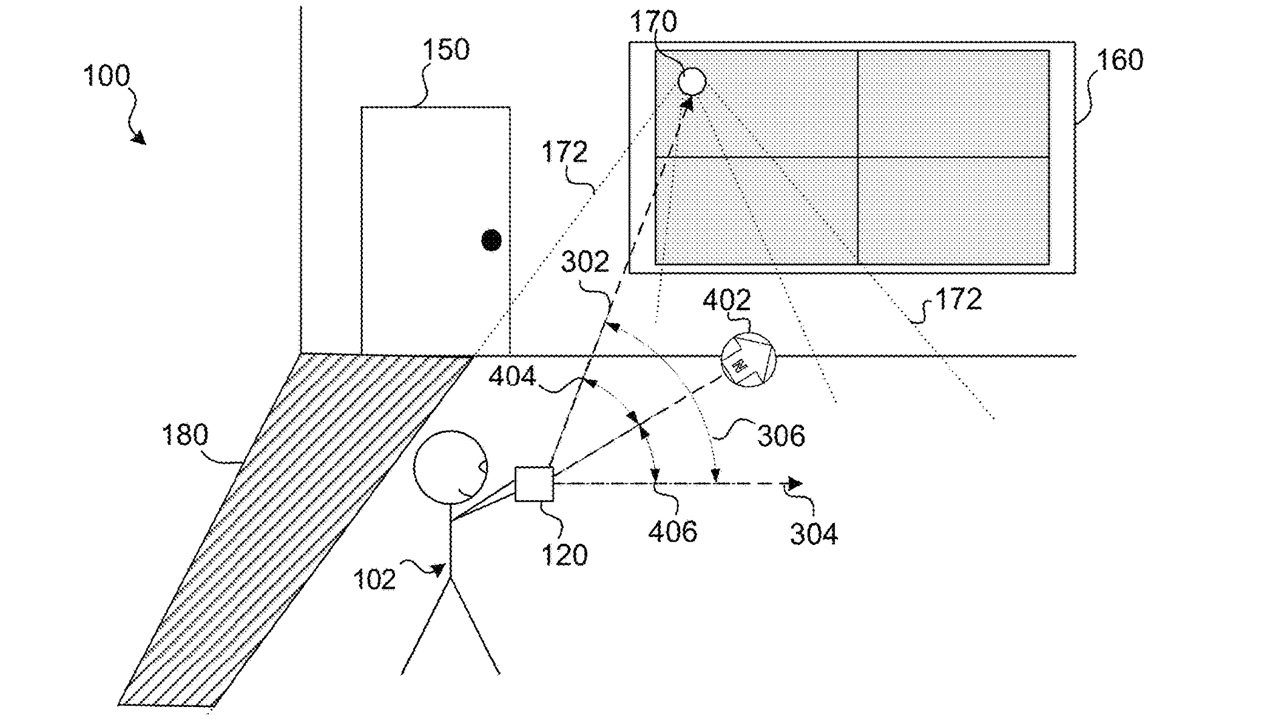

The more important of the two is the "system for determining position indoors and out." It describes pinpointing a user's location precisely by drawing on a wide range of devices in addition to Apple's Ultra Wideband chip. Devices like WiFi routers can already help determine a person's location, but usually only approximately.

Triangulation gives a general sense of a person's location. Apple's patent adds some twists that make pinpointing a location much more accurate by drawing on several additional types of wireless devices.

The more signals are available, the more precise the location estimate becomes, just as a GPS receiver grows more accurate the more satellites it can detect.
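The idea of combining several signals into one position can be illustrated with trilateration. Trilateration is a standard technique that turns distance estimates into a position, and it is not necessarily what Apple's filing uses. The minimal Python sketch below assumes each wireless device (an "anchor") sits at a known 2D position, and that a distance to it has already been estimated, for example from signal timing or strength. The function name, room layout and distances are hypothetical and chosen only for illustration.

```python
from math import hypot


def trilaterate(anchors, distances):
    """Estimate a 2D (x, y) position from known anchor positions and the
    measured distance to each anchor, using linearized least squares.

    anchors:   list of (x, y) positions of fixed wireless devices (hypothetical)
    distances: measured distance from the user to each anchor, same order
    """
    if len(anchors) < 3 or len(anchors) != len(distances):
        raise ValueError("need at least three anchors and one distance per anchor")

    (x0, y0), d0 = anchors[0], distances[0]

    # Each anchor defines a circle (x - xi)^2 + (y - yi)^2 = di^2.
    # Subtracting the first circle's equation from the others cancels the
    # x^2 and y^2 terms, leaving linear equations in x and y.
    rows, rhs = [], []
    for (xi, yi), di in zip(anchors[1:], distances[1:]):
        rows.append((2 * (xi - x0), 2 * (yi - y0)))
        rhs.append(d0**2 - di**2 + xi**2 - x0**2 + yi**2 - y0**2)

    # Solve the (possibly overdetermined) system with the normal equations
    # (A^T A) p = A^T b; with only two unknowns this is a 2x2 solve.
    a11 = sum(ax * ax for ax, _ in rows)
    a12 = sum(ax * ay for ax, ay in rows)
    a22 = sum(ay * ay for _, ay in rows)
    b1 = sum(ax * v for (ax, _), v in zip(rows, rhs))
    b2 = sum(ay * v for (_, ay), v in zip(rows, rhs))

    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear, so the position is ambiguous")

    x = (a22 * b1 - a12 * b2) / det
    y = (a11 * b2 - a12 * b1) / det
    return x, y


if __name__ == "__main__":
    # Hypothetical setup: four devices at the corners of a 10 m x 10 m room,
    # with the user actually standing at (3, 4).
    anchors = [(0, 0), (10, 0), (0, 10), (10, 10)]
    true_pos = (3, 4)
    distances = [hypot(true_pos[0] - ax, true_pos[1] - ay) for ax, ay in anchors]
    print(trilaterate(anchors, distances))  # a point very close to (3, 4)
```

Because the system is solved by least squares, every extra anchor adds another equation. With noisy real-world distances, more equations tend to average out individual measurement errors, which is the intuition behind "more signals, more precision."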

The patent describes measuring and evaluating signals from common wireless devices simultaneously to build a more accurate picture of someone's location in a given space. WiFi and Bluetooth transmitters can already be used in tandem, but this patent adds something interesting.

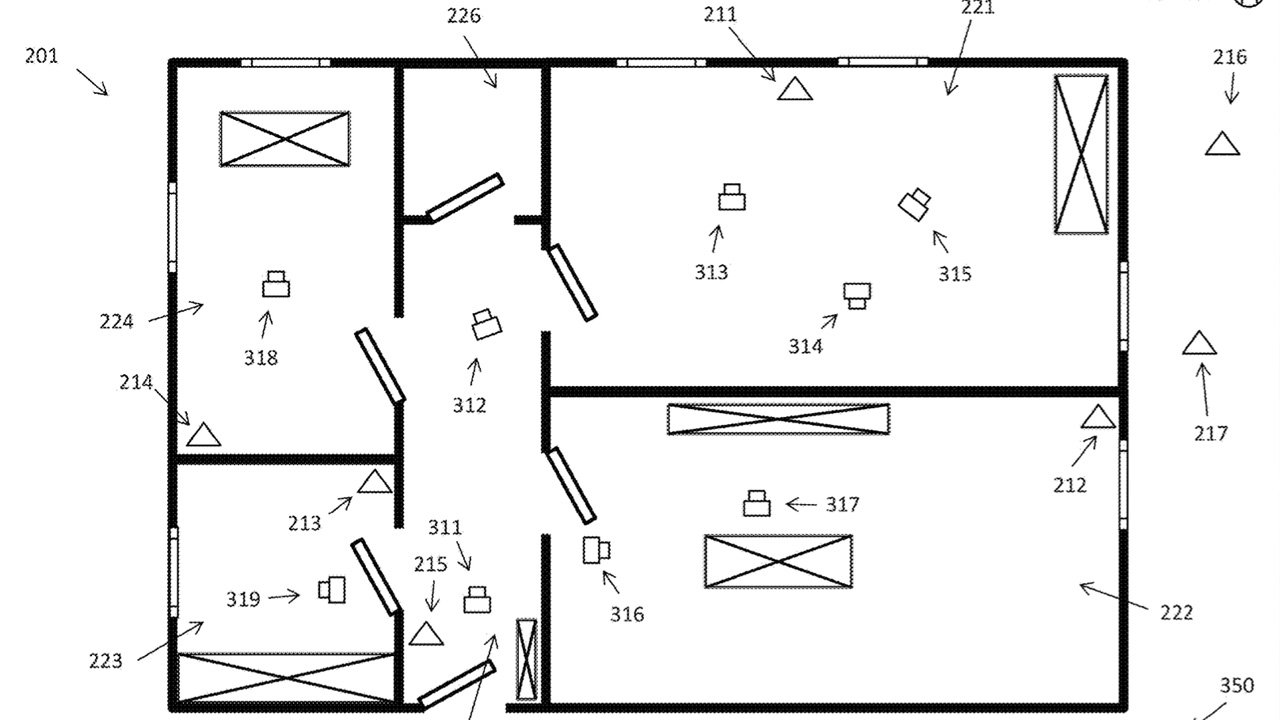

This illustration from Apple's patent application shows multiple cameras used for location detection.

The application also describes using cameras that track users and broadcast location data. In practice, this sounds similar to the technology in Amazon's Amazon Go stores. In Amazon's case, multiple cameras track customers from multiple angles to drive an automatic checkout system that charges shoppers for what the store's computers saw them pick up.

Apple's application of this system would instead locate a user in a physical space to display mixed reality (MR) content. Currently, Apple Vision Pro relies on its passthrough cameras and onboard sensors to determine where a user is, including where they have placed things like virtual displays or "hanging" artwork.

Apple's technology would enable MR objects to be placed around any environment without relying on image recognition to track objects.

Using multiple small devices could allow for interactive experiences in the "real world" with hyper-precise user positioning. With each device added to the mix, the experience would improve. I can imagine some pretty interesting use cases for this technology.

As in Free Guy, immersive interactive experiences would be possible with Apple Vision Pro and future devices. Unlike in Free Guy, this tech could enhance a user's interaction with the world in a way more reminiscent of a William Gibson novel, hopefully without the dystopia.

Indoor accuracy for better AR experiences

There are obvious, if mundane, uses in retail. For example, you could walk into an Apple Store and see an iMac's specs hovering above it, or pick up an iPhone case and see an image of it superimposed over your phone to preview how it looks.

The ability to overlay MR content on the physical world isn't new and is already used in everything from games to surgery.

Surgical team with an Apple Vision Pro (left) (source: London Independent Hospital via the Daily Mail)

Home team advantage

The idea of an immersive sporting event is more interesting. The main benefit of watching a game on TV instead of courtside is that the broadcasts include real-time stats, information about the players and the game, and expert commentary.

Overlaying statistics while watching a game is nothing new, but this position-aware technology could place MR content with hyper-accurate positioning. Rather than generic MR elements, the ability to pinpoint each spectator's location would open up some interesting possibilities.

The key to this patent is extending this idea to outdoor environments. Typical outdoor spaces lack multiple WiFi access points, which limits triangulation. Adding low-power Bluetooth devices to the mix could dramatically improve location detection.

Adding cameras that transmit data about their viewing angles would let someone set up several devices and create a location-aware environment. Perhaps an Apple iSpy is coming soon.

One of the best MR technologies used in sports today is the virtual first-down line in football broadcasts. It's easy to see the down marker in person, but on TV it's difficult to visualize where the offense needs to reach to get a first down.

While you can superimpose data over the real world with Apple Vision Pro and Meta's Quest 3, that information can't be location-specific. In other words, you can overlay general stats about a game, but you can't orient them to the user's seat.

In this example, the first-down marker could be visible no matter where someone is sitting. Whether behind the end zone or up in the stands at the 50-yard line, the down line could be accurate relative to the user's point of view.

Real-time data could be superimposed over individual players, following them across the field no matter where someone is sitting. For example, a baseball player's batting average could float over the player regardless of where the attendee is sitting.

Because accuracy would improve with every transmission point, a venue or retail store could add simple devices, such as extra WiFi access points and cameras, to power immersive experiences.

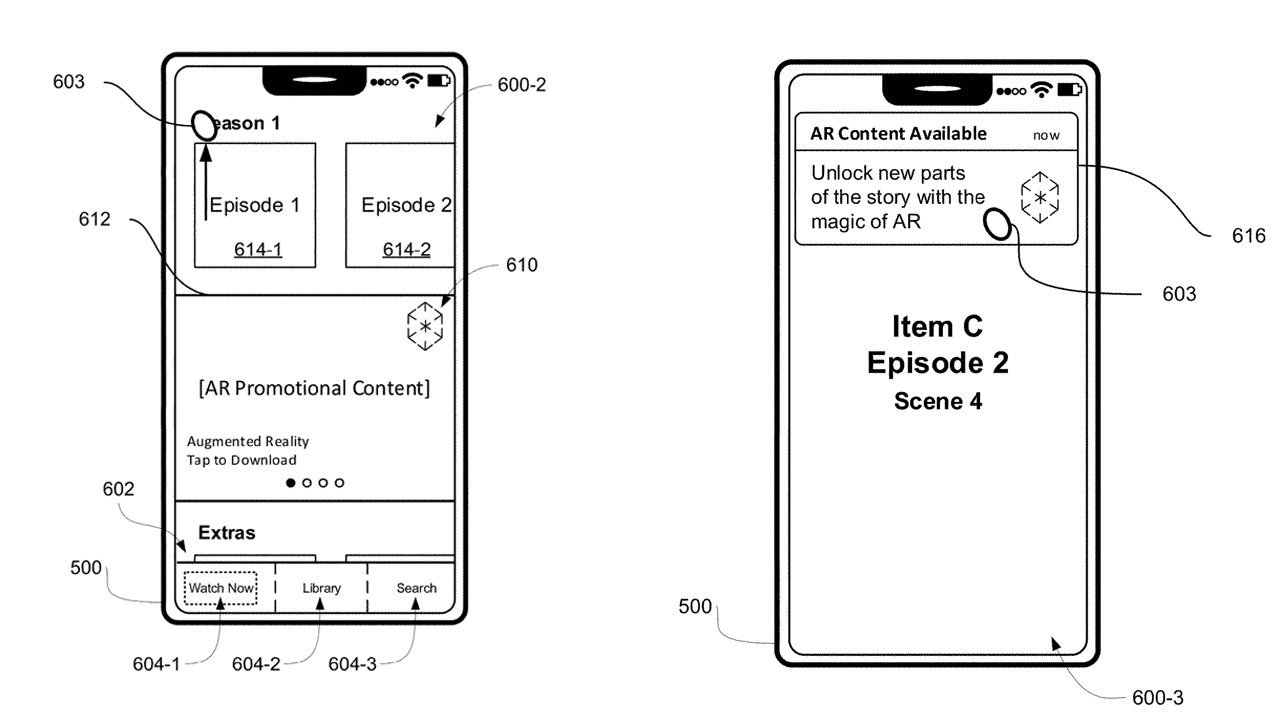

These examples show notifications of AR content for TV shows or movies, but the idea could extend to more interesting AR uses.

Finding AR near you

This ties into the second interesting patent, "Notification of augmented reality content on an electronic device." On its own, it looks like little more than a way to trigger an alert on a phone when an MR experience is nearby.

Combined with the first patent, it could let someone know when they've entered an immersive environment. Presumably, the notifications would appear on an Apple Watch or iPhone. For now, most people would have the experience itself on an iPhone, since it can already do mixed reality.

These patents aren't the first to point to a more immersive AR world, though they are the first to describe extending this experience to the world at large.

Apple is looking to improve the accuracy of MR headsets, and an interesting new patent application points to another way a user's position could be tracked outdoors, or indoors under the right conditions.

This application uses "celestial objects" to help orient a 3D environment. Most systems for determining direction rely on an electronic compass and on recognizing observed objects like furniture and walls. This application would also incorporate the position of the sun and the moon relative to the user to provide pinpoint accuracy.

The patent shows the sun being detected through a window in a room, though it also describes its use outdoors. In the example of MR at a stadium, the position of the sun overhead would provide strong contextual clues as to where a user is looking.

Of course, this would also require the device to have hyper-accurate information about the sun's position. That position depends not only on the date and time of day but also on the user's geographic location.
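The application's exact method isn't described here, but the core idea of using the sun as a compass reduces to simple angle arithmetic once the sun's true position is known. The minimal Python sketch below makes two assumptions. First, the sun's azimuth is already available from a solar-position calculation. Second, the camera can measure how far the sun sits left or right of the device's forward direction. The names `heading_from_sun` and `blend_headings`, and the default 0.5 weight, are hypothetical and illustrative, not taken from Apple's filing.

```python
from math import atan2, cos, degrees, radians, sin


def heading_from_sun(sun_azimuth_deg, sun_bearing_in_view_deg):
    """Estimate which compass direction the device is facing.

    sun_azimuth_deg: the sun's true azimuth in degrees clockwise from north,
        assumed to come from a solar-position calculation for the current
        date, time, and location.
    sun_bearing_in_view_deg: how far the sun appears to the right (+) or
        left (-) of the device's forward direction, in degrees, assumed to
        be measured from a camera image.
    """
    # sun azimuth = device heading + relative bearing, so the heading is the
    # azimuth minus the bearing, wrapped into the range [0, 360).
    return (sun_azimuth_deg - sun_bearing_in_view_deg) % 360.0


def blend_headings(compass_deg, sun_deg, sun_weight=0.5):
    """Combine a compass heading with a sun-derived heading using a weighted
    circular mean. sun_weight (hypothetical) is the trust placed in the sun
    estimate, between 0 and 1. Result is not meaningful if the two inputs
    point in exactly opposite directions with equal weight."""
    w_compass, w_sun = 1.0 - sun_weight, sun_weight
    s = w_compass * sin(radians(compass_deg)) + w_sun * sin(radians(sun_deg))
    c = w_compass * cos(radians(compass_deg)) + w_sun * cos(radians(sun_deg))
    return degrees(atan2(s, c)) % 360.0


if __name__ == "__main__":
    # Hypothetical reading: the sun is due south (azimuth 180) and appears
    # 30 degrees right of center, so the device faces 150 degrees.
    print(heading_from_sun(180.0, 30.0))  # prints 150.0
```

The circular mean matters because compass headings wrap around. Naively averaging 350 and 10 degrees gives 180 (due south), while the circular mean gives 0 (due north).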

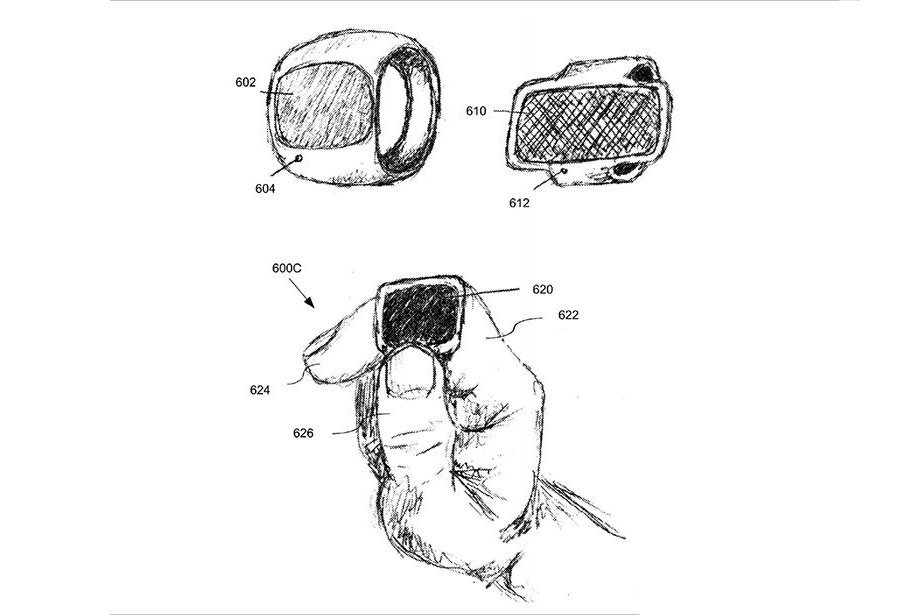

To enhance the user experience in AR and provide better ways to interact with the world and virtual elements, Apple has filed a patent describing additional gestures to supplement the pinch gesture.

Unlike the other applications discussed here, this one describes ways that multiple AR users could interact with both physical and generated 3D objects. For example, multiple Apple Vision Pro users, and even users with iPads or iPhones, could manipulate virtual items in an environment. Each would view an object from a different perspective, with the object appearing correctly oriented for them.

Wearing your controllers

Apple would likely combine these technologies with several other patents, including "Items with wire actuators" and "Fabric control device," as well as the rumored Apple ring.

An Apple ring would almost certainly interact with Apple Vision Pro, perhaps providing even more accurate tracking and an additional control surface.

The first patent, which we covered when it was filed in 2020, would allow gloves or other devices to be integrated with sensors. Perhaps this patent was an early exploration from the days when Apple Vision Pro was being designed. Either way, it shows that even four years ago, Apple was on a path to non-traditional ways of controlling devices.

The second patent describes a technique for using fabric to cover electronic buttons. The obvious use of this patent would be a HomePod-like device with buttons on its fabric-covered surface. This could be combined with the glove patent to enable a more tactile user experience, and perhaps haptic feedback when using Apple's AR/MR tools.

We are only at the start of an immersive AR/MR world, currently led by Apple Vision Pro. While we are a long way from wide-scale adoption of XR experiences and the portable hardware to power them, we can already see the path Apple is taking to make XR part of our daily lives.

David Schloss
