Apple's Ferret LLM could help Siri understand the layout of apps on an iPhone display, potentially expanding the capabilities of Apple's digital assistant.

Apple has been working on numerous machine learning and AI projects that it could tease at WWDC 2024. A just-released paper suggests that some of that work could let Siri understand what apps and iOS itself look like.

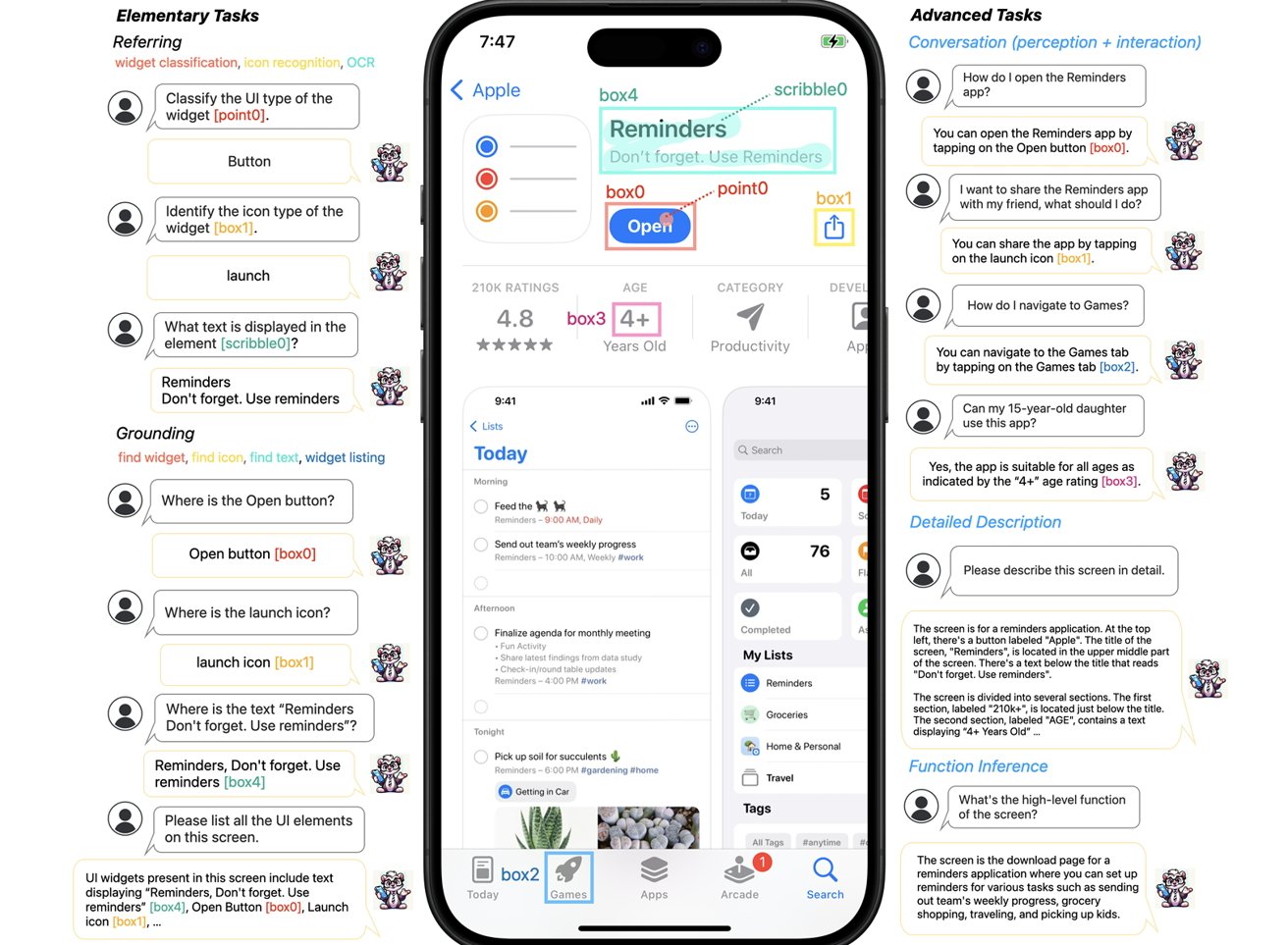

The paper, released by Cornell University on Monday, is titled "Ferret-UI: Grounded Mobile UI Understanding with Multimodal LLMs." It essentially explains a new multimodal large language model (MLLM) that has the potential to understand the user interfaces of mobile displays.

The Ferret name first appeared with an open-source multimodal LLM released in October by researchers from Cornell University working with counterparts from Apple. At the time, Ferret was able to detect and understand different regions of an image for complex queries, such as identifying a species of animal in a selected part of a photograph.

An LLM advancement

The new paper for Ferret-UI explains that, while there have been noteworthy advancements in MLLM usage, they still "fall short in their ability to comprehend and interact effectively with user interface (UI) screens." Ferret-UI is described as a new MLLM tailored for understanding mobile UI screens, complete with "referring, grounding, and reasoning capabilities."

Part of the problem LLMs have in understanding a mobile interface is how the display gets used in the first place. It is often held in portrait orientation, which means icons and other details can occupy a very compact part of the display, making them difficult for machines to understand.

To help with this, Ferret-UI has a magnification system that upscales images to "any resolution" to make icons and text more readable.

For processing and training, Ferret-UI also divides the screen into two smaller sections by cutting it in half. The paper states that other LLMs tend to scan a lower-resolution global image, which reduces their ability to adequately determine what icons look like.
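As a rough illustration of the screen-halving step, the sketch below computes crop boxes for the two halves of a screenshot. It is not Apple's implementation. The function name `split_screen` and the rule that the cut direction follows the screen's orientation (top and bottom for portrait, left and right for landscape) are assumptions made for the example; the article only says the screen is cut in half.

```python
from typing import List, Tuple

# A crop box as (left, top, right, bottom) pixel coordinates.
Box = Tuple[int, int, int, int]


def split_screen(width: int, height: int) -> List[Box]:
    """Return crop boxes for the two halves of a screenshot.

    Hypothetical helper: the cut direction is chosen from the
    orientation, which is an assumption of this sketch.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if height >= width:
        # Portrait (or square): cut across the middle, top and bottom.
        mid = height // 2
        return [(0, 0, width, mid), (0, mid, width, height)]
    # Landscape: cut down the middle, left and right.
    mid = width // 2
    return [(0, 0, mid, height), (mid, 0, width, height)]


if __name__ == "__main__":
    # Example dimensions only; any screenshot size works.
    for box in split_screen(1170, 2532):
        print(box)
```

Each half covers the same number of pixels as before but a smaller area of the screen, so small icons and text take up a larger share of whatever the model sees. The boxes use the same left, upper, right, lower order that common imaging libraries accept for cropping.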

Combined with significant curation of training data, the result is a model that can sufficiently understand user queries, understand the nature of various on-screen elements, and offer contextual responses.

For example, a user could ask how to open the Reminders app and be told to tap the on-screen Open button. A further query asking whether a 15-year-old could use an app could prompt the model to check the age guidelines, if they're visible on the display.

An assistive assistant

While we don't know whether it will be incorporated into systems like Siri, Ferret-UI offers the possibility of advanced control over a device like an iPhone. By understanding user interface elements, it offers the possibility of Siri performing actions for users in apps, by selecting graphical elements within the app on its own.

There are also useful applications for the visually impaired. Such an LLM could be more capable of explaining what is on screen in detail, and could potentially carry out actions for the user without them needing to do anything but ask.

Malcolm Owen

9 Comments

This technology could be a great boon to the app review process and, in turn, our security and satisfaction with 3rd party apps.

This starts to get at where I think Apple's machine learning/artificial intelligence and Siri are going.

Despite it being front-and-center in the public consciousness for the past year, AI as currently implemented is a hot mess of questionable utility, privacy and security, and based on petabytes of stolen data and intellectual property. While everyone touts how cool their AI is and the peanut gallery throws shade at Apple for being late to the party, moving in "late" to supplant technological hot messes with something well thought out and useful is actually Apple's sweet spot. The Ferret LLM described above could result in users being able to make voice commands that require complex interactions with apps on their device to yield a desired result. If Siri is able to interface with and read from on-device apps, this does two important things. First, it eliminates a requirement for special code within the apps to allow for things like the current Shortcuts app to drive certain tasks... if the user can figure out how to make Shortcuts do it properly. Second, it allows the digital assistant to carry out functions and draw information from sources for which the user already has legitimate permission to access.

Legally and functionally it would be very much like handing a human PA your iPhone and then asking him or her to use it to carry out various tasks on your behalf.

Imagine waking in the morning and asking Siri to implement various smart home functions based on current conditions like the weather and what you've got on your schedule for the day. Then imagine asking Siri what the morning news is, and it pulls information from your Apple News subscription, along with other news sources to which you have subscribed or otherwise have access, and verbally gives you a news summary, citing each source. Then you ask Siri to bookmark a few of the source articles so you can read them during breakfast. You could ask Siri to order lunch, or flowers, or an Uber, and it simply interfaces with on-device apps and accounts on your behalf. Apple could implement this sort of thing without running roughshod over copyrights, and without selling out the user's privacy and security.

This could be how Apple once again enters a space seemingly late, but then implements its vision of that thing so well that others must regroup and scramble to catch up.

So Apple's decades-long efforts and incremental improvements to accessibility will pay big dividends for AI as it helps the ML learn how the app works.

It makes you wonder if that might have been a reason for pushing hard in that space all along. You know, not just to be nice or sell lots of hardware.