Apple AI research reveals a model that will make giving commands to Siri faster and more efficient by converting any given context into text, which is easier for a large language model to parse.

Artificial Intelligence research at Apple keeps being published as the company approaches a public launch of its AI initiatives in June during WWDC. There has been a variety of research published so far, including an image animation tool.

The latest paper was first shared by VentureBeat. It details a system called ReALM, short for Reference Resolution As Language Modeling.

Working out what a user means by vague language inputs, such as "this" or "that," so that a program can act on them is called reference resolution. It's a complex problem since computers can't interpret images the way humans can, but Apple may have found a streamlined solution using LLMs.

When speaking to smart assistants like Siri, users might refer to many kinds of contextual information, such as background tasks, on-display data, and other non-conversational entities. Traditional parsing methods rely on incredibly large models and reference materials like images, but Apple has streamlined the approach by converting everything to text.
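As a rough illustration of what "converting everything to text" could look like, the Python sketch below flattens a set of on-screen elements into a tagged text block that an LLM could read. The `ScreenEntity` type, the `[id]` tags, the tab and newline layout, and the `line_tolerance` value are all assumptions made for this example, not details taken from Apple's paper.

```python
from dataclasses import dataclass


@dataclass
class ScreenEntity:
    """Hypothetical on-screen element: its text and the top-left corner of its box."""
    entity_id: int
    text: str
    x: float
    y: float


def screen_to_text(entities, line_tolerance=10.0):
    """Render entities as plain text, reading top to bottom, then left to right.

    Entities whose vertical position is within `line_tolerance` of the first
    entity on the current line are treated as sharing that line.
    """
    ordered = sorted(entities, key=lambda e: (e.y, e.x))
    lines = []
    current = []
    current_y = None
    for entity in ordered:
        # Start a new text line when this entity sits clearly lower on screen.
        if current_y is not None and abs(entity.y - current_y) > line_tolerance:
            lines.append(current)
            current = []
            current_y = None
        if current_y is None:
            current_y = entity.y
        current.append(entity)
    if current:
        lines.append(current)

    # Tabs separate entities on one line; newlines separate lines.
    return "\n".join(
        "\t".join(f"[{e.entity_id}] {e.text}" for e in sorted(line, key=lambda e: e.x))
        for line in lines
    )


if __name__ == "__main__":
    page = [
        ScreenEntity(1, "Joe's Pizza", x=10, y=0),
        ScreenEntity(2, "Open until 9 PM", x=200, y=4),
        ScreenEntity(3, "555-0142", x=10, y=40),
    ]
    print(screen_to_text(page))
```

The numeric tags give the model something concrete to point at, so its answer can be checked against the list of entities that were actually on screen.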

Apple found that its smallest ReALM models performed similarly to GPT-4 with far fewer parameters, making them better suited for on-device use. Increasing the number of parameters in ReALM made it substantially outperform GPT-4.

One reason for this performance boost is GPT-4's reliance on image parsing to understand on-screen information. Much of the image training data is built on natural imagery, not artificial code-based web pages filled with text, so direct OCR is less efficient.

Converting an image into text means ReALM doesn't need these advanced image recognition parameters, making it smaller and more efficient. Apple also avoids issues with hallucination by including the ability to constrain decoding or use simple post-processing.
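The "simple post-processing" mentioned above might be as small as discarding any answer that doesn't name a real on-screen entity. The sketch below assumes the model replies with numeric entity IDs somewhere in free text. The function name and that reply format are hypothetical and are not Apple's implementation.

```python
import re


def filter_entity_ids(model_output, valid_ids):
    """Return the IDs in `model_output` that belong to `valid_ids`.

    Order of first appearance is kept and duplicates are dropped, so an ID the
    model made up never reaches the code that acts on the answer.
    """
    kept = []
    for token in re.findall(r"\d+", model_output):
        candidate = int(token)
        if candidate in valid_ids and candidate not in kept:
            kept.append(candidate)
    return kept


if __name__ == "__main__":
    on_screen = {1, 2, 3}
    # Entity 7 does not exist on screen, so it is dropped.
    print(filter_entity_ids("3, 7", on_screen))
```

Constrained decoding takes the stricter route of preventing invalid IDs from being generated at all, while a filter like this one cleans up after the fact.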

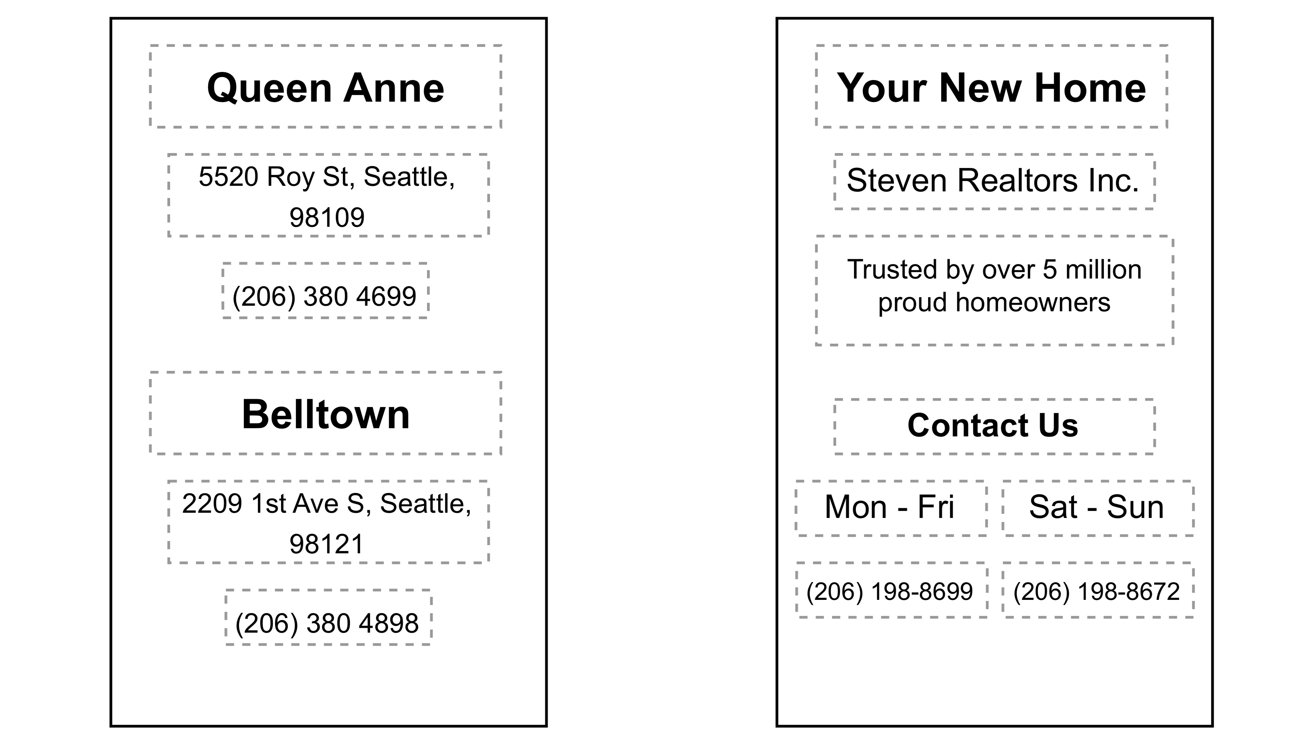

For example, if you're scrolling a website and decide you'd like to call the business, simply saying "call the business" requires Siri to parse what you mean given the context. It would be able to "see" that there's a phone number on the page that is labeled as the business number and call it without further user prompt.

Apple is working to release a comprehensive AI strategy during WWDC 2024. Some rumors suggest the company will rely on smaller on-device models that preserve privacy and security, while licensing other companies' LLMs for the more controversial off-device processing filled with ethical conundrums.

Wesley Hilliard

7 Comments

Makes sense since text is already being extracted from images displayed on-screen.

Meanwhile, GPT5 is COOKing Apple.

Honestly.. When does Apple launch it then? After several years?

After several years, their model will be outdated.

I don't believe it until it gets on the device. Otherwise, it does not reveal, but does claim that it is better than GPT-4.