Researchers at Apple have created Keyframer, a test generative AI app that lets users describe an image and how they want it to animate.

Not long ago, Apple was being described as lagging behind the rest of the technology industry in its adoption of AI. That was always nonsense, because Apple's machine learning has been key to iOS for years, and more recently researchers at the company have published academic papers, including one on AI avatars.

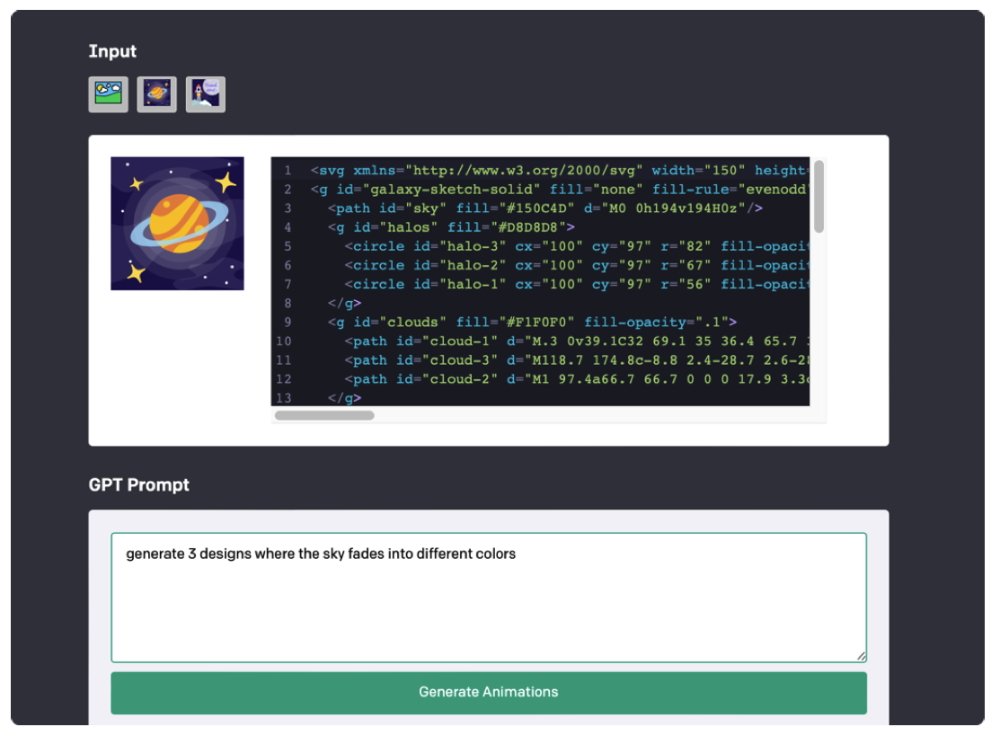

Now another research paper has been published, and this time a trio of Apple researchers have been investigating and testing an app for "empowering animation design using Large Language Models." Called Keyframer, the AI app lets users describe an animation, and it then generates CSS animation code for websites.

Keyframer has not been released publicly, and its testing appears to have been quite limited. The three researchers, Tiffany Tseng, Ruijia Cheng, and Jeffrey Nichols, write that their study was based chiefly on 13 participants.

Those participants began by writing a plain-English description of what image they wanted. So far this is how Adobe Firefly AI works, too.

However, with Firefly and similar existing apps, once an image has been generated, the user can only adjust or enhance it with the app's manual controls. Keyframer was instead designed to let users iterate through designs by continuing to describe what they need, or what they want removed.

Specifically, the paper describes previous attempts at generative AI image work as "one-shot prompting interfaces." In comparison, Keyframer was built so that a user could continue prompting multiple times on the same image.
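
To make that distinction concrete, here is a minimal Python sketch of the two interaction styles. It is not Keyframer's code, which has not been released: `fake_model`, `one_shot`, and `IterativeSession` are hypothetical names invented for illustration, and the stand-in model simply echoes the requests it can see rather than calling a real LLM.

```python
from typing import Callable, Dict, List

# Hypothetical message shape: {"role": "user" or "assistant", "content": text}
Message = Dict[str, str]
Model = Callable[[List[Message]], str]


def fake_model(history: List[Message]) -> str:
    """Stand-in for an LLM (assumption): returns a CSS comment listing
    every user request it was shown, oldest first."""
    requests = [m["content"] for m in history if m["role"] == "user"]
    return "/* " + "; ".join(requests) + " */"


def one_shot(model: Model, text: str) -> str:
    """One-shot prompting: the model sees only the current request."""
    return model([{"role": "user", "content": text}])


class IterativeSession:
    """Iterative prompting: each new request is sent together with all
    earlier requests and replies, so the model can refine prior output."""

    def __init__(self, model: Model) -> None:
        self.model = model
        self.history: List[Message] = []

    def prompt(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        reply = self.model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


session = IterativeSession(fake_model)
first = session.prompt("make the clouds drift left")
second = session.prompt("slow it down")
alone = one_shot(fake_model, "slow it down")
```

In the iterative session, the second request is sent along with the first, so the stand-in model can act on both. The one-shot call sees only the latest request and has no earlier design to refine.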

"This is just so magical because I have no hope of doing such animations manually...," one novice participant said after using Keyframer. "I would find [it] very difficult to even know where to start with getting it to do this without this tool."

"Part of me is kind of worried about these tools replacing jobs, because the potential is so high," a professional animator told the researchers. "But I think learning about them and using them as an animator — it's just another tool in our toolbox."

"It's only going to improve our skills," the animator continued. "It's really exciting stuff."

While the research paper, a 31-page, 16,000-word document, has been published, Keyframer itself has not been released and is solely an in-house testing app.

Its existence, however, backs up claims that Apple has been extensively testing generative AI. It's rumored that Apple will unveil significant AI-related improvements to the likes of iOS and Siri at WWDC 2024.

William Gallagher

17 Comments

I sure hope some form of this is released soon. I was searching the web for exactly this ability just last week. Generative AI is cool but the ability to iterate and animate would be huge.

Does Apple normally release several research papers at such an early stage in developing features?

This one hasn't really been tested yet, just 13 people have given it a try, but Apple is already announcing it? I've always seen them as keeping their internal development quiet and under-the-radar and competitors guessing, before announcing to the world what they're up to with a consumer-ready feature all but complete. Rather than these PR pieces intended to "delight the customer" it seems more likely this is meant to delight the market.