A new and mandatory terms of service approval for Adobe Creative Cloud requires users to agree to give the company free access to their projects, for whatever it wants to do with them. That's unacceptable.

Companies periodically make changes to the terms of service for their products. Usually, this is to enable new features or to reflect changes in legal standing, such as regulatory orders.

In many cases, users will click to agree to them, sleepwalking into agreement without reading a single word. That's probably not wise to do this time, if you're an artist and have Adobe Creative Cloud.

At first glance, the changes enable Adobe to fight CSAM images and similar objectionable content. But those same changes, along with other existing elements, open up some disturbing possibilities.

The changes grant Adobe the option to spy on a user's work, even works protected by confidentiality agreements. Or worse, they allow Adobe to suck up your art and roll it all into its generative AI tools, whether you like it or not.

Creative Spyware

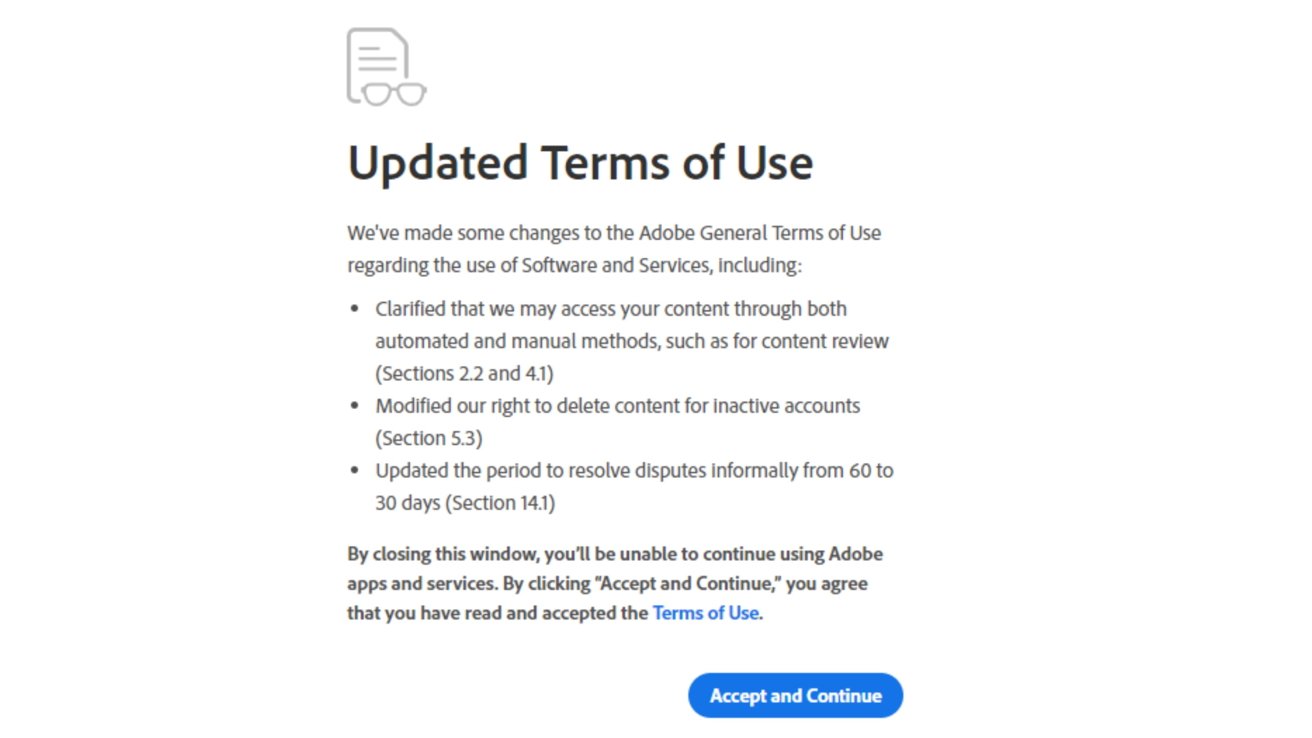

In a notice of updates in the Terms of Use to users of its creative tools, Adobe says it has made some alterations to four sections. The pop-up appears as users access the apps, forcing them to agree to the new rules.

It states Adobe has "clarified that we may access your content through both automated and manual methods, such as for content review." This affects sections 2.2 and 4.1 of the document.

It has also modified section 5.3, updating how it has the right to "delete content for inactive accounts." That is the most reasonable change.

Also, section 14.1 has been changed to reduce the period to resolve disputes informally from 60 days to just 30. This is sketchy too, but not particularly terrible.

The pop-up further tells users that, by closing the window, they cannot continue to use Adobe apps and services. A single blue button is offered, to "Accept and Continue" using the software and agreeing to the conditions.

Users must either agree to the new terms to keep using the apps they handsomely pay Adobe for, or be frozen out. Oh, and they still pay, unless they cancel the service, which can come with financial penalties.

Here's the worst part, though: the update to section 2.2, "Our Access to Your Content," includes verbiage that Adobe "may access, view, or listen to your Content through both automated and manual methods, but only in limited ways, and only as permitted by law."

As examples, Adobe says it could need to access a user's content to "respond to Feedback or support requests." It may also do so to "detect, prevent, or otherwise address fraud, security, legal, or technical issues."

Adobe says it can also check content to enforce its terms of use.

The "Content," outlined in section 4.1, includes "any text, information, communication, or material, such as audio files, video files, electronic documents, or images, that you upload, import into, embed for use by, or create using the Services and Software."

The wording doesn't completely rule out the monitoring of locally-stored files used in the applications. It certainly does cover anything that is stored in its cloud infrastructure.

As part of section 4.1, Adobe says it may use automated processes, manual review, and third-party vendors to review content.

Further adding to complainant woes is section 4.2, "Licenses to Your Content." This section wasn't changed, but the proximity to the updated section 4.1 brought more attention to it.

Section 4.2 states that users grant a "non-exclusive, worldwide, royalty-free sublicensable, license, to use, reproduce, publicly display, distribute, modify, create derivative works based on, publicly perform, and translate the Content."

Adobe offers as an example that it could sublicense the right to its service providers so that features can operate. This is somewhat consistent with other online services, such as social networks, which offer a similar justification for licensing.

AI and privacy concerns

The changes, as well as the increased focus on the licensing terms, are problematic. For a start, if a subscriber is under a non-disclosure agreement for their workplace or for clients, the Terms of Use give Adobe the ability to look at the content.

Effectively, this breaks the NDA that the user agreed to.

There's also the problem of AI, as Adobe does offer Firefly and other AI-based tools to users. These have to be trained somehow.

The fear is that Adobe will use the language of the updated terms to justify the potential use of a user's projects and documents to feed its AI training. There is no language to prevent such a thing from happening within the Terms of Use.

There is a separate list of additional terms for Generative AI, though this doesn't include any language specifically mentioning how its AI is trained.

Ultimately, the language permits Adobe to reuse a user's NDA-protected content for training its AI systems. This is in no way a good thing for creatives to agree to at all.

Companies working on generative AI systems have been criticized for scraping content without permission, with most of them saying that it's too hard to properly license, and they shouldn't be held accountable for doing so. This led to complaints and lawsuits where data owners objected to the use of their data.

With the prospect of generative AI being able to create images based on different styles and artists' works, it quickly becomes a copyright nightmare that won't be resolved without judicial assistance.

Apple at least has decided to steer clear of the mess, by reportedly paying content holders for access. But that approach isn't being adopted elsewhere.

Critical responses include a scathing attack by designer Wetterschneider, who has worked with DC, Nike, and Ravensburger.

"If you are a professional, if you are under NDA with your clients, if you are a creative, a lawyer, a doctor or anyone who works with proprietary files - it is time to cancel Adobe, delete all the apps and programs. Adobe can not be trusted," the designer said.

Sam Santala, a concept artist, wasn't able to contact support staff to question the changes in the terms, unless they agreed to the terms first. "I can't even uninstall Photoshop unless I agree to these terms??" they added.

Not giving users the option to easily opt out of submitting work for AI training is a very bad move, especially in the current climate. For a small section of the creative community, Adobe has shot itself in the foot.

The fact that it's impossible to uninstall the apps without agreeing to the terms of service beforehand is baffling. Couple that with the lack of an automatic service cancellation if you click "disagree," and it's an unacceptable combination.

The uproar is not good news for Adobe. It needs to be addressed by the company now, and loudly. The silence since this got pointed out is deafening.

For the moment, disgruntled creatives have the option of voting with their wallets. That's assuming they can navigate the cancellation process properly, and without extra fees.

Update June 7, 8:10 AM ET: Adobe has issued a statement that addresses some of the user concerns.

Mike Wuerthele and Malcolm Owen


43 Comments

Is this the reason Kyle T. Webster left the company suddenly a few days ago?

Well, now I cancel my overpriced subscription and make sure any and all work is gone from them—though they’ve probably already got it. Those are insane terms and despicable.

It's not yet clear if they are taking our content and using it for their own AI training purposes or just poorly explaining that they need the permissions to do what users request of their AI. Since there is no "Opt-Out," as I presume the EU would require if they were accessing your private data, I suspect it's the latter.

Adobe needs to explain things far better, and soon.

Now if you were talking about Meta instead, yeah they really are claiming ownership of your Facebook interactions for training their LLM models.