Apple Intelligence will be used for image generation in iOS 18, and pictures created with Image Playground will be marked. Here's how it's going to work, and what the limitations of the labels are.

Known as Generative Playground during its development period, Image Playground can create images using Apple Intelligence — Apple's in-house, on-device generative AI software.

With Image Playground, users will be able to create unique AI-generated images across different Apple applications such as Freeform, Messages, and Keynote. This will all be possible once Apple Intelligence becomes available later in 2024.

As AI-powered image generation improves, it will become increasingly difficult to identify images made with artificial intelligence. Apple has a plan to address this: its software will label AI-generated pictures and block the creation of photorealistic, adult, and copyrighted content.

Apple will mark AI-generated images through EXIF data

AppleInsider has learned that the company plans to label AI-generated imagery through image metadata, specifically EXIF data.

This means that AI-generated images created through Image Playground would be clearly marked through that metadata. The image source, which typically displays the name and brand of the camera used to take a picture, will display "Apple Image Playground," serving as a clear indication that the image was created by AI.

The company is not going as far as steganography, though. At present, there do not appear to be any plans to embed attribution to Image Playground in the image data itself.

Image metadata can also be easily removed or altered with many publicly available websites and image-editing tools, and a screenshot of an image does not preserve its metadata.
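To illustrate why a metadata label is both easy to check and easy to lose, here is a minimal standard-library Python sketch that reads a few text fields from a JPEG's EXIF block and then strips that block. It is not Apple's tooling. The article does not say which EXIF tag holds the "Apple Image Playground" string, so the sketch assumes it appears in one of the IFD0 Make, Model, or Software fields. The function names, including the `build_sample_jpeg` fixture, are hypothetical, and the parser only handles simple IFD0 ASCII entries.

```python
import struct
from typing import Dict, Optional

ASCII_TYPE = 2  # TIFF field type for NUL-terminated ASCII strings
# IFD0 tags where a "source" label might plausibly appear (an assumption).
TAG_NAMES = {0x010F: "Make", 0x0110: "Model", 0x0131: "Software"}


def _segments(jpeg: bytes):
    """Yield (marker, start, end) for each segment before start-of-scan (0xDA)."""
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(jpeg) and jpeg[pos] == 0xFF and jpeg[pos + 1] != 0xDA:
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])  # includes its own 2 bytes
        yield jpeg[pos + 1], pos, pos + 2 + length
        pos += 2 + length


def _exif_tiff_block(jpeg: bytes) -> Optional[bytes]:
    """Return the TIFF structure inside the first EXIF APP1 segment, if any."""
    for marker, start, end in _segments(jpeg):
        payload = jpeg[start + 4:end]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return payload[6:]
    return None


def read_source_fields(jpeg: bytes) -> Dict[str, str]:
    """Read the Make/Model/Software strings from IFD0 (hypothetical helper)."""
    tiff = _exif_tiff_block(jpeg)
    if tiff is None:
        return {}
    endian = "<" if tiff[:2] == b"II" else ">"  # "II" = little-endian, "MM" = big-endian
    (ifd_offset,) = struct.unpack(endian + "I", tiff[4:8])
    (count,) = struct.unpack(endian + "H", tiff[ifd_offset:ifd_offset + 2])
    fields = {}
    for i in range(count):
        entry = ifd_offset + 2 + 12 * i  # each IFD entry is 12 bytes
        tag, field_type, n = struct.unpack(endian + "HHI", tiff[entry:entry + 8])
        if tag not in TAG_NAMES or field_type != ASCII_TYPE:
            continue
        if n <= 4:  # short strings are stored inline in the value slot
            raw = tiff[entry + 8:entry + 8 + n]
        else:  # longer strings live at an offset from the TIFF header
            (value_offset,) = struct.unpack(endian + "I", tiff[entry + 8:entry + 12])
            raw = tiff[value_offset:value_offset + n]
        fields[TAG_NAMES[tag]] = raw.rstrip(b"\x00").decode("ascii", "replace")
    return fields


def looks_like_image_playground(jpeg: bytes) -> bool:
    """True if any assumed source field mentions Image Playground."""
    return any("Image Playground" in v for v in read_source_fields(jpeg).values())


def strip_exif(jpeg: bytes) -> bytes:
    """Drop every APP1 segment (EXIF and XMP), keeping the rest of the file."""
    out = bytearray(jpeg[:2])
    rest = 2
    for marker, start, end in _segments(jpeg):
        if marker != 0xE1:
            out += jpeg[start:end]
        rest = end
    out += jpeg[rest:]
    return bytes(out)


def build_sample_jpeg(make: str) -> bytes:
    """Hypothetical fixture: SOI + one EXIF APP1 + empty SOS + EOI (not a viewable image)."""
    value = make.encode("ascii") + b"\x00"
    value_offset = 8 + 2 + 12 + 4  # header, entry count, one entry, next-IFD pointer
    tiff = b"MM" + struct.pack(">HI", 42, 8)
    tiff += struct.pack(">H", 1)
    tiff += struct.pack(">HHII", 0x010F, ASCII_TYPE, len(value), value_offset)
    tiff += struct.pack(">I", 0) + value
    payload = b"Exif\x00\x00" + tiff
    app1 = b"\xff\xe1" + struct.pack(">H", len(payload) + 2) + payload
    return b"\xff\xd8" + app1 + b"\xff\xda" + struct.pack(">H", 2) + b"\xff\xd9"
```

Running `looks_like_image_playground` on a labeled file returns `True`, but the same check on `strip_exif(...)` of that file returns `False`, even though the picture data is untouched. That is the limitation described above: the label only survives as long as every tool in the chain preserves the metadata.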

Apple did not rely on EXIF labeling alone: the company also made sure its software could only generate images in specific, non-realistic styles.

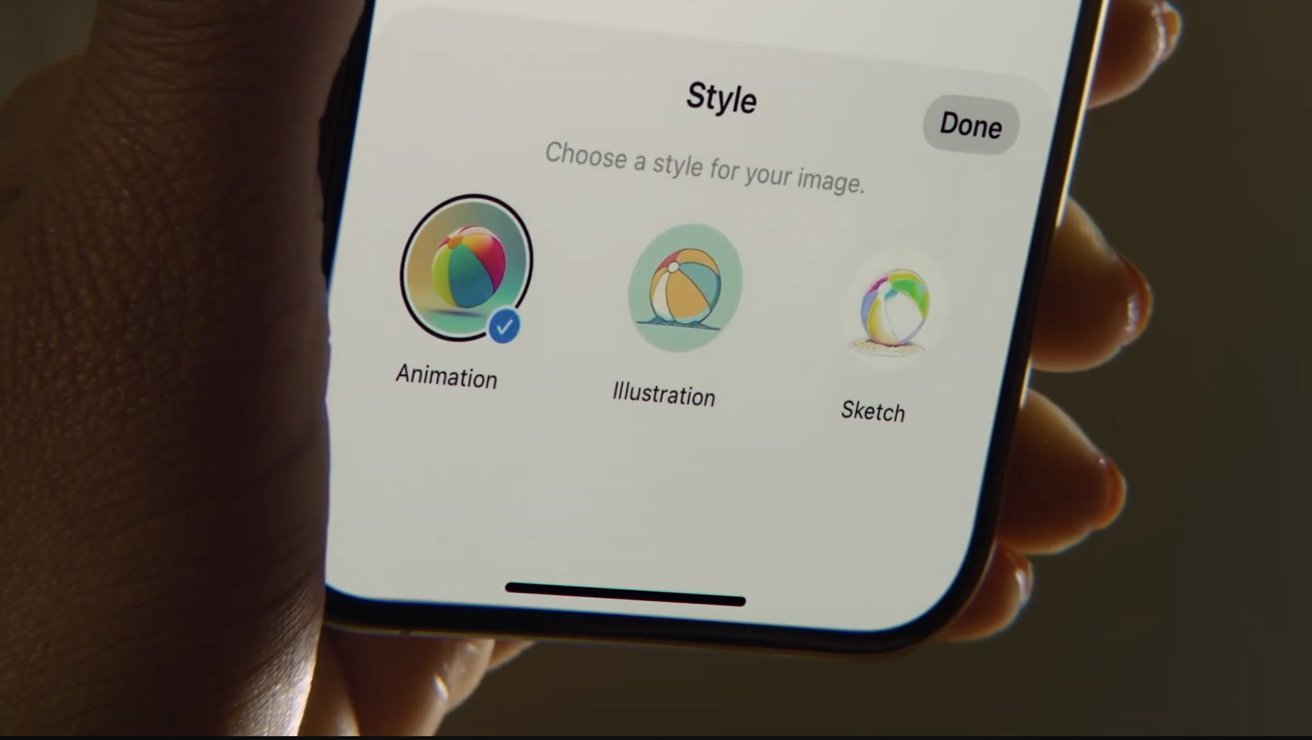

We've also learned that Apple's software will effectively prevent users from generating photorealistic imagery. Instead, users will be able to generate images in the following three Apple-approved styles within Image Playground: Animation, Illustration, and Sketch.

None of these image styles is likely to be mistaken for a photograph of a real-world object, person, or place. The Animation style creates images that vaguely resemble stills from 3D animated films, while the Illustration and Sketch styles produce images that are even more obviously two-dimensional.

Apple worked with digital artists and asked them to create images in the three aforementioned styles. These images were then used to train the company's generative AI software, so that it could generate images with a similar look.

Image Playground will prevent the creation of copyrighted, photorealistic, and adult content through multiple checks

Speaking to people familiar with the matter, AppleInsider has learned the details of the reference material Apple used for Image Playground.

For the Animation image style, the company used an image of a three-dimensional yellow chick with cartoon-style eyes, resembling a character from a Pixar or DreamWorks animated film.

For Image Playground's Sketch style, Apple used an unmistakable drawing of a pink and orange flower. To train its AI software for the Illustration style, the company used an image of a person in the fairly recognizable and somewhat abstract style known as Corporate Memphis.

Image Playground will give users the option to create AI-generated images in the following three styles: Animation, Illustration, Sketch

Through our independent research, AppleInsider has learned of a fourth image style, known as Line Art, which Apple abandoned at some point during the development of Image Playground. To date, there has been no mention of this image style, and it didn't make it into the first developer betas of Apple's latest operating systems.

By creating these different image styles and commissioning reference material, Apple wanted to train its AI to create specific types of images that were not photorealistic and thus could not be confused with a real-life image of a person, object, or place. Through integration with the user's photo library, Image Playground will also be able to create obviously AI-generated images of people the user knows.

During WWDC, we also learned that Apple has apparently built "multiple checks" to prevent the generation of copyrighted and adult material. There will also be a feedback option that lets users report copyrighted or adult content inadvertently created within the app.

These copyright and adult content checks, along with the metadata labeling, appear to have been added late in Image Playground's development, according to people familiar with the matter. We were told that early development versions of Image Playground did not appear to have these safeguards in place, as they were not meant for outside use.

In essence, Apple went to great lengths to prevent the creation of photorealistic, adult, and copyrighted content. The company wants its AI-generated imagery to be easily identifiable, which is why such images carry metadata that anyone can check.

Marko Zivkovic

Malcolm Owen

William Gallagher

Charles Martin

Amber Neely

Andrew Orr

Christine McKee

1 Comment

Keeping stupid "adult" (porno) imagery away from our eyes is always a good thing, but there are times when a photorealistic image of say a building or landscape would be a very nice thing to have. I'd even love to have the freedom to tell it to modify artworks in a photorealistic way, like say, "recreate the Mona Lisa wearing a ladies hat." (It's just an example.) Sure there's all that silly copyright mess when it comes to more modern artworks or copyrighted items, but I think you all know what I mean.

So hopefully this is just a small start, and later we can get some more photorealistic features. I think it can be done without offending everyone, which is admittedly hard these days when most people are offended by the least little thing.