A child protection organization says it has found more cases of abuse images on Apple platforms in the UK than Apple has reported globally.

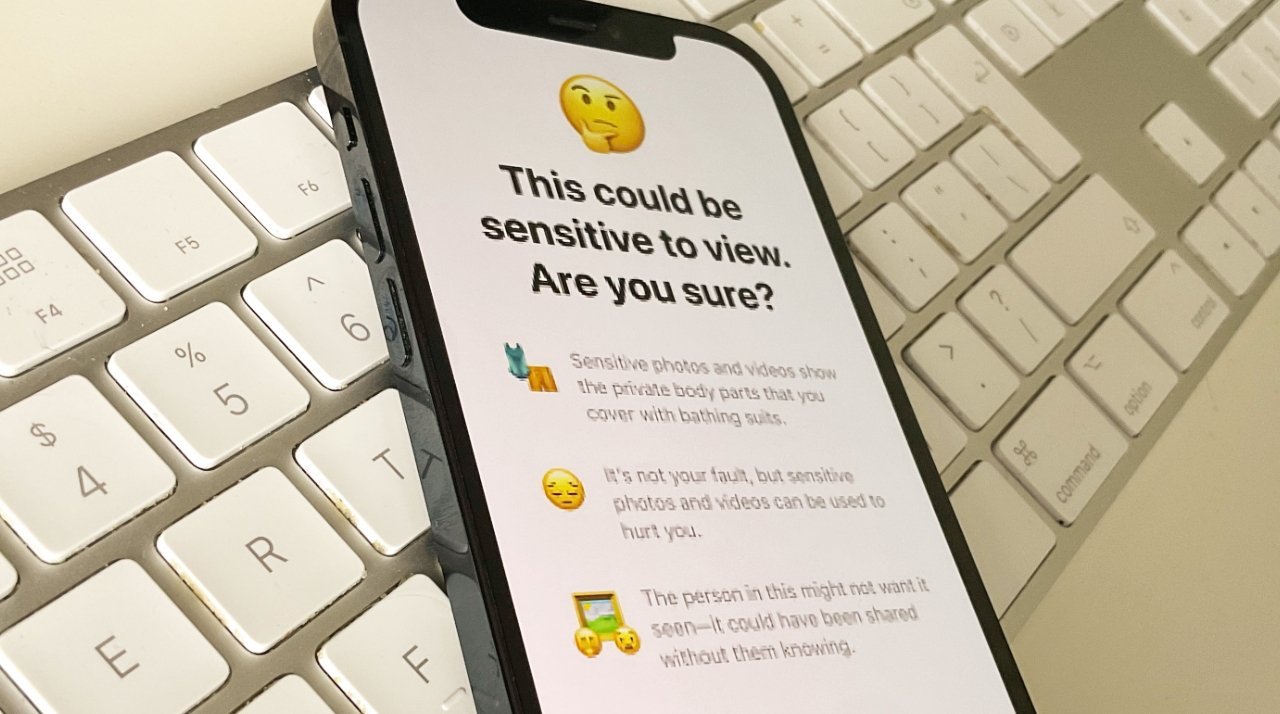

In 2022, Apple abandoned its plans for Child Sexual Abuse Material (CSAM) detection, following allegations that it would ultimately be used for surveillance of all users. The company instead turned to a set of features it calls Communication Safety, which blurs nude photos sent to children.

According to The Guardian newspaper, the UK's National Society for the Prevention of Cruelty to Children (NSPCC) says Apple is vastly undercounting incidents of CSAM in services such as iCloud, FaceTime and iMessage. All US technology firms are required to report detected cases of CSAM to the National Center for Missing & Exploited Children (NCMEC), and in 2023, Apple made 267 reports.

Those 267 reports were meant to cover CSAM detected worldwide. Yet the NSPCC has independently found that Apple was implicated in 337 offenses between April 2022 and March 2023, in England and Wales alone.

"There is a concerning discrepancy between the number of UK child abuse image crimes taking place on Apple's services and the almost negligible number of global reports of abuse content they make to authorities," said Richard Collard, head of child safety online policy at the NSPCC. "Apple is clearly behind many of their peers in tackling child sexual abuse when all tech firms should be investing in safety and preparing for the roll out of the Online Safety Act in the UK."

By comparison, Google reported 1,470,958 cases in 2023. For the same period, Meta reported 17,838,422 cases on Facebook and 11,430,007 on Instagram.

Apple cannot see the contents of users' iMessages, because the service is encrypted. However, NCMEC notes that Meta's WhatsApp is also encrypted, yet Meta reported 1,389,618 suspected CSAM cases from WhatsApp in 2023.

In response to the allegations, Apple reportedly referred The Guardian only to its previous statements about overall user privacy.

Reportedly, some child abuse experts are also concerned about AI-generated CSAM. Apple's forthcoming Apple Intelligence features will not create photorealistic images.

William Gallagher

12 Comments

More pressure to take the privacy poison pill. Stand firm, Apple.

This is chalk and cheese. Facebook and Google both have social media platforms hosting user content for consumption by others. Apple does not. Where is the recognition of that rather significant difference in the "findings"?

There are bad people out there.

That is not an excuse to go full draconian and spy on the law-abiding good people.

No reporting system is perfect. There are likely more cases than reported, just like with other evil things.

But don't try to turn that into a movement to treat the good guys like criminals, losing our freedoms, privacy, and dignity in the process.