AI is taking the world by storm, and while you could use Google Bard or ChatGPT, you can also run a chatbot locally on your Mac. Here's how to use the new MLC LLM chat app.

Artificial Intelligence (AI) is the new cutting-edge frontier of computer science and is generating quite a bit of hype in the computing world.

Chatbots, AI-based apps that users can converse with as if they were domain experts, are growing in popularity, to say the least.

Chatbots have seemingly expert knowledge on a wide variety of general and specialized subjects and are being deployed everywhere at a rapid pace. One group, OpenAI, released ChatGPT a few months ago to a shocked world.

ChatGPT has seemingly limitless knowledge on virtually any subject and can answer questions in real-time that would otherwise take hours or days of research. Both companies and workers have realized AI can be used to speed up work by reducing research time.

The downside

Given all that, however, there is a downside to some AI apps. The primary drawback to AI is that its results must still be verified.

While usually correct, AI can provide erroneous or misleading data, which can lead to false conclusions or results.

Software developers and software companies have taken to "copilots": specialized chatbots that help developers write code by automatically generating the outline of functions or methods, which a developer can then verify.

While a great timesaver, copilots can also write incorrect code. Microsoft, Amazon, GitHub, and NVIDIA have all released copilots for developers.

Getting started with chatbots

To understand, at least at a high level, how chatbots work, you must first understand AI basics, in particular Machine Learning (ML) and Large Language Models (LLMs).

Machine Learning is a branch of computer science dedicated to researching and developing ways to teach computers to learn from data.

An LLM is essentially a natural language processing (NLP) program that uses huge sets of data and neural networks (NNs) to generate text. LLMs are built by training a neural network on large datasets, from which it "learns" over time, essentially becoming a domain expert in a particular field. How expert it becomes depends on the accuracy of the input data.

The more input data there is, and the more accurate it is, the more precise and correct a chatbot that uses the resulting model will be. LLMs also rely on Deep Learning while being trained on that data.

When you ask a chatbot a question, it passes your question to its LLM, which generates the most likely answer one word (or token) at a time, based on the patterns it learned about all subjects related to your question.

Essentially, chatbots have precomputed knowledge of a topic and, given an accurate enough LLM and sufficient training time, can provide correct answers far faster than most people can.
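To make "learn from data, then generate text" more concrete, here is a drastically simplified Python sketch. It is not how real LLMs work: instead of a neural network with billions of parameters, it only counts which word follows which in a tiny training text (a bigram model). It does, however, show the same basic loop: learn statistics from data, then build an answer one word at a time by picking the most likely next word. The function names and the sample corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """'Training': count, for every word, which words follow it in the text."""
    words = text.lower().split()
    model: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def generate(model: dict[str, Counter], start: str, max_words: int = 8) -> str:
    """'Generation': greedily append the most frequent next word, one at a time."""
    output = [start]
    for _ in range(max_words - 1):
        candidates = model.get(output[-1])
        if not candidates:
            break  # the model never saw any word after this one
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


corpus = "the chatbot answers the question and the chatbot learns from data"
model = train_bigrams(corpus)
print(generate(model, "the", max_words=4))  # prints "the chatbot answers the"
```

The toy model can only repeat patterns that appear in its training text, which is a small-scale version of why the quality of an LLM's training data matters so much.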

Using a chatbot is like having an automated team of PhDs at your disposal instantly.

In February 2023, Google announced its own AI chatbot, Bard, which is based on its own LLM, LaMDA. Later that month, Meta AI released its own LLM, called LLaMA. Other chatbots have since followed.

Generative AI

More recently, generative AI models have learned how to create content beyond short text answers, such as graphics, music, and even entire books. Companies are interested in Generative AI to create things such as corporate graphics, logos, titles, and even digital movie scenes that replace actors.

For example, the thumbnail image for this article was generated by AI.

As a side effect of Generative AI, workers have become concerned about losing their jobs to automation driven by AI software.

Chatbot assistants

The world's first commercial user-available chatbot (BeBot) was released by Bespoke Japan for Tokyo Station City in 2019.

Released as an iOS and Android app, BeBot knows how to direct you to any point around the labyrinth-like station, help you store and retrieve your luggage, send you to an info desk, or find train times, ground transportation, or food and shops inside the station.

It can even tell you which train platform to head to for the fastest ride to any destination in the city, all in a few seconds.

The MLC chat app

The Machine Learning Compilation (MLC) project is the brainchild of Apache Foundation Deep Learning researcher Siyuan Feng, Hongyi Jin, and others based in Seattle and Shanghai, China.

The idea behind MLC is to deploy precompiled LLMs and chatbots to consumer devices and web browsers. MLC harnesses the power of consumer graphics processing units (GPUs) to accelerate AI results and searches, putting AI within reach of most modern consumer computing devices.

Another MLC project, Web LLM, brings the same functionality to web browsers. It relies on WebGPU, an emerging web standard that gives browsers access to the computer's GPU. Only machines with specific GPUs are supported in Web LLM, since it relies on code frameworks that support those GPUs.

Most AI assistants rely on a client-server model with servers doing most of the AI heavy lifting, but MLC bakes LLMs into local code that runs directly on the user's device, eliminating the need for LLM servers.

Setting up MLC

To run MLC on your device, it must meet the minimum requirements listed on the project and GitHub pages.

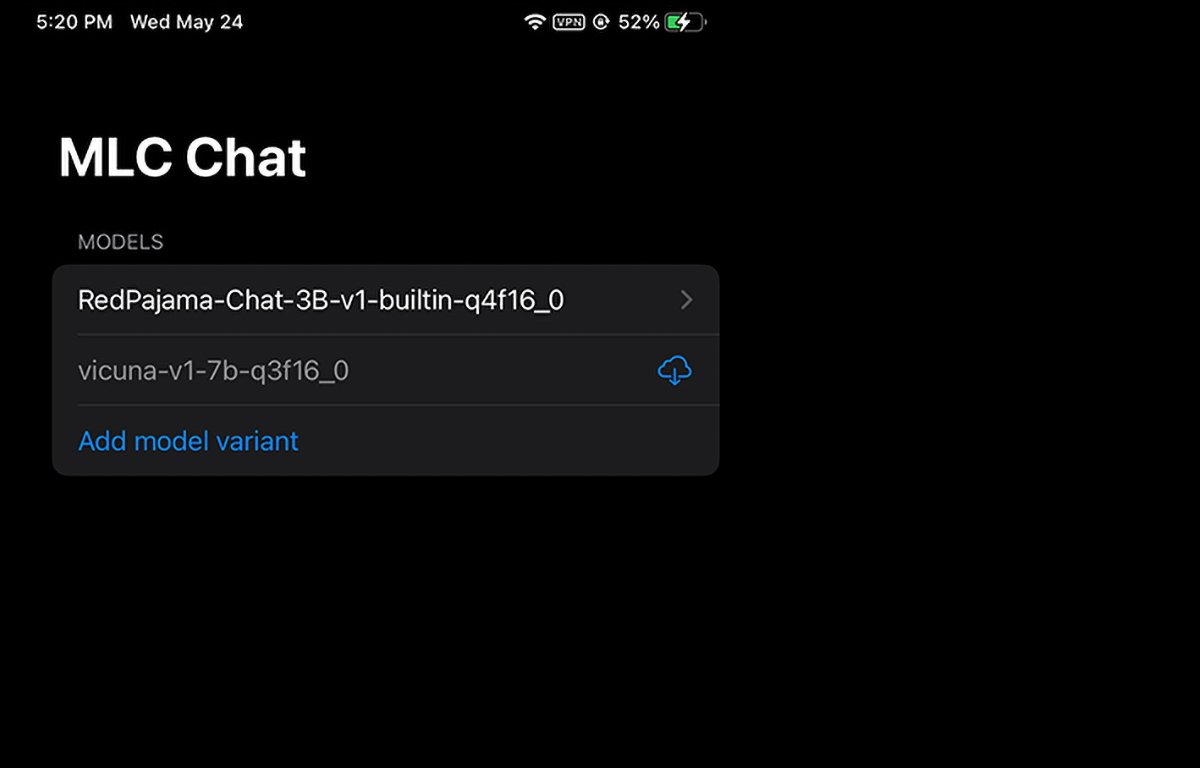

To run it on an iPhone, you'll need an iPhone 14 Pro Max, iPhone 14 Pro, or iPhone 12 Pro with at least 6GB of free RAM. You'll also need Apple's TestFlight app to install MLC, and installation is limited to the first 9,000 users.

We tried running MLC on a base 2021 iPad with 64GB of storage, but it wouldn't initialize. Your results may vary on iPad Pro.

You can also build MLC from source and run it on your phone directly by following the directions on the MLC-LLM GitHub page. You'll need the git source-code control system installed on your Mac to retrieve the sources.

To do so, make a new folder in Finder on your Mac, use the UNIX cd command to navigate to it in Terminal, then run the git clone command listed on the MLC-LLM GitHub page:

git clone https://github.com/mlc-ai/mlc-llm.git

Press Return, and git will download all the MLC sources into the folder you created.
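If you prefer to script the folder-and-clone steps above, a minimal Python sketch might look like the following. It assumes git is already installed and on your PATH; the function name and the `~/mlc` folder in the usage line are hypothetical. Passing `cwd` to `subprocess.run` takes the place of the `cd` step.

```python
import subprocess
from pathlib import Path

# Repository URL as listed on the MLC-LLM GitHub page
MLC_LLM_REPO = "https://github.com/mlc-ai/mlc-llm.git"


def clone_mlc_sources(folder: str, dry_run: bool = False) -> list[str]:
    """Clone the MLC-LLM sources into `folder`, like `cd folder` + `git clone`.

    With dry_run=True nothing is created or downloaded; the git command
    that would run is simply returned.
    """
    command = ["git", "clone", MLC_LLM_REPO]
    if not dry_run:
        target = Path(folder).expanduser()
        target.mkdir(parents=True, exist_ok=True)  # the "new folder" step
        # cwd=target stands in for `cd`; check=True raises if git fails
        subprocess.run(command, cwd=target, check=True)
    return command
```

Calling `clone_mlc_sources("~/mlc")` would create the folder if needed and download the sources into `~/mlc/mlc-llm`.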

Installing Mac prerequisites

For Mac and Linux computers, MLC is run from a command-line interface in Terminal. You'll need to install a few prerequisites first to use it:

- The Conda or Miniconda Package Manager

- Homebrew

- Vulkan graphics library (Linux or Windows only)

- Git Large File Storage (LFS)

For NVIDIA GPU users, the MLC instructions specifically state that you must manually install the Vulkan driver as the default driver. NVIDIA's other GPU computing platform, CUDA, won't work.
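A quick way to see which of the Mac prerequisites above are already installed is to look for their command-line tools on your PATH. The Python sketch below assumes the usual executable names (`conda`, `brew`, `git`, `git-lfs`) and skips Vulkan, since it only applies to Linux and Windows. Note that conda may not be found until your shell has been initialized for it.

```python
import shutil

# Mac prerequisites mapped to the executable each one normally installs
# (assumed names for a typical Homebrew/Miniconda setup)
PREREQUISITES = {
    "Conda or Miniconda": "conda",
    "Homebrew": "brew",
    "git": "git",
    "git LFS": "git-lfs",
}


def missing_prerequisites() -> list[str]:
    """Return the prerequisites whose executable isn't found on PATH."""
    return [name for name, exe in PREREQUISITES.items() if shutil.which(exe) is None]


if __name__ == "__main__":
    missing = missing_prerequisites()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All prerequisites found.")
```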

For Mac users, you can install Miniconda by using the Homebrew package manager, which we've covered previously. Note that Miniconda conflicts with another Homebrew Conda package, miniforge, so if you already have miniforge installed via Homebrew, you'll need to uninstall it first.

Following the directions on the MLC-LLM page, the remaining install steps are roughly:

- Create a new Conda environment

- Install git and git LFS

- Install the command-line chat app from Conda

- Create a new local folder, download LLM model weights, and set a LOCAL_ID variable

- Download the MLC libraries from GitHub

All of this is mentioned in detail on the instructions page, so we won't go into every aspect of setup here. It may seem daunting initially, but if you have basic macOS Terminal skills, it's really just a few straightforward steps.

The LOCAL_ID step just sets that variable to point to one of the three model weights you downloaded.
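In Terminal, LOCAL_ID is an ordinary shell variable. As an illustration of the idea only, the Python sketch below picks one downloaded model-weight folder and stores its name in `LOCAL_ID`. It assumes, hypothetically, that each set of weights sits in its own subfolder of one download directory; check the MLC instructions for the actual layout and names. Setting `os.environ` here affects only this script and programs it launches, not your Terminal session.

```python
import os
from pathlib import Path
from typing import Optional


def choose_local_id(weights_dir: str, preferred: Optional[str] = None) -> str:
    """Pick one downloaded model-weight folder and store its name in LOCAL_ID.

    Hypothetical layout: each set of model weights is a subfolder of weights_dir.
    """
    models = sorted(p.name for p in Path(weights_dir).expanduser().iterdir() if p.is_dir())
    if not models:
        raise FileNotFoundError(f"no model weight folders in {weights_dir}")
    if preferred is None:
        local_id = models[0]  # default: first folder alphabetically
    elif preferred in models:
        local_id = preferred
    else:
        raise ValueError(f"{preferred!r} is not one of {models}")
    # Visible to this process and its child processes only
    os.environ["LOCAL_ID"] = local_id
    return local_id
```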

The model weights are downloaded from the Hugging Face community website, which is sort of a GitHub for AI.

Once everything is installed, you can access MLC in Terminal by using the mlc_chat_cli command.

Using MLC in web browsers

MLC also has a web version, Web LLM.

The Web LLM variant only runs on Apple Silicon Macs. It won't run on Intel Macs and will produce an error in the chatbot window if you try.

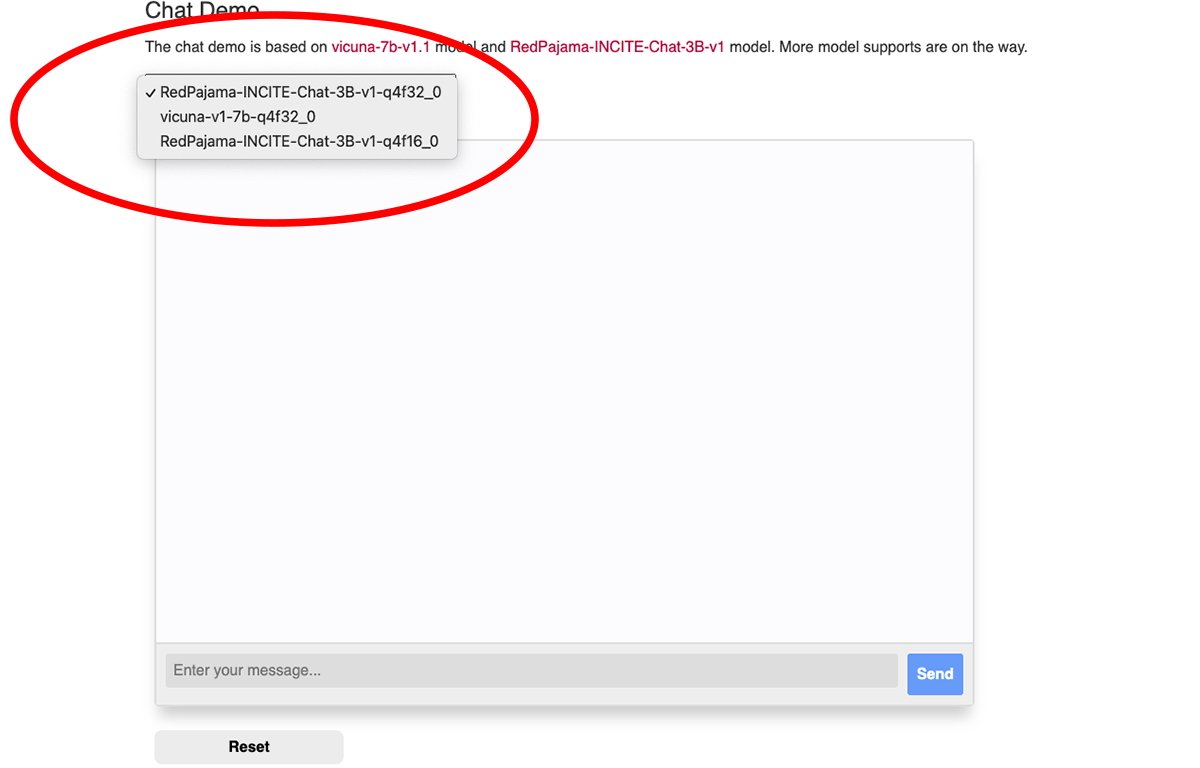

There's a popup menu at the top of the MLC web chat window from which you can select which downloaded model weights you want to use.

You'll need the Google Chrome browser to use Web LLM (Chrome version 113 or later). Earlier versions won't work.

You can check your Chrome version number in the Mac version of Chrome by choosing Chrome > About Google Chrome from the menu bar. If an update is available, click the Update button to update to the latest version.

You may have to restart Chrome after updating.
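The version rule above boils down to comparing Chrome's major version number against 113. A minimal Python sketch, assuming you paste in the version string shown on the About Google Chrome page (the function name is hypothetical):

```python
import re

MIN_CHROME_MAJOR = 113  # Web LLM needs Chrome 113 or later


def supports_web_llm(version_text: str) -> bool:
    """Return True if a Chrome version string such as '113.0.5672.63' is new enough."""
    match = re.search(r"(\d+)\.\d+\.\d+\.\d+", version_text)
    if match is None:
        raise ValueError(f"no Chrome version found in {version_text!r}")
    # Compare as an integer: as strings, "99" would sort after "113"
    return int(match.group(1)) >= MIN_CHROME_MAJOR
```

For example, `supports_web_llm("Version 112.0.5615.137")` returns `False`, so that browser would need updating first.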

Note that the MLC Web LLM page recommends you launch Chrome from the Mac Terminal using this command:

/Applications/Google\ Chrome.app/Contents/MacOS/Google\ Chrome --enable-dawn-features=allow_unsafe_apis,disable_robustness

allow_unsafe_apis and disable_robustness are two experimental features of Dawn, Chrome's WebGPU implementation, enabled through the --enable-dawn-features launch flag. They may or may not be stable.

Once everything is set up, just type a question into the "Enter your message" field at the bottom of the Web LLM page's chat pane and click the Send button.
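The same launch can be done from a Python script, which avoids the backslash-escaping of the spaces in Chrome's path. This is a minimal sketch assuming Chrome is installed in the standard /Applications location; the function names are hypothetical, and the flag is copied verbatim from the command on the Web LLM page.

```python
import subprocess

# Standard install location of Chrome on a Mac (assumed)
CHROME = "/Applications/Google Chrome.app/Contents/MacOS/Google Chrome"
# Flag exactly as given on the MLC Web LLM page
DAWN_FLAG = "--enable-dawn-features=allow_unsafe_apis,disable_robustness"


def chrome_command(chrome_path: str = CHROME) -> list[str]:
    """Build the launch command; as list items, spaces in the path need no escaping."""
    return [chrome_path, DAWN_FLAG]


def launch_chrome() -> subprocess.Popen:
    """Start Chrome with the experimental Dawn (WebGPU) features enabled."""
    return subprocess.Popen(chrome_command())
```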

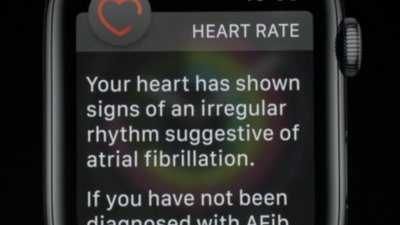

The era of true AI and intelligent assistants is just beginning. While there are risks with AI, this technology promises to enhance our future by saving vast amounts of time and eliminating a lot of work.

Chip Loder
