In an invitation-only meeting at Tuesday's Neural Information Processing Systems conference, Apple's head of machine learning broke down the company's specific goals for its artificial intelligence developments and detailed where it feels it has an advantage over its competitors, giving a rare look at Apple's research process.

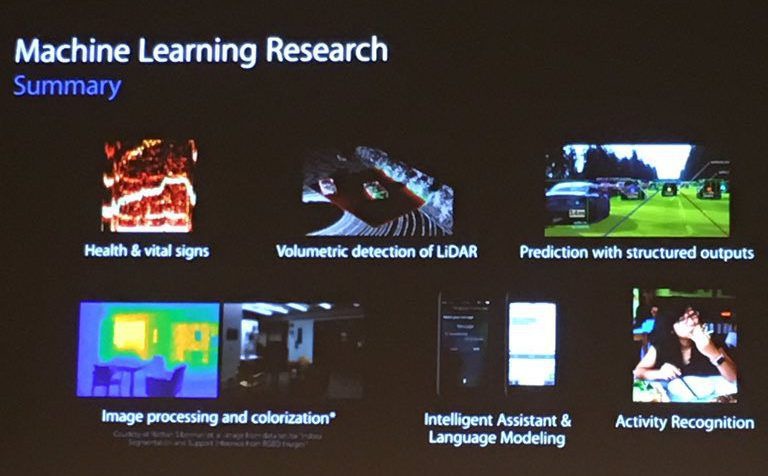

According to presentation slides supplied to Quartz, Apple's over-arching theme for artificial intelligence research is roughly the same as everybody else's: image identification, voice recognition for use in digital assistants, and user behavior prediction. Apple uses all of these techniques with Siri on macOS and iOS now.

However, without specifically mentioning that Apple was working on a vehicle or systems associated with self-driving autos, the presentation by machine learning head Russ Salakhutdinov also discussed "volumetric detection of LiDAR," or measuring and identifying objects at range with lasers.

LiDAR is a surveying method that measures distance to a target by illuminating that target with laser light, and is commonly used for automotive guidance systems. The core technology also sees frequent use in map-making for a wide array of sciences.
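The distance measurement described above is commonly done by timing a laser pulse's round trip. A minimal sketch, assuming a simple time-of-flight sensor; the function name and the example timing are illustrative and do not come from Apple's presentation:

```python
# Speed of light in a vacuum, in meters per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def lidar_range_m(round_trip_seconds: float) -> float:
    """Distance to a target from a pulse's round-trip time (hypothetical helper)."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out to the target and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


# An illustrative 200-nanosecond echo corresponds to a target roughly 30 m away.
print(round(lidar_range_m(200e-9), 2))
```

Real automotive units sweep many such pulses per second to build a 3D point cloud, which is the kind of data a volumetric detector would consume.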

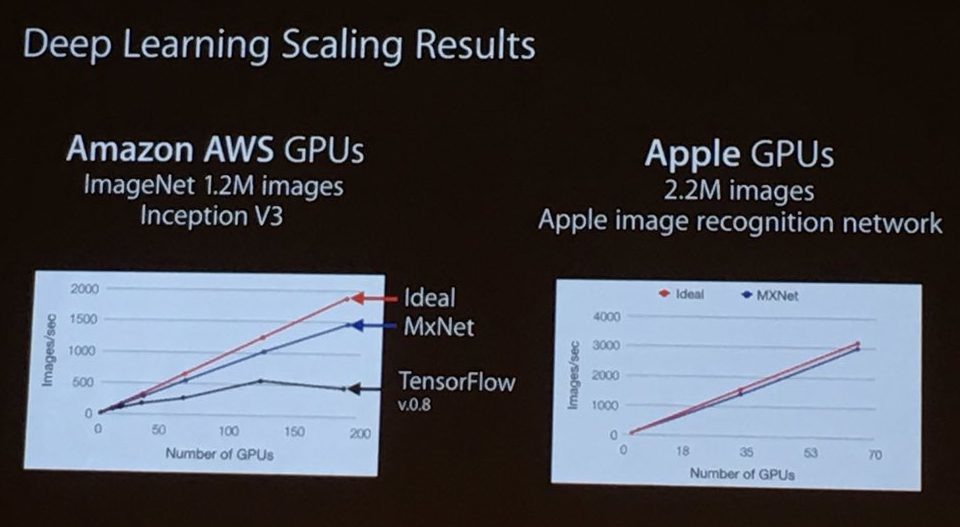

Apple claimed in the meeting that its GPU-based implementation of image recognition was capable of processing twice as many photos per second as Google's system: 3,000 images per second against a claimed 1,500. Apple's system is said to be more efficient as well, with Salakhutdinov noting that the array comprised one third of the GPUs used by Google, with both tapping Amazon's cloud computing services.
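Taken at face value, those figures imply a roughly sixfold per-GPU advantage. A quick check, assuming both claims describe comparable workloads and hardware, which the slides do not establish:

```python
from fractions import Fraction

apple_images_per_s = 3000   # figure claimed in the presentation
google_images_per_s = 1500  # figure claimed for Google's system
gpu_ratio = Fraction(1, 3)  # Apple's GPU count as a fraction of Google's

# Per-GPU advantage = (throughput ratio) / (GPU-count ratio).
per_gpu_advantage = Fraction(apple_images_per_s, google_images_per_s) / gpu_ratio
print(per_gpu_advantage)  # 6
```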

Delving deeper into what Apple is working on, one item is parent-teacher neural networks, where a larger neural network with an equally large amount of data hands off a compact neural network to a smaller device, like an iPhone, with no compromise in decision-making quality. Implementing this feature would allow a subset of a vast artificially intelligent system to make decisions on a less powerful mobile device, instead of forcing the device to feed any relevant data to a larger processing network and then wait for a response to the query.
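Apple's slides do not spell out how the transfer works. One widely published approach to the same idea is knowledge distillation, in which a small network is trained to match a large network's softened output probabilities. A minimal sketch of that loss, assuming this is at least similar to what Apple described; the function names, temperature, and logits are all made up for illustration:

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; higher temperature gives softer outputs."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """Cross-entropy between softened teacher and student distributions."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(teacher_probs, student_probs))


# Made-up logits for a three-class problem.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]
far_student = [0.5, 4.0, 1.0]

# A student that mimics the teacher scores a lower loss than one that does not.
print(distillation_loss(teacher, close_student) < distillation_loss(teacher, far_student))
```

Minimizing this loss during training pushes the small network toward the large one's decisions, so that only the small network needs to ship to the phone.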

Before the meeting about Apple's artificial intelligence focus on Tuesday, Apple publicly announced that its scientists would be allowed to publish and collaborate with the larger research community for the first time.

Apple CEO Tim Cook has also recently revealed that Apple's Yokohama, Japan research facility will boast "deep engineering" for machine learning, far different from Apple's Siri voice assistant.

Apple is also rumored to be utilizing the technology for self-driving car systems, as an offshoot of the "Project Titan" whole-car program.

Mike Wuerthele

20 Comments

I've assumed that Apple's approach to AI would involve smarter client devices than Google's (which skews towards thinner clients).

Not only is that consistent with Apple's privacy policies, it's also consistent with Apple's comparative advantages in SOC design.

I like the idea of Apple having a large ecosystem of smart devices that talk to each other rather than dumb devices that all act as the tentacles of a Cloud Monster.

It bums me out, though, when Apple seems unable to keep all of those smart devices up to date!

BUT BUT BUT APPLE IS NOT WORKING ON ANYTHING NEW, THEY HAVE NO VISION AND NO PIPELINE

/troll

Product events perform a different task, as do Mr. Cook's references to what you describe as 'social crusades'. All a part of a complete package, promoting Apple, conservation and moving the needle a little more into the righteous column. How do you find fault with that?