Apple Watch 'particle cloud' pairing method likely revealed in new patent


Lost amidst a sea of flashy hardware technologies introduced with Apple Watch was a new image-based pairing technique distinguished by a "particle cloud" effect. On Tuesday, Apple received a patent detailing what appears to be the bedrock of this unique pairing procedure, hinting at its use in future products.

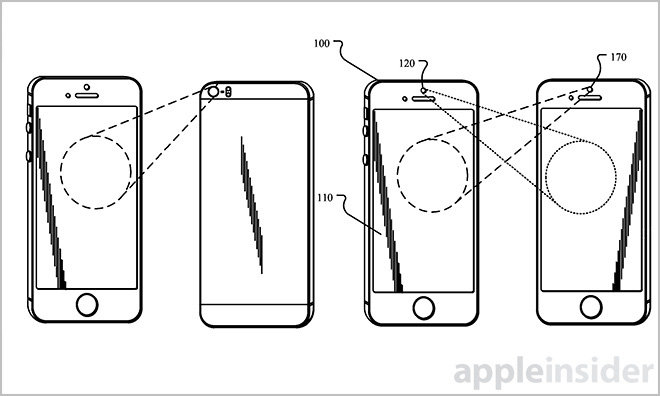

The U.S. Patent and Trademark Office awarded Apple sequential U.S. Patent Nos. 9,022,291 and 9,022,292, both titled "Invisible optical label for transmitting information between computing devices," laying the foundation for machine-readable codes like those seen with Apple Watch. Specifically, the invention outlines a method by which data-rich optical labels are encoded and decoded in a manner imperceptible to end users.

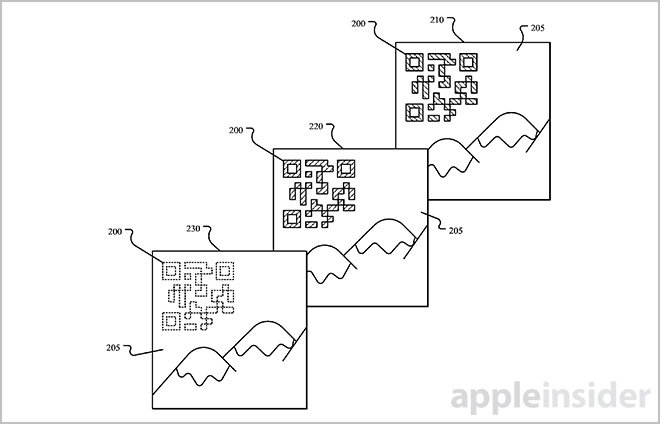

According to the patent, an optical label is used to transmit data from one electronic device to another, much like quick response (QR) codes, which are themselves descendants of UPC barcodes. In fact, Apple's invention supports traditional 2D machine-readable schemes, but applies a display process that makes the matrices invisible against a static or dynamic background.

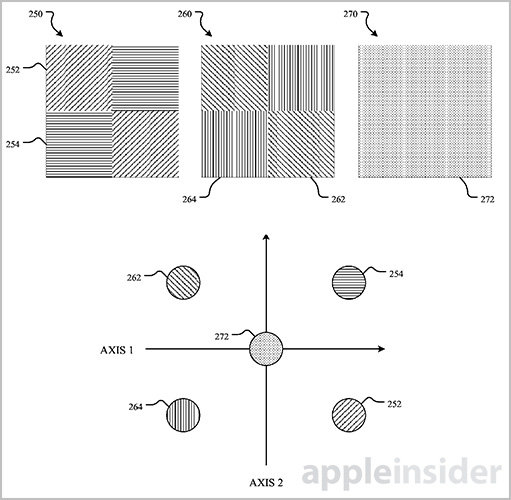

The invention relies heavily on human psychovisual perception. By encoding and coloring a specific portion of a matrix in two alternating frames, Apple can control how a user perceives that area's temporal chrominance, or essentially the color of the picture.

For example, the first and second portions of frame A might be encoded in two colors, say blue and magenta. The third and fourth portions of frame B, which correspond to the first and second portions of frame A, are assigned the opposing colors orange and green. As the display alternates between the two frames, the temporal average of each portion works out to a lack of color, or gray. To ensure these chroma changes go unnoticed, the frames alternate at rates of 60 to 120 fps or higher.
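The cancellation can be illustrated numerically. The sketch below is a simplified model, not Apple's method: it assumes 8-bit full-range YCbCr pixels (neutral chroma at 128, BT.601 conversion coefficients), keeps luma identical in both frames, and applies equal and opposite chroma offsets in frames A and B. The function names `complementary_pair`, `temporal_average` and `ycbcr_to_rgb` are hypothetical.

```python
NEUTRAL = 128.0  # chroma value meaning "no color" in 8-bit full-range YCbCr


def complementary_pair(y, cb_offset, cr_offset):
    """Build one pixel for frame A and its counterpart for frame B.

    Luma (Y) is identical in both frames; only the chroma offsets flip sign,
    so one frame is tinted one way and the other the opposite way.
    """
    frame_a = (y, NEUTRAL + cb_offset, NEUTRAL + cr_offset)
    frame_b = (y, NEUTRAL - cb_offset, NEUTRAL - cr_offset)
    return frame_a, frame_b


def temporal_average(frame_a, frame_b):
    """What a slow observer (the eye, in this model) integrates over two frames."""
    return tuple((a + b) / 2 for a, b in zip(frame_a, frame_b))


def ycbcr_to_rgb(y, cb, cr):
    """Full-range BT.601 YCbCr -> RGB, without clamping (offsets kept small)."""
    r = y + 1.402 * (cr - NEUTRAL)
    g = y - 0.344136 * (cb - NEUTRAL) - 0.714136 * (cr - NEUTRAL)
    b = y + 1.772 * (cb - NEUTRAL)
    return (r, g, b)


if __name__ == "__main__":
    # A bluish tint in frame A (positive Cb offset), mirrored in frame B.
    a, b = complementary_pair(120.0, 40.0, -10.0)
    print("frame A RGB:", ycbcr_to_rgb(*a))
    print("frame B RGB:", ycbcr_to_rgb(*b))
    print("average RGB:", ycbcr_to_rgb(*temporal_average(a, b)))  # (120.0, 120.0, 120.0)
```

Each frame on its own renders as a visible tint, while their average has R, G and B equal, which is the gray the article describes. Keeping luma fixed is a deliberate choice in this model, since brightness flicker is what the eye notices most readily.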

Assigning temporal averages selectively across a matrix allows it to blend in with a variety of background images, from icons to wallpapers. For example, a portion of the alternating frames can be encoded to output a temporal average of blue to match the blue section of an image, while another area may correspond to an orange section.
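Matching a background works the same way, except that the offsets are applied around each background pixel's own color instead of neutral gray. The hypothetical `embed_bits` below assumes a background already converted to YCbCr, one bit per pixel, and modulation of the Cb channel only by a small assumed `delta`; the patent's actual cell layout and modulation scheme may differ.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (Y, Cb, Cr)
Image = List[List[Pixel]]


def embed_bits(background: Image, bits: List[List[int]], delta: float = 6.0) -> Tuple[Image, Image]:
    """Hide a bit matrix in two frames whose average equals `background`.

    A 1 bit pushes Cb up in frame A and down in frame B; a 0 bit does the
    reverse. Luma and Cr are left untouched.
    """
    frame_a: Image = []
    frame_b: Image = []
    for bg_row, bit_row in zip(background, bits):
        row_a, row_b = [], []
        for (y, cb, cr), bit in zip(bg_row, bit_row):
            sign = 1.0 if bit else -1.0
            row_a.append((y, cb + sign * delta, cr))
            row_b.append((y, cb - sign * delta, cr))
        frame_a.append(row_a)
        frame_b.append(row_b)
    return frame_a, frame_b


def average_frames(frame_a: Image, frame_b: Image) -> Image:
    """Per-pixel temporal average of two frames."""
    return [
        [tuple((p + q) / 2 for p, q in zip(pa, pb)) for pa, pb in zip(ra, rb)]
        for ra, rb in zip(frame_a, frame_b)
    ]


if __name__ == "__main__":
    # Hypothetical 1x2 background: a bluish pixel next to an orange-ish one.
    background = [[(70.0, 200.0, 110.0), (150.0, 70.0, 180.0)]]
    a, b = embed_bits(background, [[1, 0]])
    print(average_frames(a, b) == background)  # True
```

Because the average of each frame pair reproduces the background exactly, the label "disappears" into whatever image it is placed on, while the bit pattern survives in the frame-to-frame difference.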

Since the human eye is more sensitive to changes in luminance, or brightness, than to changes in chrominance, color changes in the specially encoded optical label will likely go unnoticed. For an imaging sensor, however, chrominance changes are easily distinguishable. By capturing encoded frames at a different rate than the transmitting device displays them, a recipient device is able to pick out the underlying matrix for decoding and processing.
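On the receiving side, a sensor that sees the two phases separately can cancel the background by differencing them. The sketch below is an assumption about how such a decoder could work, not the pipeline the patent describes: it supposes the camera has already sorted several captures into frame-A and frame-B phases (glossing over the rate mismatch and synchronization the article mentions), averages each phase to suppress sensor noise, and reads each bit from the sign of the Cb difference. It also reports the largest luma difference between phases, which this scheme keeps at zero. `mean_image`, `decode_bits` and `luma_flicker` are hypothetical names.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # (Y, Cb, Cr)
Image = List[List[Pixel]]


def mean_image(captures: Sequence[Image]) -> Image:
    """Average several captures of the same frame phase to reduce sensor noise."""
    n = len(captures)
    rows, cols = len(captures[0]), len(captures[0][0])
    return [
        [
            tuple(sum(img[r][c][k] for img in captures) / n for k in range(3))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def decode_bits(phase_a: Sequence[Image], phase_b: Sequence[Image]) -> List[List[int]]:
    """Recover the hidden matrix from the sign of the Cb difference A - B.

    The background contributes equally to both phases, so it cancels out.
    """
    a, b = mean_image(phase_a), mean_image(phase_b)
    return [
        [1 if pa[1] - pb[1] > 0 else 0 for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def luma_flicker(phase_a: Sequence[Image], phase_b: Sequence[Image]) -> float:
    """Largest luma difference between phases; 0 means no brightness flicker."""
    a, b = mean_image(phase_a), mean_image(phase_b)
    return max(
        abs(pa[0] - pb[0])
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
    )


if __name__ == "__main__":
    # Two noisy captures per phase of a 1x2 label carrying bits [1, 0].
    a1 = [[(70.0, 207.0, 110.0), (150.0, 63.5, 180.0)]]
    a2 = [[(70.0, 205.0, 110.0), (150.0, 64.5, 180.0)]]
    b1 = [[(70.0, 193.5, 110.0), (150.0, 77.0, 180.0)]]
    b2 = [[(70.0, 194.5, 110.0), (150.0, 75.0, 180.0)]]
    print(decode_bits([a1, a2], [b1, b2]))   # [[1, 0]]
    print(luma_flicker([a1, a2], [b1, b2]))  # 0.0
```

The point of the sketch is the asymmetry the article describes: the chroma difference that carries the data is large to the sensor, while the luma a viewer is most sensitive to never changes.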

Finally, displaying matrices in alternating frames makes it possible to embed codes in moving images, a major departure from static black-and-white or color QR codes.

It appears Apple has already put its newly granted patent to use in Apple Watch. As seen in the image at the top of this article, Apple uses a visual pairing method to link Watch with iPhone. During setup, owners are instructed to use an iPhone to capture images of a dynamically generated optical code, resembling a roiling Oort cloud, displayed on their Watch.

As Apple's invention is designed to trick the human eye, it is difficult to prove inclusion in Apple Watch's pairing process. Taking the shipping version's pairing speed, accuracy and sophistication into account, however, Apple's invisible optical label technology seems like a good fit.

Apple's invisible optical label patents were filed for in July 2014 and credit Rudolph van der Merwe and Samuel G. Noble as inventors.

Mikey Campbell

21 Comments

When I paired my iPhone and Apple Watch, I thought of a camera app Apple provided developers back in 2010. The app was named iCone and recognized shapes and colors. Using that camera technology along with AirDrop technology (using Bluetooth), the iPhone and Apple Watch communicated with each other for the pairing. I think the Apple Watch is periodically sending out a Bluetooth signal to recognize other Bluetooth enabled devices to communicate with. To me, this patent seems to be something different... Something in the realm of 3D, virtual reality. I see an Apple app that scans an environment and recognizes encoded information that once decoded can be used to display information on Apple devices. Indoor mapping, treasure hunts, guided tours, furniture shopping, etc. I did get a nervous feeling reading about encoded information that the human eye cannot see though. Even though the eye cannot "see" the information, the information is there and can be recognized on a subconscious level. That felt Orwellian to me.

Don't worry, I am sure they coded a 'Don't sweat it' message in there also, so all good.

Rob Bonner wrote: "Don't worry, I am sure they coded a 'Don't sweat it' message in there also, so all good."

Thanks! While reading the actual patent, I learned I was on target with Bluetooth and AirDrop, but off target with the 3D, virtual reality stuff. Oh well.

Theoretically file or photo data could be represented similarly as a seemingly random swirl of dense data for faster transfers between devices via the camera. I could also see this as an in-person method for anonymous and secure funds transfer, a la Bitcoin or other blockchain-based transactions, including private contracts. Avoiding the Internet, email or texting ensures greater security and privacy.

The 'particle cloud' pairing did not work for me during set up. But set-up was easy and smooth nonetheless.