Apple appears to be applying heavy-handed moderation to a newly introduced GIF search iMessage app in iOS 10 that inadvertently surfaced pornographic images, as queries for some seemingly innocuous words no longer return results.

Apple released iOS 10 on Tuesday with a new iMessage API that opens the once walled-off messaging platform to developers. The API lets standalone apps extend into Messages and offers controlled access to web-based assets, among other data.

While developers are already taking advantage of the API to market bite-sized apps through the iMessage App Store, Apple also includes its own set of first-party titles to get users started. Unfortunately, Apple seemingly forgot the internet is full of porn.

As Gizmodo reports, users trying out Apple's #Images iMessage app, which returns GIFs and images related to a search term, inadvertently stumbled onto questionable content, with "questionable" here meaning hardcore porn. For example, Deadspin discovered a particularly graphic GIF when querying the term "hard."

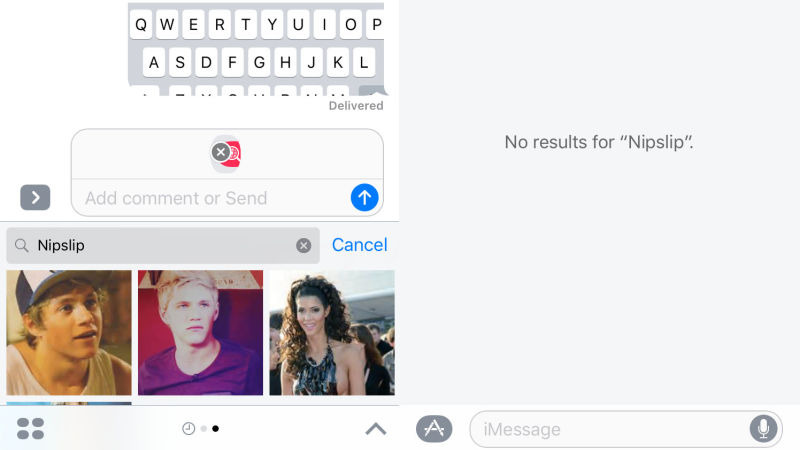

Apple caught wind of the situation and is putting the clamps on its own app. Because the #Images app pulls its results from Bing, Apple is blocking requests for terms that could lead to provocative content, a blunt but effective method of content control. Alongside obviously explicit words, Apple is also blacklisting seemingly innocent terms like "huge" and "bounce" as part of the roving ban.

Apple also appears to be cleaning up results where it can. For example, a search for "sideboob" now yields fewer, and cleaner, results than earlier queries for the same term, the report said.

AppleInsider Staff

Malcolm Owen

William Gallagher

Andrew O'Hara

Charles Martin

24 Comments

Why worry? Tried Google?

Sometimes, it's fun to be a journalist.

Of course Apple will monitor this. It's not a big deal unless you are a filthy-minded journo looking for some page hi.....

gid damn it ..... you got me to click

Oh, someone's gonna file a class action suit.

How is "Nipslip" and "sideboob" seemingly innocuous?