An Apple patent application published Thursday details an intelligent method of introducing photo background blur using a depth map compiled from data captured by a stereo camera system. The document could shed light on the inner workings of Portrait Mode on iPhone 7 Plus, details of which are sparse.

The methodology described in Apple's application for "Photo-realistic shallow depth-of-field rendering from focal stacks" aligns with what little is known about Portrait Mode, an iOS feature that harnesses iPhone 7 Plus hardware to generate natural-looking background blur in digital images.

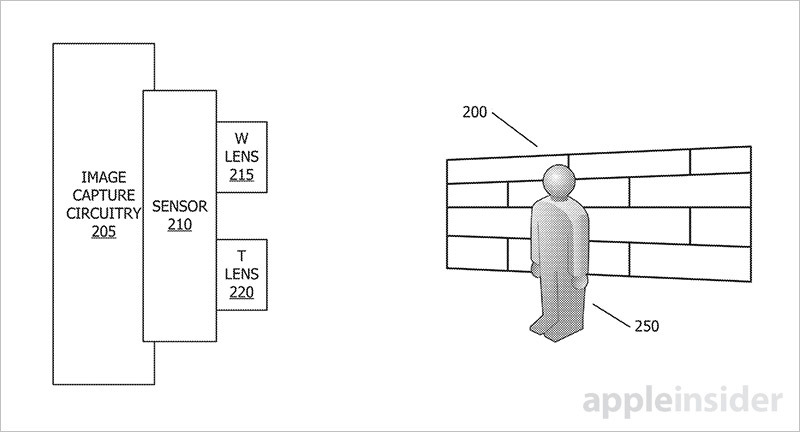

Released with iOS 10.1 in October, Portrait Mode uses wide-angle and "telephoto" lenses, complex computer vision algorithms and depth mapping technology to create a series of image layers. The system is able to selectively focus certain layers, like those containing a picture's subject, and unfocus others, resulting in a naturally blurred background with added bokeh.

Today's patent application details an almost identical technique, except that instead of introducing blur, the proposed system selects layers, or frames, that are already out of focus.

In some embodiments, the invention involves snapping a preset number of frames of a particular scene, with each frame captured at a different focus depth. Each frame represents a distinct layer in the focus stack, a set of images at various levels of sharpness or blur that will ultimately be used to generate a final shot.

Next, the system generates a depth map using a depth-from-focus or depth-from-defocus method. The resulting information will be used to determine which layers contain a target object and which contain a scene's background.
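As a rough illustration of the depth-from-focus idea, the sketch below scores every pixel in every frame with a simple sharpness metric and records the frame in which that pixel is sharpest. The Laplacian metric, the plain list-of-lists image format and the function names are assumptions made for this example; the application does not spell out which metric or data layout Apple uses.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def laplacian_sharpness(img: Image, y: int, x: int) -> float:
    """Absolute 4-neighbour Laplacian at (y, x); border pixels are clamped."""
    h, w = len(img), len(img[0])
    up = img[max(y - 1, 0)][x]
    down = img[min(y + 1, h - 1)][x]
    left = img[y][max(x - 1, 0)]
    right = img[y][min(x + 1, w - 1)]
    return abs(up + down + left + right - 4.0 * img[y][x])


def depth_from_focus(stack: List[Image]) -> List[List[int]]:
    """For every pixel, return the index of the frame in which it is sharpest.

    Assumes the frames are already aligned and share the same dimensions.
    Ties go to the earliest frame in the stack.
    """
    h, w = len(stack[0]), len(stack[0][0])
    depth = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            scores = [laplacian_sharpness(frame, y, x) for frame in stack]
            depth[y][x] = scores.index(max(scores))
    return depth
```

Because each frame in the stack was captured at a known focus depth, the winning frame index doubles as a coarse depth estimate for that pixel.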

A goal blur level is then derived based on any number of artificial constraints. In some embodiments, blur is decided based on a camera's aperture, while another example sets fuzziness depending on how much focus is needed to clearly render an object of a certain size at a given distance. A lookup table might also be implemented to replicate the look of an SLR camera.

Correct blur is the secret sauce of Apple's Portrait Mode feature, and the company has not elaborated on what models or blur methods are used to create its creamy bokeh. The patent application allows for any number of blur function possibilities, including size for a given focus distance, object distance and aperture size, meaning it can mimic a wide range of SLR/lens combinations.
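One conventional way to turn focus distance, object distance and aperture size into a blur size is the thin-lens circle-of-confusion formula from classical optics. The sketch below uses that textbook model as a stand-in; whether Apple's blur function takes this form is not stated in the application, and the function and parameter names are hypothetical.

```python
def circle_of_confusion_mm(
    focal_length_mm: float,
    f_number: float,
    focus_dist_mm: float,
    object_dist_mm: float,
) -> float:
    """Blur-spot diameter on the sensor for an object away from the focus plane.

    Thin-lens approximation (an assumption, not taken from the patent):
        c = (f / N) * f / (s_f - f) * |s - s_f| / s
    where f is focal length, N the f-number, s_f the focus distance
    and s the object distance.
    """
    if focus_dist_mm <= focal_length_mm:
        raise ValueError("focus distance must exceed the focal length")
    if object_dist_mm <= 0:
        raise ValueError("object distance must be positive")
    aperture_diameter = focal_length_mm / f_number
    magnification = focal_length_mm / (focus_dist_mm - focal_length_mm)
    defocus = abs(object_dist_mm - focus_dist_mm) / object_dist_mm
    return aperture_diameter * magnification * defocus


# Example: a 50mm f/2 lens focused at 2m, with the background at 4m.
goal_blur = circle_of_confusion_mm(50.0, 2.0, 2000.0, 4000.0)
```

Swapping in a different focal length or f-number changes the goal blur, which is how a single function of this kind could imitate a range of SLR and lens combinations.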

Importantly, the method requires an alignment of the focus stack so that any pixel in any frame is correctly lined up with corresponding pixels in other frames. With all pixels in alignment, a specialized imaging algorithm is able to determine points or planes of focus using edge detection, sharpness metrics or any similar technique. Conversely, the system is able to detect background pixels at different depths and select them if they match a target blur. This explains Portrait Mode's ability to parse minute object details like hair from the background.

The final output image is composed of a tapestry of pixels sampled from various frames within the focus stack, and as such, at various focus settings. Alternatively, if no pixels in the focus stack match the goal blur level, two or more images may be combined, blended or otherwise interpolated to accomplish the desired effect.
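The select-or-blend step can be sketched for a single pixel as follows. The example assumes each aligned frame already carries a blur estimate for that pixel, and it uses a simple linear blend between the two frames that bracket the goal blur. The tolerance value, the names and the choice of linear interpolation are illustrative assumptions, not details taken from the application, and a linear mix only approximates an intermediate blur level.

```python
from typing import Sequence


def render_pixel(
    values: Sequence[float],
    blurs: Sequence[float],
    target: float,
    tol: float = 0.05,
) -> float:
    """Pick or blend one output pixel from an aligned focus stack.

    values[i] is the pixel's intensity in frame i and blurs[i] is the
    estimated blur of that pixel in frame i (both hypothetical inputs).
    """
    # Frame whose blur is closest to the goal blur level.
    best = min(range(len(blurs)), key=lambda i: abs(blurs[i] - target))
    if abs(blurs[best] - target) <= tol:
        return values[best]

    # No frame matches: find the frames just below and just above the target.
    below = [i for i in range(len(blurs)) if blurs[i] < target]
    above = [i for i in range(len(blurs)) if blurs[i] > target]
    if not below or not above:
        # Target lies outside the stack's blur range; fall back to the nearest frame.
        return values[best]

    lo = max(below, key=lambda i: blurs[i])
    hi = min(above, key=lambda i: blurs[i])
    t = (target - blurs[lo]) / (blurs[hi] - blurs[lo])
    return (1.0 - t) * values[lo] + t * values[hi]
```

Running this function over every pixel, with the goal blur looked up from the depth map, would yield the kind of mixed-frame output image the application describes.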

Lending credence to the theory that today's application is indeed the basis of Portrait Mode, the document goes on to explain that multi-camera setups are ideal for previewing simulated depth-of-field. Apple notes a single-lens camera system can be used to capture the focus stack, though multi-lens arrays like the wide-angle and telephoto modules employed in iPhone 7 Plus offer a more diverse sample set. With two lenses, for example, the system can capture two images at the same time, then compare blur to extrapolate depth map data.

In one example, live images from a telephoto camera might be used to focus on a subject, while a wide-angle camera gathers frames for the focus stack. An extremely similar, if not identical, implementation is employed with Portrait Mode. Further, the patent helps explain how iOS is able to determine when subjects are too close to the objective lens (Portrait Mode comes with a variety of warning notifications, including one for subject proximity).

Interestingly, the invention includes contingencies for manually adjusting background focus, a feature not yet available to iPhone owners. In its current form, Portrait Mode automatically adjusts focus levels to achieve just the right amount of natural-looking blur.

Whether today's patent filing completely covers Portrait Mode is unclear, though a number of details obviously made their way into the final product. As for techniques that were mentioned in the document, but have yet to see implementation, Apple might be saving the features for a future iOS update. Alternatively, functions like manually adjustable blur could pop up in a future iPhone.

Apple's patent application covering depth-of-field effects was first filed in September 2015 and credits Thomas E. Bishop, Alexander Lindskog, Claus Molgaard and Frank Doepke as its inventors, all in-house engineers.

Mikey Campbell

5 Comments

I really like my iPhone 7+'s camera and Portrait mode.

I wanted to take a good-quality self-portrait a few days ago, so I put the phone on a tripod and used my Apple Watch's camera remote app to compose and take the shots with the phone's main camera. The watch's remote app lets you see tiny previews of the shots, too.

What's also great about this camera is its auto focus. My portrait shots were tack sharp on the eyes.

"Released with iOS 10.1 in October, Portrait Mode uses wide angle and "telephoto" lenses, complex computer vision algorithms and depth mapping technology to create a series of image layers."

Why is "telephoto" in quotes? That's what narrow arc lenses are called (although on Jeopardy the other night they accepted "zoom lens" as the correct answer).