Apple's new A11 Bionic chip used in iPhone 8 and the upcoming iPhone X packs in an array of processing cores and sophisticated controllers, each optimized for specific tasks. We know only a little about these, and even less about what else is packed into this SoC. Here's a look at the new Apple GPU, Neural Engine, 6 core CPU, NVMe SSD controller and new custom video encoder inside the package.

A new 3 Core GPU for graphics, GPGPU & ML

Apple's first internally-designed GPU, built into the A11 Bionic Application Processor, is claimed to be 30 percent faster than the Imagination-based GPU used in iPhone 7 models, which was already the leading graphics architecture in smartphones.

Just as impressively, Apple's new GPU isn't just faster but more efficient, allowing it to match the work of the A10 Fusion GPU using only half the energy.

GPUs were originally created to accelerate graphics, but for years they have been tasked with doing other kinds of math with a similar repetitive nature, often referred to as "General Purpose GPU." Apple initially created OpenCL as an API to perform GPGPU, and more recently folded GPGPU Compute into its Metal API that's optimized specifically for the GPUs Apple uses in its iOS devices and Macs. The latest version, Metal 2, was detailed at WWDC17 this summer.

Now that Apple is designing both the graphics silicon and the software to manage it, expect even faster progress in GPU and GPGPU advancement. Additionally, Apple is also branching out into Machine Learning, one of the tasks that GPUs are particularly good at crunching. ML involves building a model based on a variety of known things— such as photos of different flowers— and using that model of "knowledge" to find and identify matches— things that could be flowers in other new photos, or in the camera viewfinder.

Apple hasn't yet provided many technical details about its new GPU design, other than that it has "three cores." Different GPU designs are optimized for specific tasks and strategies and define "core" in radically different ways, so it's impossible to make direct, meaningful comparisons against GPUs from Intel, Nvidia, AMD, Qualcomm, ARM Mali and others.

TB;DR

It is noteworthy that Apple describes its new mobile A11 Bionic GPU Family 4 graphics architecture as using Tile Based Deferred Rendering. TBDR is a rendering technology created for mobile devices with limited resources. It effectively only finishes rendering objects that will be visible to the user in the 3D scene. On desktop PC GPUs (as well as Qualcomm Adreno and ARM Mali mobile GPUs) "Immediate Mode" rendering is performed on every triangle in the scene, running through rasterization and fragment function stages and out to device memory even if it may end up being covered up by other objects in the final scene.

TBDR skips doing any work that won't be seen, breaking down a scene into tiles before analyzing what needs to be rendered for each. The output is saved temporarily to high speed, low latency Tile Memory. This workflow enables it to better use the entire GPU because it can perform vertex and fragment asynchronously. Apple notes: "the vertex stage usually makes heavy use of fixed function hardware, whereas the fragment stage uses math and bandwidth. Completely overlapping them allows the device to use all the hardware blocks on the GPU simultaneously."
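To make the difference concrete, here is a toy sketch in Python that counts how many fragments each approach shades. It is an illustration only, not Apple's or Imagination's actual pipeline: the `Rect` type, the function names, the 4-pixel tile size and the sample scene are all assumptions made up for this example, and the immediate-mode model ignores the early depth rejection that real GPUs also perform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Hypothetical stand-in for a primitive: an axis-aligned rectangle."""
    x0: int
    y0: int
    x1: int  # exclusive
    y1: int  # exclusive
    depth: float  # smaller means closer to the viewer
    color: str


def render_immediate(rects, width, height):
    """Shade every fragment of every primitive in submission order."""
    depth = [[float("inf")] * width for _ in range(height)]
    color = [[None] * width for _ in range(height)]
    shaded = 0
    for r in rects:
        for y in range(max(r.y0, 0), min(r.y1, height)):
            for x in range(max(r.x0, 0), min(r.x1, width)):
                shaded += 1  # fragment work happens even if it is later covered
                if r.depth < depth[y][x]:
                    depth[y][x] = r.depth
                    color[y][x] = r.color
    return color, shaded


def render_tbdr(rects, width, height, tile=4):
    """Bin primitives per tile, resolve visibility, then shade once per pixel."""
    color = [[None] * width for _ in range(height)]
    shaded = 0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            ty1, tx1 = min(ty + tile, height), min(tx + tile, width)
            # Binning: keep only primitives that overlap this tile.
            binned = [r for r in rects
                      if r.x0 < tx1 and r.x1 > tx and r.y0 < ty1 and r.y1 > ty]
            for y in range(ty, ty1):
                for x in range(tx, tx1):
                    covering = [r for r in binned
                                if r.x0 <= x < r.x1 and r.y0 <= y < r.y1]
                    if covering:
                        # Hidden surface removal first, shading second.
                        nearest = min(covering, key=lambda r: r.depth)
                        shaded += 1
                        color[y][x] = nearest.color
    return color, shaded


# Made-up 8x8 scene: a full-screen background plus two overlapping layers.
scene = [
    Rect(0, 0, 8, 8, depth=3.0, color="sky"),
    Rect(0, 0, 6, 6, depth=2.0, color="wall"),
    Rect(2, 2, 8, 8, depth=1.0, color="hero"),
]
image_imr, shaded_imr = render_immediate(scene, 8, 8)
image_tbdr, shaded_tbdr = render_tbdr(scene, 8, 8)
print(shaded_imr, shaded_tbdr)
```

Both functions produce the same final image for this scene, but the immediate-mode model shades 136 fragments while the tiled model shades 64, one per visible pixel.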

As a technique, TBDR is closely associated with Imagination's PowerVR, which developed in parallel to desktop GPUs on a road less traveled, then emerged around the launch of the first iPhone as the perfect mobile-optimized GPU architecture, with efficiency advantages scaled down PC GPUs couldn't rival.

But while Imagination initially complained that Apple hadn't 'proven that it wasn't infringing' its IP this spring, it does not now appear to be continuing any claim that Apple's new GPU uses any unlicensed PowerVR technology, and instead sold itself off at a huge discount after losing Apple's business.

Additionally, TBDR isn't an approach that is completely unique to Imagination, although there have only ever been a few successful GPU architectures (among the many experimental approaches that have failed). This is similar to the CPU world— currently dominated by ARM in mobile devices and Intel's x86 in PCs and servers— despite many failed attempts to disrupt the status quo by competitors (and even Intel itself).

Apple's Metal 2 now exposes the details of TBDR to developers for its A11 Bionic GPU so they can further optimize memory use and to "provide finer-grained synchronization to keep more work on the GPU." The company also states that its new GPU "delivers several features that significantly enhance TBDR," allowing third-party apps and games to "realize new levels of performance and capability."

Dual core ISP Neural Engine

Creating an entirely new GPU architecture "wasn't innovative enough," so A11 Bionic also features an entirely new Neural Engine within its Image Signal Processor, tuned to solve very specific problems such as matching, analyzing and calculating thousands of reference points within a flood of image data rushing from the camera sensor.

Those tasks could be sent to the GPU, but having logic optimized specifically for matrix multiplications and floating-point processing allows the Neural Engine to excel at those tasks.

The Neural Engine itself has two parallel cores designed to handle real-time processing, capable of performing 600 billion operations per second. That means in addition to applying sophisticated effects to a photo, as Apple has been doing in previous generations of its ISP, it can now perform effects on live video. Beyond effects, this also appears to be what enables the camera system to identify objects and their composition in a scene, allowing it to track and focus on the subject you are filming.
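For a rough sense of scale, the 600 billion figure can be turned into a per-frame budget. The Python sketch below is back-of-the-envelope arithmetic only: Apple has not said what counts as an "operation," so it assumes one operation is a single multiply or add, assumes perfect utilization, and uses a hypothetical 256x256 matrix multiply as the unit of work.

```python
# Apple's stated figure for the A11 Bionic Neural Engine.
NEURAL_ENGINE_OPS_PER_SEC = 600e9


def ops_per_frame(fps):
    """Operations available for each video frame at a given frame rate."""
    return NEURAL_ENGINE_OPS_PER_SEC / fps


def matmul_ops(m, k, n):
    """Multiply-add count for an (m x k) by (k x n) matrix product,
    counting each multiply and each add as one operation (an assumption)."""
    return 2 * m * k * n


budget_30 = ops_per_frame(30)  # 20 billion operations per frame
budget_60 = ops_per_frame(60)  # 10 billion operations per frame

# Hypothetical workload: how many 256x256 matrix products fit in one
# frame at 60fps under the ideal-utilization assumption above.
per_matmul = matmul_ops(256, 256, 256)
matmuls_per_frame = int(budget_60 // per_matmul)
print(budget_30, budget_60, per_matmul, matmuls_per_frame)
```

Under those assumptions, each 60fps frame leaves room for a few hundred such matrix products, which hints at why live video effects become practical.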

This Neural Engine is credited with giving the A11 Bionic its name. "Bionic" generally refers to a human having electromechanical enhancements, and suggests the idea of superhuman abilities due to those enhancements. One could think of A11 Bionic being the opposite of this, as it is actually a machine enhanced with human-like capabilities. Alternatively, you could think of the chip as a bionic enhancement of the human using it, allowing the user to leap over tasks ordinary Androids can't.

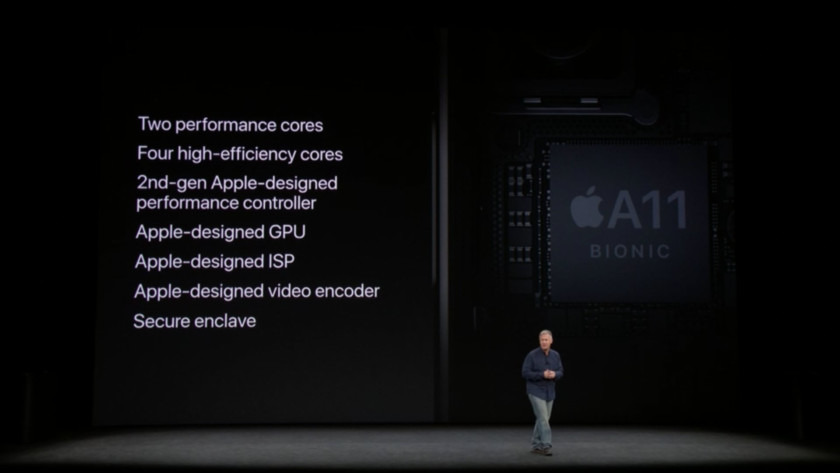

Apple's 6 new CPU cores, 2G performance controller

The third chunk of the A11 Bionic is Apple's original custom implementation of ARM Architecture CPU cores. Apple delivered its original customized A4 SoC back in 2010, and has rapidly iterated on its design. In 2013 it created the A7, the first 64-bit ARM chip to ship in a smartphone, sending chip rivals into a tailspin.

Last year's A10 Fusion got its name from a new architecture that managed tasks between a pair of performance cores and a pair of efficiency cores, enabling flexibility between running at full power and at an efficient idle.

This year, Apple is touting its "second-generation performance controller," designed to scale tasks across more low-power cores, or to surge the workflow to its even faster high-power cores, or even light up the entire 6 core CPU in bursts. Using asymmetric multiprocessing, the A11 Bionic can ramp up to activate any number of cores individually, in proportion to the task at hand.

Scaling a queue of incoming tasks across multiple cores requires more than just multiple cores on the SoC; apps and OS features have to be designed to take advantage of those multiple cores. That's something Apple has been working on at the OS level, and with its third-party developers, for years before the iPhone even existed.

Apple has detailed its software OS strategies oriented around turning off unnecessary processor units and efficiently ordering processes so they can be scheduled to run as quickly and efficiently as possible. It's now implementing those same kinds of practices in silicon hardware. Other mobile device makers, including Samsung and LG, have never needed to develop their own PC OS platforms.

Google, which adapted Android from its origins as a portable (JavaME) mobile platform, isn't selling it to users who pay for performance. It has no real tablet or desktop computing business, and its phone platform is aimed at hitting an average selling price of less than $300; Android One phones have an aggressive price target of $100. Android buyers are an audience for advertisers, not customers demanding UI polish, app performance or sophisticated features like multiprocessing support. Android apps are optimized to deliver ads.

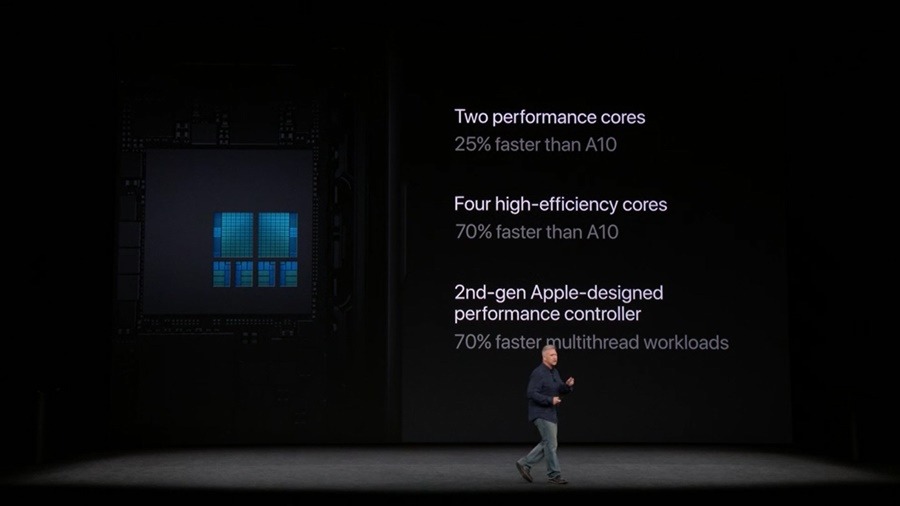

Apple states that the two performance-optimized general-purpose CPU cores of the A11 Bionic are up to 25 percent faster than those in last year's A10 Fusion; even larger gains come from its efficiency cores, which have doubled in number to four, and are now up to 70 percent faster.

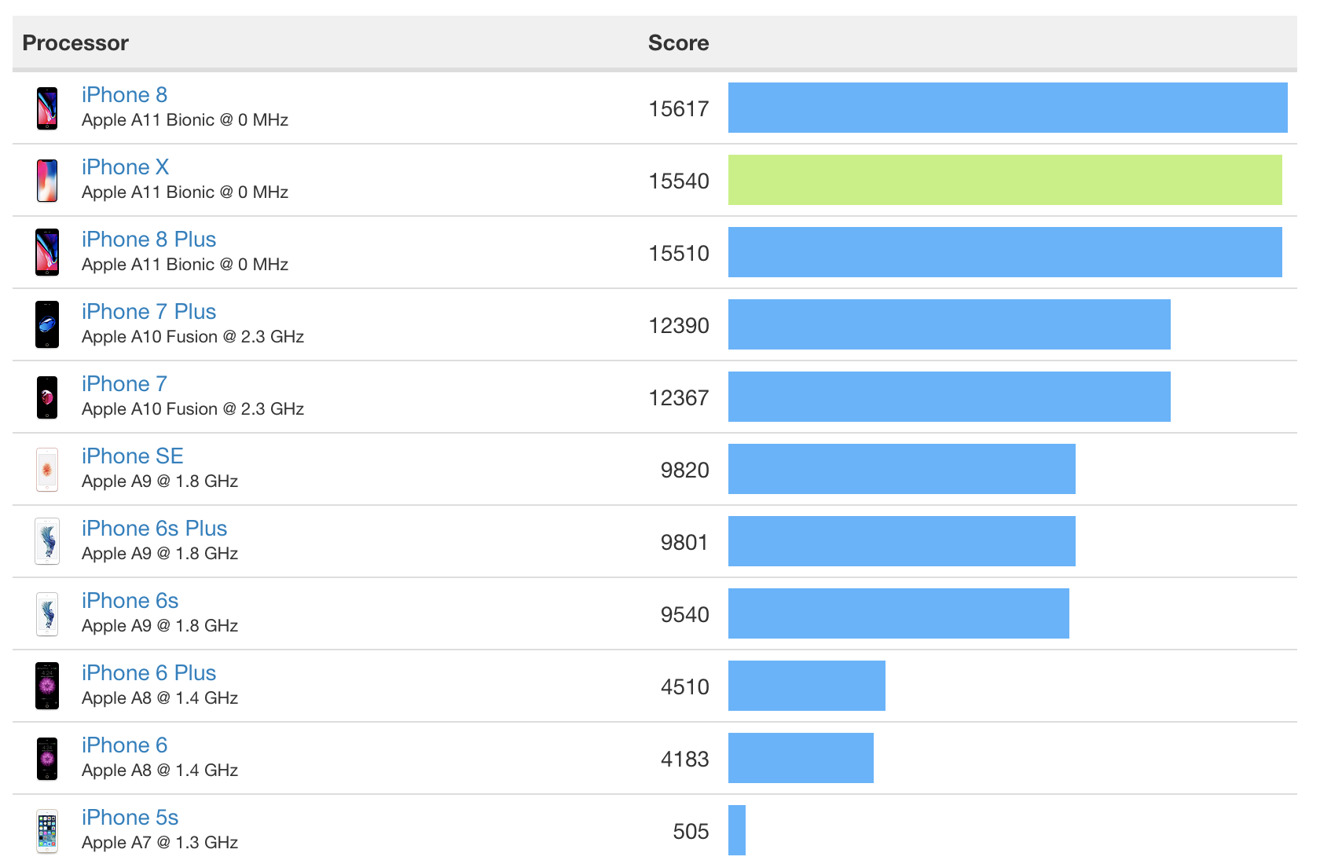

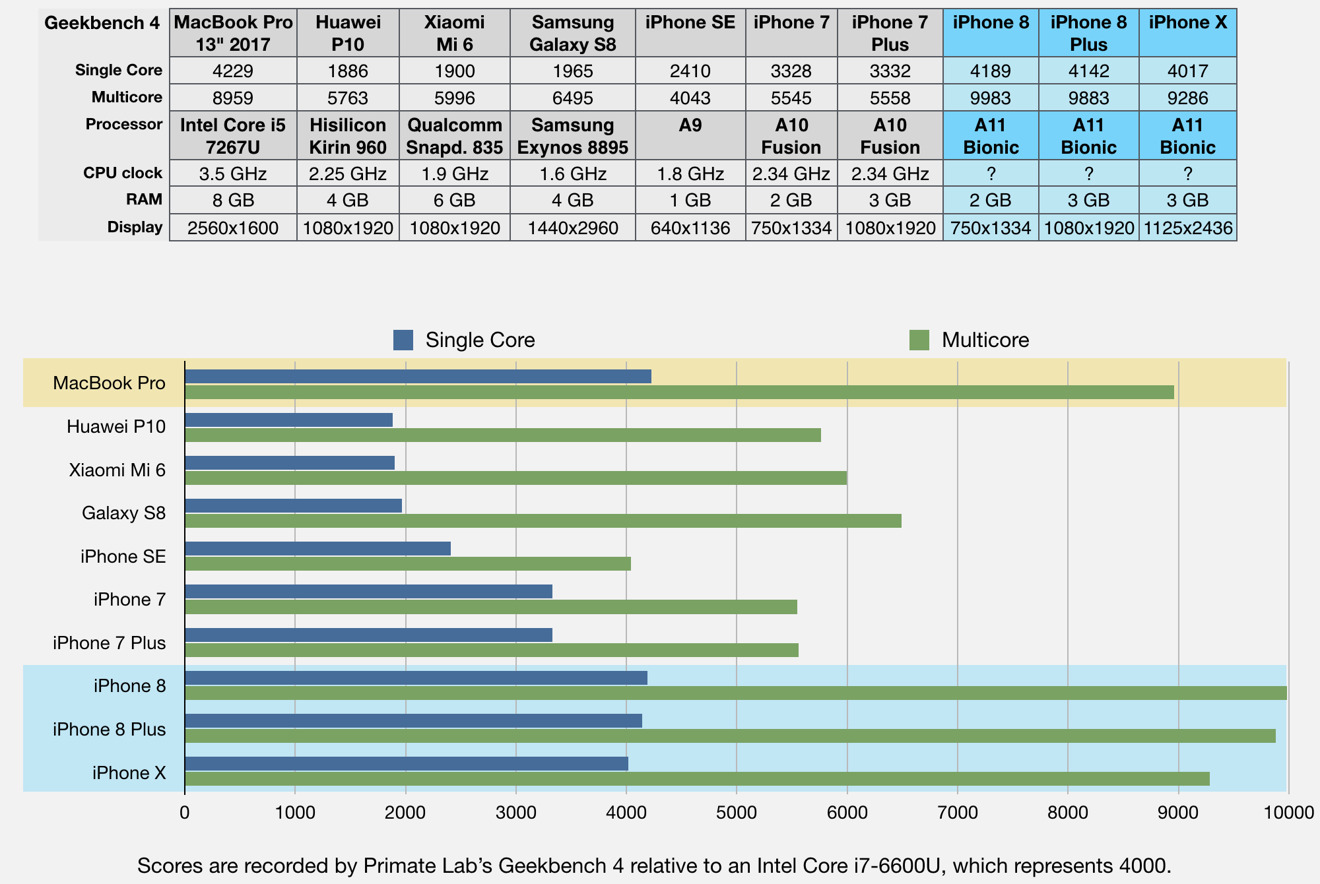

In Geekbench tests comparing the similarly specced iPhone 7 to iPhone 8 (they share the same RAM and same display resolution), the A11 Bionic scored 25 percent faster in single core and 80 percent faster in multicore scores.

This is particularly noteworthy because Apple's latest chip also delivers its new Neural Engine, GPU, camera ISP and other capabilities that are above and beyond what a generic processor benchmark effectively measures.

In stark contrast, Samsung has for years been marketing "octa-core" processors that are actually slower in per-core performance and run an OS that isn't optimized to take effective advantage of multiple cores in apps outside of benchmarks. Google itself once even bragged up its poorly built Nexus 7 at launch as having "basically 16 cores," (the sum of its CPU and GPU cores) a purely false, meaningless marketing claim that didn't make it any faster. It was actually not speedy to begin with and rapidly lost performance over time.

Rather than excessively bragging about its quantities of abstract technical specifications, Apple's marketing focuses on real-world applications, noting, for example, that the A11 Bionic is "optimized for amazing 3D games and AR experiences," claims that can be experienced every day by App Store visitors.

Separate from the CPU, Apple also designed the Secure Enclave in A7 to handle storage of sensitive data (fingerprint biometrics) in silicon walled off from the rest of the system. Apple said it made improvements in the A11 Bionic, but didn't discuss what these involved.

Secret sauce SSD silicon speeds, secures storage

There are also other specialized features of the A11 Bionic, including its super speedy SSD storage controller with custom ECC (error-correcting code) algorithms, as Johny Srouji, Apple's senior vice president of Hardware Technologies, detailed in an interview with Mashable. This isn't just for speed. "When the user buys the device," Srouji noted, "the endurance and performance of our storage is going to be consistent across the product."

In other words, data stored on the device (documents, apps, photos) is better protected from corruption and storage failure (as SSD cells wear out, literally), lowering the prospect of losing your memories and documents, and the frustration of having a device that mysteriously gets slower as time wears on. That's a common problem with many Android devices.
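Apple hasn't described its custom ECC algorithms, so the Python sketch below uses the textbook Hamming(7,4) code purely as a stand-in to show what error correction does: four data bits are stored with three parity bits, and a single flipped bit can be located and repaired on read. Production flash controllers use far stronger codes; the function names here are made up for this example.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits into a 7-bit codeword laid out as
    [p1, p2, d1, p4, d2, d3, d4] (positions 1 through 7)."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword):
    """Return (data_bits, error_position); error_position is 0 when the
    codeword is clean, else the 1-based position that was repaired."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_position = s1 + 2 * s2 + 4 * s4
    if error_position:
        c[error_position - 1] ^= 1  # flip the bad bit back
    return [c[2], c[4], c[5], c[6]], error_position


# Simulate one worn-out cell flipping a stored bit.
original = [1, 0, 1, 1]
stored = hamming74_encode(original)
stored[4] ^= 1  # corrupt position 5
recovered, repaired_at = hamming74_decode(stored)
print(recovered, repaired_at)
```

The decoder returns the original four bits and reports that position 5 was repaired. This simple code corrects only one bad bit per codeword, which is one reason real storage controllers rely on heavier schemes.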

Apple first introduced its own custom NVMe SSD storage controller for 2015 MacBooks, enabling it to optimize the hardware side of reading and writing from Solid State Storage (i.e., chips, rather than spinning hard drives).

The company then brought that technology to its iOS devices within the A9, starting with iPhone 6s. NVMe was originally created with the enterprise market in mind, rather than consumer electronics. There are no off-the-shelf solutions for adding an NVMe controller to a phone, and there are cheaper existing (albeit archaic) protocols to access SSD storage. Apple built and wrote its own.

A11 Bionic delivers what is apparently Apple's third-generation iOS storage controller. What's more interesting is that Apple didn't even talk about this on stage, because it had too much else to talk about that was even sexier.

A new Apple-designed video encoder

Two years ago, Apple's A9 introduced a hardware-based HEVC decoder, enabling devices to efficiently play back H.265 / "High Efficiency" video content. Last year's A10 Fusion introduced a hardware encoder, enabling iPhone 7 to create and save content in the format.

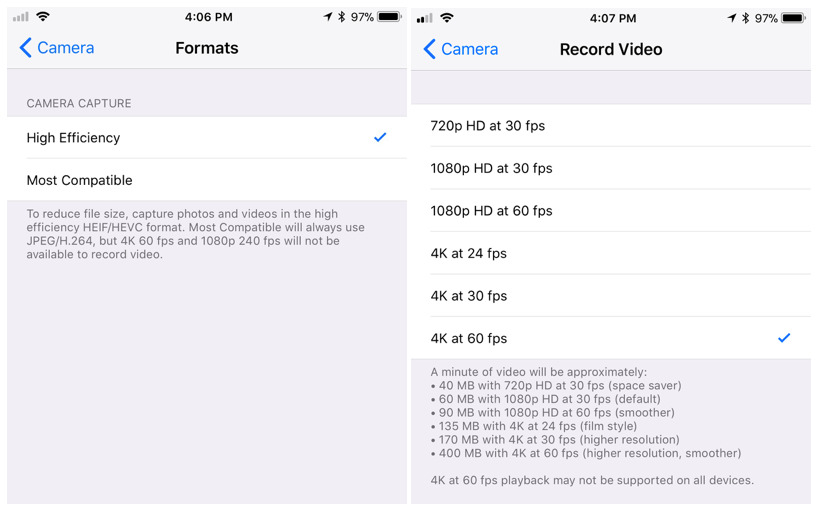

The new feature was made available in iOS 11, and is exposed as a preference in Camera Settings for "High Efficiency camera capture." When turned on, photos are compressed using HEIF (High Efficiency Image File Format) and video is recorded using HEVC (High Efficiency Video Coding).

The advantage with these High Efficiency formats is that they greatly reduce the space taken up by high-resolution photos and video.

Apple states that a minute-long 4K 30fps video recorded in the new HEVC format will be about 170MB, while the same thing using the previous H.264 would be 350MB— more than twice as large.
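Those two figures can be turned into average bitrates and a savings percentage with simple arithmetic. The Python sketch below assumes decimal units (1 MB is 1,000,000 bytes and 1 Mbps is 1,000,000 bits per second), which Apple doesn't specify; the function name is made up for this example.

```python
def average_bitrate_mbps(megabytes_per_minute):
    """Average bitrate in megabits per second for a one-minute clip,
    assuming decimal megabytes and megabits."""
    return megabytes_per_minute * 8 / 60


HEVC_MB_PER_MIN = 170  # Apple's figure for 4K 30fps in HEVC
H264_MB_PER_MIN = 350  # Apple's figure for 4K 30fps in H.264

hevc_mbps = average_bitrate_mbps(HEVC_MB_PER_MIN)
h264_mbps = average_bitrate_mbps(H264_MB_PER_MIN)
size_ratio = H264_MB_PER_MIN / HEVC_MB_PER_MIN       # H.264 size relative to HEVC
space_saved = 1 - HEVC_MB_PER_MIN / H264_MB_PER_MIN  # fraction saved by HEVC

print(f"HEVC:  {hevc_mbps:.1f} Mbps")
print(f"H.264: {h264_mbps:.1f} Mbps")
print(f"H.264 is {size_ratio:.2f}x the size; HEVC saves {space_saved:.0%}")
```

That works out to roughly 23 Mbps for HEVC against roughly 47 Mbps for H.264, a saving of a little over half the space per minute of footage.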

To play this HEVC content back, a device needs to be able to decode it. iOS devices with chips earlier than the A9 can decode in software, but this takes longer and has a larger hit on the battery than having efficient, dedicated hardware decoding.

HEVC video can be transcoded to H.264 (which takes conversion time), or users can default to "Most Compatible," which continues to save photos as JPG and videos in H.264. However, this disables the new video recording options for capturing 4K video at 60fps (as well as the 24fps cinematic setting new to A11 Bionic iPhones).

It is interesting that Apple developed its own video encoder for the A11 Bionic, and also that it made this fact public. In the past, Apple has used off-the-shelf components in its iPods and other devices that incorporated support for a variety of proprietary audio and video codecs, including Microsoft's WMA, WMV and VC-1. Apple didn't activate this capability, preferring instead to use industry standards developed by MPEG LA partners.

It's not clear if Microsoft got licensing royalties from the Windows Media IP on the chips Apple bought, but the larger issue was that Apple had to pay for components with stuff it didn't want to use. By building its own video encoder, it can optimize for only the formats it supports, rather than the generic package of codecs its chip providers select.

Google's YouTube initially partnered with Apple to provide H.264 video content to iOS users. But the company has since tried to advance its own VP8 and VP9 codecs acquired from On2. While it continues to send H.264 video to iOS users, it is not publishing its higher resolution 4K YouTube content in H.264 or the new H.265/HEVC, which makes YouTube 4K unavailable to Safari users on the web.

This has also created the narrative that Apple TV 4K "can't play YouTube 4K content," when in reality it is Google that is refusing to provide the content Apple TV 4K is designed to decode. It remains to be seen how that will work out, and whether Google will also refuse to support 4K on iOS devices going forward.

Having an efficient, optimized HEVC encoder on iPhone 7, 8 and X (along with iPad, Apple TV and recent generations of Intel Core processors used in Macs) means that users will be able to store far more photos and videos— generally the largest storage hog— in less space. It would also conceivably reduce wear on SSD storage, because there's half as much to write out, move around and subsequently erase.

However, another thing that HEVC makes possible is recording higher frame rate content. iPhone 8 and X can now enable capture of 4K video at 60fps, for smoother camera pans. Existing 4K video on iPhone 7 is sharply detailed, but if your camera or the subject moves too quickly, it can have a jittery effect. With smooth 60fps capture, videos look a lot better.

However, that's twice as many frames, meaning that without more advanced compression, a minute of video would consume about 800MB. Using HEVC, the end video is comparable in size to 4K 30fps capture. Note that 60fps video in HEVC requires significant processing power or a dedicated hardware decoder to play. Older Macs already have trouble playing existing 4K clips from iPhone 7.

One of the remaining interesting facets of the A11 Bionic is that if you stack together the parts of the chip that Apple detailed, there's a whole lot of surface area on it that remains completely mysterious.

Daniel Eran Dilger


119 Comments

Awesome article DED, as always you don’t disappoint.

Any iPhone or Apple naysayers are just ignorant and need to be educated on the beasts that run inside these phones. What they fail to realise is the fact that since Apple design both the hardware and the software, they are truly optimised to work hand in hand offering the best performance. Hats off Apple!

Daniel is getting mellow in his old age. I didn’t figure him for the author until half way through the article.

Good stuff.

It will be interesting to see what ChipWorks comes up with when they dismantle the A11, although mostly I'm interested in the size of the die itself.