Between 2006 and 2013, AMD & Nvidia fumbled the ball in mobile chips, losing their positions as the world's leading GPU suppliers by failing to competitively address the vast mobile market, enabling Apple to incrementally develop what are now the most powerful mainstream Application Processor GPUs to ship in vast volumes. Here's how it happened, the lessons learned and how Apple could make it happen again.

Building upon How Intel lost the mobile chip business to Apple, a second segment examining how Apple could muscle into Qualcomm's Baseband Processor business, and a subsequent segment After eating AMD & Nvidia's mobile lunch, Apple Inc could next devour their desktop GPU business, this article examines:

How AMD & Nvidia lost their mobile GPU business, just like Intel

Apple developed its own mobile GPU without help from AMD or Nvidia, much the same way it created mobile CPUs without help from Intel. In both cases, Apple's silicon design team adapted existing, licensed processor core designs: CPU cores from ARM, and PowerVR GPU cores from Imagination Technologies.

In a sort of bizarrely parallel fashion, both PowerVR and ARM originated as desktop PC technologies 20 to 30 years ago, then jumped into the mobile market after a decade of struggling for relevance in PCs. Both subsequently flourished as mobile technologies thanks to limited competition, and both were selected by Apple as best of breed technologies for the original iPhone in 2007.

Due in large part to rapid technology advances funded by heavy investment from Apple (thanks to its profitability and economies of scale), both ARM and PowerVR have now become mobile technology leaders capable of shrugging off the competitive advances of some of the same vendors that previously had squeezed them out of the desktop PC market.

Intel's parallel failure to foresee the potential of Apple's iPhone and adequately address the mobile market— which allowed ARM to jump virtually unimpeded from basic mobile devices to sophisticated smartphones and tablets— has so much in common with the history of mobile GPUs that it's valuable to compare How Intel lost the mobile chip business to Apple's Ax ARM Application Processors, which also details how Apple rebuilt its silicon design team after the iPhone first appeared.

Looking at the evolution of GPUs provides similar insight into the future and helps to explain how Apple leapfrogged the industry over the past decade— particularly in mobile devices— in the commercial arena of specialized graphics processors that were once considered to be mostly relevant to video games.

From the origins of GPUs to the duopoly of ATI and Nvidia

The first consumer systems with dedicated graphics processing hardware were arcade and console video games in the 1980s. Commodore's Amiga was essentially a video game console adapted to sell as a home computer; it delivered advanced graphics and video the year after Apple shipped its original Macintosh in 1984. The Mac opened up a professional graphics market for desktop publishing and CAD, among the first non-gaming reasons to buy expensive video hardware.

While Apple and other early PC makers initially developed their own graphics hardware, two companies founded in 1985— ATI and VideoLogic— began selling specialized video hardware, initially for other PC makers and later directly to consumers, to enhance graphics performance and capabilities.

By 1991 ATI was selling dedicated graphics acceleration cards that worked independently from the CPU. By enhancing the gameplay of titles like 1993's "Doom," ATI's dedicated video hardware began driving a huge business that attracted new competition. In 1993, CPU designers from AMD and Sun founded Nvidia, and the following year former employees of SGI created 3dfx; both firms (along with several smaller competitors of the era) targeted the rapidly growing market for hardware accelerated graphics.

Microsoft identified video games— and diverse video card support— as strategic to driving sales of Windows 95. Competition between Microsoft's hardware-abstracted DirectX (and SGI's existing OpenGL) and faster, bare metal APIs driving graphics cards like those from 3dfx initially gave an advantage to the latter, but as abstracted graphics APIs improved there was a brutal shakeout among video card vendors that mirrored the mass extinction of alternative computer platforms (apart from Apple, barely) when Windows arrived.

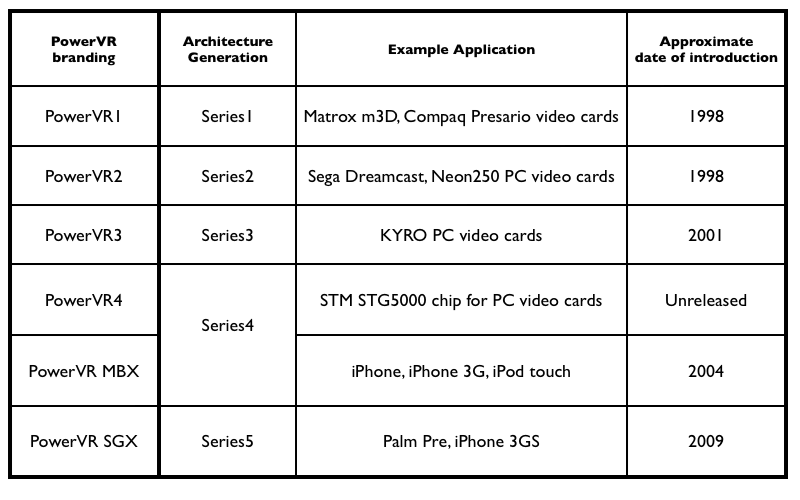

Two early casualties of that consolidation were 3dfx and VideoLogic's unique PowerVR technology. VideoLogic licensed its technology to NEC to develop graphics for Sega's 1998 Dreamcast games console, and the success of that arrangement prompted the company to exit the PC video card business and pursue a strategy of licensing its technology to other companies instead. In the process, it changed its name to Imagination Technologies.

Conversely, after 3dfx lost that Dreamcast contract, Nvidia bought up the remains of the once-leading but now struggling company, leaving just two major PC video card vendors by the end of the 1990s: ATI and Nvidia. In 1999, Nvidia marketed its new GeForce as "the world's first GPU," and while ATI attempted to call its own advanced, single chip "visual" processor a "VPU," the label coined by Nvidia stuck.

Apple jumps on GPUs

Apple, still struggling to survive as a PC maker in the mid-90s, began bundling third party video cards in its high-end Macs rather than trying to duplicate the work of developing all of its own advanced graphics hardware. After the return of Steve Jobs in 1997, Apple expanded its efforts to take advantage of the rapid pace of new graphics technology available to PCs, integrating ATI's Rage II 3D graphics accelerators into the design of its PowerMac G3, and in the new iMac the next year.

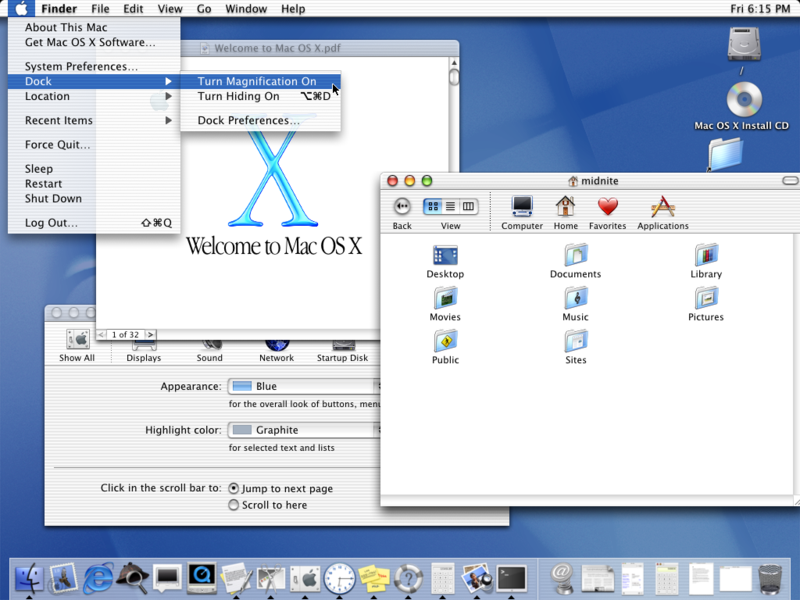

When Jobs introduced the new architecture of Mac OS X, he emphasized two important new strategies related to graphics and GPUs. First, rather than trying to maintain development of its own 3D graphics API, Apple would now back the industry standard OpenGL, giving it the flexibility to support standard hardware from both ATI and Nvidia, and allowing the company to easily switch back and forth between the two GPU vendors in its Macs.

Secondly, the entire user interface of the new OS X would be rendered via an advanced new Quartz graphics compositing engine using technologies developed for video games. This enabled smooth animations, live video transforms, alpha translucency and shadows, and eventually full 3D video hardware acceleration, a huge step beyond the simple 2D pixel grid of the Classic Mac OS or Microsoft's Windows.

Rather than GPUs only being used to play video games and select graphics-intensive programs, Macs were now leveraging the GPU to make the entire computing environment "magical," responsive and visually appealing. Despite a long lead in gaming, Microsoft didn't catch up with Apple in delivering a similarly advanced graphics engine until Windows Vista at the very end of 2006, providing Apple with a half decade of strongly differentiated computers.

However, Apple remained distantly behind Windows in video games, in part because the money driving gaming was flowing into portable game or home consoles on the low end, or high-end PC gaming that was deeply entrenched in Microsoft's proprietary technologies, particularly its DirectX graphics APIs. The relatively small installed base of Mac users simply wasn't big enough to attract big game developers to the platform.

That had the side effect of keeping the best high-end desktop GPUs exclusive to Windows PCs, and erected a Catch-22 where the lack of games was both a cause and an effect of not having the best GPU technology on the Mac platform. That, in turn, hurt Mac adoption in other graphically intensive markets such as high-end CAD software.

Profits solve problems: 2000-2006

In the 2000s, Jobs' Apple pursued three strategies that reversed those issues. The first was iPod, which dramatically expanded Apple's revenues, shipping volumes and its visibility among consumers in retail stores funded by and displaying iPods. The second was its 2005 migration to Intel, which enabled Macs to run Windows natively and enabled developers to easily port popular PC games to the Mac. The third was iPhone, which essentially turned OS X into a handheld device that shipped in even greater volumes than iPod had, generating tens of billions of dollars in free cash flow.

iPod was essentially a hard drive connected to a self-contained ARM-based component developed by PortalPlayer, a fabless chip designer that had been shopping around a media player concept. Across the first six generations of iPods, Apple was buying 90 percent of PortalPlayer's components. However, after the supplier failed to deliver in 2006, Apple abruptly dropped the company and sourced a replacement ARM chip from Samsung for the final iPod Classic. That was the same year Apple also moved its Macs from PowerPC to Intel after IBM similarly failed to deliver the processors Apple wanted.

The following year another reason for Apple's jump to Samsung became apparent: the iPhone. Samsung was on a very short list of chip fabs on earth with the capability to deliver reliable, high-volume supplies of state of the art Application Processors.

Samsung, along with Texas Instruments and Intel's XScale group, additionally had the ability to deliver both advanced ARM processor cores and PowerVR mobile graphics from Imagination. Samsung already had proven itself to Apple as a memory and hard drive supplier, while Intel simply declined Apple's business.

GPU plays a key role in iPhone

After Apple dumped PortalPlayer, Nvidia acquired the firm at the end of 2006, reportedly with the hope that it could win back Apple's iPod contract. But Apple had grander aspirations than simply building the sort of embellished media player that Nvidia aimed to power with its upcoming Tegra chip.

Apple's iPhone was intended to not only run a complete, mobile-optimized OS X environment on its CPU, but to also host a richly animated, GPU-accelerated UI conceptually similar to (but even easier for developers to use than) the Quartz engine OS X had delivered a half-decade earlier.

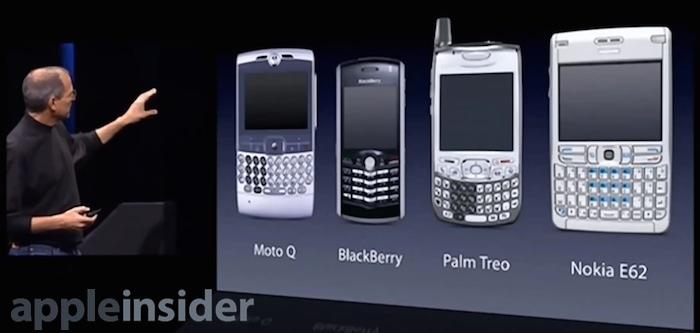

Just as OS X had leaped five years ahead of Windows by leveraging GPUs, the new mobile iOS environment would similarly leap far ahead in both respects over existing smartphones. Nokia's Symbian, Palm OS and BlackBerry were essentially glorified PDAs or pagers; and Sun's JavaME, Google's Android and Qualcomm's BREW (which it had licensed to Microsoft) were all equally simplistic JVM-like environments.

The only company that had even considered scaling down a real desktop operating system for use in mobile devices was Microsoft, and its Windows CE had only directly carried over the part that made the least sense to port: the desktop UI it had appropriated from Apple a decade earlier, with its windows, mouse pointer and desktop file icons. That desktop UI would be essentially the only part of the Mac that Apple didn't port to the iPhone.

GPU focus kept iOS ahead of Android

As it turned out, Apple's "desktop class" mobile OS and development tools— which required a significantly powerful mobile processor— were an important aspect of the iPhone's success and its ability to outpace rival efforts to catch up. None of the competing mobile platforms that were being sold when iPhone arrived have survived.

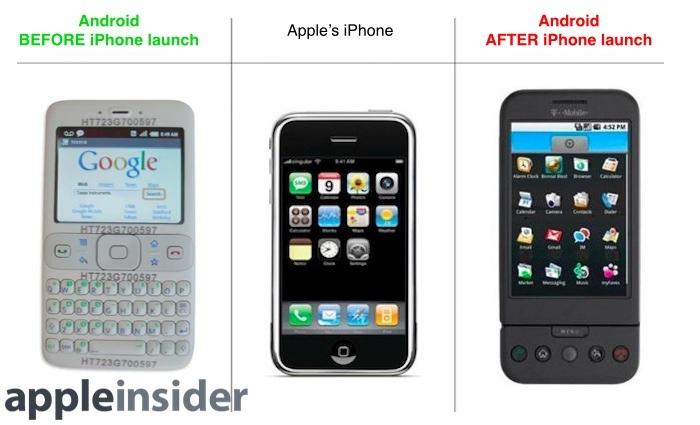

However, the richly intuitive, visually attractive and simply fun to use graphical environment of iOS— with critical acceleration from the GPU— was equally important in driving intense curiosity and rapid sales of Apple's new phone. Google in particular missed all of that completely.

Despite seeing what Apple had introduced and rushing back to the drawing board to more closely copy iPhone, Google left Android with primitive graphics (just as Microsoft had with Windows) until Android 3.0 in 2011— and that release was so focused on copying the new iPad that it didn't address smartphones for another year until Android 4.0 shipped— essentially five years after Apple introduced iPhone.

The iPhone's strategic focus on GPU capabilities also had the side effect of making it great for playing games. Video games made up a large proportion of Apple's App Store sales from the beginning, turning around the company's reputation for "not getting video games." iPhone and iPod touch— along with the iPad in 2010— rapidly disrupted the market for standalone portable gaming devices.

AMD, Nvidia flub mobile GPUs

A couple years after Imagination had refocused its PowerVR graphics to target the emerging mobile devices market, graphics pioneer ATI followed suit, launching its mobile Imageon product line in 2002. ATI was subsequently acquired by AMD in 2006, principally as part of a strategy to integrate ATI's desktop graphics with AMD's CPUs in a bid to win back x86 market share from a resurgent Intel.

By 2008, AMD decided to get rid of Imageon and sold it to Qualcomm, which renamed the mobile GPU as Adreno and incorporated it into its new Snapdragon Application Processors. That was a reversal of Qualcomm's previous, myopic BREW "feature phone" strategy, a move no doubt inspired by the success of Apple's iPhone and the powerful combination of its mobile CPU and GPU.

At the same time, while Nvidia had been keeping up with ATI in desktop graphics, it too had completely missed mobile. The company appeared to realize this with its development of Tegra, which initially aimed at powering a video-savvy, iPod-like product. Rumors in 2006 suggested that Nvidia had won Apple's business, but Nvidia ended up unable to even ship its first Tegra until 2009, when Microsoft used the new chip to power its Zune HD, two years behind Apple's iPod touch.

Apple begins designing custom mobile chips

By that point, Apple was already deeply invested in building its own internal silicon design team. It had acquired PA Semi in 2008 and had secretly lined up architectural licenses that enabled it to not simply arrange outside CPU and GPU core designs (called "Semiconductor Intellectual Property" or SIP blocks) on a chip, but actually to begin making significant core design modifications.

Apple's chip fab, Samsung LSI, continued manufacturing parts for iOS devices, but was itself continuing to build generic ARM chips for other companies, typically paired with basic graphics rather than the more expensive and more powerful PowerVR GPUs that Apple ordered.

Basic graphics generally means ARM Mali, a GPU project that started at the Norwegian University of Science and Technology and was spun off as Falanx to make PC cards before running out of funding and subsequently selling itself to ARM in 2006.

Samsung doesn't care for its own chips

Even though Samsung had been supplying Apple with ARM chips for iPods since 2006, it selected Nvidia's Tegra to power its own M1 iPod-clone in 2009.

That same year, Samsung also introduced its Symbian-powered Omnia HD flagship phone, which it positioned against Apple's six-month-old iPhone 3G. But rather than also using its own chips, Samsung selected Texas Instruments' OMAP 3, which featured a next-generation Cortex-A8 CPU and PowerVR SGX530 graphics. The new Palm Pre and Google's Android 2.0 flagship the Motorola Droid also used an OMAP 3.

At the time, there was a lot of talk that Apple's iPhone 3G would be blown out of the water by one of the phones using TI's more advanced OMAP 3 chip. Apple's Samsung chip had an earlier ARM11 CPU and simpler PowerVR MBX Lite graphics. However, that summer Apple released iPhone 3GS with an even more advanced SGX535 GPU, retaining its lead among smartphones in hardware specs while also offering a superior, GPU-optimized UI over its Android competitors.

Apple's A4 vs. Samsung's 5 different CPUs & GPUs

Six months later at the start of 2010, Apple released A4, created in collaboration with Samsung (which marketed it as Hummingbird, S5PC110 and later rebranded it as Exynos 3). Apple used the chip in its original iPad and iPhone 4. Samsung used it in its original Galaxy S and the Google-branded Nexus S, as well as its Galaxy Tab.

While Samsung's new tablet didn't reach the market until 11 months after the iPad it copied, the two smartphones reached the market before iPhone 4, providing Samsung with a home field advantage as the manufacturer of Apple's chips.

However, because Samsung was basing its device designs upon Apple's, the Galaxy S looked like the previous year's iPhone 3GS, eventually touching off Apple's lawsuit aimed at stopping Samsung from cloning its products.

Early the next year, Apple released a CDMA version of iPhone 4 to launch on Verizon Wireless. It retained the same A4 Application Processor but substituted its Infineon Baseband Processor with a Qualcomm one, making the difference essentially invisible to app developers and end users.

Samsung released five subsequent versions of its original Galaxy S, but those sub-model variants each used a different CPU and GPU: two different generations of Qualcomm's Snapdragon with different Adreno graphics; an ST-Ericsson CPU paired with ARM Mali graphics, and a Broadcom CPU paired with a VideoCore GPU. That's four different CPU architectures and GPU designs (each with significantly different capabilities) on five versions of the same brand name.

Apple's A5 vs. Samsung's even more CPU/GPUs

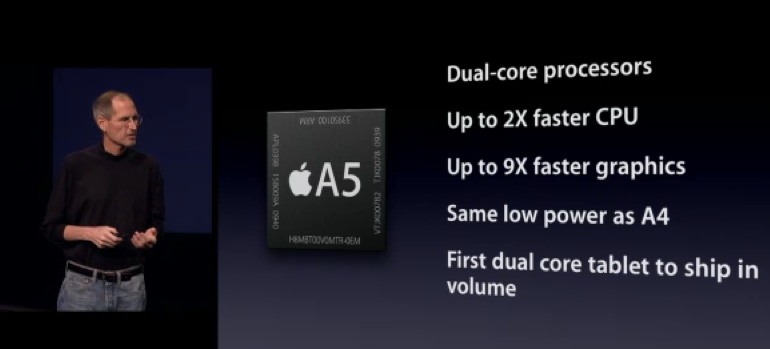

In March 2011 Apple launched its new iPad 2 powered by a dual-core A5 with dual-core SGX543 graphics. Steve Jobs noted during its introduction that Apple would be the first company to ship a dual-core tablet in volume, and emphasized that its new GPU would deliver up to 9 times faster graphics at the same power consumption as A4. As it had the previous year, Apple later repurposed the iPad's A5 in iPhone 4S.

Google had just released its own tablet-specific Android 3.0 Honeycomb, exemplified by the Motorola Xoom powered by Nvidia's new Tegra 2.

Samsung— which had annoyed Google by launching its original Galaxy Tab the previous fall in defiance of its request that licensees not ship Android 2.x tablets prior to the Android 3.0 launch— also unveiled its own Android 3.0 tablet, the "inadequate" Galaxy Tab 10.1. Bizarrely, it also used Nvidia's Tegra 2 rather than Samsung's own chips.

Unlike A4, Apple's new A5 didn't have a Samsung twin. Instead, Samsung created its own Exynos 4 using lower-end Mali graphics. It used the chip in one version of its Galaxy SII, but also sold three other versions of that phone: one with an OMAP 4 with PowerVR graphics; a second with a Broadcom/VideoCore chip; and a third using a Snapdragon S3 with Adreno graphics.

GPU fragmentation takes a toll on Android, hardware vendors

While Apple was optimizing iOS to work on one GPU architecture, Google's Android was attempting to support at least five very different GPUs that shipped just in Samsung's premium flagship model, in addition to a bewildering variety of other variations from Samsung and other, smaller licensees.

And because Apple was by far selling the most tablets and phones with a single graphics architecture, it could invest more into the next generation of graphics technology, rather than trying to support a variety of architectures that were each selling in limited quantities.

Additionally, the best GPUs among Android products were often seeing the fewest sales, because licensees were achieving volume sales with cheap products. That forced Google and its partners to optimize for the lowest common denominator while higher quality options were failing to achieve enough sales to warrant further development.

The failure of a series of OMAP-based products clearly played a role in Texas Instruments' decision to drop future development and exit the consumer market in 2012, and Nvidia later followed suit in smartphones.

While Apple's premium volume sales were driving investment in new high-end, state of the art GPU development, the majority of Android devices were low-end products with budget-oriented Mali graphics. Although those devices sold in volume, the market they created was clearly demanding cheap new Mali designs that could be used in the basic products of the future.

Apple's A5X & A6: fast, not cheap

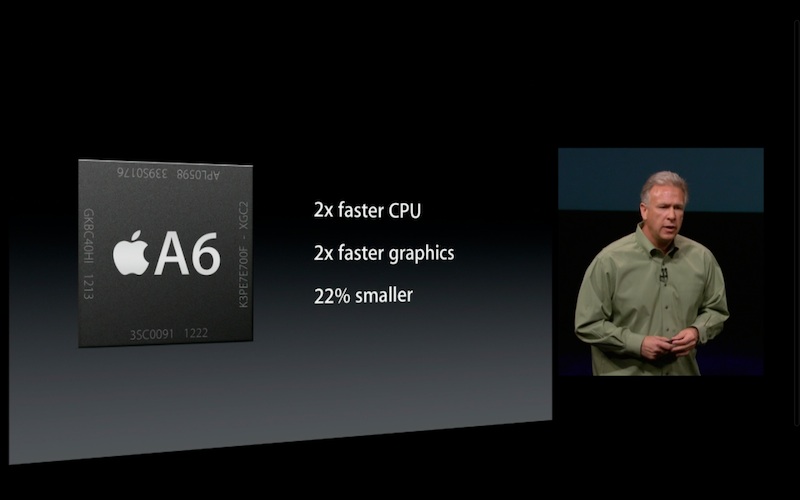

That became evident the following year when Apple launched the "new iPad" with 2,048x1,536 Retina Display resolution, powered by an A5X with a quad-core PowerVR SGX543MP4 GPU. That fall, Apple followed up with an even more powerful A6X for the enhanced iPad 4, featuring a new GPU: PowerVR SGX554MP4. The new iPhone 5 used A6, which also delivered twice the graphics performance of the earlier A5.

While Apple pushed upmarket, Google and its Android licensees rapidly raced to the bottom in 2012. Google's Tegra 3-powered Nexus 7 tablet made various sacrifices to reach an incredibly low target price of $199, resulting in a severely flawed product with serious software defects that weren't addressed until a year after the product shipped.

However, the tech media showered the product (and its 2013 successor) with accolades for its low cost, further establishing among buyers that Android tablets were cheap devices with poor performance, in stark contrast to the reputation Apple had been establishing with more expensive products that were reliable, supported and had fast, smooth graphics because Apple wasn't using substandard components to reach an impressive price target.

Apple's A7, A8: 64-bit, Rogue 6 GPUs

That set the stage for Apple to introduce the A7-powered iPad Air the following year, with faster 64-bit performance in a thinner body without sacrificing battery life. The performance of the GPU also increased so dramatically that Apple didn't need to create a separate version of the chip for iPad; that allowed the company to focus on the transition to 64-bit, which further pushed ahead Apple's performance lead.

The differentiation afforded by last year's A7 forced Google to reverse its cheap tablet strategy with 2014's Nexus 9, selecting the only competitive processor option left (given the departure of TI's OMAP): Nvidia's Tegra K1. That required the company to double its asking price to $399, amid a sea of extremely cheap Android tablets that appear to cost as little as $50 (but are all defective).

In parallel, Apple introduced Metal, and leading developers launched titles to take advantage of the new API, which enables games and other graphics-intensive apps to bypass the overhead of OpenGL to take direct advantage of the advanced GPUs in Apple's latest 64-bit Application Processors sold over the last year.

Apple already had a graphics speed advantage very evident in mobile gaming; with Metal that advantage is increased by up to a factor of ten.

Last year, after Blizzard launched its "Hearthstone: Heroes of Warcraft" title for iOS, the game's production director Jason Chayes explained to Gamespot why it was so difficult to port games to Android.

"Just the breadth of different screen resolutions," he said, "different device capabilities— all of that is stuff we have to factor in to make sure the interface still feels good."

While Apple's GPU advantage is particularly apparent in gaming, the company profiled a series of apps involving image correction and video editing to show how Metal can not only increase performance, but actually enable tasks that simply weren't possible before on mobile hardware.

What's next for AMD & Nvidia?

Nvidia can't be pleased to have its fastest tablet chip just "nearly as fast" as Apple's A8X (without considering Metal), while having nothing to sell in the smartphone market, and no plans to address that much larger potential market anytime soon.

AMD, meanwhile, not only lacks a mobile GPU in the same ballpark but lacks any market presence, putting it in a position just behind Intel, which is at least paying companies to use its products (and losing over $4 billion a year to do that).

The fact that the world's two deeply established GPU leaders (as well as Intel and Texas Instruments, the world's two co-inventors of the microprocessor) have all been effectively pushed out of the mobile business by Apple, leaving nothing but low-end commodity part makers to compete against Apple's high-end, high-volume sales of iOS devices, says something remarkable.

It also indicates that whatever Apple plans to do next should probably be taken seriously, certainly more so than the marketing claims of firms like Samsung, Microsoft, Google and Nvidia, which have been waving lots of bravado around but failing to deliver.

In addition to Qualcomm the next segment explores: After eating AMD & Nvidia's mobile lunch, Apple Inc could next devour their desktop GPU business.

Daniel Eran Dilger

65 Comments

A nice overview, with an impressive timespan going all the way back to the Amiga 500.

AMD provide the GPUs for both the Xbox One and Playstation 4, so they're not going anywhere. And Nvidia is favored by PC owners which, due to sheer computing power, is still the best experience for game lovers. "PC Master Race" as they say. So Nvidia aren't going anywhere either. Unless gaming goes fully mobile of course.

But I think the future holds a bifurcation, with mobile gaming evolving into Augmented Reality games and Desktop gaming evolving into full Virtual Reality (headset?) games. Since Virtual Reality is ultimately more flexible than Augmented Reality, allowing more artistic expression, in the long run the majority of gaming will move back to the desktop. So AMD and Nvidia just need to stick to their desktop guns and be the first to make a GPU powerful enough to run one of these VR headsets at your desk.

Samsung doesn't care for its own chips

Even though Samsung had been supplying Apple with ARM chips for iPods since 2006, it selected Nvidia's Tegra to power its own M1 iPod-clone in 2009. That same year, Samsung also introduced its Symbian-powered Omnia HD flagship phone, which it positioned against Apple's six month old iPhone 3G. But rather than also using its own chips, Samsung selected Texas Instruments' OMAP 3, which featured a next generation Cortex-A8 CPU and PowerVR SGX530 graphics. The new Palm Pre and Google's Android 2.0 flagship the Motorola Droid also used an OMAP 3.

The main reason for Samsung Mobile being so vendor-neutral is that Samsung Semiconductor treated them as just another customer. No special discounts, no special relationship, no input into Samsung Semiconductor's roadmap. This has changed only recently.

As for the case of the Omnia HD specifically, the reason to go with OMAP was simple - Symbian was already optimised for OMAP processors. The effort required to move to TI's most advanced chip was a lot less than moving to another vendor.

Why do app developers even bother with Android? With piracy rates on Android around 90% for popular apps it's like throwing your development dollars away. Even if Google provides a way to clean up the screen resolution and functional capabilities challenges in their fragmented ecosystem, until they clean up their act on allowing blatant and unobstructed thievery, any businesses who value their intellectual property and business investments are foolish to support Android. Sorry Google, but I much prefer a walled garden over an open cesspool.

Great article. Speaking of Macs, as noted in the article, GPU manufacturers have hurt Mac adoption in the past and continue to be Apple's Achilles heel to this day. I would love to see Apple's in-house expertise not only dominate mobile but also take the laptop market under their control. It is probably expecting too much to expect Apple to worry about the Mac Pro market segment though.

[quote name="ascii" url="/t/184466/how-amd-and-nvidia-lost-the-mobile-gpu-chip-business-to-apple-with-help-from-samsung-and-google#post_2665417"]A nice overview, with an impressive timespan going all the way back to the Amiga 500. AMD provide the GPUs for both the Xbox One and Playstation 4, so they're not going anywhere. And Nvidia is favored by PC owners which, due to sheer computing power, is still the best experience for game lovers. "PC Master Race" as they say. So Nvidia aren't going anywhere either. Unless gaming goes fully mobile of course. But I think the future holds a bifurcation, with mobile gaming evolving in to Augmented Reality games and Desktop gaming evolving in to full Virtual Reality (headset?) games. Since Virtual Reality is ultimately more flexible than Augmented Reality, allowing more artistic expression, in the long run the majority of gaming will move back to the desktop. So AMD and Nvidia just need to stick to their desktop guns and be the first to make a GPU powerful enough to run one of these VR headsets at your desk. [/quote] With all the fuss about VR again and now holograms, I was just thinking ... I wonder if this might be another of those marketplaces that Apple will keep out of for a few years ... then 'do it right'?