New research from Apple and Carnegie Mellon University delves into how smart devices could learn about their surroundings to better understand requests by knowing when and where they are being talked to.

Academics from Apple and Carnegie Mellon University's Human-Computer Interaction Institute have published a research paper describing how Siri and devices such as HomePod could be improved by having them listen to their surroundings. While many Apple devices listen, they are explicitly waiting to hear the phrase "Hey, Siri," and anything else is ignored.

It's the same with Alexa, or at least it is in theory, but these researchers advocate having smart devices actively listen in order to determine details of their environment — and what people are doing there.

"Listen Learner," they say in their paper, "[is] a technique for activity recognition that gradually learns events specific to a deployed environment while minimizing user burden."

Currently, HomePods automatically adjust their audio output to suit the environment and space that they are in. And Apple has filed patents that would see future HomePods using the position of people in a room to direct audio to them.

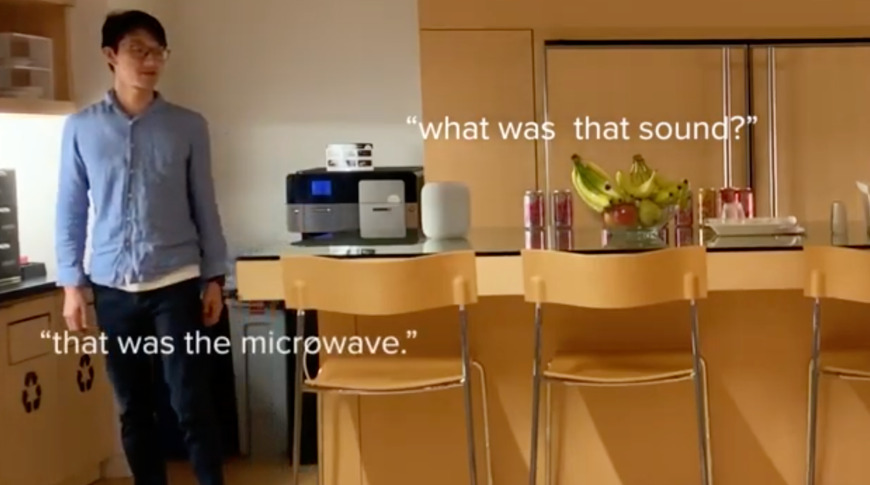

The idea behind this paper's research is that similar sensors could listen for sounds and detect where they are coming from. A device could then group them so that, for instance, it recognizes what direction the bleeps from a microwave are coming from. Understanding where someone is standing, and what noises are being heard from which directions, could make Siri better able to understand requests or to volunteer information.

"For example, the system can ask a confirmatory query: 'was that a doorbell?', in which the user responds with a 'yes,'" it continues. "Once a label is established, the system can offer push notifications and other actions whenever the event happens again. This interaction links both physical and digital domains, enabling experiences that could be valuable for users who are e.g., hard of hearing."

While the paper repeatedly and exclusively mentions HomePods, it is really concerned with any device with microphones. It suggests that since we all now have an ever-increasing number of devices capable of listening, we already have the tools to improve voice control.

In a video accompanying the paper, the researchers demonstrate how listening like this can improve accuracy, and also how it's more successful than previous attempts to train devices.

The paper, "Automatic Class Discovery and One-Shot Interactions for Acoustic Activity Recognition," proposes that a device be able to listen continuously, although "no raw audio is saved to the device or to the cloud." It keeps doing this, effectively creating labels or tags that are triggered by certain sounds, until it has heard a sound often enough to be confident it is a distinct event.

"Eventually, the system becomes confident that an emerging cluster of data is a unique sound, at which point, it prompts [the user] for a label the next time it occurs," explains the paper. "The system asks: 'what sound was that?', and [the user] responds with: 'that is my faucet.' As time goes on, the system can continue to intelligently prompt [the user] for labels, thus slowly building up a library of recognized events."

As well as a general "what sound was that?" kind of question, it might be able to guess and so try asking a more specific question. "The system might ask: 'was that a blender?'" says the paper. "In which [case the user] responds: 'no, that was my coffee machine.'"
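
The discover-then-ask loop the paper describes can be illustrated with a minimal Python sketch. This is not the researchers' algorithm, which the article does not detail. It substitutes a simple distance-threshold clustering over made-up two-dimensional feature vectors, and the class name `ListenLearnerSketch`, the `radius` and `min_count` parameters, and the action strings are all hypothetical.

```python
import math


class ListenLearnerSketch:
    """Toy online clustering of sound features; a stand-in, not the paper's method."""

    def __init__(self, radius=1.0, min_count=3):
        self.radius = radius        # assumed: max distance to join a cluster
        self.min_count = min_count  # assumed: sightings needed before prompting
        self.clusters = []          # dicts with "centroid", "count", "label"

    def observe(self, features):
        """Take one feature vector; return (action, cluster_id)."""
        best_id, best_dist = None, self.radius
        for i, cluster in enumerate(self.clusters):
            d = math.dist(cluster["centroid"], features)
            if d <= best_dist:
                best_id, best_dist = i, d
        if best_id is None:
            # Nothing similar heard before: start a new, unlabeled cluster.
            self.clusters.append(
                {"centroid": list(features), "count": 1, "label": None}
            )
            return ("ignore", len(self.clusters) - 1)
        cluster = self.clusters[best_id]
        cluster["count"] += 1
        n = cluster["count"]
        # Running mean: only a summary is kept, never the raw samples.
        cluster["centroid"] = [
            c + (x - c) / n for c, x in zip(cluster["centroid"], features)
        ]
        if cluster["label"] is not None:
            return ("notify: " + cluster["label"], best_id)
        if n >= self.min_count:
            return ("ask: what sound was that?", best_id)
        return ("ignore", best_id)

    def label(self, cluster_id, name):
        """Record the user's answer for a discovered cluster."""
        self.clusters[cluster_id]["label"] = name


learner = ListenLearnerSketch()
for sample in [(5.0, 5.0), (5.1, 4.9), (4.9, 5.2)]:
    action, cluster_id = learner.observe(sample)
print(action)                           # ask: what sound was that?
learner.label(cluster_id, "faucet")
print(learner.observe((5.0, 5.1))[0])   # notify: faucet
```

In this sketch the device stays silent the first two times it hears the sound, asks for a label on the third, and from then on reports the event by name, which mirrors the "library of recognized events" idea in the quote above.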

While the paper is chiefly concerned with the effectiveness of a device asking the user questions like this, the researchers explain that they also tried specific use cases. "We built a smart speaker application that leverages Listen Learner to label acoustic events to aid accessibility in the home," it says.

There is no indication of Apple or other firms integrating this idea into their smart speakers yet. Instead, this was a short-term focused test, and the team have recommendations for further research.

However, it's promising because they conclude that this test "provides accuracy levels suitable for common activity-recognition use cases," and brings "the vision of context-aware interactions closer to reality."

William Gallagher

16 Comments

If I were Tim Cook, I would invest big bucks in an "audio OS" skunkworks. The standard people "expect" is the computer on Star Trek and it's going to take a lot of additional effort to get there. Ironically Apple is now in the position they usually put their competitors in: they made a big splash by being an early market presence with Siri, but now Hey Google and Alexa are the top brands. It would be great to see Apple leapfrog itself and roll out a new Siri that puts all the current offerings to shame (like the original iPhone). That's not going to be cheap in terms of R&D. I'm sure Apple is investing $$$ now, but I'd invest even more.

For one thing, I'd set up a panel of volunteers who are told to act as if Siri can do anything and learn the heck out of what types of things people want from this. Hell, have a small army of humans acting as "virtual Siris" so the experience is realistic.

Here's a trivial example of something I experienced with Siri the other day. At the end of a board game, there can be a lot of math to calculate scores so I tried Siri. It worked! But she is rather annoying.

me: "Hey Siri what is 15 plus 21 plus 4 plus 45 plus 16 plus 9?"

Siri: "15 plus 21 plus 4 plus 45 plus 16 plus 9 is 110"

Using the Star Trek standard I should be able to interrupt with "just the answer" and she would omit echoing my question and just say 110. And the next time I ask she would also skip to the answer.

I know, we've had this conversation/debate 100 times already. I personally don't care if Siri is better than Alexa right now or not. The fact is this is like debating which pre-iPhone smart phone was the best. I'm sure Apple is working on this; I just hope they are really working on it.

The HomePod Mini is the one in your pocket!

/s

Apparently Apple invented nothing and HomePod is a knockoff of Alexa somehow.

Seriously though, it's a shame an argument even exists. The sad part is Apple already has technology that blows the Siri wannabes away but they haven't implemented it. Either because of technical issues or they're holding back for a special update/product, I do not know.

Might be good to get Siri to understand the absolute basics first. My HomePod was playing yesterday, and after a while I asked Siri to pause. I then asked Siri to set a timer for 20 minutes; all fine. I then asked Siri to resume. "The timer is already running." Ok, so I asked Siri to resume playing. "The timer is already running." ...Third try, Siri resume playing music. "Sorry, I can't do that on HomePod." Music playback wouldn't work until I rebooted the HP. That's just one example of many.

I find Siri's understanding of what you said is really good, it rarely makes a mistake. But then the comprehension is terrible.

Another really annoying thing is it's impossible to talk to a particular Siri. Sometimes if my watch is on the top of my wrist and I ask Siri how long is left on a timer for example, watch Siri pipes up and says there are no timers, but then HomePod Siri stays silent despite a timer running. Either we need to be able to assign devices names, or it needs to be something like "Hey HomePod" or "Hey watch". When I had just a HP and an iPhone it wasn't an issue, but when I have HP, multiple iPhones, an Apple Watch and AirPods all active at once, it's pot luck which one will activate.

I know several people with an AW and they never really use Siri as it's so bad. I asked it to delete an email it just read the other day, and it said it wasn't "allowed" to do that. What?