New research reveals that Apple is looking at how to make Siri detect particular voices, and also determine their location, solely through vibrations.

Two newly revealed patent applications show that Apple is investigating different ways that devices can detect people, or interact with them. The main one would let Siri recognize individual people and their spoken commands, without the device needing a conventional microphone.

"Self-Mixing Interferometry Sensors Used to Sense Vibration of a Structural or Housing Component Defining an Exterior Surface of a Device" concerns the use of self-mixing interferometry (SMI). In SMI, a device emits light and detects a signal "resulting from reflection [or] backscatter of emitted light."

"As speech recognition is improving and becoming more widely available, microphones are becoming increasingly important as input devices for interacting with devices (making the devices interactive devices)," says the patent application.

However, it continues, conventional microphones have drawbacks.

"In a conventional microphone, sound waves are converted to acoustic vibrations on the membrane of the microphone, which requires a port for air to move in and out of the device beneath the microphone," says the application. "The port can make the device susceptible to water damage, clogging, and humidity, and can be a cosmetic distraction."

Instead, Apple proposes using an array of SMI sensors, citing "sensitivities that are much better than the wavelength of the used light." The application says, "An SMI sensor may sense vibrations induced by sound and/or taps on a surface. Unlike a conventional diaphragm-based microphone, an SMI sensor can operate in an air-tight (or sealed) environment."
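To give a feel for why an SMI sensor responds to such small movements, here is a minimal Python sketch of the simplest textbook model of SMI under weak optical feedback, in which the laser's output power varies as cos(4πd/λ) with the distance d to the reflecting surface. Each full interference fringe therefore corresponds to a displacement of half a wavelength. The 940 nm wavelength, the function names (`smi_signal`, `count_fringes`, `estimate_displacement`) and the fringe-counting approach are assumptions for illustration, not details from Apple's application. Counting fringes alone only resolves steps of λ/2 and cannot tell direction, so practical systems typically need extra processing, such as interpolating within a fringe, to go finer.

```python
import numpy as np

WAVELENGTH_M = 940e-9  # assumed laser wavelength (hypothetical value)


def smi_signal(displacement_m, wavelength_m=WAVELENGTH_M, modulation=0.1):
    """Idealized weak-feedback SMI power: P = 1 + m * cos(4 * pi * d / lambda)."""
    phase = 4 * np.pi * np.asarray(displacement_m) / wavelength_m
    return 1.0 + modulation * np.cos(phase)


def count_fringes(signal):
    """Count full fringes as upward crossings of the signal's mean level."""
    centered = np.asarray(signal) - np.mean(signal)
    # A new fringe is counted each time the centered signal goes from negative to non-negative.
    upward = (centered[:-1] < 0) & (centered[1:] >= 0)
    return int(np.count_nonzero(upward))


def estimate_displacement(signal, wavelength_m=WAVELENGTH_M):
    """Each full fringe corresponds to a displacement of half a wavelength."""
    return count_fringes(signal) * wavelength_m / 2


if __name__ == "__main__":
    # Hypothetical scenario: a surface moving steadily 10 µm away from the sensor.
    d = np.linspace(0.0, 10e-6, 10_000)
    sig = smi_signal(d)
    print(count_fringes(sig), estimate_displacement(sig))
```

The estimate is quantized to multiples of λ/2 (470 nm here), which is why the sketch only illustrates the principle rather than the sub-wavelength sensitivity the application describes.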

These SMI sensors need not solely detect vibrations, such as those caused by sound. Nor need they be the sole sensor in a device.

"By way of example," says Apple, "the sensor system(s) may include an SMI sensor, a heat sensor, a position sensor, a light or optical sensor, an accelerometer, a pressure transducer, a gyroscope, a magnetometer, a health monitoring sensor, and an air quality sensor, and so on."

In practice, this means a device could "be configured to sense one or more type of parameters, such as but not limited to, vibration; light; touch; force; heat; movement; relative motion; biometric data (e.g., biological parameters) of a user; air quality; proximity; position; connectedness; and so on."

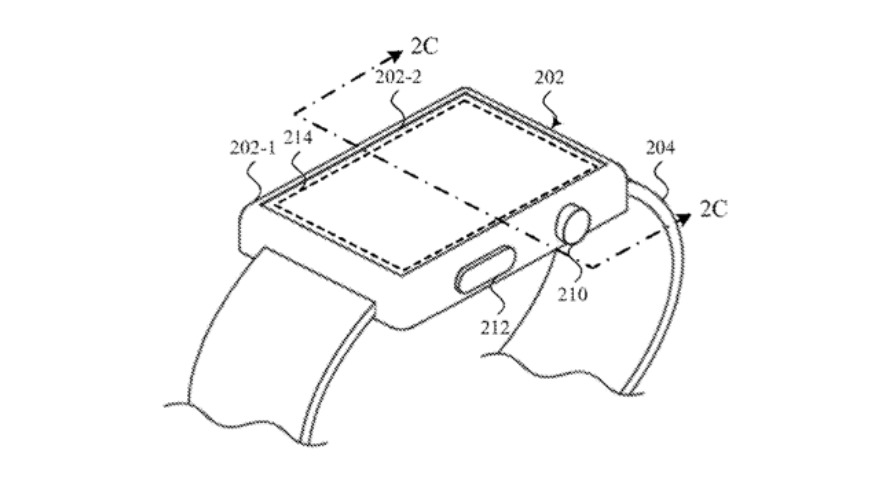

Apple describes how a device, such as an Apple Watch, can determine where it is and what's nearby.

"For example, if the source is determined to be within a room in which [a] TV is installed," says the patent application, "the TV may transition its electronic display from a low power or no power state to an operating power state after identifying... a voice of a person carried in the vibratory waveform, or a voice of a particular person."

So you could walk into your living room and ask your Watch to turn on the TV. It would recognize a spoken command, even if the Watch didn't have a traditional microphone.

It would also specifically identify you. Knowing both that you are authorized to use the TV, and which TV is nearby, the device could then turn that television set on.

Apple's proposal combines several ways of detecting a user's requests, and even estimates the likelihood that a vibration comes from a person. Such devices, whether wearable or static like an Apple TV, would determine whether "the source of a vibratory waveform is likely a person."

It would do this "based on information contained in the vibratory waveform," which includes "a determined direction or distance of a source." Such information would also include any change in location, such as "footsteps suggesting that a person is moving to a predetermined viewing or listening location."
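The application refers to "a determined direction or distance of a source," but the passages quoted here do not say how that is computed. One common, generic way for a pair of vibration sensors to estimate direction is time difference of arrival (TDOA): cross-correlate the two signals, find the lag at which they line up best, and convert that delay into an angle. The Python sketch below is a hypothetical illustration of that general technique, not Apple's method; it assumes two sensors a known distance apart, a distant (far-field) source, and a propagation speed of 343 m/s (sound in air), and the function names are invented for the example.

```python
import numpy as np

SPEED_M_S = 343.0  # assumed propagation speed: sound in air at roughly 20 °C


def estimate_delay_samples(ref, other):
    """Return the lag, in samples, by which `other` trails `ref` (cross-correlation peak)."""
    corr = np.correlate(other, ref, mode="full")
    # In "full" mode, index len(ref) - 1 corresponds to zero lag.
    return int(np.argmax(corr)) - (len(ref) - 1)


def direction_of_arrival_deg(ref, other, fs_hz, spacing_m, speed_m_s=SPEED_M_S):
    """Far-field arrival angle, in degrees from broadside, for a two-sensor pair."""
    delay_s = estimate_delay_samples(ref, other) / fs_hz
    # Extra path length to the later sensor is spacing * sin(theta);
    # clip so measurement noise cannot push arcsin out of its domain.
    sin_theta = np.clip(delay_s * speed_m_s / spacing_m, -1.0, 1.0)
    return float(np.degrees(np.arcsin(sin_theta)))


if __name__ == "__main__":
    # Hypothetical test signal: broadband noise reaching the second sensor 5 samples later.
    rng = np.random.default_rng(0)
    ref = rng.standard_normal(2000)
    other = np.concatenate([np.zeros(5), ref[:-5]])
    print(direction_of_arrival_deg(ref, other, fs_hz=48_000, spacing_m=0.1))
```

With only two sensors the angle is ambiguous front-to-back, and the resolution is limited by the sample rate; larger arrays, such as the SMI sensor array the application mentions, can reduce both problems.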

Apple refers to the device having "an enclosure defining a three-dimensional input space," and suggests that it would effectively map its surroundings. That's similar to what the original HomePod does when first set up.

And it's similar to Apple and Carnegie Mellon University's "Listen Learner" research. That paper proposes "activity recognition" that sees Siri mapping the room.

The first of the two newly revealed patent applications is credited to three inventors: Ahmet Fatih Cihan, Mark T. Winkler, and Mehmet Mutlu. The last two were previously credited on a patent application concerning multiple devices detecting each other's location.

Mehmet Mutlu is also one of three inventors credited on the separate but related "Input Devices that Use Self-Mixing Interferometry to Determine Movement Within an Enclosure" application.

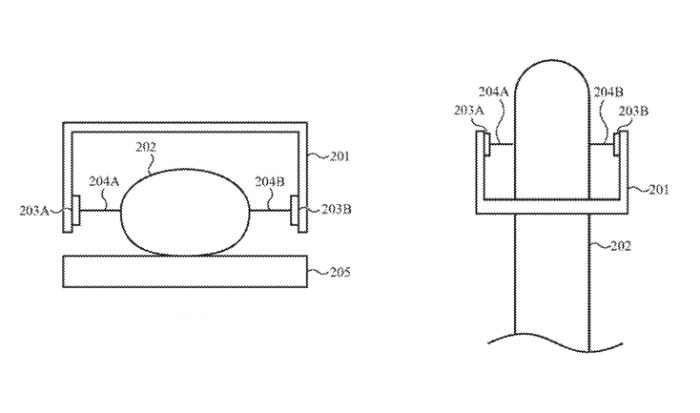

Detail from the patent showing (left) a front and (right) top view of a finger being detected without touch sensors

Creating new virtual keyboards

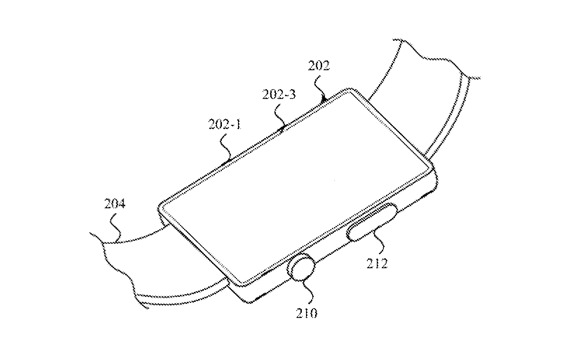

The first patent application is ultimately about ways of using self-mixing interferometry sensors to detect what is going on around a device. This second application is instead concerned with detecting what happens within a device, or a specified "three-dimensional input space."

"Different input devices are suited to different applications," says Apple. "Many input devices may involve a user touching a surface that is configured to receive input, such as a touch screen. Such an input device may be less suited to applications where a user may be touching another surface."

Typical virtual keyboards present the user with an image of keys and rely on touch sensors to register actual presses. With SMI, the "movement of the body part," such as a finger, could be detected instead.

"In some examples, a body part displacement or a body part speed and an absolute distance to the body part may be determined using the self-mixing interferometry signal and used to determine an input," continues Apple.
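In the textbook SMI model, a target moving along the laser beam at speed v produces fringes at a Doppler frequency f = 2v/λ in the laser's output, so speed can be read off the dominant frequency of the signal. Measuring absolute distance usually requires additional techniques, such as modulating the laser's wavelength, which this sketch leaves out. The Python below is a hypothetical illustration of the speed part only, not the method in Apple's application; it assumes an idealized, noise-free signal, a 940 nm wavelength, and a simple FFT peak search, and the function names are invented for the example.

```python
import numpy as np

WAVELENGTH_M = 940e-9  # assumed laser wavelength (hypothetical value)


def simulate_smi(speed_m_s, fs_hz, n_samples, wavelength_m=WAVELENGTH_M):
    """Idealized SMI output for a target moving at constant speed along the beam."""
    t = np.arange(n_samples) / fs_hz
    displacement = speed_m_s * t
    return 1.0 + 0.1 * np.cos(4 * np.pi * displacement / wavelength_m)


def estimate_speed(signal, fs_hz, wavelength_m=WAVELENGTH_M):
    """Estimate |speed| from the dominant fringe frequency, using v = f * lambda / 2."""
    centered = np.asarray(signal) - np.mean(signal)  # remove the DC level
    windowed = centered * np.hanning(len(centered))  # reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(len(centered), d=1.0 / fs_hz)
    peak_hz = freqs[np.argmax(spectrum)]
    return peak_hz * wavelength_m / 2


if __name__ == "__main__":
    # Hypothetical fingertip moving at 5 cm/s, sampled at 1 MHz for 10 ms.
    sig = simulate_smi(0.05, fs_hz=1_000_000, n_samples=10_000)
    print(estimate_speed(sig, fs_hz=1_000_000))
```

The frequency resolution (100 Hz here) sets the speed resolution, and like fringe counting this recovers only the magnitude of the speed, not whether the finger is approaching or receding.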

Using SMI in this way would mean the device need not have a touch-sensitive display, so it could be thinner, or it could be a device that is only occasionally used for input.

William Gallagher

3 Comments

If I’m following this correctly, they are considering the same phenomenon used in laser mikes, which is an intriguing proposition.

Whoever wrote the descriptive text for this patent submission seems to have a somewhat loose grip on the physics of sound.

"In a conventional microphone, sound waves are converted to acoustic vibrations on the membrane of the microphone..."

Huh? Sound is a physical force. Sound is vibration, no conversion is required. Physically, sound is the compression and rarefaction of the physical medium through which sound pressure propagates. Modeling sound as "waves" is a convenience and a way to represent sound pressure variations in graphical and mathematical models, but sound waves are exactly the same as acoustic vibrations.

Combining interferometry with acoustics was a technique that was used during the Cold War era to remotely spy on persons talking in a room by bouncing laser beams off a window in the room. The sound pressure from people speaking in the room would be coupled to the window panes and the (laser) interferometer would be used to remotely sense the window vibrations and convert them back to acoustic signals on the listener side. Likewise, identifying and classifying individual "sound sources" by analyzing their acoustic signature is a several decades old technique used for everything from military applications to machinery failure prediction. All identification/classification techniques require the application of learning, intelligence, and probability.

If only there was a way to record someone's voice and play it back again, you could easily hack into any voice authentication device. It's a good thing that advanced technology like that is still far in the future.