As part of making virtual objects appear to fit in with the real-world environment, "Apple Glass" may adjust AR elements depending on light and other factors in the real world.

There are certain types of shirts, blouses, and ties that you will never see worn on television because they cause interference patterns. Now it appears that Apple has identified similar concerns for augmented reality, and new Apple AR research shows it working to counteract them.

"Modifying Display Operating Parameters Based on Light Superposition From a Physical Environment," is a newly-revealed patent application that is particularly concerned with how lighting can cause issues with different virtual objects.

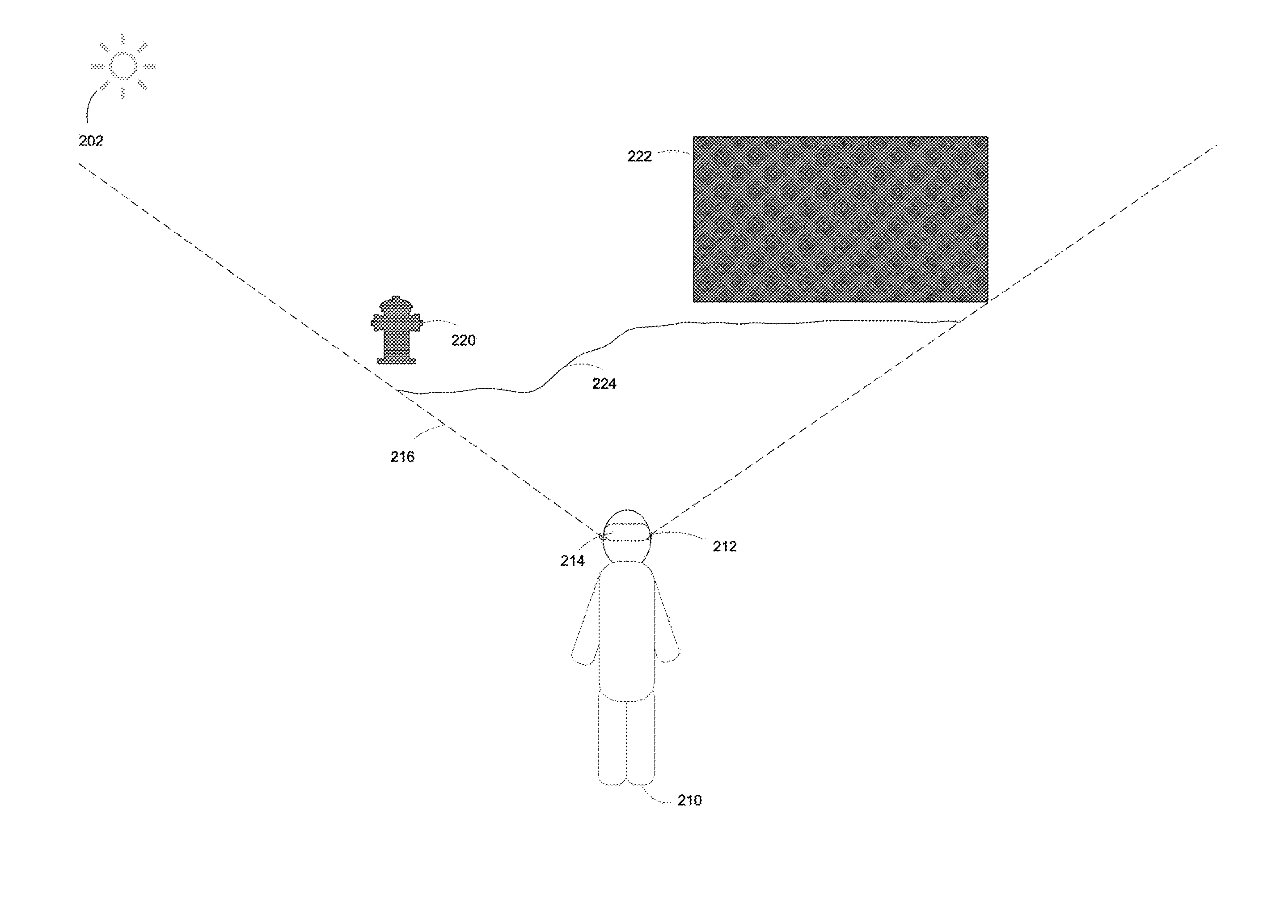

"In augmented reality (AR), computer-generated content is composited with a user's physical environment in order to comingle computer generated visual content with real-world objects," it says. "A user may experience AR content by wearing a head-mountable device (HMD) that includes a translucent or transparent display, which, in turn, allows the pass-through of light from the user's physical environment to the user's eyes."

Apple describes the HMD as being "an additive display," meaning that it adds "computer-generated content to the light from the user's physical environment."

"In some circumstances... light from the physical environment has a color composition and/or brightness that interferes with computer-generated content in a manner that degrades the AR experience," continues Apple. "For example, light from the physical environment limits a level of contrast between the physical environment and displayed computer-generated content."

It's also an issue if "predominantly one color" is present, as that "may interfere with the color composition of displayed computer-generated content by providing dominant hues that are difficult to mask using additive display methods and hardware."
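Both problems follow from how an additive display works, and a minimal Python sketch makes them concrete. It assumes a simplified model in which the eye receives the ambient light plus whatever the display emits, with luminance in cd/m² and color as linear RGB from 0 to 1. The function names and figures are illustrative assumptions, not taken from Apple's filing.

```python
# Simplified model of an additive (see-through) display: what reaches the eye is
# the ambient light passing through the lens plus the light the display emits.
# Units and values are illustrative assumptions, not figures from Apple's filing.
from typing import Tuple

RGB = Tuple[float, float, float]  # linear RGB, 0.0-1.0


def contrast_ratio(ambient: float, display: float) -> float:
    """Contrast of a virtual object against the background directly behind it."""
    if ambient <= 0:
        raise ValueError("this model assumes some ambient light")
    return (ambient + display) / ambient


def required_emission(target: RGB, ambient: RGB) -> RGB:
    """Light the display must add per channel; it can add light but never remove it."""
    r, g, b = (max(0.0, t - a) for t, a in zip(target, ambient))
    return (r, g, b)


def achievable(target: RGB, ambient: RGB, max_emit: float = 1.0) -> bool:
    """True if every channel of target lies between ambient and ambient + max_emit."""
    return all(a <= t <= a + max_emit for t, a in zip(target, ambient))


if __name__ == "__main__":
    # The same 500 cd/m^2 of display output stands out indoors but washes out outdoors.
    for ambient in (100.0, 1000.0, 10000.0):
        print(f"ambient={ambient:>7.0f} cd/m^2  contrast={contrast_ratio(ambient, 500.0):.2f}")

    # A warm, red-dominated room: its red channel is already brighter than the target's.
    warm_room = (0.6, 0.3, 0.1)
    cool_blue = (0.1, 0.3, 0.8)
    print(achievable(cool_blue, warm_room), required_emission(cool_blue, warm_room))
```

In this model the contrast ratio falls from 6.00 with 100 cd/m² of ambient light to 1.05 with 10,000 cd/m², and the blue target cannot be reached in the red-lit room because the display has no way to subtract the excess red.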

Apple isn't the first to spot the issue, but the patent application is dismissive of previous attempts to solve it with techniques "similar to sunglasses."

"Some previously available systems include a physical fixed dimming layer that is integrated with a translucent display and the physical environment," it says. "However, the display displays a constantly darker version of the user's physical environment, thereby degrading the user's experience and preventing use of such systems in low light situations."

Consequently, Apple's proposal is more granular than pulling down a shade over everything. Instead, "Apple Glass," or a similar device, would continuously look for "a plurality of light superposition characteristic values," that is, measurements showing when ambient light is interfering with AR objects.

If the wearer moves so that the fire hydrant is in front of the darker area, "Apple Glass" will adjust its brightness so it can still be seen

"[This is detected when the] ambient light emanates from the physical environment towards one side of the translucent display," says Apple. The system then "quantifies interactions with the ambient light," to measure what changes or corrections are needed for the AR object to be clearly seen.

As well as sensing ambient light levels and the composition of objects within the user's field of view, the system already knows the "predetermined display characteristics of a computer-generated reality (CGR) object."

Consequently, the system can then adjust "one or more display operating parameters" of the HMD, "in order to satisfy the predetermined display characteristics of the CGR object within a performance threshold."
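The steps Apple describes (measure the ambient light, compare the result with the CGR object's predetermined characteristics, then adjust display parameters until the outcome is within a threshold) can be sketched in Python as follows. This is an illustration under stated assumptions: a single ambient luminance stands in for the "light superposition characteristic values," a target contrast ratio stands in for the predetermined display characteristics, and the parameter names and hardware limits are hypothetical. The sketch dims the real world only as a last resort, to avoid the constantly darker view Apple criticizes in fixed dimming layers.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical hardware limits, chosen only for illustration.
MAX_BRIGHTNESS = 2000.0    # most light the display can emit, cd/m^2
MIN_TRANSMITTANCE = 0.1    # darkest setting of a hypothetical adjustable dimming layer


@dataclass
class DisplayParams:
    brightness: float      # emitted luminance, cd/m^2
    transmittance: float   # fraction of ambient light let through, 0-1


@dataclass
class CGRTarget:
    contrast: float        # stand-in for the object's "predetermined display characteristics"
    tolerance: float       # stand-in for the "performance threshold"


def perceived_contrast(ambient: float, p: DisplayParams) -> float:
    """Additive model: (dimmed background + emitted light) / dimmed background."""
    background = ambient * p.transmittance
    if background <= 0:
        raise ValueError("ambient light and transmittance must be positive")
    return (background + p.brightness) / background


def adjust(ambient: float, target: CGRTarget) -> Tuple[DisplayParams, bool]:
    """Choose parameters for one region of the view and report whether the target is met."""
    # Brightness needed without dimming: (A + D) / A = C  =>  D = (C - 1) * A.
    needed = max(0.0, (target.contrast - 1.0) * ambient)
    if needed <= MAX_BRIGHTNESS:
        # Enough headroom: leave the real world undimmed.
        params = DisplayParams(brightness=needed, transmittance=1.0)
    else:
        # Dim only as far as required: (t*A + Dmax) / (t*A) = C  =>  t = Dmax / ((C - 1) * A).
        t = max(MIN_TRANSMITTANCE, MAX_BRIGHTNESS / needed)
        params = DisplayParams(brightness=MAX_BRIGHTNESS, transmittance=t)
    met = abs(perceived_contrast(ambient, params) - target.contrast) <= target.tolerance
    return params, met


if __name__ == "__main__":
    target = CGRTarget(contrast=2.0, tolerance=0.05)
    # Ambient luminance behind the object, e.g. a dark wall, a bright street, direct sun.
    for ambient in (50.0, 10000.0, 100000.0):
        params, met = adjust(ambient, target)
        print(ambient, params, met)
```

Here the dark wall needs no dimming at all, the bright street is dimmed to 20% transmittance, and direct sun exceeds the hypothetical hardware limits, so the target is reported as unmet. A real system would presumably run something like this per region and per frame, which is what lets a virtual fire hydrant stay visible as it moves across darker and brighter areas.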

Determining where a user is looking

"Apple Glass," like any other AR device, has to balance performance and features with battery life. One way Apple repeatedly works to maximize that balance is by concentrating solely on what a wearer is looking at now.

The requirement to alter an HMD's AR objects to avoid problems with the surroundings is practically negated if the wearer is currently staring at something else. So alongside its many previous gaze-detection patents, a second newly-revealed patent is concerned with what people are looking at, and how that object can then react.
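One simple way to restrict that work to what the wearer is actually looking at is to test which virtual objects fall inside a narrow cone around the gaze direction. This Python sketch assumes a gaze direction is already available from eye tracking; the 10-degree cone and the object names are arbitrary illustrative choices, not values from Apple's filings.

```python
import math
from typing import Dict, List, Sequence

Vec3 = Sequence[float]


def angle_between_deg(a: Vec3, b: Vec3) -> float:
    """Angle between two 3D vectors, in degrees."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.hypot(*a) * math.hypot(*b)
    cos_angle = max(-1.0, min(1.0, dot / norms))  # clamp against rounding error
    return math.degrees(math.acos(cos_angle))


def objects_in_gaze(eye: Vec3, gaze_dir: Vec3, objects: Dict[str, Vec3],
                    cone_deg: float = 10.0) -> List[str]:
    """Names of objects whose centers lie within cone_deg of the gaze direction."""
    selected = []
    for name, center in objects.items():
        to_object = [c - e for c, e in zip(center, eye)]
        if math.hypot(*to_object) == 0:
            continue  # object at the eye position: direction undefined, so skip it
        if angle_between_deg(gaze_dir, to_object) <= cone_deg:
            selected.append(name)
    return selected


if __name__ == "__main__":
    scene = {"hydrant": (0.0, 0.0, -5.0), "sign": (5.0, 0.0, -5.0)}
    # In this sketch, only the objects returned here would get ambient-light corrections.
    print(objects_in_gaze((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), scene))
```

The sign sits 45 degrees off the line of sight, so only the hydrant is selected; everything outside the cone can be left alone until the wearer looks at it.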

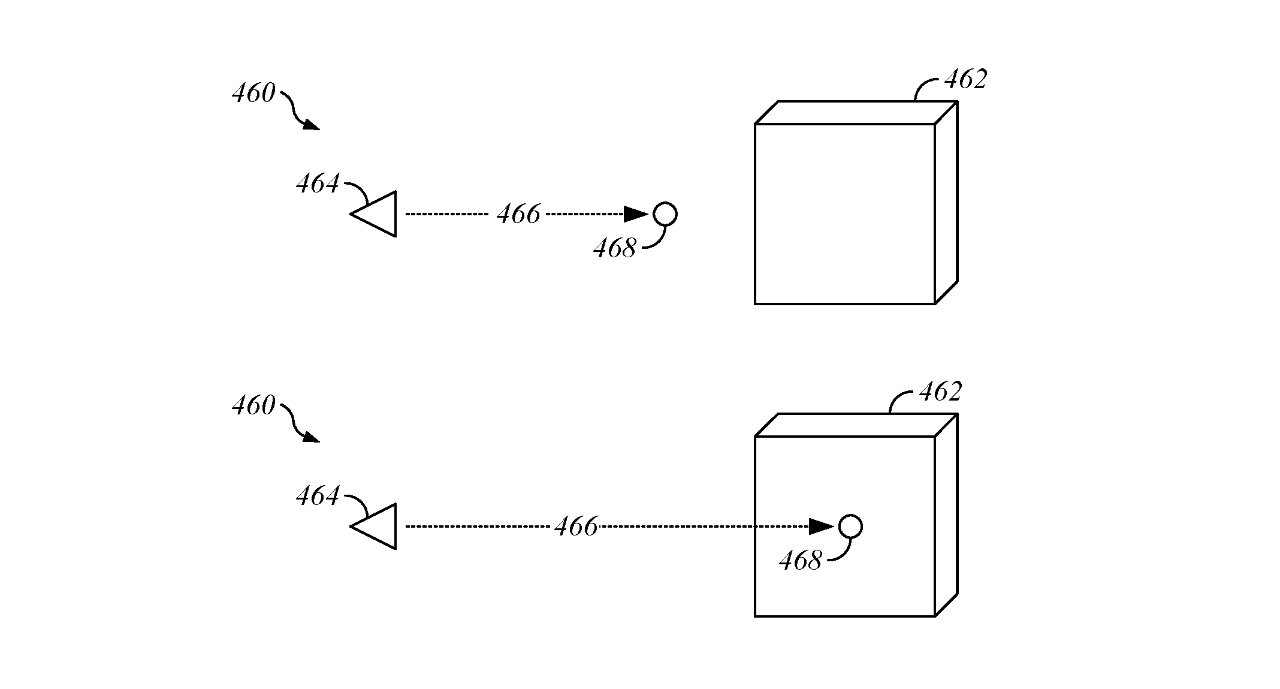

"Focus-Based Debugging And Inspection For A Display System," determines "a focus point relative to a viewing location in a virtual environment," using the "eye focus depth."

"Gaze-direction dependent displays are used in computer-generated reality systems, such as virtual reality systems, augmented reality systems, and mixed reality systems," says the patent application. "Such environments can include numerous objects, each with its own properties, settings, features, and/or other characteristics."

So depending on where the user is looking, and on what the AR and real-world environments contain, there may be many virtual objects to see and manipulate. Using the methods described in this patent application, the AR system can also calculate when one virtual object is obscuring another.
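The "eye focus depth" and "focus point" quoted above can be illustrated with basic geometry. This Python sketch assumes the depth is estimated from vergence, the angle by which the two eyes turn inward, which is one common approach but not one the quoted filing confirms. The focus point is then the viewing location moved along the gaze direction by that depth. The function names and the 64 mm interpupillary distance are illustrative assumptions.

```python
import math
from typing import Sequence, Tuple


def focus_depth_from_vergence(ipd_m: float, vergence_deg: float) -> float:
    """Distance to where both lines of sight cross, assuming symmetric fixation."""
    half_angle = math.radians(vergence_deg) / 2.0
    if half_angle <= 0.0:
        return math.inf  # parallel lines of sight: focused at optical infinity
    return (ipd_m / 2.0) / math.tan(half_angle)


def focus_point(view_location: Sequence[float], gaze_dir: Sequence[float],
                depth: float) -> Tuple[float, ...]:
    """Point `depth` meters from the viewing location along the gaze direction."""
    if not math.isfinite(depth):
        raise ValueError("focus depth must be finite to place a focus point")
    length = math.hypot(*gaze_dir)
    return tuple(v + depth * g / length for v, g in zip(view_location, gaze_dir))


if __name__ == "__main__":
    ipd = 0.064                                  # 64 mm, a typical adult value
    depth = focus_depth_from_vergence(ipd, 3.7)  # eyes converged by about 3.7 degrees
    print(round(depth, 2), focus_point((0.0, 1.6, 0.0), (0.0, 0.0, -1.0), depth))
```

Vergence changes quickly at short distances and very little beyond a few meters, so a depth estimate like this is most useful for nearby objects.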

If adjusting objects to match ambient light is like True Tone, this patent application is reminiscent of how the original Mac drew windows. To save processing resources, the early Mac did not redraw and refresh all of a window when part of it was covered by another one.

Detail from the patent examining different eye focus depths on an object

"[In Apple AR this involves] transitioning a first object from the virtual environment from a first rendering mode to a second rendering mode based on a location of the virtual focus point relative to the first object," continues Apple, "wherein visibility of the second object from the virtual view location is occluded by the first object..."
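The rule in that quote can be sketched with simple geometry: check whether a first object blocks the line of sight to a second, and if the focus point lies beyond the first object, switch the first object to a different rendering mode. In this Python sketch objects are approximated by bounding spheres, and the "opaque" and "translucent" names are hypothetical stand-ins for the first and second rendering modes in the quoted claim.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class SceneObject:
    name: str
    center: Vec3
    radius: float           # bounding sphere: a simplifying assumption
    mode: str = "opaque"    # hypothetical modes: "opaque" or "translucent"


def occludes(view: Vec3, front: SceneObject, back: SceneObject) -> bool:
    """True if front's bounding sphere crosses the line of sight to back's center."""
    to_back = _sub(back.center, view)
    dist_back = math.sqrt(_dot(to_back, to_back))
    if dist_back == 0.0:
        return False
    direction = (to_back[0] / dist_back, to_back[1] / dist_back, to_back[2] / dist_back)
    to_front = _sub(front.center, view)
    along = _dot(to_front, direction)  # distance along the line of sight
    if along <= 0.0 or along >= dist_back:
        return False  # front is behind the viewer or beyond the back object
    miss_sq = _dot(to_front, to_front) - along * along  # squared distance from the line
    return miss_sq <= front.radius ** 2


def update_mode(view: Vec3, focus: Vec3, front: SceneObject, back: SceneObject) -> str:
    """Make front translucent while the user focuses past it on an object it hides."""
    focus_depth = math.dist(focus, view)
    front_far_edge = math.dist(front.center, view) + front.radius
    if occludes(view, front, back) and focus_depth > front_far_edge:
        front.mode = "translucent"
    else:
        front.mode = "opaque"
    return front.mode


if __name__ == "__main__":
    eye = (0.0, 0.0, 0.0)
    panel = SceneObject("panel", (0.0, 0.0, -2.0), 0.5)
    model = SceneObject("model", (0.0, 0.0, -5.0), 0.5)
    print(update_mode(eye, (0.0, 0.0, -5.0), panel, model))  # prints translucent
    print(update_mode(eye, (0.0, 0.0, -1.5), panel, model))  # prints opaque
```

Keying the switch to focus depth rather than gaze direction alone matters here, because both objects lie on the same line of sight; only the depth tells the system which one the user is trying to see.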

The True Tone-style patent application is credited to Anselm Grundhoefer and Michael J. Rockwell. Grundhoefer's previous work includes a process for using sound to direct users' attention in AR.

Of the four credited inventors on the eye focus depth patent application, both Norman N. Wang and Tyler L. Casella previously worked on how AR objects could be shared and edited.

William Gallagher
