Apple is researching how to make "Apple Glass," or other AR devices, sense and automatically adjust the ambient lighting around the wearer using HomeKit.

There still aren't all that many HomeKit devices compared with those for Amazon's Alexa, but Apple may be planning to add one more. A newly revealed patent shows that "Apple Glass," or any other Apple AR head-mounted display (HMD), could integrate with a user's lighting.

As is typical for a patent, "Dynamic ambient lighting control" describes its systems as broadly as possible, and so mentions HomeKit specifically only once in its 7,500 words. But the entire patent is concerned with how ambient light can be sensed, and how that information can then be used to control other devices appropriately.

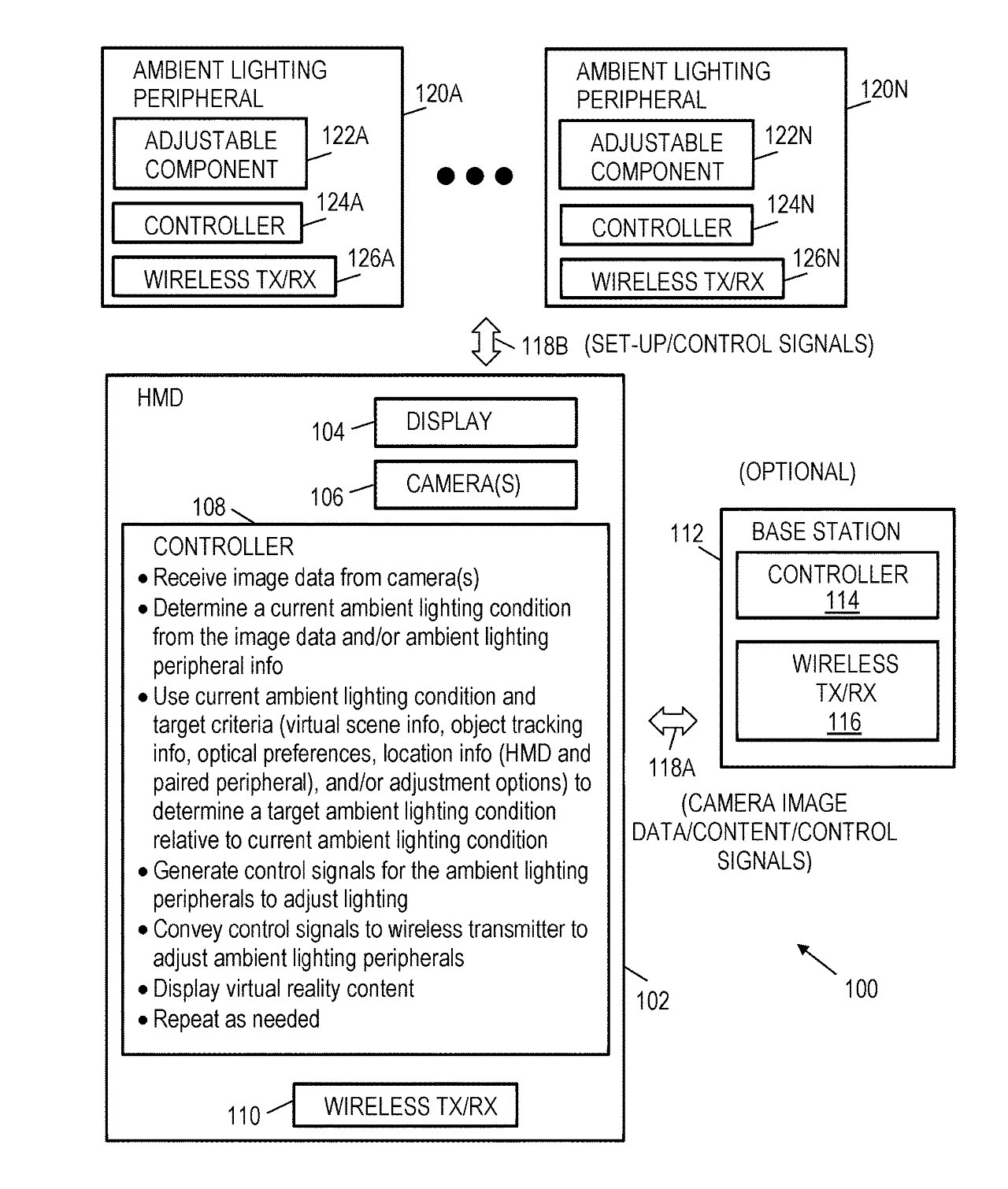

"The controller [in the HMD] is configured to determine a target lighting condition in a room relative to a current lighting condition in the room," says the patent. "The controller is also configured to generate a control signal with instructions to adjust an ambient lighting peripheral in the room based on the determined target lighting condition."

As with any smart home control device, there is the argument that a user could simply flip the light switch. But Apple sees this control as integral to AR/VR.

"One of the challenges for successfully commercializing CGR devices relates to providing a quality experience to the user," continues the patent. "Merely providing a head-mounted device and CGR content is not effective if the quality of the CGR experience is deficient."

So this is about more than just dimming the lights at the start of a movie. It is closer in spirit to Apple's True Tone-like feature for matching a virtual object's brightness to its real-world surroundings.

There is overlap with that idea, in that this patent covers a method of "displaying computer-generated reality content on a head-mounted device after adjustment of the ambient lighting peripheral using the control signal."

However, this patent is more concerned with determining the "current ambient lighting condition," and then acting on it. The headset receives "image data captured by at least one camera," and, based on unspecified "target criteria," it conveys "a control signal to adjust an ambient lighting peripheral."

The target criteria could be set by the AR application being used, or be adjustable by the wearer. The patent doesn't specify; it is less concerned with what precisely is done with the information than with how that data is gathered and acted on.
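To make that flow concrete, here is a minimal Python sketch of the loop, under stated assumptions: the "current lighting condition" is reduced to an average luma estimated from camera pixels, the "target criteria" are a single target value plus a tolerance, and the "control signal" is a plain dictionary. The names `LightingCondition`, `estimate_lighting`, `control_signal`, `tolerance`, and `brightness_delta` are all hypothetical. They do not come from the patent or from Apple's HomeKit API, and the patent does not say how either step is actually computed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LightingCondition:
    # Hypothetical: mean relative luma of the scene, 0.0 (black) to 1.0 (white)
    luminance: float


def estimate_lighting(pixels: list[tuple[int, int, int]]) -> LightingCondition:
    """Estimate the current lighting condition from 8-bit RGB camera pixels.

    Applies Rec. 709 weights to the 8-bit values as a rough luma estimate;
    this is an assumption for illustration, not the patent's method.
    """
    if not pixels:
        raise ValueError("need at least one pixel")
    total = sum(0.2126 * r + 0.7152 * g + 0.0722 * b for r, g, b in pixels)
    return LightingCondition(luminance=total / (255.0 * len(pixels)))


def control_signal(current: LightingCondition,
                   target: LightingCondition,
                   tolerance: float = 0.05) -> Optional[dict]:
    """Return a hypothetical command for a lighting peripheral, or None.

    None means the room already meets the target criteria (within tolerance).
    """
    delta = target.luminance - current.luminance
    if abs(delta) <= tolerance:
        return None
    # Positive delta: brighten the lights; negative delta: dim them
    return {"brightness_delta": round(delta, 3)}


# Usage: the camera sees a dim, dark-grey room, and the target is brighter
room = estimate_lighting([(40, 40, 40)] * 100)
print(control_signal(room, LightingCondition(luminance=0.6)))
```

Keeping the tolerance check separate from the estimate means the headset would only send a command when the room is meaningfully off target, rather than nudging the lights on every camera frame.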

"In this scenario, captured camera images can be combined with CGR content to display what has been referred to as 'mixed reality' content on the head-mounted device," says Apple. "Improving the quality of CGR content, including mixed reality content, is not a trivial task."

"There are conflicting limitations related to cameras, content generation, display quality, processing speed, and system cost that have not been adequately resolved," it continues.

The patent is credited to four inventors, including Timothy R. Oriol. His previous work includes a wider-ranging Apple AR patent application that is concerned with creating immersive and high-quality images.

High quality

High quality is a concern in that prior patent application, and it's part of what the newly-revealed one is referring to when discussing limitations. A separate new patent attempts in part to address the issue.

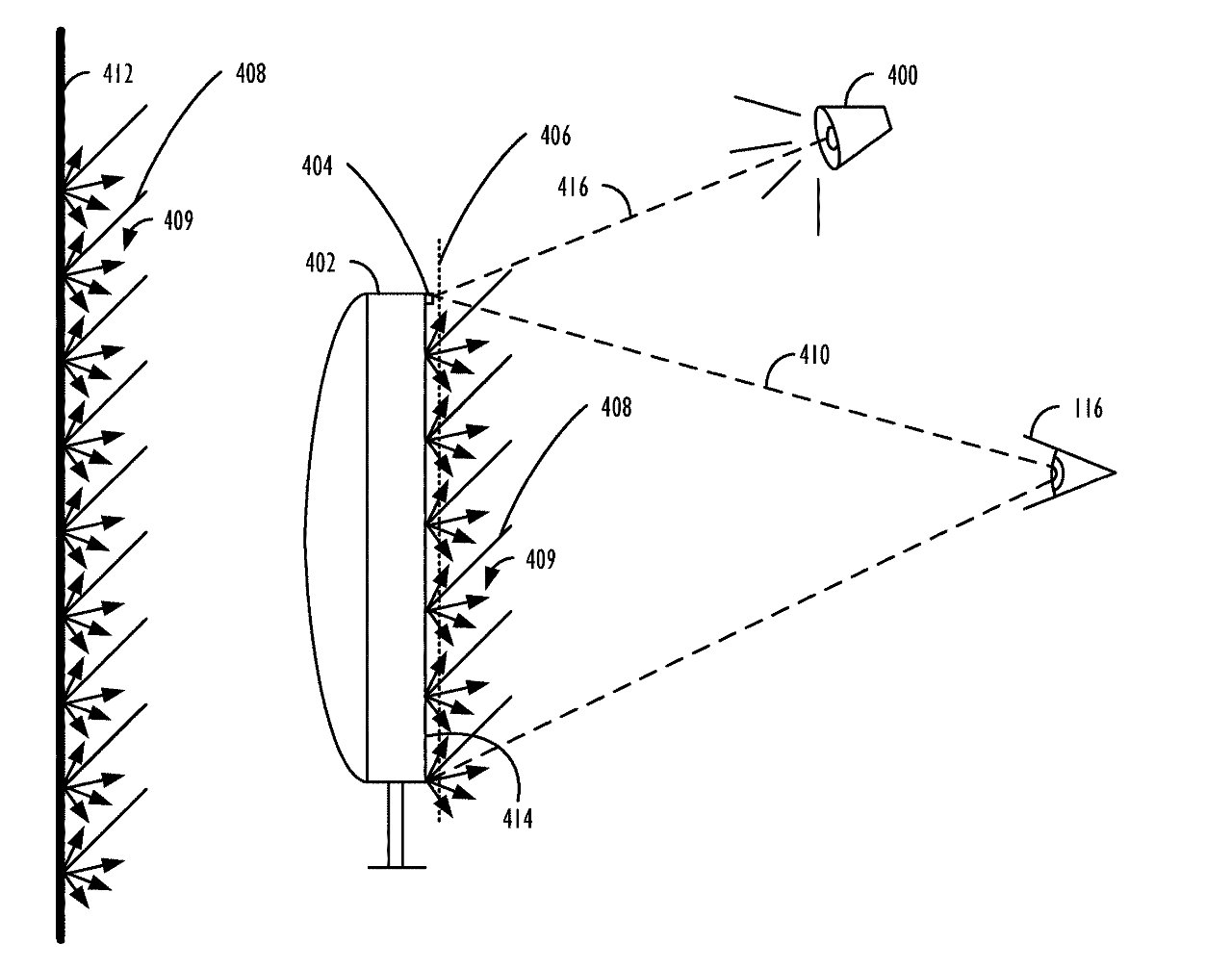

"Adaptive transfer functions" is concerned with how the highest-quality data can be sent to and from the HMD in the most efficient way.

In part, it involves using ambient environment data gathered by the HMD "to predict a viewer of the display device's current visual adaptation." That's "adaptation" in the physiological sense: how the wearer's eyes have adjusted to the surrounding light.

"The full dynamic range of human vision covers many orders of magnitude in brightness," continues the patent. "For example, the human eye can adapt to perceive dramatically different ranges of light intensity, ranging from being able to discern landscape illuminated by star-light on a moonless night... to the same landscape illuminated by full daylight."

Apple says that to provide high-quality images that could contain every possible level of brightness would require immense amounts of data. And even if that were possible, it would not necessarily produce a desirable display.
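A back-of-the-envelope calculation shows why. The sketch below assumes, for illustration only, a range from roughly 0.001 lux (starlight) to 100,000 lux (full daylight), and a requirement that adjacent brightness levels differ by no more than 1%. It compares linear coding, where every step must be as small as the one needed at the darkest level, with logarithmic coding, where each level is a fixed percentage brighter than the last. The function names and lux figures are illustrative assumptions, not values from the patent.

```python
import math

# Illustrative assumptions, not figures from the patent
STARLIGHT_LUX = 0.001
DAYLIGHT_LUX = 100_000.0
RELATIVE_STEP = 0.01  # adjacent levels may differ by at most 1%


def linear_codes_needed(lo: float, hi: float, rel_step: float) -> int:
    """Evenly spaced codes, with spacing fine enough for the darkest level."""
    step = lo * rel_step
    return math.ceil((hi - lo) / step) + 1


def log_codes_needed(lo: float, hi: float, rel_step: float) -> int:
    """Codes where each level is a fixed percentage brighter than the previous."""
    return math.ceil(math.log(hi / lo) / math.log(1 + rel_step)) + 1


def bits_for(codes: int) -> int:
    """Smallest number of bits that can index the given number of codes."""
    return math.ceil(math.log2(codes))


for name, fn in (("linear", linear_codes_needed), ("logarithmic", log_codes_needed)):
    codes = fn(STARLIGHT_LUX, DAYLIGHT_LUX, RELATIVE_STEP)
    print(f"{name}: {codes:,} codes, {bits_for(codes)} bits per value")
```

Under these assumptions, linear coding needs about ten billion codes (34 bits per value), while logarithmic coding needs fewer than two thousand (11 bits).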

"It should be mentioned that, while linear coding of images is required mathematically by some operations, it provides a relatively poor representation for human vision," continues the patent, "which, similarly to most other human senses, seems to differentiate most strongly between relative percentage differences in intensity (rather than absolute differences)."

"Linear encoding tends to allocate too few codes (representing brightness values) to the dark ranges, where human visual acuity is best able to differentiate small differences leading to banding," says Apple, "and too many codes to brighter ranges, where visual acuity is less able to differentiate small differences leading to a wasteful allocation of codes in that range."
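The banding problem Apple describes can be seen by counting codes. This sketch compares an 8-bit linear encoding with a simple power-law (gamma 2.2) encoding and counts how many of the 256 codes describe the darkest 10% of the luminance range. The gamma 2.2 curve is a familiar fixed curve chosen for illustration; it is not the adaptive transfer function the patent describes, and the function names are hypothetical.

```python
MAX_CODE = 255  # 8-bit encoding: codes 0..255


def decode_linear(code: int) -> float:
    """Linear encoding: relative luminance is proportional to the code value."""
    return code / MAX_CODE


def decode_gamma(code: int, gamma: float = 2.2) -> float:
    """Power-law encoding: spends more codes on dark values."""
    return (code / MAX_CODE) ** gamma


def codes_in_dark_range(decode, threshold: float = 0.1) -> int:
    """Count codes whose decoded relative luminance is at or below the threshold."""
    return sum(1 for code in range(MAX_CODE + 1) if decode(code) <= threshold)


print(f"linear: {codes_in_dark_range(decode_linear)} of 256 codes in the darkest 10%")
print(f"gamma 2.2: {codes_in_dark_range(decode_gamma)} of 256 codes in the darkest 10%")
```

With linear coding, only 26 codes cover the darkest tenth of the range, where the eye is most sensitive to small differences. The power-law curve gives that range 90 codes, leaving fewer for bright values where small differences are harder to see.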

Consequently, Apple's proposal is for a "perceptually-aware and/or content-aware system" that recognizes what is being displayed. As well as considering what the media requires, the patent is also concerned with being able to "dynamically adjust a display" to optimize what the user sees.

"Successfully modeling the user's perception of the content data displayed" would give the user a better experience, "providing optimal encoding" that allows high quality at lower data rates.

This patent is credited to Kenneth I. Greenebaum. His previous work includes patents on video processing on either the server side or the display side.


William Gallagher

