Apple's introduction of CSAM tools may be due to its high level of privacy, with messages submitted during the Epic Games App Store trial revealing Apple's anti-fraud chief thought its services were the "greatest platform for distributing" the material.

Apple has a major focus on ensuring consumer privacy, which it builds as far as possible into its products and services. However, it seems that focus may have some unintended consequences, in enabling some illegal user behavior.

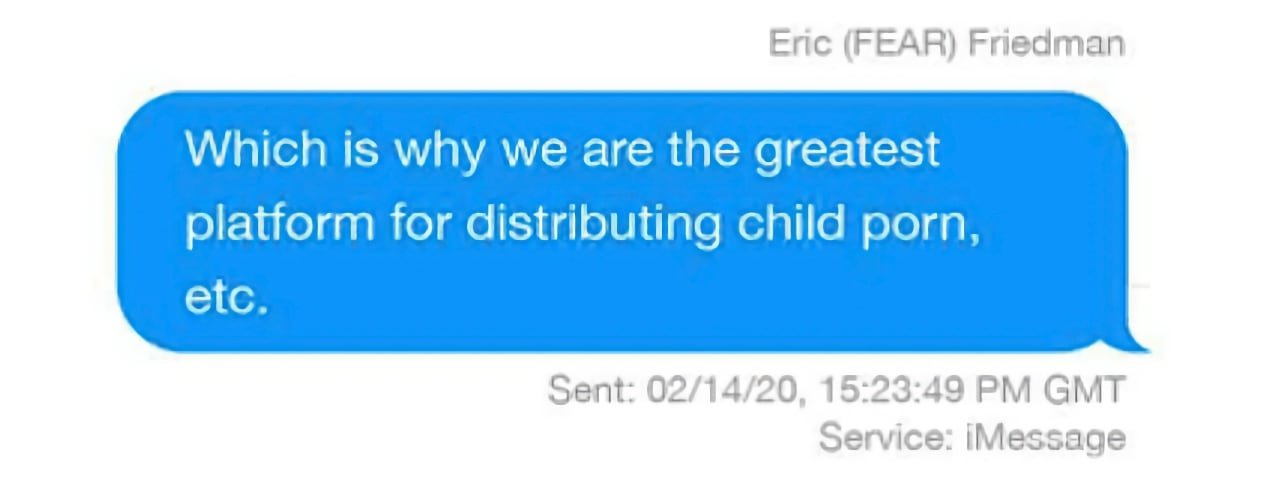

Uncovered from documents submitted during the Apple-Epic Games trial and discovered by The Verge, an iMessage thread from 2020 involving Apple Fraud Engineering Algorithms and Risk head Eric Friedman seems to hint at the reasons behind Apple's introduction of CSAM tools.

In the thread about privacy, Friedman points out that Facebook's priorities differ greatly from Apple's, which has some unintended effects. While Facebook works on "trust and safety," in dealing with fake accounts and other elements, Friedman offers the assessment "In privacy, they suck."

"Our properties are the inverse," states the chief, before claiming "Which is why we are the greatest platform for distributing child porn, etc."

When told that other file-sharing systems offer more opportunities for bad actors, Friedman responds "we have chosen to not know enough in places where we really cannot say." The fraud head then references a graph from the New York Times depicting how firms are combatting the issue, but suggests "I think it's an underreport."

Friedman also shares a slide from a presentation he was going to make on trust and safety, with "child predator grooming reports" listed as an issue for the App Store. It is also deemed an "active threat," as regulators are "on our case" on the matter.

While the findings aren't directly attributable to the introduction of CSAM tools, they do indicate that Apple has considered the problem for some time, and is keenly aware of the shortcomings that led to the situation.

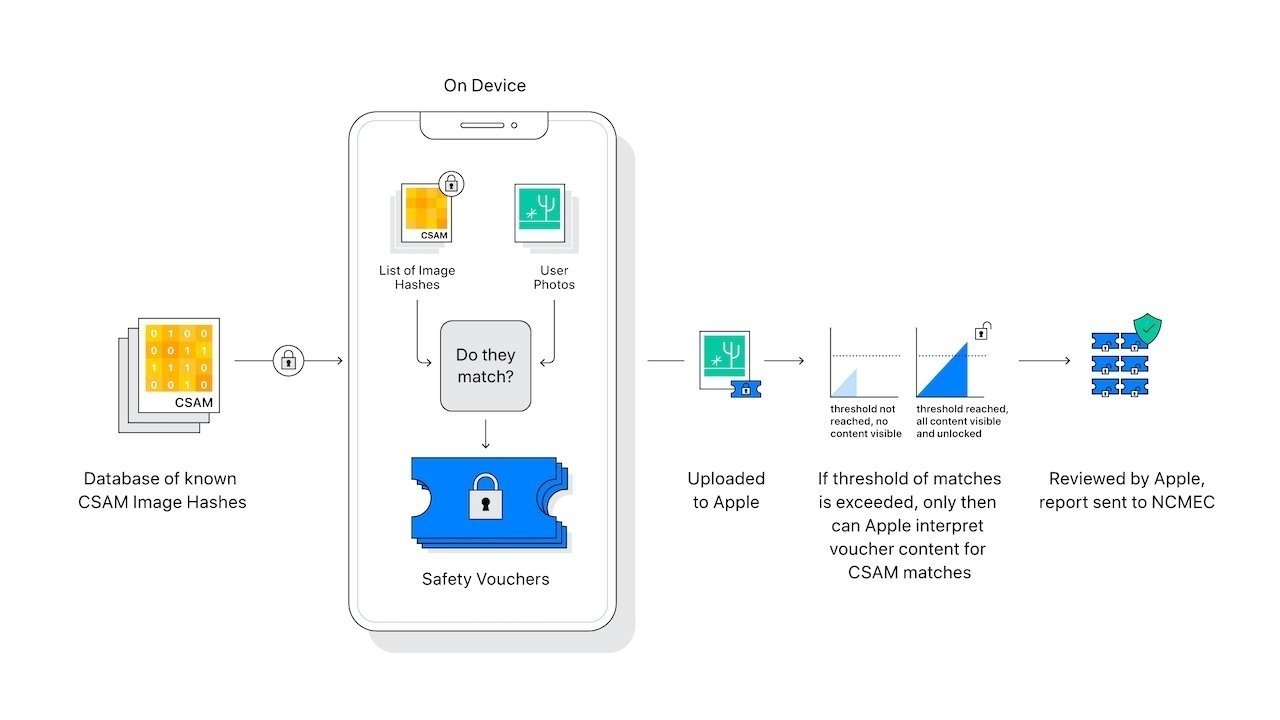

Despite Apple's efforts on the matter, it has received considerable pushback from critics over privacy. Critics and government bodies have asked Apple to reconsider, over concerns that the systems could lead to widespread surveillance.

Apple, meanwhile, has taken steps to de-escalate arguments, including detailing how the systems work and emphasizing its attempts to maintain privacy.

Malcolm Owen



7 Comments

No matter how much “deescalating and explaining” Apple does, it cannot change the fundamental fact that once the infrastructure is in place, there’s no TECHNICAL limit on what it can be used for, but there may be LEGAL limits on Apple’s ability to tell people when the use, the reporting, or the databases change based on a variety of governments’ “lawful requests”.

It isn’t Apple’s job to know or prevent criminal behavior.

Sony doesn’t have cameras that try to detect what pictures you take, paper vendors aren’t concerned with the fact that you jot down notes of an assassination plot on the paper you bought, the post office doesn’t care if you mail a USB stick with snuff videos on it.

It’s not Apple’s job to know, care, prevent, or report crime, unless as a service at the request of the owner (“find my stolen phone!”)

So Apple’s very thinking is flawed, as they hold themselves responsible for something that’s fundamentally none of their business, literally and figuratively.

How do they know that they are the greatest platform to distribute CSAM? I believe in their honesty, and I don’t even consider the possibility that they browse user photos leisurely. Then the only way* for them to know that is through law enforcement operations that successfully revealed so much CSAM. Read this as “law enforcement requests we fulfilled by delivering unencrypted iCloud data revealed so much CSAM”. If so, then there is already an effective mechanism in place. What is the point in playing the street vigilante?

* If they know that from hashing iCloud photos for CSAM on their servers, then again there is already an effective mechanism that revealed so much CSAM. Then what is the point of injecting a warrantless blanket criminal search mechanism into the user’s property?

Of all the CSAM whiners of late, I haven’t seen any address what their thoughts are on the fact that Google, Dropbox, Microsoft, Twitter, already do this... Nobody allows images to be stored on their servers w/o scanning hashes to ensure it isn't known child porn. Microsoft has PhotoDNA, and Google has its own tools:

https://www.microsoft.com/en-us/photodna

https://protectingchildren.google/intl/en/

…are they also outraged about it? Are they going to give up Gmail and Dropbox because they too scan hashes for child pornography?