Apple removed all signs of its CSAM initiative from the Child Safety webpage on its website at some point overnight, but the company has made it clear that the program is still coming.

Apple announced in August that it would be introducing a collection of features to iOS and iPadOS to help protect children from predators and limit the spread of Child Sexual Abuse Material (CSAM). Following considerable criticism of the proposal, it appears that Apple is pulling away from the effort.

The Child Safety page on the Apple website had a detailed overview of the inbound child safety tools up until December 10. After that date, MacRumors reports, the references were wiped clean from the page.

It is not unusual for Apple to completely remove mentions of a product or feature that it couldn't release to the public, as this behavior was famously on display for the AirPower charging pad. Such an action could be read as Apple publicly giving up on the feature, though a second attempt in the future is always plausible.

Apple's plan consisted of several features, each protecting children in a different way. The main CSAM feature proposed using on-device systems to detect abuse imagery stored within a user's Photos library. If a user had iCloud Photos turned on, the feature would have scanned the user's photos on-device and compared them against a hash database of known CSAM.

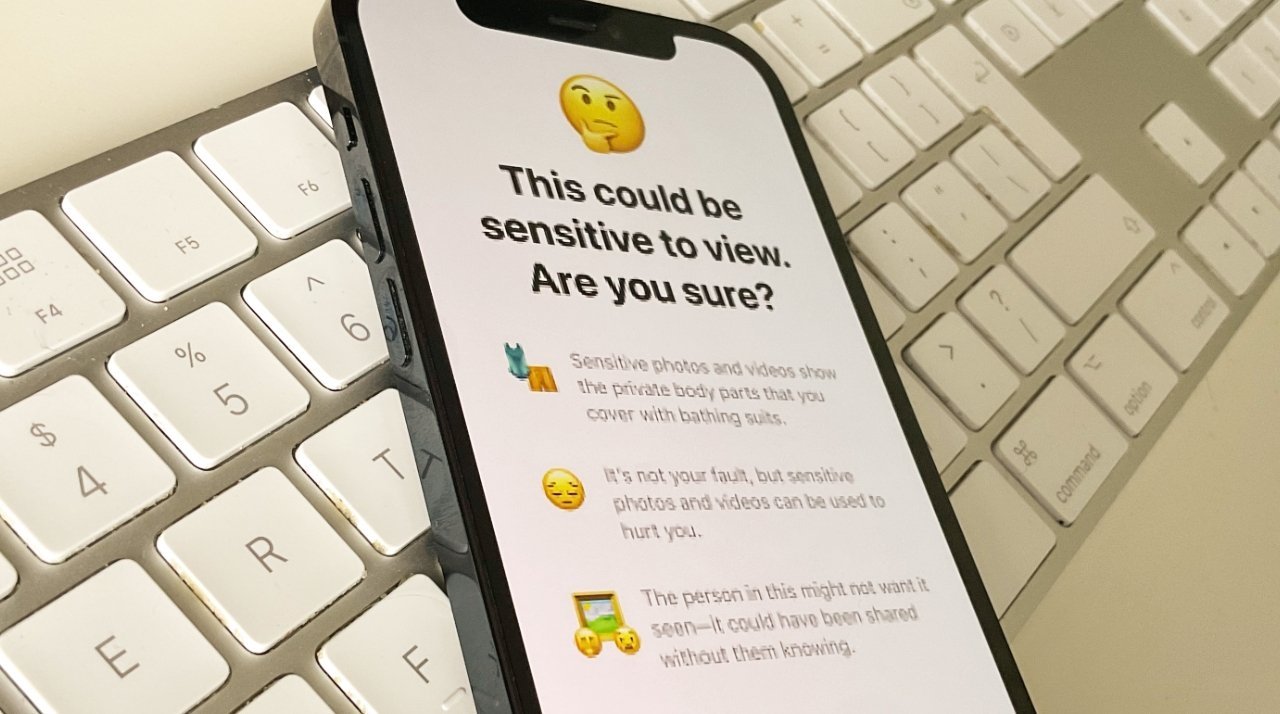

A second feature has already been implemented, and is intended to protect young users from seeing or sending sexually explicit photos within iMessage. Originally, this feature was going to notify parents when such an image was found, but as implemented, there is no external notification.

A third added updates to Siri and Search offering additional resources and guidance.

Of the three, the latter two made it into the release of iOS 15.2 on Monday. The more contentious CSAM element did not.

The Child Safety page on the Apple website previously explained that Apple was introducing on-device CSAM scanning, in which it would compare image file hashes against a database of known CSAM image hashes. If a sufficient quantity of files were flagged as CSAM, Apple would have contacted the National Center for Missing and Exploited Children (NCMEC) on the matter.
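To illustrate the general idea of threshold-based hash matching described above, here is a minimal Python sketch. It is not Apple's implementation: SHA-256 is used purely as a stand-in hash, and the function names and the threshold value are hypothetical, since the page only referred to "a sufficient quantity" of flagged files.

```python
import hashlib

# Hypothetical threshold: the source only says "a sufficient quantity".
REPORT_THRESHOLD = 3


def file_hash(data: bytes) -> str:
    """Return a hex digest for the image bytes.

    SHA-256 is a stand-in for illustration only; it does not reflect
    the hashing method used in Apple's proposed system.
    """
    return hashlib.sha256(data).hexdigest()


def count_matches(images, known_hashes):
    """Count how many images hash to an entry in the known-hash set."""
    return sum(1 for data in images if file_hash(data) in known_hashes)


def should_escalate(images, known_hashes, threshold=REPORT_THRESHOLD):
    """Return True only once the number of matches reaches the threshold."""
    return count_matches(images, known_hashes) >= threshold
```

In this sketch, a single match does nothing on its own. Only when the match count reaches the threshold does `should_escalate` return `True`, mirroring the "sufficient quantity" condition in the description.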

The updated version of the page removes not only the section on CSAM detection, but also references in the page's introduction and the Siri and Search guidance, along with a section offering links to PDFs explaining and assessing its CSAM process.

On Wednesday afternoon, The Verge was told that Apple's plans were unchanged, and that the pause for the rethink is still just that: a temporary delay.

Shortly after the announcement of its proposed tools in August, Apple had to respond to claims from security and privacy experts, as well as other critics, about the CSAM scanning itself. Critics viewed the system as a privacy violation, and the start of a slippery slope that could lead to governments using an expanded form of it to effectively perform on-device surveillance.

Feedback came from a wide range of critics, from privacy advocates and some governments to a journalists' association in Germany, Edward Snowden, and Bill Maher. Despite attempting to make its case for the feature, and admitting that it had failed to communicate the feature properly, Apple postponed the launch in September to rethink the system.

Update December 15, 1:39 PM ET: Updated with Apple clarifying that its plans are unchanged.