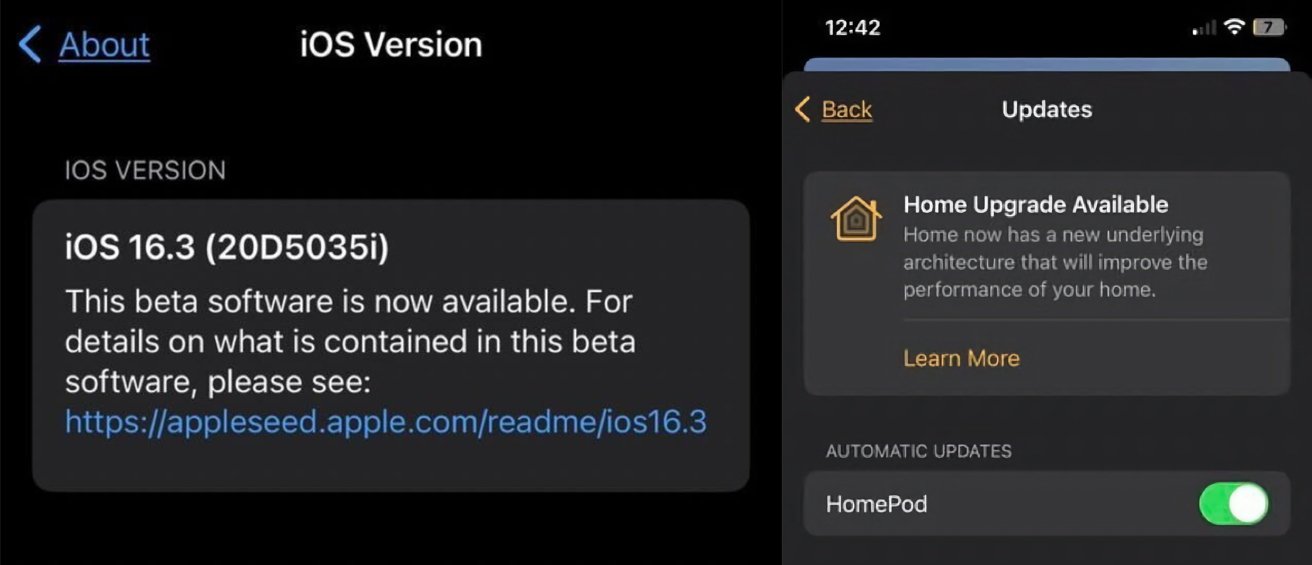

After halting the rollout of HomeKit's new architecture in iOS 16.2, Apple has resumed testing the platform, which has resurfaced in the iOS 16.3 beta.

In December, Apple withdrew the option to upgrade HomeKit to the new architecture, following reports that the update wasn't working properly for users. Apple now appears to be preparing another attempt with the next round of operating system updates.

Screenshots from the iOS 16.3 beta show a "Home Upgrade Available" message in the Home app, offering a "new underlying architecture that will improve the performance of your home." This is the same message that appeared in iOS 16.2 before the upgrade was pulled.

The notification's inclusion in the beta is a strong indication that Apple believes the update is now working properly, and that it intends to release it to the public again.

During the previous attempt, users reported devices stuck in an "updating" state after the upgrade completed, with some devices becoming unresponsive or failing to update fully. At the time, the cause was unclear, as the reports had no obvious factor in common.

With the appearance in the iOS 16.3 beta, it seems Apple is confident it's worked out the problems and is willing to give it a second try.

Malcolm Owen

10 Comments

I’ll be the first person to let a whole bunch of other people try this for several days before I feel confident trying it myself.

I'm stuck in limbo where I can't invite anyone who has ever opened the Home app to my home, because, for some ridiculous reason, no one can join a home on the upgraded architecture without first upgrading their own home. Since the rollout was cancelled, people can't upgrade their homes, so it's impossible for them to join.

I can't downgrade my home even if I reset everything, because despite the upgrade being cancelled, new homes still use the new architecture. Besides, resetting doesn't work properly anymore either, even with the special HomeKit reset profile. It's a mess.

That said, the upgrade seemed to improve the responsiveness and reliability of HomeKit. I'm sure they could have used HomeKit hubs as a bridge between the old and new versions, though that would have given people less incentive to upgrade, of course.