The White House convened the heads of seven companies to drive voluntary commitments to develop safe and transparent AI, but Apple was not among those present, and it's not clear why.

The administration has recently announced that it has secured voluntary commitments from seven leading artificial intelligence (AI) companies to manage the risks posed by AI. This initiative underscores safety, security, and trust principles in developing AI technologies.

The companies that have pledged their commitment include Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI. Interestingly, despite Apple's work in AI and machine learning, the company is missing from the talks.

The absence of Apple from this initiative raises questions about the company's stance on AI safety and its commitment to managing the risks associated with AI technologies.

AI commitments and initiatives

One of the primary commitments made by these companies is to ensure that AI products undergo rigorous internal and external security testing before they are released to the public. The testing aims to guard against significant AI risks like biosecurity, cybersecurity, and broader societal effects.

Furthermore, these companies have taken on the responsibility of sharing vital information on managing AI risks. Sharing that information won't be limited to the industry but will extend to governments, civil society, and academia.

The goal is to establish best practices for safety, provide information on attempts to bypass safeguards, and foster technical collaboration.

In terms of security, these companies are investing heavily in cybersecurity measures. A significant focus is protecting proprietary and unreleased AI model weights, which are crucial components of an AI system.

The companies have agreed that these model weights should only be released when intended and after considering potential security risks. Additionally, they have committed to facilitating third-party discovery and reporting of vulnerabilities in their AI systems, so that any persisting issues are promptly identified and rectified.

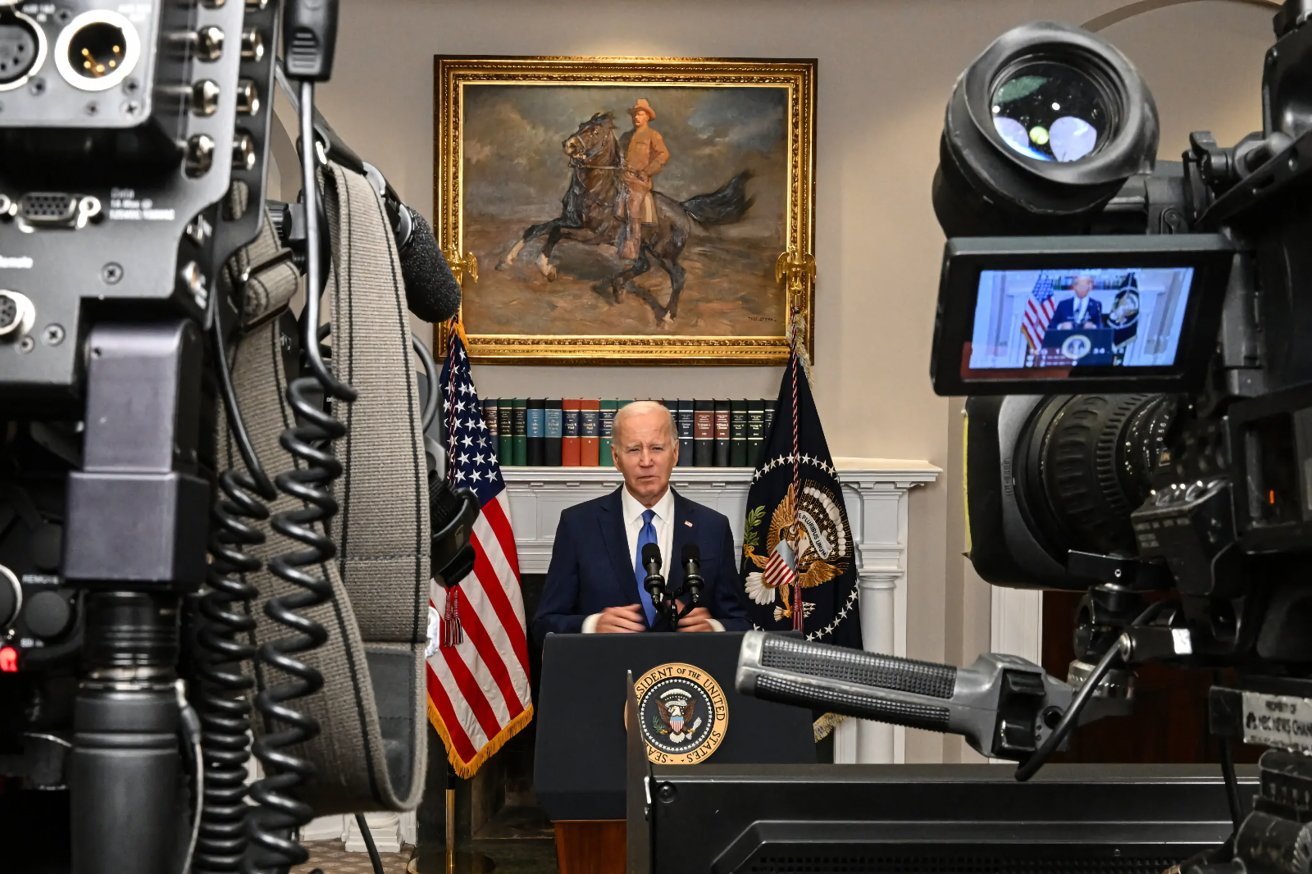

President Biden meets with AI companies on safety standards and commitments. Source: Kenny Holston/The New York Times

To earn the public's trust, these companies are developing robust technical mechanisms to ensure users can identify AI-generated content, such as through watermarking systems. That approach allows creativity with AI to thrive while minimizing the risks of fraud and deception.

Moreover, these companies have committed to publicly reporting on their AI systems, covering both security and societal risks, including the effects of AI on fairness and bias.

Research is also a significant focus. The companies are prioritizing research into the societal risks that AI systems can pose, which includes efforts to avoid harmful bias and discrimination and to protect user privacy.

They are also committed to leveraging AI to address some of society's most pressing challenges, ranging from cancer prevention to climate change mitigation.

The government is also developing an executive order and pursuing bipartisan legislation to promote responsible AI innovation. It has consulted with numerous countries, including Australia, Brazil, Canada, France, Germany, India, Japan, and the UK, to establish a robust international framework for AI development and use.

Andrew Orr

17 Comments

Tesla is also absent.

Maybe because Apple is currently a consumer rather than purveyor of AI? Only rumors say otherwise; no announced product.

Apple should not show up and did not. The government should be visiting Apple. Real Presidents visit an assembly line of Apple production. I'm not political - just stating a Fact. Slow Joe would need a stretcher.

Did the AIs agree?