Apple has finally nailed down the release date of the Apple Vision Pro in the United States, with it reaching customer hands starting on February 2, following a brief pre-order period.

Since its introduction at WWDC 2023, Apple has repeatedly said the Apple Vision Pro will be available sometime in early 2024, without pinning down an exact date. Now, Apple has finally confirmed when its mixed reality headset will become available.

Apple's headset will ship to customers on February 2. Pre-orders begin on January 19.

Just as explained shortly after its introduction, Apple will be making the Apple Vision Pro available only in the United States at launch, with a view to bringing it to more countries later in the year.

Sales will take place via Apple's website as well as a limited number of Apple Store locations across the country. Headsets and lenses will be personally adjusted for each customer, and it's not clear exactly how that's going to happen at this point.

We've reached out to Apple about this matter, and have been told that there is no information to share about the process at this point.

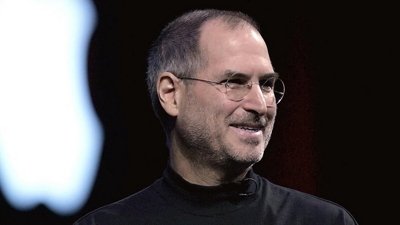

The era of spatial computing has arrived! Apple Vision Pro is available in the US on February 2. pic.twitter.com/5BK1jyEnZN

— Tim Cook (@tim_cook) January 8, 2024

Pricing for the Apple Vision Pro has been known for quite some time, and remains at $3,499, before adding accessories.

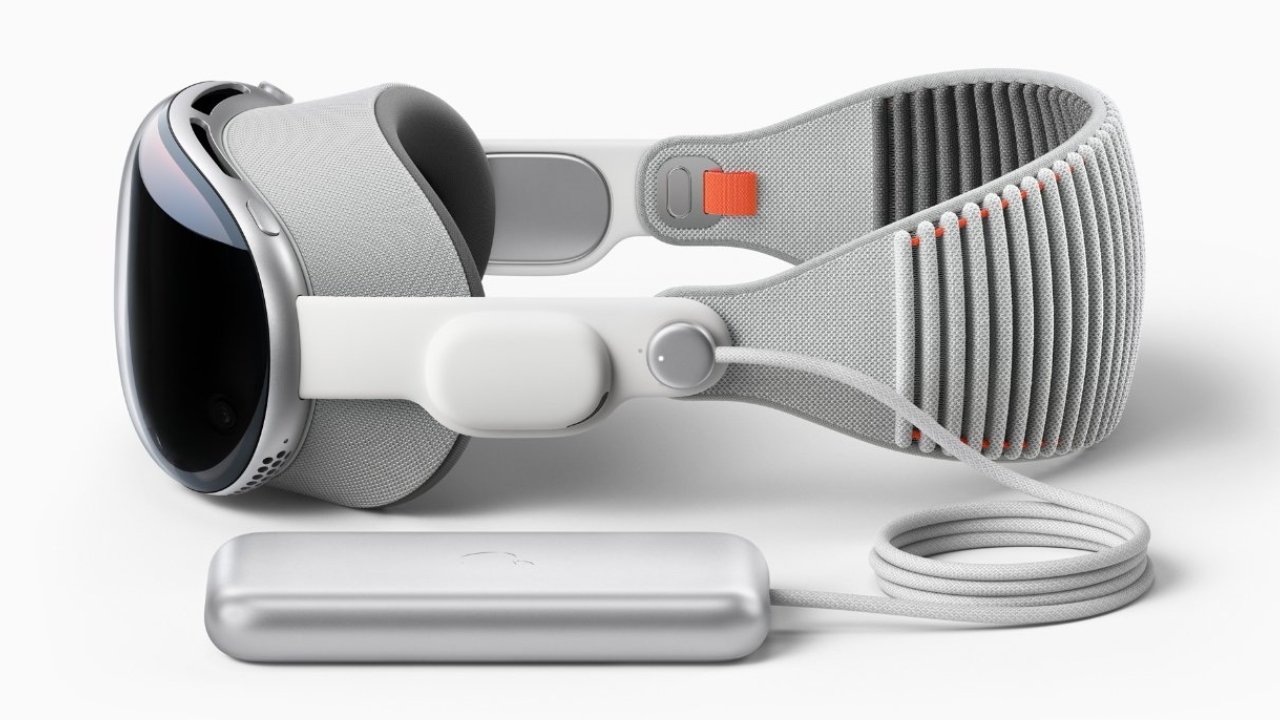

Apple Vision Pro comes with a Solo Knit Band and Dual Loop Band, giving users two options for the fit that works best for them. Apple Vision Pro also includes a Light Seal, two Light Seal Cushions, an Apple Vision Pro Cover for the front of the device, Polishing Cloth, Battery, USB-C Charge Cable, and USB-C Power Adapter.

Lenses cost $99 for "readers" and $149 for prescription Zeiss Optical inserts.

The Apple Vision Pro is the iPhone maker's first proper foray into creating a headset. Effectively a very high-specification VR headset, it uses an array of external sensors and 23 million pixels across its two displays to offer a high-quality mixed reality experience.

Inside is a custom dual-chip Apple Silicon design that splits the work between the M2 chip, which handles application processing, and the R1 chip, which handles headset-specific duties for real-time rendering of spatial computing. Users can turn a Digital Crown to blend between a fully immersive experience and one that mixes in their local environment.

Users can create a Persona for the headset, a digital representation of themselves that appears during FaceTime calls and in applications.

EyeSight is Apple's unique feature that can show the eyes of the user's Persona on the external display, giving the appearance of the user actively looking at someone or something in their local environment. This serves as a way for others to know if the user can see them while wearing the headset, with a visualization appearing when they are fully immersed.

This certainly won't be Apple's only move into head-based spatial computing, but it could be one of its most expensive offerings. Apple is rumored to already be working on a more price-friendly version with a cut in features, as well as a full-fat second-generation model.

Malcolm Owen

35 Comments

Apple clearly don't mind if the vast majority of buyers wait for the less featured version, or they'd have had a wall of secrecy. Letting it be known that there will be a non-Pro version and MkII version in the works tells me they expect first-generation buyers to be fully aware they are pioneers going in with their eyes wide open (with or without prescription lenses, lol). So, I hope the reaction to the MkI will be focused on this, not the price, and that Wall Street doesn't crucify AAPL due to lower-than-expected (as predicted by dick-heads) sales of this product.

No special event?

I am planning to order based on the probability of being able to sell it when I’m done playing with it, hoping to have owned it “rent free.” This based on the expectation of continued demand exceeding supply and no big price drop in the first year or so.

A MacBook Pro with 12 cameras and an R1 co-processor isn't going to get much cheaper than 2500 dollars, which is close to the price of a fully equipped 12.9 iPad Pro. The most important thing is what it can actually do (spatial video/movies/R1 chip capability) to justify the price, no different than all the other Apple devices released in the last 25 years.

What it will be is best in class by a country mile.