Apple has unveiled its mixed-reality headset, the Apple Vision Pro. The ambitious launch of a new platform is set to make waves in the AR market, but the headset won't ship until early 2024, and it will cost $3,499.

Following years of rumors, Apple used its keynote address at the Worldwide Developers Conference to introduce its headset to the world. The Vision Pro is a standalone device similar to the Meta Quest 2, except that Apple takes the concept quite a few steps further.

As a premium headset, it aims to compete on high specifications and advanced features that let Apple's newest device category stand out on its own.

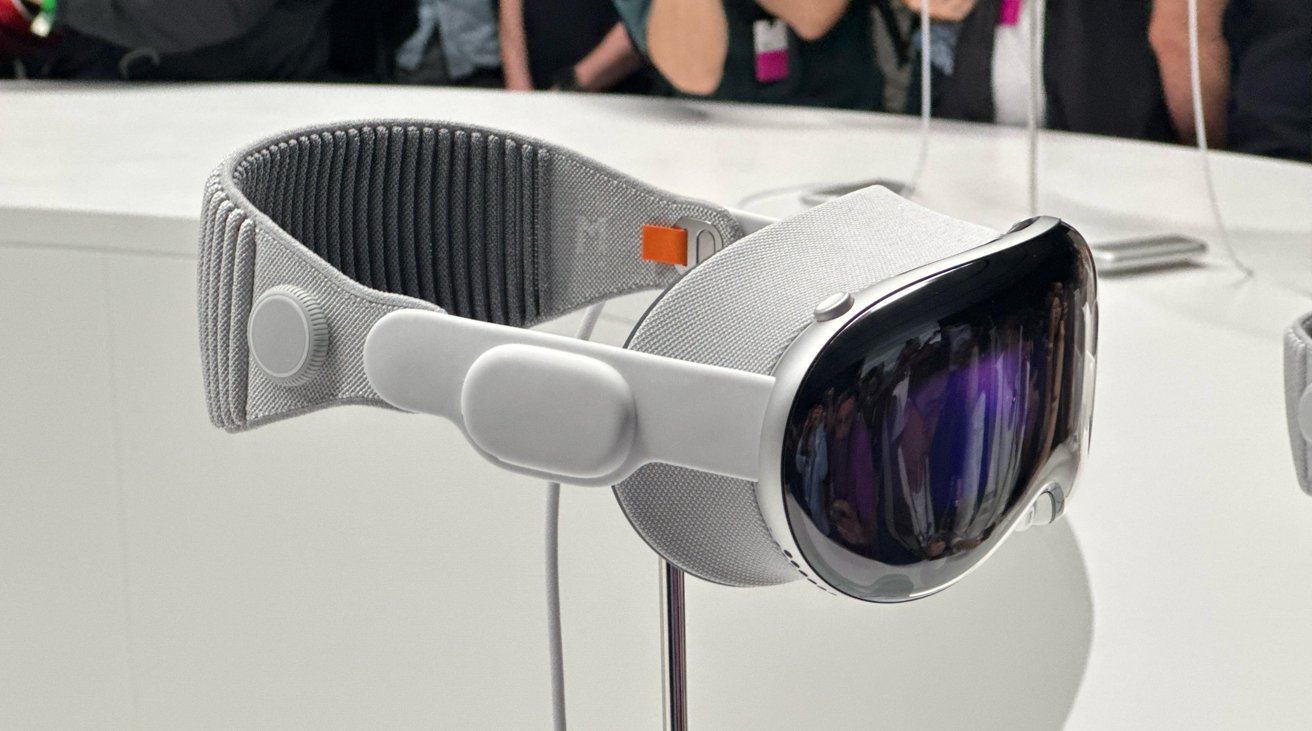

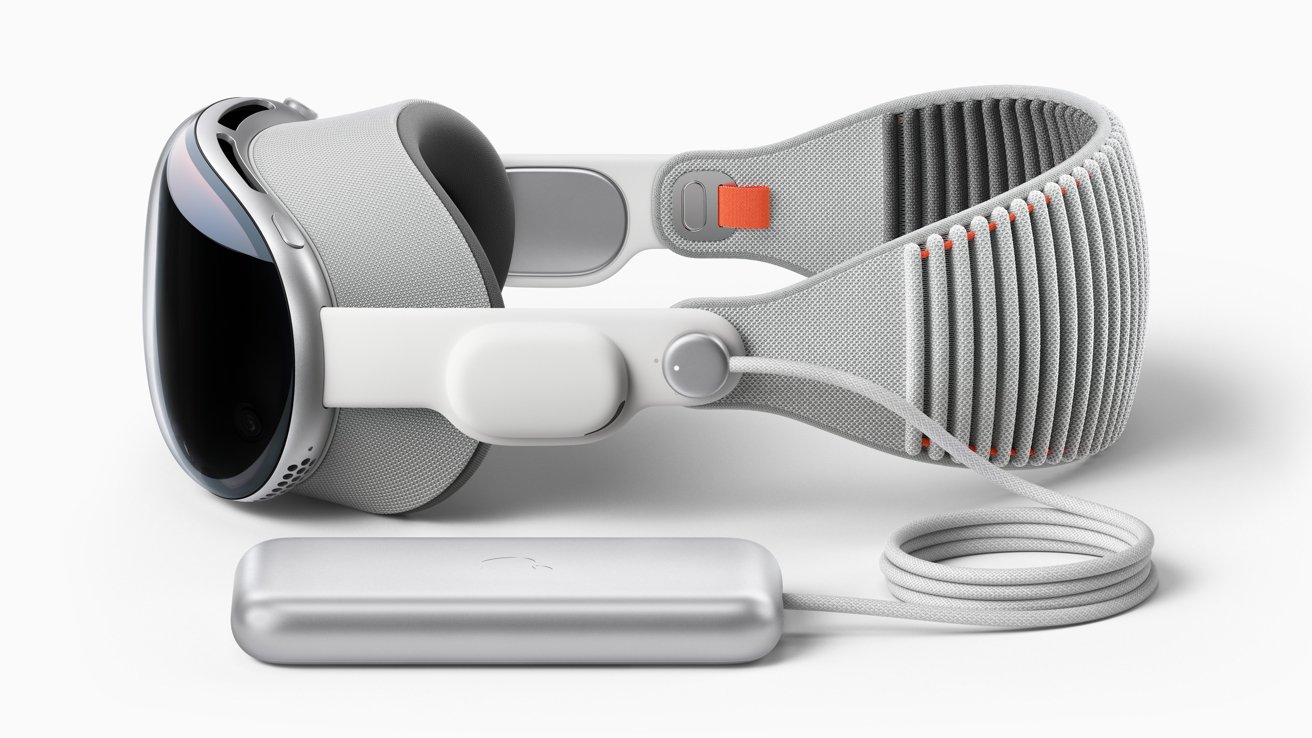

The headset takes the general ski-goggle form that others on the market also use, with silicone straps at the sides and over the top of the head to keep it in place. A cushion layer provides a snug fit against the user's face and keeps it comfortable to wear for long periods.

Billed as Apple's move into "spatial computing" and the first Apple device that users see through rather than look at, the Vision Pro displays apps within the user's physical space. Apps can expand within that space, complete with shadows cast on surfaces to show where they are placed.

Multiple apps can be placed within a space and arranged to suit users' needs. This includes both 2D app panels and 3D objects, while still enabling a full view of the local environment if the user requires it.

To help users manage the VR and AR experience, a Digital Crown similar to the one on the Apple Watch lets them move gradually between the two modes, adjusting between VR and AR viewpoints in fine increments.

The operating system can be navigated in several ways, using hand, finger, and eye tracking handled by cameras inside the headset and facing outward from it. This allows gestures such as pinches to select items on a menu, for example.

Voice control is also available, which is especially useful for text entry and for using Siri.

To give more of a connection to other people in the same room, EyeSight offers others a view of the user's eyes, allowing them to see what they are focusing on. The user's eyes are automatically revealed to other room occupants when the user looks at them.

Though it is a standalone device, Vision Pro takes advantage of the Apple ecosystem and iCloud to synchronize the user's data between it and their Mac, iPhone, or iPad.

In one impressive example, a user could see the display of their Mac within Vision Pro simply by looking at it.

Along with the typical Apple apps, there's an enhanced version of FaceTime with spatial elements. Tiles appear so contacts are life-size, with spatial audio helping the user determine who is speaking.

Documents also appear as separate tiles for co-working within a FaceTime call.

To let others see the user without the headset on, Apple uses a front TrueDepth camera to scan the user's face and create a Persona. Relying on machine learning, the Persona appears on behalf of the user in the call, with face and hand movements reflected in real time.

Vision Pro is also Apple's first 3D camera, recording photos, video, and spatial audio that can later be rewatched in 3D on the headset. Apple refers to these as Spatial Video and Spatial Photos.

For entertainment, Vision Pro provides a Spatial Cinema with an adjustable screen size that also dims the surrounding light in the environment. It's also possible to watch on a 100-foot screen in a virtual environment, making it useful on flights and in other cramped situations.

And yes, there's support for 3D movies.

For gaming, there's controller support and over 100 Apple Arcade titles playable from the first day of availability.

Made from an aluminum alloy, the headset uses an efficient thermal design to cool the system. "Thousands of heads" were analyzed to design a modular system, so that the headset could be configured specifically for the user.

A single piece of "3D-formed laminated glass" is used for the front panel.

The headband is 3D-knitted as a single ribbed piece and attached with a mechanism that lets it be swapped out when required. Providing cushioning and stretch, it also has a Fit Dial so users can adjust it to their particular head size and shape.

A partnership with Zeiss provides vision-correction lenses, so glasses wearers can also enjoy the experience.

A battery pack tethered by a woven cable keeps weight off the headset, allowing it to be worn for longer periods. Apple says the headset can be used all day when plugged into an outlet, and for up to two hours on battery power.

The display system uses a micro-OLED backplane with pixels 7.5 microns wide, fitting 23 million pixels in total across the two displays. A custom three-element lens magnifies the screen and wraps it as widely as possible across the user's field of view.

Audio is handled by a new spatial audio system, with dual-driver audio pods on each side. Audio Raytracing is also used to match the sound to the user's surroundings.

Powering the headset is a dual-chip design built on Apple Silicon. The main chip is an M2, while the second chip, R1, is specialized for real-time sensor processing, reducing latency and motion discomfort.

This results in a 12-millisecond lag, which Apple says is faster than the blink of an eye.

R1 handles input from 12 cameras, five sensors, and six microphones.

EyeSight uses a curved external OLED panel with a lenticular lens to make the user's eyes appear as natural as possible to others in the room.

Apple is introducing SDKs and tools to make it easy for developers to build 3D apps for Vision Pro and its operating system, visionOS, but it's also making sure plenty of apps are available from the start. Thousands of existing iPhone and iPad apps will be usable on Vision Pro without developers needing to make any real changes for compatibility.

For security, Apple introduces Optic ID, an iris-scanning system. Much like Face ID or Touch ID, it will be used to authenticate the user, enable Apple Pay purchases, and handle other security-related tasks.

Apple explains that Optic ID uses invisible LED light sources to analyze the iris, then compares the result against enrolled data stored in the Secure Enclave, a process similar to the one used for Face ID and Touch ID. Optic ID data is fully encrypted, not provided to apps, and never leaves the device.

Continuing the privacy theme, where the user looks on the display and other eye-tracking data aren't shared with Apple or websites. Instead, data from the sensors and cameras is processed at the system level, so apps don't necessarily need to "see" the user or their surroundings to provide spatial experiences.

Vision Pro starts at $3,499 and will be available in early 2024, initially in the United States. Launches in other countries are expected to follow throughout 2024.

Malcolm Owen

William Gallagher

Charles Martin

Amber Neely

Andrew Orr

Christine McKee

244 Comments

FFS!!!!!! It’s not magical. It’s science and engineering.

Looks horrible. Total Bill Gates nerd level. Doesn't even resemble Apple design. Wastes battery with the outside screen.

Iger talking up these amazing new experiences that are just vague ideas, but ending with the reality: “oh. Um. Disney plus.” Pretty sad. So far, not really different use cases than other devices.

This is pretty embarrassing so far. No selling features at all, just doing the same stuff with a silly helmet on.

I wonder if this is going to help you get rid of your myopia.

Intriguing and worth trying in a store. I can tell how it would work for me, as I require corrective lenses.