Apple's digital assistant Siri is poised to receive a considerable upgrade in the near future, involving an awful lot of generative AI and machine learning changes.

Siri was once considered a great introduction to the iPhone and the Apple ecosystem. Over the years, the shine certainly wore off the digital assistant, with Google Assistant proving to be a highly capable rival.

Since the rise of ChatGPT and other large language model (LLM) AI initiatives in recent times, there's been an AI gold rush. With Siri seemingly staying static and being overtaken by rivals, there is now an expectation for Apple to make considerable changes to its smart feature.

According to the New York Times, executives including Craig Federighi and John Giannandrea spent weeks looking at ChatGPT in 2023. Sources said at the time that ChatGPT made Siri appear antiquated.

The discovery led to Apple reorganizing and pushing forward with its own AI projects, to try and catch up.

Now, Apple is anticipated to show off what it has worked on at WWDC on June 10. That is expected to include a Siri that's more conversational and versatile, as well as a generative AI system enabling it to chat.

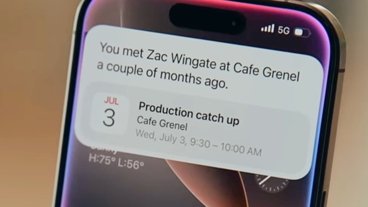

Three people familiar with the work say Siri will be better at dealing with tasks it already can do, such as setting appointments and summarizing text messages. It's an attempt to stand out from ChatGPT's creative leanings and to be more directly productive to users.

Apple also plans to use its on-device processing as a major plus point to customers, since it will be more private than sending data off to the cloud.

AI in progress

We've been talking about — and exclusively breaking — Apple's efforts in the space for some time.

The story so far for Apple's publicly-viewable AI work consists of a few key projects. That includes the multi-modal LLM "Ferret" released in October that can recognize and act upon queries involving regions of images.

A follow-up, Ferret-UI, applied the same concept to complex user interfaces. This enables the LLM to understand what's happening in an app, potentially to explain the information to the user, or to trigger other tasks.

There has also been considerable discussion about Ajax, an LLM that can cover many different functions that Siri can theoretically perform. Examples include text summarization, analyzing whether contacts are involved, and providing more intelligent results to Spotlight.

One thing that Ajax has going for it is that, while complex queries may require server-side processing, it is still able to generate rudimentary text-based responses on-device.

Highlighting the seriousness of its focus on AI, Apple has tried to make its work as legally sound as possible.

While rivals have gone down the route of scraping public sources for data, earning criticism in the process, Apple thought differently. Instead, it offered millions to publishers to access news archives, keeping its own AI training above board.

However, it remains to be seen how effective its year of crunch on AI has been for Siri. We won't truly know until we get to try it out on our own devices in the coming months.

Malcolm Owen


28 Comments

Siri Pro.

Siri has been surpassed by Google's assistant for many years.

So, what features will these LLM algorithms enable? The answers are always vague.

They can summarize emails, so I wouldn't need to read them anymore, and could just read a one-sentence summary. Similarly true for web pages, or anything involving reading. They provide document templates based on inputted materials. I presume a Swift code generator based on prompts will be coming.

Hmm, the response from website advertisers will be interesting. There are many instances where I'd like to extract the 0.1% of a website that is actual content versus the ad-load that takes up 99.9% of the data and 99.999% of the computing power. That's a want, but I never use ad-blockers and decide to either read the website as is, or ignore it.

Maybe I’m the anomaly, but Siri has always worked very well for me for the basic reminder, calendar, weather conditions, currency exchange/math problems, calling, texting, “what song is this” and similar “life organization” tasks I throw at it. The one and only thing that drove me up a wall with Siri was working out the exact wording needed to get it to play my college radio station.

So I am in no way suggesting that Siri is somehow secretly better than the other vocal assistants in this area, or that the rest of you must be “using it wrong,” as I have no direct experience with any other vocal assistants. I just know that Siri works pretty well for me most of the time, but my requests are not very far-ranging and random, either.