A proposed class action suit claims that Apple is hiding behind privacy claims to avoid stopping the storage of child sexual abuse material on iCloud and alleged grooming over iMessage.

Following a UK organization's claims that Apple is vastly underreporting incidents of child sexual abuse material (CSAM), a new proposed class action suit says the company is "privacy-washing" its responsibilities.

A new filing with the US District Court for the Northern District of California has been brought on behalf of an unnamed 9-year-old plaintiff. The complaint lists her only as Jane Doe and says that she was coerced into making and uploading CSAM on her iPad.

"When 9-year-old Jane Doe received an iPad for Christmas," says the filing, "she never imagined perpetrators would use it to coerce her to produce and upload child sexual abuse material ("CSAM") to iCloud."

The suit asks for a trial by jury. Damages exceeding $5 million are sought for every person encompassed by the class action.

It also asks in part that Apple be forced to:

- Adopt measures to protect children against the storage and distribution of CSAM on iCloud

- Adopt measures to create easily accessible reporting

- Comply with quarterly third-party monitoring

"This lawsuit alleges that Apple exploits 'privacy' at its own whim," says the filing, "at times emblazoning 'privacy' on city billboards and slick commercials and at other times using privacy as a justification to look the other way while Doe and other children's privacy is utterly trampled on through the proliferation of CSAM on Apple's iCloud."

The child cited in the suit was initially contacted over Snapchat. The conversations were then moved over to iMessage before she was asked to record videos.

Snapchat is not named in the suit as a co-defendant.

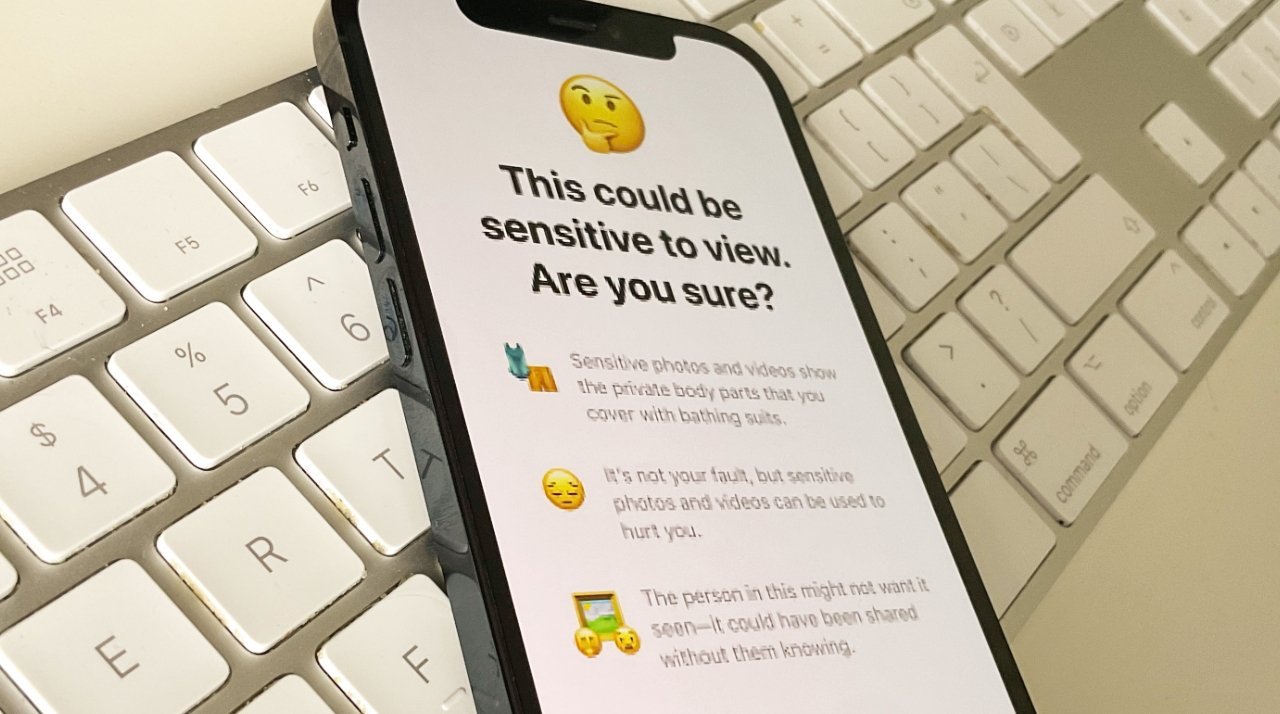

Apple did attempt to introduce more stringent CSAM detection tools. But in 2022, it abandoned them following allegations that the same tools would lead to surveillance of any or all users.

William Gallagher


12 Comments

I’d say people who want to undermine privacy protections are using children as a cover for their goals.

Looks like the FBI would like to see end-to-end encryption forbidden. This would be an absolute disaster for privacy!

Where were the parents when she was uploading to the cloud?

https://www.theregister.com/2024/05/13/e2ee_comment/

Yes it’s all Apple’s fault for not being a more responsible parent. /s