Digital Theater

The first filing, made by departing iPod chief Tony Fadell and colleague John Tang this February, relates to methods of adjusting a portable display system to cater to a user's desires or, more specifically, give the user the impression he or she is in attendance at a live sporting or entertainment event.

In some instances, the user may direct the personal display device to zoom in or out of the displayed media and play back a video while displaying only a portion of the stream. In addition, the user would be able to further adjust the media display of the personal display device by masking parts of the media and adding an overlay on a portion of the display screen.

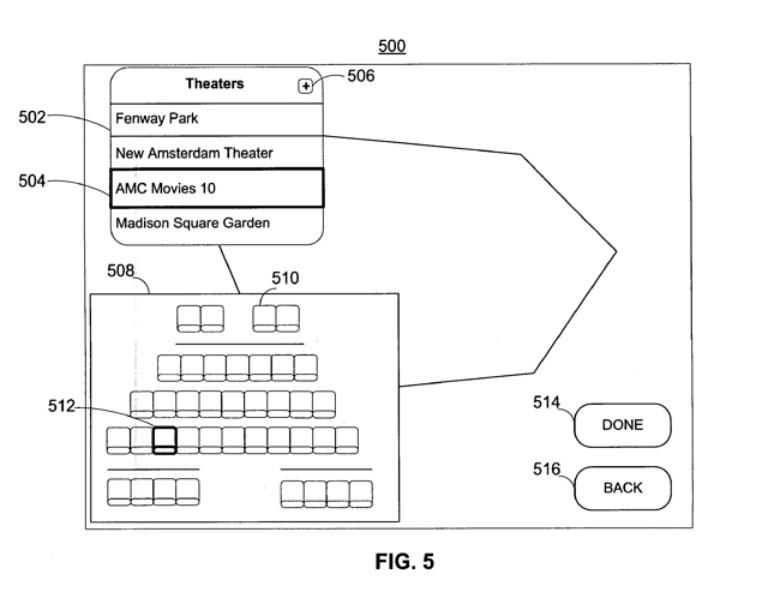

"For example, the user may select a theater, stadium, performance hall, or other location for viewing performances, and direct the personal display device to overlay the outlines of the selected location on the displayed media," Apple said. "In some embodiments, the user may select a particular theater or location to view media as if the user were in the selected theater."

To further enhance the user's sense of being in the selected theater with other patrons, the personal display device may display outlines of other patrons in addition to the outlines of the theater. Those patron outlines could also move, further increasing the realism of the display.

More specifically, Apple said the user may select a particular seat or location within the theater from which to watch the media. In this case, the personal display device would display a seating map from which the user may select a particular seat. In response to receiving a selection of a seat, the display device would adjust the displayed media to give the user the impression that the media is viewed from the selected seat.

"For example, the personal display device may skew or stretch the displayed media to reflect a user's selection of a seat on the side of the theater," according to the filing. "As another example, the personal display device may modify the display of a sporting event to reflect the seat in the stadium from which the user selected to watch the event."
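The filing does not say how the skew would be computed. As a rough illustration only, assuming a simple pinhole model (our assumption, not Apple's), the near edge of the screen looks taller than the far edge from a side seat, and a keystone-style warp would reproduce that ratio. The function name `edge_heights` and its default screen dimensions are hypothetical.

```python
import math

def edge_heights(seat_x, seat_depth, screen_width=10.0, screen_height=5.0):
    """Apparent heights of the screen's left and right edges from a seat.

    Hypothetical pinhole model: the screen is centred at x = 0, the seat sits
    seat_x to the side (positive = right) and seat_depth back from the screen,
    and apparent size falls off as 1 / distance.
    """
    # Distance from the seat to the left edge (x = -W/2) and right edge (x = +W/2).
    left = math.hypot(seat_x + screen_width / 2, seat_depth)
    right = math.hypot(seat_x - screen_width / 2, seat_depth)
    # Nearer edge -> larger apparent height.
    return screen_height / left, screen_height / right
```

For a centre seat the two edges come out equal; moving the seat to the right makes the right edge appear taller, which is the kind of trapezoidal distortion the filing describes as skewing or stretching the picture.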

Alternatively, the software could display a mosaic of multiple media items from which the user may select one to view. In response to a user selecting a particular media item from the mosaic, the personal display device would zoom to the selected item and could mask the other items, superimposing any suitable type of overlay over the mask.

In addition to adjusting the displayed media, the personal display device could adjust other characteristics of the played-back media, such as altering the sound output to reflect the acoustics of a selected theater or the user's selected location within it. It could also introduce additional noise to reflect a sporting environment.

"When the user directs the personal display device to adjust the displayed media such that the entirety of the media provided cannot be displayed on the display (e.g., the user zoomed in the display), the personal display device may enable an option by which the user may move his head, eyes, or another body part to cause the portion of the media displayed to follow the user's movement," Apple said.

"The personal display device may detect and quantify the user's movement using any suitable approach, including for example by integrating one or more sensors in the personal display device. Upon receiving an indication of a user's movement from the sensors, the personal display device may determine the amount and speed of the user's movement, and direct the displayed media to move by an amount and at a speed related to those of the user's movement. In some embodiments, the correlation between the user's movement and the media display adjustments may be non-linear. In some embodiments, after detecting the user's movement, the personal display device may delay moving the displayed media. This may assist in reducing a user's eye fatigue."
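The filing leaves the exact mapping open. A minimal sketch, assuming a power-curve gain and a fixed delay measured in sensor samples (both our own choices; `pan_offset`, `DelayedPanner` and their parameters are hypothetical), shows what a non-linear, delayed response to head movement could look like:

```python
import math
from collections import deque

def pan_offset(head_delta_deg, gain=4.0, exponent=1.5):
    """Map a head rotation (degrees) to a pan offset (pixels) non-linearly.

    Hypothetical curve: magnitude grows as |delta| ** exponent, direction kept.
    """
    magnitude = gain * abs(head_delta_deg) ** exponent
    return math.copysign(magnitude, head_delta_deg)

class DelayedPanner:
    """Releases each computed pan offset only after delay_samples sensor updates."""

    def __init__(self, delay_samples=3):
        # Pre-fill with zero offsets so the first outputs hold the picture still.
        self.queue = deque([0.0] * delay_samples)

    def update(self, head_delta_deg):
        self.queue.append(pan_offset(head_delta_deg))
        return self.queue.popleft()
```

With an exponent above 1, small head jitters barely move the picture while larger turns pan quickly; the queue holds each offset for a few samples before it is applied, loosely mirroring the delay the filing mentions.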

Smart Apple Remote

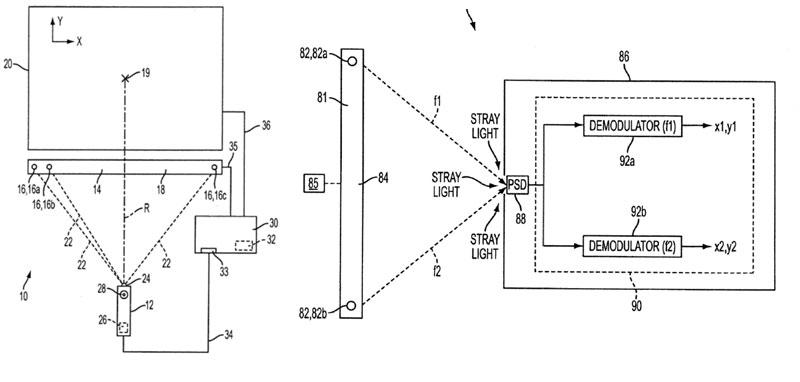

The second filing, made this past July, describes an Apple Remote control system that would be more reliable than the company's existing infrared-based model in that it would be able to better distinguish predetermined light sources from stray light sources.

"Some remote control systems use infrared (IR) emitters to determine the position and/or movement of a remote control," Apple said. "Such systems, however, often experience a common problem in that they may not be able to distinguish desired or predetermined IR light sources from undesirable environmental IR sources, e.g., the sun or a light bulb. Because those systems may mistake environmental IR sources for IR emitters, they may incorrectly determine the position and/or movement of the remote control."

Another common problem is that the systems may not be able to distinguish IR emitters from reflections of the IR emitters, like those from the surface of a table or a window. Apple therefore proposes remote control systems that can distinguish predetermined light sources from stray or unintended light sources, such as environmental light sources or reflections.

"In one embodiment of the present invention, the predetermined light sources can be disposed in asymmetric substantially linear or two-dimensional patterns," the filing explains. "Here, a photodetector can detect light output by the predetermined light sources and stray light sources, and transmit data representative of the detected light to one or more controllers. The controllers can identify a derivative pattern of light sources from the detected light indicative of the asymmetric pattern in which the predetermined light sources are disposed."
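To make the asymmetric-pattern idea concrete, here is a minimal sketch, assuming emitters laid out along a line at hypothetical relative positions 0, 1 and 3 and a photodetector that reports only the horizontal positions of bright spots. It compares normalized gaps, so the match does not depend on how far the remote is from the emitters. The names, pattern and tolerance are illustrative, not taken from the filing.

```python
from itertools import combinations

# Hypothetical emitter layout: gaps of 1 and 2 make the pattern asymmetric.
PATTERN = (0.0, 1.0, 3.0)

def normalized_gaps(points):
    """Gaps between consecutive points, scaled so they sum to 1."""
    gaps = [b - a for a, b in zip(points, points[1:])]
    total = sum(gaps)
    return [g / total for g in gaps]

def find_pattern(detected_x, pattern=PATTERN, tol=0.05):
    """Return (matched_positions, mirrored) for the best match within tol, or None."""
    target = normalized_gaps(pattern)
    mirrored = target[::-1]
    best, best_err = None, tol
    # Try every subset of detected spots; stray spots simply fail to fit.
    for combo in combinations(sorted(detected_x), len(pattern)):
        if combo[-1] == combo[0]:
            continue  # coincident spots: gaps cannot be normalized
        gaps = normalized_gaps(combo)
        for flipped, ref in ((False, target), (True, mirrored)):
            err = max(abs(g - t) for g, t in zip(gaps, ref))
            if err < best_err:
                best, best_err = (combo, flipped), err
    return best
```

Because the pattern is asymmetric, the function can also report whether the match appears mirrored, which hints at the remote's orientation; a symmetric layout could not make that distinction.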

Alternatively, the predetermined light sources can output waveforms modulated in accordance with signature modulation characteristics. By identifying light sources that exhibit the signature modulation characteristics, a controller can distinguish the predetermined light sources from stray light sources that do not modulate their output in accordance with the signature modulation characteristics.
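A minimal sketch of the signature-modulation idea, assuming each emitter repeats a hypothetical 8-chip on/off code and that the receiver collects one brightness sample per chip for each bright spot. It scores every cyclic shift of the code, since the receiver would not know where in the cycle it started sampling. A steady source such as the sun scores zero, and flicker that does not follow the code scores low. The code, threshold and function names are assumptions for illustration.

```python
import math

# Hypothetical on/off code repeated by each predetermined emitter.
SIGNATURE = (1, 0, 1, 1, 0, 0, 1, 0)

def correlation(samples, code):
    """Normalized correlation between brightness samples and a +1/-1 copy of code.

    Assumes len(samples) == len(code), one sample per chip.
    """
    n = len(code)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]
    ref = [1 if c else -1 for c in code]  # zero-mean here: four 1s and four 0s
    num = sum(a * b for a, b in zip(centered, ref))
    denom = math.sqrt(sum(a * a for a in centered) * n)  # ref has norm sqrt(n)
    return num / denom if denom else 0.0  # a steady source has no variation

def best_correlation(samples, code=SIGNATURE):
    """Try every cyclic shift, since sampling may start anywhere in the cycle."""
    return max(correlation(samples, code[k:] + code[:k]) for k in range(len(code)))

def is_predetermined_source(samples, threshold=0.8):
    return best_correlation(samples) >= threshold
```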

Apple added that each predetermined light source can output light at one or more different signature wavelengths. For example, a photodetector module could detect the signature wavelengths using multiple photodetectors, each of which detects one of the signature wavelengths. Alternatively, the photodetector module could include an interleaved photodetector with an array of interleaved pixels, in which different portions of the pixels each detect one of the signature wavelengths.
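For the interleaved photodetector, a minimal sketch, assuming a single row of pixels whose filters alternate between two hypothetical signature wavelengths, splits the row into one channel per wavelength and checks whether a bright spot is dominated by a single channel. Broadband sources such as sunlight or an incandescent bulb would tend to light every channel; the 3:1 ratio is an arbitrary illustrative threshold, and both function names are our own.

```python
def deinterleave(row, num_wavelengths=2):
    """Split an interleaved pixel row into one list per signature wavelength."""
    return [row[i::num_wavelengths] for i in range(num_wavelengths)]

def signature_channel(row, num_wavelengths=2, min_ratio=3.0):
    """Index of the dominant wavelength channel, or None if no channel dominates.

    Assumes num_wavelengths >= 2 and that row covers a single bright spot.
    """
    totals = [sum(ch) for ch in deinterleave(row, num_wavelengths)]
    ranked = sorted(range(num_wavelengths), key=lambda i: totals[i], reverse=True)
    top, runner_up = totals[ranked[0]], totals[ranked[1]]
    # Narrowband emitter: one channel clearly outshines the next brightest.
    if top > 0 and top >= min_ratio * runner_up:
        return ranked[0]
    return None
```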

The 15-page filing is credited to Apple engineers Steve Hotelling, Nicholas King, Duncan Robert Kerr, and Wing Kong Low.

AppleInsider Staff

25 Comments

Mystery Science Theater 3000, coming to an iPhone or iPod near you.

That sounds godawful and gimmicky. Why not also add candy wrapper and coughing sounds as well?

Unless you're a paraplegic who would want this, seriously.

"Oh yeah- let me obstruct my viewing area with some heads!"

Yeah, that first one is real "pie-in-the-sky" stuff. Right out of a sci-fi movie.

Possibly they are patenting the general idea so they can say they were first, but I don't see that happening for 50 years (if at all). The technology just doesn't exist to do that kind of simulation right. Your whole living room would have to act like the holodeck.

Did you see the new CNN "beam me up Scotty/Princess Leia" hologram effect for interviewing on election night?

Now was that a technological advance for journalism or what?

Yesterday they had a segment on how this was actually done. They used upwards of 40 different cameras positioned around the remote interviewee, who stood in front of a solid-color backdrop. They also said they weren't too confident the technology would work leading up to its debut. It sounds like they're aiming to perfect it going forward because they believe it's the future of remote interviews.

Best,

Kasper