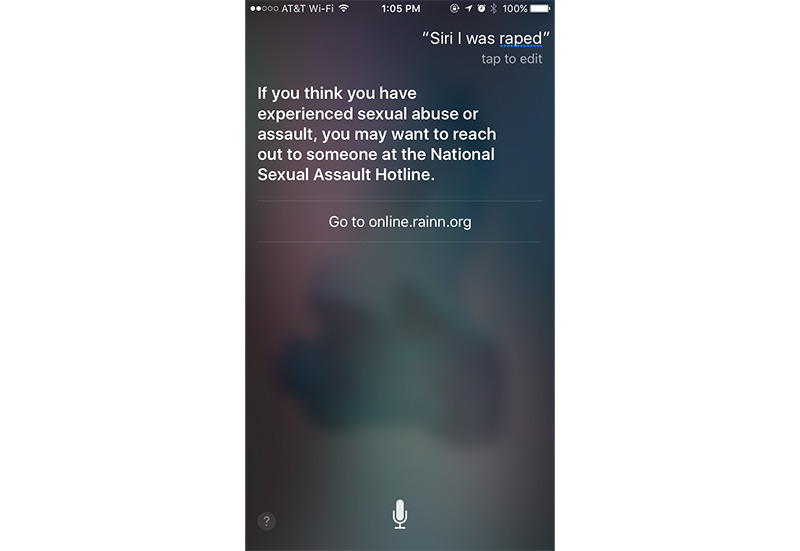

Apple in March updated its Siri virtual assistant to better handle questions about sexual assault and other emergencies, with some queries now leading to responses developed in cooperation with the Rape, Abuse and Incest National Network (RAINN).

On March 17, Apple added phrases like "I was raped" and "I am being abused" to Siri's index and programmed its responses to include Web links to the National Sexual Assault Hotline, the company told ABC News.

The update came just three days after a study published in the journal JAMA Internal Medicine found that four virtual assistants (Siri, Google Now, Microsoft's Cortana and Samsung's S Voice) fell short when it came to offering support for personal emergencies. Apple contacted RAINN shortly after the study was published, the report said.

"We have been thrilled with our conversations with Apple," said Jennifer Marsh, vice president for victim services at RAINN. "We both agreed that this would be an ongoing process and collaboration."

To optimize Siri responses, Apple gathered phrases and keywords RAINN commonly receives through its online and telephone hotlines. The response system was also adjusted to use language and phrases carrying softer connotations. For example, Marsh notes Siri now replies to personal emergencies with phrases like "you may want to reach out to someone" instead of "you should reach out to someone."

With human-machine interactions becoming increasingly common, virtual assistants like Siri might soon be vital conduits through which victims report assault and, if programmed correctly, get the help they need.

"The online service can be a good first step. Especially for young people," Marsh said. "They are more comfortable in an online space rather than talking about it with a real-life person. There's a reason someone might have made their first disclosure to Siri."

Apple is constantly updating Siri to provide more accurate answers, but the service has run into problems when it comes to sensitive social issues. In January, Apple corrected a supposed flaw in Siri's response database that led users searching for abortion clinics to adoption agencies and fertility clinics. The quirk was first discovered in 2011.

Mikey Campbell

Mikey Campbell

-m.jpg)

Thomas Sibilly

Thomas Sibilly

Christine McKee

Christine McKee

Wesley Hilliard

Wesley Hilliard

Malcolm Owen

Malcolm Owen

Mike Wuerthele and Malcolm Owen

Mike Wuerthele and Malcolm Owen

Amber Neely

Amber Neely

William Gallagher

William Gallagher
