A series of major brands have pulled ads from Google's YouTube following reports that their ads are being shown next to terrorist, hate group and other offensive or controversial videos.

Recent reports have drawn attention to problems at Google involving false answers, fake news and offensive or illegal content. Advertisers are growing increasingly concerned about their brands being associated with hate groups, terrorists and religious extremists.

Earlier this month, AppleInsider noted that ads for IBM and other major brands appeared on a fake news clip that gained visibility after Google Home cited fringe content on YouTube in answering whether "Obama was planning a coup."

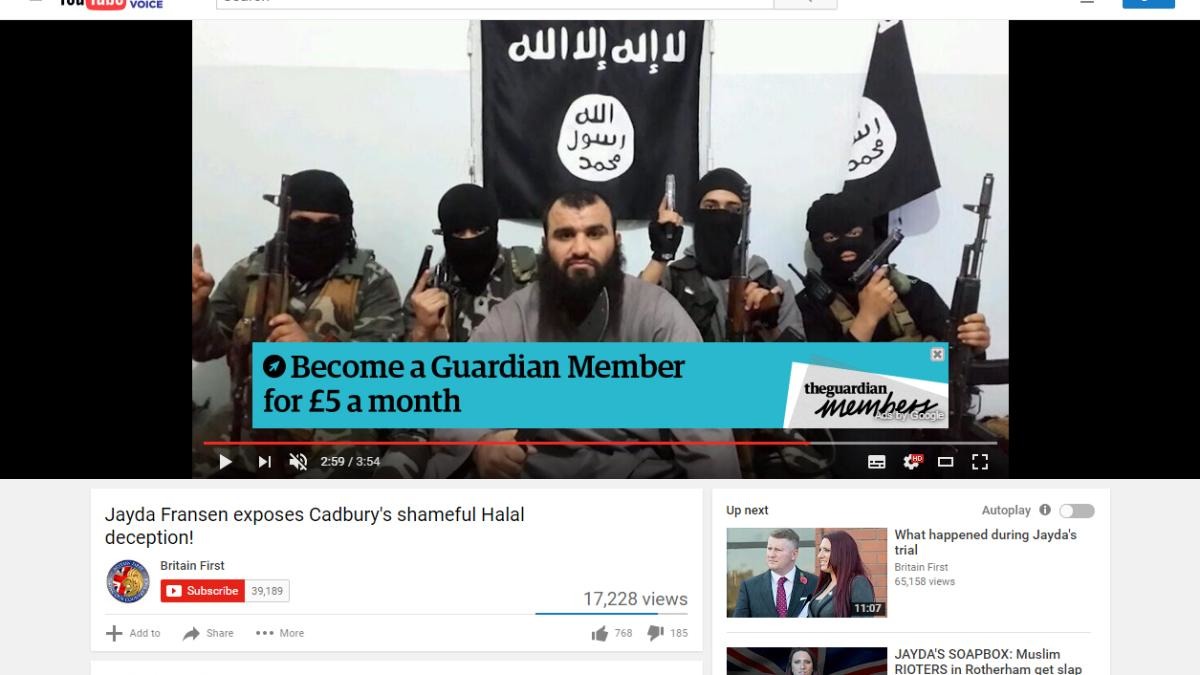

A new report by Bloomberg noted that the U.K. government and the Guardian newspaper have pulled their advertising from YouTube.

"It is completely unacceptable that Google allows advertising for brands like the Guardian to appear next to extremist and hate-filled videos," wrote Guardian News & Media in a statement.

French marketing giant Havas has pulled its brands from both Google and YouTube in the U.K. after "failing to get assurances from Google that the ads wouldn't appear next to offensive material." Havas brands include European mobile carrier O2, British Royal Mail, the BBC, Domino's Pizza and Hyundai Kia.

The withdrawals were limited to the U.K. and appear to be linked to an embarrassing report by the Times of London headlined "Taxpayers are funding extremism."

The report found that Google was placing ads from the U.K. government (including the Home Office, the Royal Navy, the Royal Air Force, Transport For London and the BBC) and from major corporate brands next to "hate videos and rape apologists." These included videos featuring Michael Savage; David Duke, an "antisemitic conspiracy theorist, Holocaust denier and former Imperial Wizard of the Ku Klux Klan"; and Christian extremist pastor Steven Anderson, who praised the murders of the 49 people killed in the Pulse nightclub shooting in Orlando, Florida.

After finding that Google was running ads for its charity campaign supporting disadvantaged youth on extremist YouTube videos, cosmetics brand L'Oreal stated, "We are horrified that our campaign — which is the total antithesis of this extreme, negative content — could be seen in connection with them."

Bloomberg characterized the withdrawals as a "growing backlash" against Google's automated selling of online ads, and against an advertising platform that does little to keep advertisers' brands from mingling with hateful, extremist content that is illegal in some countries. Google's problems are shared by Facebook, which has also been criticized for spreading viral fake news, violent content and extremist messaging on its platform.

Together, Google and Facebook have captured the majority of the global display advertising market by automating the placement of ads next to content without any real human curation. Both have announced plans to give advertisers more control over which content their ads support and their brands are associated with.

Apple's advertising in iTunes and the App Store is largely protected by the company's efforts to curate its own content, but Apple has also drawn criticism, both for allowing moderately offensive content and, in the other direction, for erecting a walled garden that stifles unrestricted speech.

Daniel Eran Dilger

17 Comments

I'm starting to get the feeling this scumbag company is gonna go downhill soon.

I can't believe this is still an issue. Even by google standards this is ridiculous. google has known about this for years:

http://www.chicagotribune.com/news/nationworld/chi-youtube-terrorist-propaganda-20150128-story.html

google's response, back in 2015, was to whine and exaggerate how hard it is. Pretty pathetic. They were basically saying it's not a priority and they don't want to dedicate resources to it (i.e. they want to keep raking in the ad dollars).

This is classic google. They were even arrogant enough to imply that they're a useful public utility, and that other people and states should come in and help filter the terrorist propaganda for them.

But, but, but AI was going to eliminate this problem! As well as find the cure for death, banish recessions forever, and create perfect soulmates for pasty-faced basement dwellers! Wait, wait... I know what's going on. This is all Fake News!