Inner workings of Apple's 'Face ID' camera for 'iPhone 8' detailed in report

Apple is widely rumored to integrate a cutting-edge facial recognition solution in a next-generation iPhone many are calling "iPhone 8," and a report published Friday offers an inside look at the technology before its expected debut on Tuesday.

In a note to investors seen by AppleInsider, KGI analyst Ming-Chi Kuo details the components, manufacturing process and behind-the-scenes technology that make Apple's depth-sensing camera tick.

Referenced in HomePod firmware as Pearl ID, and more recently as "Face ID" in an iOS 11 GM leak, Apple's facial recognition system is expected by some to serve as a replacement for Touch ID fingerprint reader technology. As such, the underlying hardware and software solution must be extremely accurate, and fast as well.

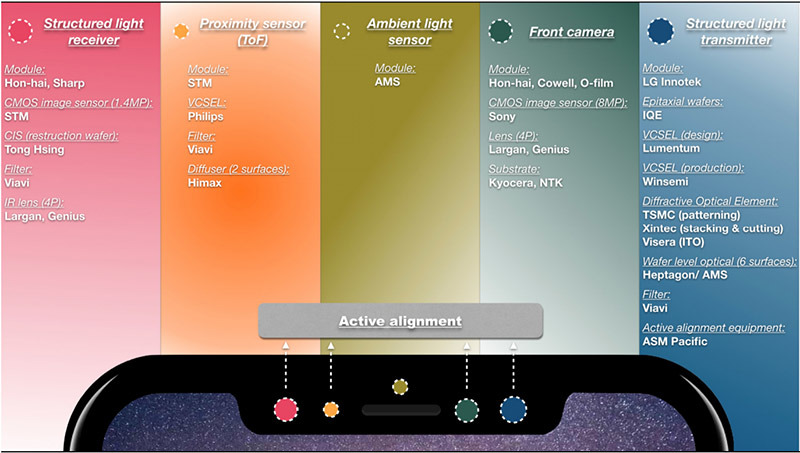

According to Kuo, Apple's system relies on four main components: a structured light transmitter, a structured light receiver, a front camera and a time of flight/proximity sensor.

As outlined in multiple reports, the structured light modules are likely vertical-cavity surface-emitting laser (VCSEL) arrays operating in the infrared spectrum. These units are used to collect depth information which, according to Kuo, is integrated with two-dimensional image data from the front-facing camera. Using software algorithms, the data is combined to build a composite 3D image.
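
Kuo's note does not describe the algorithms involved, so the following is only a rough illustration of how depth readings and a 2D image can be merged in general. It assumes a textbook pinhole camera model; the function name `fuse_depth_and_image` and the parameters `fx`, `fy`, `cx` and `cy` (focal lengths and principal point, in pixels) are hypothetical and are not drawn from the report or from any Apple software.

```python
def fuse_depth_and_image(depth, image, fx, fy, cx, cy):
    """Combine a depth map with a 2D image into a list of 3D points.

    depth: rows of distances in meters (0 or less means "no reading").
    image: rows of pixel values, same shape as depth.
    fx, fy, cx, cy: assumed pinhole camera parameters, in pixels.
    Returns a list of (x, y, z, pixel) tuples.
    """
    points = []
    for v, (depth_row, image_row) in enumerate(zip(depth, image)):
        for u, (z, pixel) in enumerate(zip(depth_row, image_row)):
            if z <= 0:
                continue  # skip pixels with no valid depth reading
            # Back-project pixel (u, v) at distance z into 3D space.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z, pixel))
    return points


# Tiny 2x2 example with made-up values.
depth = [[0.5, 0.0],
         [0.5, 0.5]]
image = [[10, 20],
         [30, 40]]
cloud = fuse_depth_and_image(depth, image, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
```

Each resulting point carries both a position and the pixel value seen at that position, which is the basic idea behind a composite 3D image.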

Kuo points out that structured light transmitter and receiver setups have distance constraints. With an estimated 50 to 100 centimeter hard cap, Apple needs to include a proximity sensor capable of performing time of flight calculations. The analyst believes data from this specialized sensor will be employed to trigger user experience alerts. For example, a user might be informed that they are holding an iPhone too far from or too close to their face for optimal 3D sensing.
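
The arithmetic behind a time of flight reading is simple: light travels to the subject and back, so distance is the speed of light multiplied by half the round-trip time. The sketch below illustrates that calculation and the kind of alert Kuo describes. The function names and the `min_cm` threshold are hypothetical, and the `max_cm` default simply takes the upper end of the estimated 50 to 100 centimeter cap; none of this reflects Apple's actual implementation.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_cm(round_trip_seconds):
    """Convert a light pulse's round-trip time into a distance in cm."""
    # Halve the round trip to get the one-way distance, then convert m to cm.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0 * 100.0


def range_hint(distance_cm, min_cm=20.0, max_cm=100.0):
    """Return a user-facing hint. min_cm is an illustrative assumption;
    max_cm is the upper end of the range estimated in the report."""
    if distance_cm < min_cm:
        return "too close"
    if distance_cm > max_cm:
        return "too far"
    return "ok"


# A pulse that returns after 4 nanoseconds is roughly 60 cm away.
distance = tof_distance_cm(4e-9)
hint = range_hint(distance)
```

The example also shows why such sensors need very precise timing: at these distances the round trip lasts only a few nanoseconds.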

In order to ensure accurate operation, an active alignment process must be performed on all four modules before final assembly.

Interestingly, the analyst notes the ambient light sensor deployed in the upcoming iPhone will support True Tone display technology. Originally introduced in 2016, True Tone tech dynamically alters device display color temperatures based on information gathered by ambient light sensors. This functionality will also improve the performance of the 3D sensing apparatus, Kuo says.

The analyst believes the most important applications of 3D sensing to be facial recognition, which could replace fingerprint recognition, and a better selfie user experience.

Kuo believes all OLED iPhone models, in white, black and gold, will feature a black coating on their front cover glass to conceal the VCSEL array, proximity sensor and ambient light sensor. The prediction is in line with current Apple device designs that obscure components from view.

Mikey Campbell

37 Comments

If you can build a composite 3D image from all that data, Apple shouldn't spoil the fun by limiting it to just face recognition. It could likely be used to create 3D models of desktop-sized objects for a variety of applications.

It is just amazing this guy can get something this detailed from the Apple supply chain. Pretty sure he has his man inside Apple, and pretty high up.

So how does this technology work in low light or with no light? Via infrared, or does a normal light shine in one's face?