Apple and IBM late Monday announced an expansion to their existing partnership that will allow customers to roll out advanced in-app machine learning capabilities through Apple's Core ML and IBM's Watson technology.

As detailed by TechCrunch, the expanded partnership lets clients develop machine learning tools based on Watson technology, then deploy those assets in apps on Apple's portable devices.

Called Watson Services for Core ML, the program lets employees using MobileFirst apps equipped with the technology analyze images, classify visual content and train models using Watson Services, according to Apple. Watson's Visual Recognition service delivers pre-trained machine learning models that support image analysis for recognizing scenes, objects, faces, colors, food and other content. Importantly, image classifiers can be customized to suit client needs.

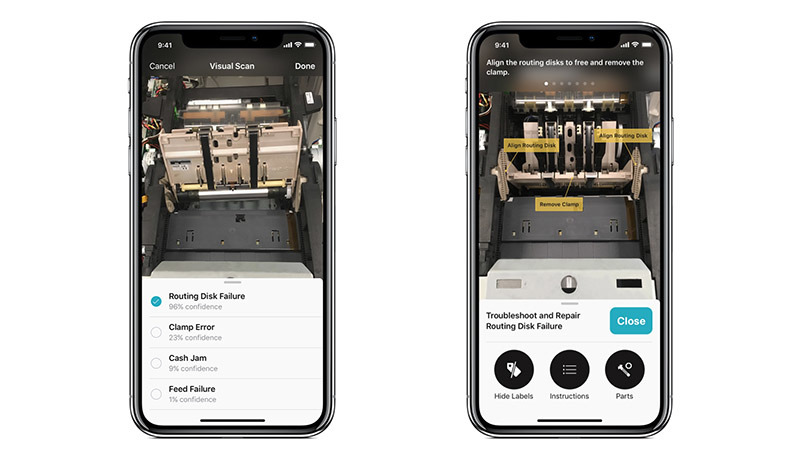

For example, a machine learning model integrated into an iOS enterprise app might be trained, using Watson's image recognition capabilities, to identify a broken appliance from a photo or a live iPhone camera view. After the app determines the make and model, a technician can ask it to run a database query for repair parts, return diagnostic procedures, identify parts onscreen or even assess potential problems.

Integrating Watson technology into an iOS app is a fairly straightforward workflow. Clients first build a machine learning model with Watson, which draws on an offsite data repository. The model is then converted to the Core ML format, implemented in a custom app and distributed through IBM's MobileFirst platform.

Introduced at Apple's Worldwide Developers Conference last year, Core ML is a framework that facilitates the integration of trained neural network models built with third-party tools into iOS apps. It is part of Apple's push into machine learning, which began in earnest with iOS 11 and the A11 Bionic chip.

"Apple developers need a way to quickly and easily build these apps and leverage the cloud where it's delivered," said Mahmoud Naghshineh, IBM's general manager, Apple partnership.

On that note, IBM is also introducing IBM Cloud Developer Console for Apple, a cloud-based service that simplifies the process of building Watson models into an app. The arrangement allows data to flow back and forth between an app and its back-end database, meaning the underlying machine learning model can improve over time if the client so chooses. Users can also tap into IBM cloud services covering authentication, data, analytics and more.

"That's the beauty of this combination. As you run the application, it's real time and you don't need to be connected to Watson, but as you classify different parts [on the device], that data gets collected and when you're connected to Watson on a lower [bandwidth] interaction basis, you can feed it back to train your machine learning model and make it even better," Naghshineh told TechCrunch.

Apple and IBM first partnered on the MobileFirst enterprise initiative in 2014. Under terms of the agreement, IBM handles hardware leasing, device management, security, analytics, mobile integration and on-site repairs, while Apple aids in software development and customer support through AppleCare.

IBM added Watson technology to the service in 2016, granting customers access to in-house APIs such as Natural Language Processing and Watson Conversation. Today's machine learning capabilities extend those efforts.

A number of companies now rely on MobileFirst apps to conduct business operations, from air travel to healthcare to telecommunications. Last year, Banco Santander brought the solution into the realm of banking with a suite of apps designed to deliver relevant data to financial specialists. Most recently, IBM expanded its "Garage" concept, or physical office space dedicated to MobileFirst development, with new facilities in Shanghai and Bucharest.

AppleInsider Staff
