The iPhone XS and iPhone XS Max have an externally similar camera assembly to the iPhone X, but under the lens is a new system. AppleInsider delves into the new camera, and what it will do for you.

On paper, the cameras in Apple's latest flagship iPhones look much like those of past models, yet they are quite different in practice. Calling it a "new dual-camera system," Apple carries over the twin 12-megapixel shooter layout introduced with iPhone X: a wide-angle lens and a 2X telephoto lens stacked one atop the other.

In fact, looking at the raw specs, the iPhone XS and the iPhone X appear to have largely unchanged cameras. They share identical megapixel counts, f-numbers, and other key aspects, but that paints only part of the picture. Behind those lenses are larger sensors, a faster processor and an improved image signal processor (ISP). Those, combined with several other new features, make these the best cameras ever to grace an iPhone.

The main wide-angle camera has a new, larger sensor. The module boasts a 1.4-micrometer pixel pitch, up from the 1.22-micrometer pixel pitch found on the iPhone X. This roughly 15-percent increase in pixel pitch, which works out to about 32 percent more area per pixel, should greatly help with light sensitivity, and indeed Apple SVP of Worldwide Marketing Phil Schiller said as much onstage on Wednesday.
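The arithmetic behind those pitch figures can be sketched in a few lines of Python. This is an illustration only: the function name `pixel_gain` is made up for this example, and it assumes square pixels whose light-gathering ability scales with surface area, which ignores microlenses, wiring and other sensor design details.

```python
def pixel_gain(old_pitch_um: float, new_pitch_um: float) -> tuple[float, float]:
    """Return (linear_gain, area_gain) as fractions, e.g. 0.15 for 15 percent.

    Assumption: pixels are square, so area scales with the square of the pitch.
    """
    ratio = new_pitch_um / old_pitch_um
    return ratio - 1.0, ratio ** 2 - 1.0


# Pitch figures quoted in the article: iPhone X at 1.22 um, iPhone XS at 1.4 um.
linear, area = pixel_gain(1.22, 1.4)
print(f"pitch: +{linear:.1%}, area: +{area:.1%}")
```

Under those assumptions the pitch grows by about 15 percent while the area of each pixel grows by about 32 percent, which is the number that matters most for light gathering.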

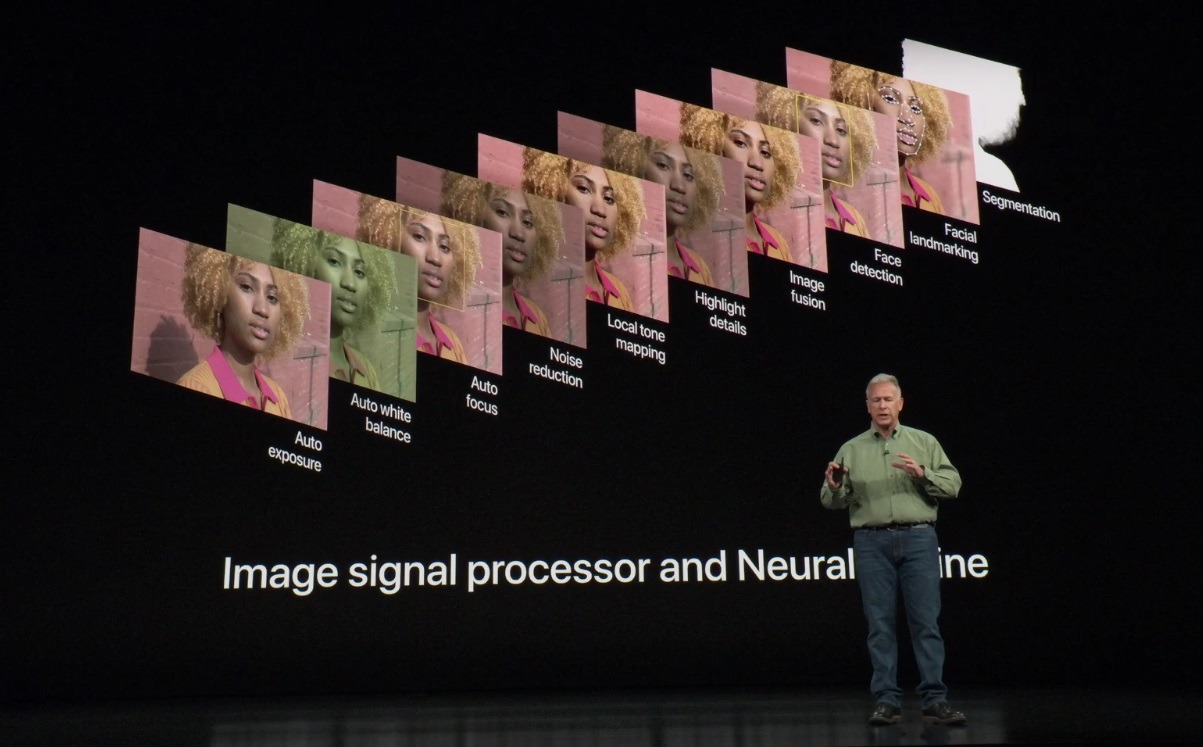

Apple has also tightly integrated the ISP with the newly upgraded Neural Engine found in its A12 Bionic processor. The A12 Bionic is an extremely powerful chip that incorporates a number of upgrades over its A11 Bionic predecessor, including faster and more efficient processing cores, and a beefed-up GPU. More importantly, the now 8-core Neural Engine plays a larger role in capturing and processing images.

For example, the Neural Engine assists with facial recognition, facial landmarks and image segmentation. Image segmentation helps separate the subject from the background, and is likely how the iPhone XR simulates Portrait Mode photos with only a single-lens setup.

The additional speed allows more information to be captured, which has enabled Apple to build in a new Smart HDR feature. High Dynamic Range photos have been available on iPhone for some time, but Smart HDR is more accurate and snappier because the faster processor can capture even more data.

Portrait Mode has quickly become one of the most popular features on iPhones, and it gets even better with iPhone XS. A new advanced depth control feature gives users finer control over bokeh.

After shooting a Portrait Mode photo, users can edit the image and adjust the simulated aperture, increasing or decreasing the amount of background blur. The lower the on-screen F value, the wider the "aperture," presenting more blur and larger bokeh in the background.
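For readers wondering why a lower f-number means more blur, here is a minimal sketch based on the standard thin-lens circle-of-confusion formula from optics. It is not Apple's method, which is computational and works from depth data. The function name `blur_circle_mm` and the 50 mm, 2 m and 5 m example values are hypothetical, chosen only to illustrate the relationship.

```python
def blur_circle_mm(focal_mm: float, f_number: float,
                   focus_mm: float, background_mm: float) -> float:
    """Diameter of the blur circle on the sensor for an out-of-focus point.

    Thin-lens model: the lens is focused at focus_mm, and the point being
    blurred sits at background_mm. All distances are in millimeters.
    """
    aperture_mm = focal_mm / f_number                  # physical aperture diameter
    magnification = focal_mm / (focus_mm - focal_mm)   # magnification at the focus plane
    return aperture_mm * magnification * abs(background_mm - focus_mm) / background_mm


# Hypothetical scene: 50 mm lens, subject at 2 m, background at 5 m.
for n in (1.4, 2.8, 5.6, 16.0):
    blur = blur_circle_mm(50.0, n, 2000.0, 5000.0)
    print(f"f/{n}: blur circle {blur:.3f} mm")
```

In this model the blur diameter is inversely proportional to the f-number, so halving the f-number doubles the size of the background blur. A depth control slider that mimics a real lens would be expected to follow a similar trend.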

Lastly, a new and improved True Tone flash helps brighten low-light photos.

Turning to video, there are fewer, but still important, improvements. When shooting at 30 frames per second rather than 60, the dynamic range of the captured video is extended. This lends itself to more detailed clips, which is especially noticeable on the HDR10-enabled displays. As demoed in Apple's keynote, low-light performance is also significantly improved, with less noticeable grain and noise.

Cameras are always important to iPhones, and never more so than during the S-model years, when the phones tend to lack any large physical changes to the design. AppleInsider will be going hands-on with the latest round of iPhones soon to truly put the cameras to the test.

Andrew O'Hara

16 Comments

...I wish they would give angle of view or 35mm focal length equivalents for the zoom(s) - as a photography enthusiast the features seem very compelling, yet are such basics as focal length a core starting point...? I shoot 21mm equivalent now - to me that is 'wide angle' yet to others 28 or even 35 are just fine... The unfortunately discontinued Zeiss ExoLens Pro 'lens system' add on offered 18mm equivalent, with excellent reviews (sharpness, etc) and (to me) indiscernible barrel distortion.

Anyone...?

In photography, there are combinations of shutter speed and f/stop that yield the same amount of light. Generally speaking, the smaller the f/stop (larger number) the greater the depth of field. However, if you change one, the other must as well. For example, f/8 at 1/500 second yields the same amount of light as f/11 and 1/250 second. The depth of field of f/11 is greater than the depth of field of f/8. Apple's trick must be using the depth information stored with the image, not actually changing the f/stop.
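The equivalence described in the comment above can be checked with the standard exposure value formula, EV = log2(N² / t), where N is the f-number and t is the shutter time in seconds. The sketch below is only an illustration, and the function name `exposure_value` is made up for this example. Note that the marked "f/11" stop is a rounded label for 8 × √2 ≈ 11.3, so the nominal numbers differ by a small fraction of a stop.

```python
import math


def exposure_value(f_number: float, shutter_s: float) -> float:
    """Exposure value: settings with equal EV admit the same amount of light."""
    return math.log2(f_number ** 2 / shutter_s)


one_stop_down = 8 * math.sqrt(2)  # the exact value behind the nominal "f/11"

print(exposure_value(8, 1 / 500))              # f/8 at 1/500 s
print(exposure_value(one_stop_down, 1 / 250))  # exact one-stop trade
print(exposure_value(11, 1 / 250))             # nominal f/11, marginally lower EV
```

The first two settings give the same exposure value, which is the trade the comment describes: one stop less aperture is offset by one stop more shutter time, while depth of field changes with the aperture alone.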