Apple is continuing to come up with ways to secure its devices while keeping them as easy as possible to use, with one concept involving unlocking an iPhone or iPad and performing a Siri request, but only if the voice it hears matches that of its owner.

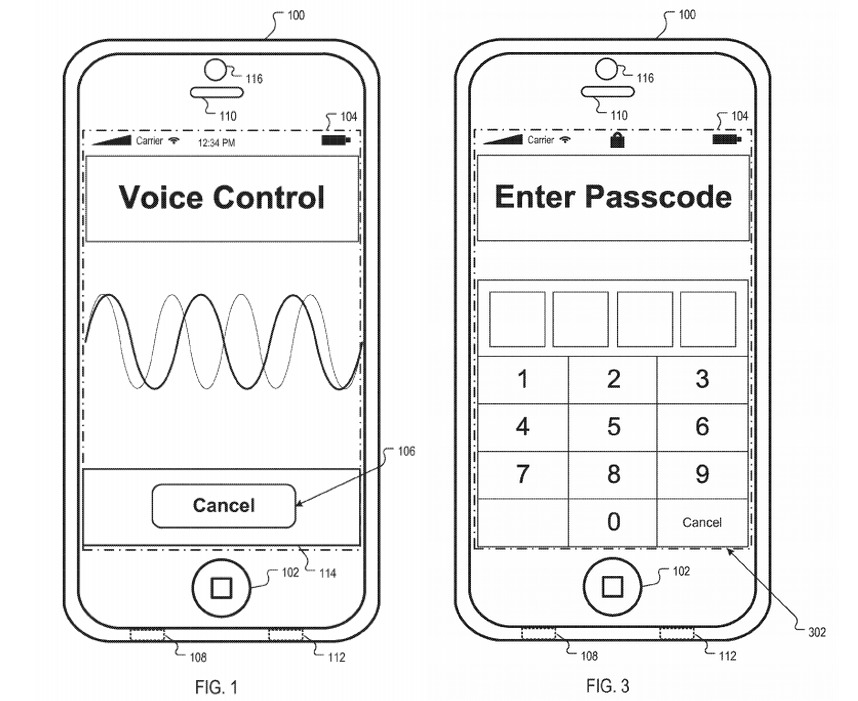

Granted by the U.S. Patent and Trademark Office on Tuesday, the patent for "Device access using voice authentication" is relatively straightforward: the device listens to the voice behind a spoken request and determines whether it belongs to the main registered user.

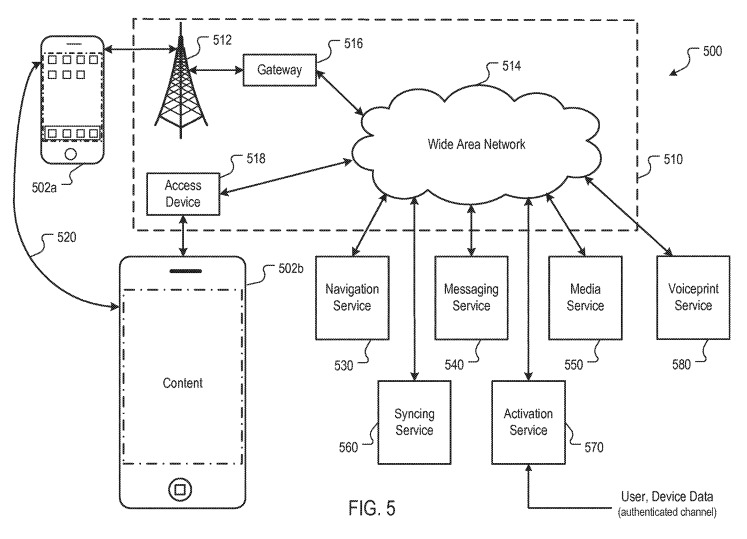

According to the patent, a device capable of receiving speech input from a user could create a "voice print" for the owner from multiple examples of their speech. This text-independent voice print would capture characteristics of the user's voice to build a model, either on the device or via an external service, with the result serving as the point of comparison for future checks.

The voice print could use "statistical models of the characteristics" of a user's pronunciation of phonemes to create the signature. In theory, this could include characteristics such as voicing, silences, stop bursts, nasal or liquid interference, frication, and other elements.

By being text-independent, the voice print would theoretically be able to determine the speaker's identity just from normal speech, without requiring the use of a specific passphrase. This would be a better way of dealing with voice-based authentication in public situations, where users may not wish to loudly state a passphrase they would rather keep private.

When the device receives a vocal command, it creates a model based on the uttered phrase, which it then compares against the already-established voice print. If the similarity meets a certain threshold, the device could unlock and then carry out the spoken command.
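To make the enroll-then-compare idea concrete, here is a minimal sketch. It is not the patent's method: it assumes each utterance has already been reduced to a fixed-length feature vector by some speaker-feature extractor (not shown), swaps in simple averaging for the "statistical models" the patent describes, and uses cosine similarity against a hypothetical threshold of 0.8. The names `enroll`, `cosine_similarity`, `is_owner`, and `MATCH_THRESHOLD` are illustrative only.

```python
import math
from typing import List, Sequence

# Assumption: every utterance is already a fixed-length feature vector
# produced by some speaker-feature extractor that is not shown here.
FeatureVector = Sequence[float]

# Hypothetical similarity threshold; a real system would tune this value.
MATCH_THRESHOLD = 0.8


def enroll(samples: List[FeatureVector]) -> List[float]:
    """Build a simple 'voice print' by averaging several enrollment samples."""
    if not samples:
        raise ValueError("need at least one speech sample to enroll")
    dim = len(samples[0])
    if any(len(s) != dim for s in samples):
        raise ValueError("all samples must have the same length")
    return [sum(s[i] for s in samples) / len(samples) for i in range(dim)]


def cosine_similarity(a: FeatureVector, b: FeatureVector) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_owner(voice_print: FeatureVector, utterance: FeatureVector,
             threshold: float = MATCH_THRESHOLD) -> bool:
    """Accept the speaker if the new utterance is similar enough to the print."""
    return cosine_similarity(voice_print, utterance) >= threshold


if __name__ == "__main__":
    print_ = enroll([[1.0, 0.2, 0.1], [0.9, 0.3, 0.0]])
    print(is_owner(print_, [1.0, 0.25, 0.05]))  # close to the enrolled voice
    print(is_owner(print_, [0.0, 0.0, 1.0]))    # very different voice
```

Because the comparison only needs a feature vector from whatever was said, no fixed passphrase is involved, which mirrors the text-independent property described above.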

The patent also suggests that a voice print match may not need to unlock the phone entirely, but could still allow the spoken request to be performed. For example, Siri could provide a verbal and text-based response to a query about a general topic if it detects the user's voice, but would refrain from providing a response involving user data unless the device had already been unlocked by other means.

A user could even choose to let Siri access specific functions that use personal data while the device is locked, but only if their voice is recognized. This would still leave the device available to other people for general, non-user-specific Siri queries, without permitting access to personal data.

If the user fails to convince the device of their identity by speech alone, it offers secondary authentication methods, such as other types of biometric security or a passcode.
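The access rules described in the last few paragraphs can be read as a small decision table. The sketch below is one hedged interpretation, not Apple's implementation: the `Outcome`, `Request`, and `Settings` types, the `unlock_on_voice_match` preference, and the `locked_personal_functions` opt-in list are all hypothetical names, and `voice_matched` is assumed to come from a threshold check like the one the patent describes.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import FrozenSet


class Outcome(Enum):
    UNLOCK_AND_RUN = auto()          # unlock the device, then carry out the command
    RUN_WHILE_LOCKED = auto()        # answer the request but keep the device locked
    REQUIRE_SECONDARY_AUTH = auto()  # fall back to a passcode or other biometrics


@dataclass(frozen=True)
class Request:
    function: str              # hypothetical label, e.g. "weather" or "messages"
    needs_personal_data: bool  # does answering it touch the owner's data?


@dataclass(frozen=True)
class Settings:
    # Hypothetical preference: should a voice match fully unlock the device?
    unlock_on_voice_match: bool = False
    # Personal-data functions the owner has opted to allow while locked,
    # but only when their voice is recognized.
    locked_personal_functions: FrozenSet[str] = frozenset()


def decide(request: Request, voice_matched: bool, settings: Settings) -> Outcome:
    """Pick an access outcome for a spoken request (illustrative policy only)."""
    if voice_matched and settings.unlock_on_voice_match:
        return Outcome.UNLOCK_AND_RUN
    if not request.needs_personal_data:
        # General queries can be answered for anyone without exposing data.
        return Outcome.RUN_WHILE_LOCKED
    if voice_matched and request.function in settings.locked_personal_functions:
        # Owner recognized and has opted this function in for locked use.
        return Outcome.RUN_WHILE_LOCKED
    # Personal data requested without a sufficient voice match or opt-in:
    # ask for a passcode or another biometric instead.
    return Outcome.REQUIRE_SECONDARY_AUTH
```

Ordering the checks this way means a general question never triggers a passcode prompt, while anything touching personal data falls through to secondary authentication unless the owner's voice and opt-in both allow it.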

Apple files a large number of patent applications every week, but not all ideas make it into commercial products. Considering Apple's existing work on biometric security, including Touch ID and Face ID, an extension into voice authentication makes sense, especially in the context of Siri on the iPhone.

This patent is one of a number Apple has filed in the field, and it certainly isn't the first to surface from the company. For example, a 2011 patent application for "User Profiling for Voice Input Processing" suggests virtually the same idea as the newly-granted patent.

In August, it was revealed Apple was looking into using voice prints for a slightly different purpose: differentiating between multiple users.

Aside from Siri on iPhone, this could also be useful for Siri on HomePod, where the digital assistant can only provide verbal responses, on hardware without any visual or physical biometric security. Multi-user support has been touted for the smart speaker before, with voice recognition opening up the possibility of personalized query results or even playing an individual's private playlist.

Malcolm Owen

18 Comments

Siri needs major attention. I find it nearly useless. It sends too many inquiries to the web browser. If I wanted to do a Google search and look at a web page, I would not bother with Siri. Siri should be working on becoming more like the Computer on Star Trek. When I ask the Computer a question, I want an answer or range of answers, not a screen telling me to look at a list of Google results.

Well, if they're going to do this, then they'd better make a big deal about it being able to tell the difference between a live voice and a recording.

Otherwise we're going to see a lot of this:

Do we really need Siri? How useful has it been to most people? Is Siri the "flying cars" future solution to a problem that does not exist? Siri is no better than any other current web search engine because it depends on the same mechanics search engines use, and when it does not understand your request, get ready for some wacky results.

When will the superior, privileged brains in Silicon Valley stop chasing after "cool" stuff that falls short of actual practical use? Look at just about any operating system to understand my point: piles of cool stuff which few people know about, care about, or ever use. Siri is a failure, and Apple may have known this, but they feared being left behind in this fantasy ego race to get AI into all of our hands. Fortunately most people have hands which operate quite well, but if Apple wanted to develop Siri for those with disabilities, then I would applaud them. For now Siri is a useless failure.

I find Siri extremely helpful, with around 98% accuracy. That said, there's plenty of room for improvement, and I feel Apple should be a leader in this space. I do appreciate the amount of effort involved, as Siri is available in more languages than the other assistants.

I use Siri a lot for my smart home functions, texting, calling, weather, timers, measurement conversion, basic references, sports queries, music control and Apple TV use.

With the deployment of silicon containing Neural Engine cores (A11 chips and up), I expect Siri to gain major improvements in processing. In the near future, I don't see why Siri couldn't be processed at the device level.