Apple is looking into ways to display pre-rendered 3D video stereoscopically, a technique that could help AR glasses or a VR headset present a more believable view of a virtual object by generating a separate view for each eye.

Virtual reality and augmented reality systems have long had to grapple with presenting a convincing picture to the user through a headset. Head-mounted displays show a slightly different image to each eye, giving virtual items an illusion of depth and physicality that mimics how the eyes would see them in real life.

Game engines and VR systems typically render a scene with two virtual cameras positioned where the eyes would be, with their lines of sight converging at a point some distance in front of the user. A full 360-degree stereoscopic pre-rendered CGI scene, however, is more difficult to create. Apple claims that unlike a live VR scene generated on the fly, a 360-degree spherical video cannot account for every position the eyes could occupy, which the effect needs in order to work.
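A minimal sketch of that two-camera setup, assuming a simple "toed-in" rig: each virtual camera sits half the interaxial distance from the center of the head and turns inward so both optical axes meet at the convergence point. The function name, the axis convention and the 64 mm / 2 m values are illustrative assumptions, not figures from Apple's filing.

```python
import math
from typing import Tuple

Camera = Tuple[float, float]  # (x offset from head center in meters, yaw in radians)

def toed_in_cameras(interaxial: float, convergence: float) -> Tuple[Camera, Camera]:
    """Place left/right virtual cameras for a toed-in stereo rig.

    Cameras sit at x = -interaxial/2 and x = +interaxial/2, looking along +z.
    Positive yaw turns a camera toward +x; each camera yaws inward so both
    optical axes cross at the convergence point (0, convergence) in the x-z plane.
    """
    if interaxial <= 0 or convergence <= 0:
        raise ValueError("interaxial and convergence must be positive")
    half = interaxial / 2.0
    toe_in = math.atan2(half, convergence)  # inward rotation needed by each camera
    left = (-half, toe_in)    # left camera turns right, toward the center line
    right = (half, -toe_in)   # right camera turns left, toward the center line
    return left, right

left, right = toed_in_cameras(interaxial=0.064, convergence=2.0)
print(f"toe-in per camera: {math.degrees(left[1]):.2f} degrees")
```

Toed-in rigs are the easiest to reason about, although many engines instead keep the two cameras parallel and shift their view frustums, because toeing in adds keystone distortion toward the edges of each image.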

A spherical video file also cannot preserve the stereoscopic parallax effect when the user tilts their head so that the eyes sit at different heights, as this introduces a vertical disparity between the eyes.
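The geometry behind that vertical disparity is simple. A minimal sketch, assuming the head rolls about the forward viewing axis and using a 64 mm eye separation purely for illustration: a stereo pair baked for level eyes only encodes a horizontal offset, while rolling the head by an angle θ moves the eyes apart vertically by IPD × sin θ. The helper names are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

def eye_positions(ipd: float, roll_degrees: float) -> Tuple[Point, Point]:
    """Return (left, right) eye positions (x, y) in meters after the head rolls.

    The head rotates about the forward viewing axis; positive roll raises
    the right eye and lowers the left one.
    """
    half = ipd / 2.0
    roll = math.radians(roll_degrees)
    left = (-half * math.cos(roll), -half * math.sin(roll))
    right = (half * math.cos(roll), half * math.sin(roll))
    return left, right

def vertical_disparity(ipd: float, roll_degrees: float) -> float:
    """Height difference between the eyes, equal to ipd * sin(roll)."""
    (_, left_y), (_, right_y) = eye_positions(ipd, roll_degrees)
    return right_y - left_y

print(round(vertical_disparity(0.064, 30), 4))  # 0.032
```

With those assumed values, a 30-degree tilt opens a vertical gap of about 32 mm between the eyes, which a panorama rendered for level eyes has no way to represent.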

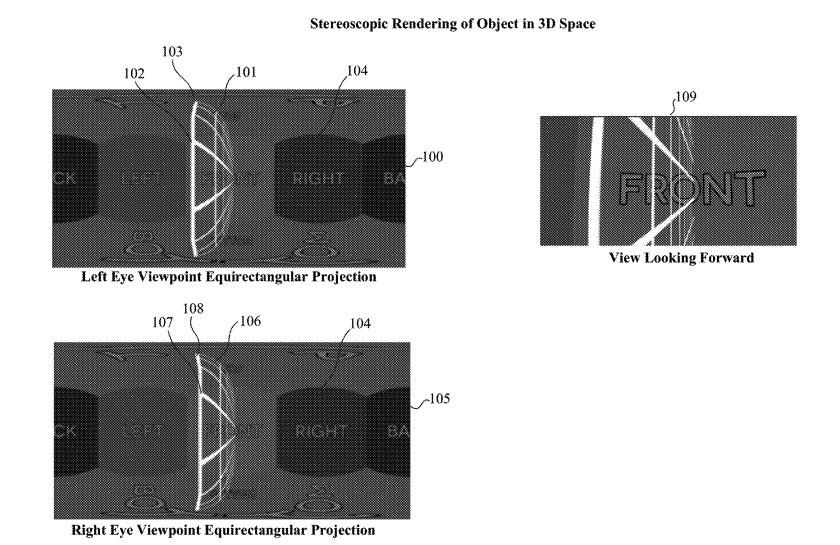

In the patent application "Stereoscopic rendering of virtual 3D objects," Apple suggests that a system should first tessellate objects in a scene to identify vertices, which are then mapped to spherical co-ordinates along with interaxial and convergence parameters, namely the distance between the eyes and the point in the distance where their lines of sight converge.
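As a rough illustration of those first two steps, the sketch below splits a triangle into four smaller ones by joining its edge midpoints (one simple tessellation scheme, not necessarily the one Apple uses) and converts a vertex into spherical co-ordinates around the camera. The axis convention (x right, y up, z forward) and the helper names are assumptions made for this example. Tessellating first matters because a polygon's straight edges turn into curves once projected onto a sphere, so smaller triangles follow that curvature more closely.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]

def midpoint(a: Vec3, b: Vec3) -> Vec3:
    """Point halfway between two vertices."""
    return tuple((p + q) / 2.0 for p, q in zip(a, b))

def subdivide(tri: Triangle) -> List[Triangle]:
    """Split one triangle into four by connecting its edge midpoints."""
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]

def to_spherical(v: Vec3) -> Tuple[float, float, float]:
    """Cartesian (x right, y up, z forward) -> (radius, azimuth, elevation) in radians."""
    x, y, z = v
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0:
        raise ValueError("a vertex at the camera origin has no direction")
    azimuth = math.atan2(x, z)    # 0 straight ahead, +pi/2 to the right
    elevation = math.asin(y / r)  # +pi/2 straight up
    return r, azimuth, elevation

triangle = ((0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (0.0, 1.0, 1.0))
for piece in subdivide(triangle):
    print([to_spherical(vertex) for vertex in piece])
```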

The spherical positions can then be mapped to an equirectangular projection. The vertex positions define the polygons that make up a 3D object's surface, and these can be transformed to occupy a portion of the projection's surface based on the user's orientation and the camera position.
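A minimal sketch of the spherical-to-equirectangular step, assuming the common convention in which azimuth spans the image width and elevation spans its height, with an optional viewer yaw subtracted so the panorama follows the user's orientation. The function and parameter names are hypothetical.

```python
import math
from typing import Tuple

TWO_PI = 2.0 * math.pi

def equirect_pixel(azimuth: float, elevation: float, width: int, height: int,
                   yaw: float = 0.0) -> Tuple[float, float]:
    """Map a direction (radians) to continuous pixel coordinates (u, v).

    azimuth:   angle around the vertical axis, 0 = straight ahead
    elevation: angle above the horizon, +pi/2 = straight up
    yaw:       viewer heading to subtract, so the image follows the user's orientation
    """
    # Shift so straight ahead lands mid-image, wrapping across the left/right seam.
    u = ((azimuth - yaw + math.pi) % TWO_PI) / TWO_PI * width
    # Straight up maps to the top row (v = 0), straight down to the bottom (v = height).
    v = (math.pi / 2.0 - elevation) / math.pi * height
    return u, v

print(equirect_pixel(0.0, 0.0, 4096, 2048))  # (2048.0, 1024.0)
```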

Transforming the 3D positions can involve rotating the vertices based on the interaxial distance between the eyes, as well as on the convergence. Finally, the projection is rendered into a stereoscopic 360-degree image based on each camera's sphere-based projection.
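One well-known way to realize that kind of per-vertex rotation is omni-directional stereo (ODS) projection, sketched below as an assumption; it is not necessarily Apple's exact method. Each eye is treated as sitting on a circle of radius interaxial/2, and a vertex at horizontal distance d is rotated about the vertical axis by asin((interaxial/2)/d), one way for the left eye and the other way for the right. Subtracting the same term evaluated at a hypothetical convergence distance gives vertices at that distance zero parallax. Each rotated vertex can then be projected from the rig center into that eye's equirectangular image.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

def ods_angle(distance: float, interaxial: float,
              convergence: Optional[float] = None) -> float:
    """Yaw (radians) added to a vertex's azimuth for the left eye; the right eye uses -angle.

    distance:    horizontal distance of the vertex from the rig center
    interaxial:  eye separation; each eye sits on a circle of radius interaxial / 2
    convergence: hypothetical zero-parallax distance; None converges at infinity
    """
    r = interaxial / 2.0
    if distance <= r:
        raise ValueError("vertex lies inside the viewing circle")
    angle = math.asin(r / distance)
    if convergence is not None:
        if convergence <= r:
            raise ValueError("convergence must lie outside the viewing circle")
        # Vertices exactly at the convergence distance end up with zero offset.
        angle -= math.asin(r / convergence)
    return angle

def rotate_about_vertical(v: Vec3, angle: float) -> Vec3:
    """Rotate (x, y, z) about the y (up) axis so atan2(x, z) increases by angle."""
    x, y, z = v
    c, s = math.cos(angle), math.sin(angle)
    return (x * c + z * s, y, z * c - x * s)

def stereo_vertices(v: Vec3, interaxial: float,
                    convergence: Optional[float] = None) -> Tuple[Vec3, Vec3]:
    """Return (left, right) rotated copies of a vertex, ready to project from the center."""
    distance = math.hypot(v[0], v[2])
    angle = ods_angle(distance, interaxial, convergence)
    return rotate_about_vertical(v, angle), rotate_about_vertical(v, -angle)

left, right = stereo_vertices((0.0, 0.0, 2.0), interaxial=0.064)
print(math.degrees(math.atan2(left[0], left[2])),
      math.degrees(math.atan2(right[0], right[2])))
```

Rotating vertices rather than moving a camera keeps the projection itself fixed at the rig center, so a single spherical mapping can serve both eyes. The trade-off is that vertices closer than interaxial/2 cannot be placed, which is why the sketch rejects them.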

The result is two equirectangular representations, one for each of the left and right viewpoints.

Apple files numerous patent applications with the US Patent and Trademark Office every week. While the existence of a filing does not guarantee the idea will appear in a future Apple product or service, it does show areas of interest for the iPhone maker's research and development efforts.

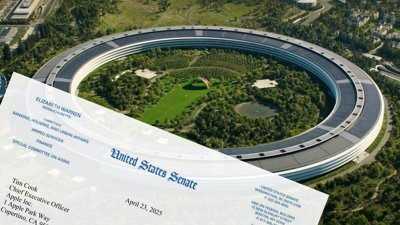

The push for new and improved ways to render content is likely tied to the long-rumored AR glasses that Apple is thought to be developing. The company has been advancing its ARKit platform for iOS devices, and it is thought that the work could eventually result in a headset of some description, possibly introduced as early as 2020.

Apple also has numerous patents in the field of headset design and usage, covering everything from glasses that can hold an iPhone and eye-tracking systems to thermal regulation and fitting the headset snugly on the user's head. On the software side, Apple has considered using AR for ride-hailing services and for displaying points of interest in the world to the user.

More directly connected to the latest patent application, an August 2018 filing for "Processing of Equirectangular Object Data to Compensate for Distortion by Spherical Projections" detailed how to prevent distortion issues when stitching multiple videos together, such as footage from multi-camera recording rigs.

Malcolm Owen
