Rumors have circulated about an Apple-produced augmented reality headset or smart glasses for quite a few years, but so far the iPhone producer has yet to officially confirm the hardware is in development. The additions made to ARKit in iOS 13 as well as a plethora of patents and other reports indicate Apple is closer to revealing its work than ever before.

During the WWDC 2019 keynote, Apple spent time revealing some of the newest features it is bringing to ARKit 3, the company's framework that allows developers to add augmented reality functionality to their apps. The additions arriving as part of iOS 13 will bring Apple's AR efforts to a new level, closer to the ideal form of the technology that users anticipate.

For the moment, however, AR in the Apple ecosystem continues to be limited to iPhones and iPads, which are less than ideal when it comes to the user experience. A version of AR using a headset, giving the effect without requiring people to hold up and look through a limited window on a mobile device, could be the perfected form of the technology, and Apple continues to edge closer to creating it with every one of its improvements to the platform.

ARKit 3 in iOS 13

The keynote highlighted some elements that have so far been missing from the AR experience made possible via earlier ARKit versions. ARKit 3 makes many changes to make AR more immersive and to enable developers to offer even more functionality in their apps.

A key component of ARKit 3 is people occlusion, where the iOS device detects that a person is standing in the middle of an AR-enhanced scene. Rather than flatly covering the camera view with the digital objects, ARKit 3 can estimate how far the person is from the user and, if they are closer than a digital object theoretically would be, alter the overlay so the object appears to be behind the person.
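The depth comparison behind people occlusion can be illustrated with a minimal sketch. This is not ARKit code or Apple's implementation; the function names, the use of `None` for "no person" or "no virtual content", and the single fixed depth for the virtual object are all assumptions made for illustration.

```python
def composite_pixel(camera_px, virtual_px, person_depth_m, virtual_depth_m):
    """Pick what to show for one pixel (hypothetical helper, not an ARKit API).

    camera_px       -- the pixel from the live camera feed
    virtual_px      -- the rendered virtual pixel, or None if nothing is drawn here
    person_depth_m  -- estimated distance to a detected person, or None if no person
    virtual_depth_m -- distance at which the virtual object is placed
    """
    if virtual_px is None:
        return camera_px  # no virtual content at this pixel
    if person_depth_m is not None and person_depth_m < virtual_depth_m:
        return camera_px  # the person is nearer, so they hide the object
    return virtual_px     # the object is nearer, or there is no person


def composite_row(camera_row, virtual_row, person_depths, virtual_depth_m):
    """Apply the per-pixel rule across a row of pixels."""
    return [
        composite_pixel(c, v, d, virtual_depth_m)
        for c, v, d in zip(camera_row, virtual_row, person_depths)
    ]
```

In this sketch a person standing 1 meter away would cover a virtual object placed 2 meters away, while pixels with no detected person show the object as usual.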

The technique also enables the possibility of green screen-style effects, where a subject viewed through an app could appear to walk through a fully digital environment, without needing a chroma key background.

Apple also added motion capture capabilities, where the movements of a subject could be analyzed and interpreted in real time in the app. Dividing the subject down to a simple skeleton, joints and bones can be determined and monitored for changes, with those movements able to trigger animations or to be recorded for custom movements of characters.

The tracking changes also impact ARKit Face Tracking, which already takes advantage of the TrueDepth camera on modern iPhone and iPad Pro models to analyze the user's face, such as for Memoji and Snapchat. In ARKit 3, the face tracking has been expanded to allow it to work with up to three faces at the same time.

Another key component is the ability for users to collaborate with each other within the same AR environment. By letting people see the same items and environment in a single session, this can enable multiplayer games and collaborative work to take place in AR between multiple people, enabling a social element to AR experiences.

Other changes introduced in ARKit 3 include the ability to track the user's face and the world using the front and rear cameras at the same time, detection of up to 100 images at once, automatic estimates of physical image sizes, more robust 3D object detection, and improved plane detection via machine learning.

The ongoing work by Apple

Aside from ARKit announcements, patents are a second source of evidence for Apple's AR glasses work.

While not a guarantee Apple intends to use the ideas it files with the US Patent and Trademark Office in future products and services, the filings do at least indicate previous areas of interest for Apple's research and development efforts.

A key element of the AR headset is the display, as fast response times are essential for creating a perfect illusion of combined virtual and real scenery. Patent applications from December 2016 and January 2017 titled "Displays with Multiple Scanning Modes" describe how a display's circuitry could update only partial sections of a display for each refresh rather than the entire display, in order to minimize the possibility of artifacts appearing in high-resolution and high refresh rate screens.

Updating only part of a screen also offers less work for a display to perform each refresh, allowing for shorter refresh periods and a faster overall screen update rate. Faster updates may also help cut down on motion sickness in some users, where the display doesn't update fast enough to match physical movements of a user's head.
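The link between partial updates and faster refresh can be shown with some back-of-the-envelope arithmetic. The sketch below assumes a simple model where refresh time scales linearly with the number of rows rewritten; the function names and the 4-microsecond row time are illustrative assumptions, not figures from the patent applications.

```python
def refresh_time_ms(rows_updated, row_time_us):
    """Time to rewrite the given number of rows, in milliseconds (assumed linear model)."""
    return rows_updated * row_time_us / 1000.0


def max_refresh_rate_hz(rows_updated, row_time_us):
    """Upper bound on refreshes per second if each refresh rewrites rows_updated rows."""
    return 1_000_000.0 / (rows_updated * row_time_us)


# Illustrative numbers only: a 2,000-row panel at 4 microseconds per row.
full_update = max_refresh_rate_hz(2000, 4.0)     # every row rewritten each refresh
partial_update = max_refresh_rate_hz(500, 4.0)   # only a quarter of the rows rewritten
```

Under these assumed numbers, rewriting a quarter of the rows cuts the refresh period to a quarter and raises the achievable update rate fourfold.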

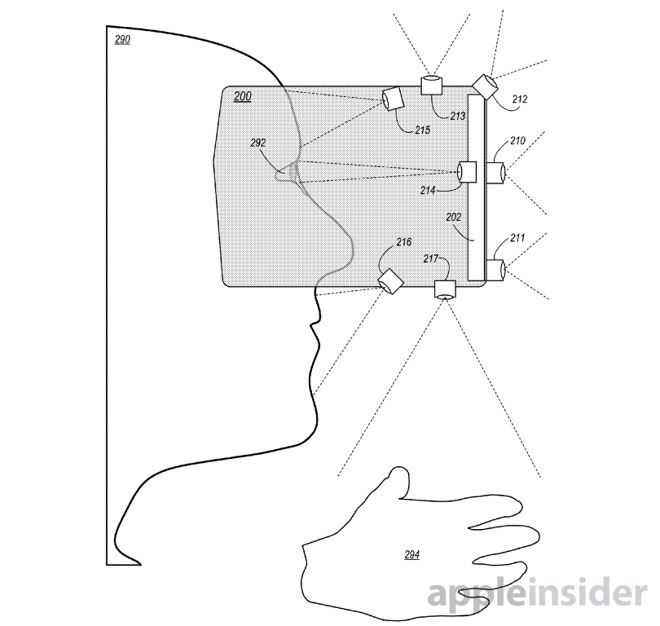

The ability to render the world at high speed can also help prevent nausea and motion sickness, as suggested in the 2017 filing for a "Predictive, Foveated Virtual Reality System." Consisting of a headset with a multiple-camera setup, the hardware would take pictures of the world at low and high resolutions, with the higher-resolution sections shown where the user's eyes are looking on the display, while the lower-resolution imagery fills the periphery, speeding up processing of the scene.
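A rough pixel-budget calculation shows why foveation speeds up processing. This is a simplified illustration rather than the method described in the filing; the function name, the 10 percent foveal area, and the quarter-resolution periphery are assumptions chosen for the example.

```python
def foveated_pixel_count(width, height, fovea_fraction, periphery_scale):
    """Approximate pixels processed per frame with foveation (hypothetical model).

    fovea_fraction  -- share of the display area shown at full resolution (0 to 1)
    periphery_scale -- linear resolution scale for the rest (0.25 = quarter resolution)
    """
    full = width * height
    fovea = full * fovea_fraction
    # Scaling both dimensions by periphery_scale cuts the pixel count by its square.
    periphery = full * (1.0 - fovea_fraction) * periphery_scale ** 2
    return fovea + periphery


# Illustrative numbers only: a 4,000 x 4,000 pixel eyepiece.
full_pixels = 4000 * 4000
foveated_pixels = foveated_pixel_count(4000, 4000, 0.1, 0.25)
savings_ratio = foveated_pixels / full_pixels
```

With these assumed values the headset would process roughly 2.5 million pixels per eye instead of 16 million, a bit under one sixth of the full-resolution workload.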

A 2016 filing, "Display System Having World and User Sensors," describes using cameras outside and inside the headset to track the environment and the user's eyes, expressions, and hand gestures, enabling a form of augmented reality image to be created and interacted with.

Eye tracking has also featured heavily in patent filings, including one from May 2019 for determining ocular dominance, and others from 2018 suggesting ways to actually perform eye tracking as close to the user's head as possible. By bringing the tracking equipment nearer the face and using items like a "hot mirror" to reflect infrared light, there is less weight positioned further away from the user's head, resulting in a more comfortable user experience.

On a more practical level, Apple also explored in 2019 how AR glasses could fit perfectly using various adjustment systems, including motor-cinched bands and inflatable sections to prevent the headset from moving once worn. Thermal regulation was also determined to be an important element, cooling not only the hardware inside the headset, but also the user's face.

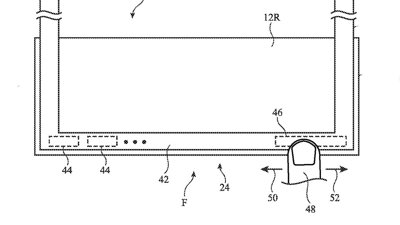

Apple has also considered how to create a form of smart glasses that, instead of using a separate display, simply places the user's iPhone in front of their eyes. A more advanced form of the simple smartphone-based VR headset enclosures, Apple's 2017 proposal for a "Head-mounted Display Apparatus for Retaining a Portable Electronic Device with Display" slides an iPhone in from one side and includes a camera, external buttons, earphones built into the arms, and a remote control.

For interaction, Apple has spent time working out how to use force-sensing gloves with VR and AR systems. By using conductive strands and embedded sensors, gloves and other garments could provide force-sensing capabilities that could trigger commands, read gestures, and even count the number of fingers in contact with a physical object.

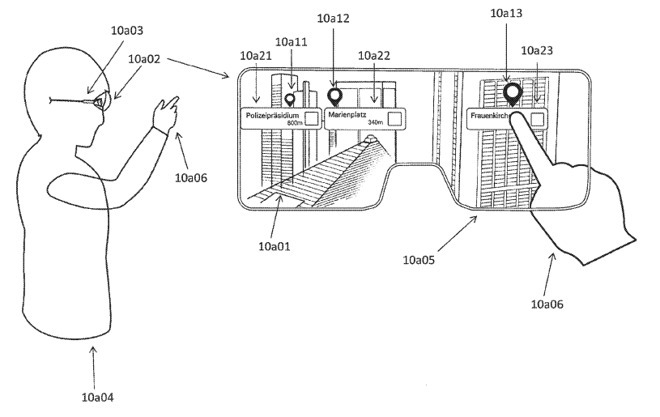

On the application side, Apple's patent filings suggest AR could be used for ride-hailing services, with smart glasses allowing customers to find their ride and vice versa. For navigation, one 2019 patent describes how points of interest could be displayed in a real-world view via AR, an approach that could also be extended to show the position of items in a room, rather than notable geographic features.

Reports and Speculation

The idea of Apple creating some sort of smart glasses or AR headset has circulated for some time, and could result in the release of hardware in the next few years. Loup Ventures analyst Gene Munster suggested in 2018 that Apple could release "Apple Glasses" in late 2021, possibly selling as many as 10 million units in the first year of release at a cost of around $1,300 per unit.

Ming-Chi Kuo, another long-term Apple commentator, has more recently proposed the headset could make an appearance midway through 2020, with manufacturing plausibly commencing at the end of 2019.

As for how the supposed glasses could appear, Kuo suggests they could act more as a display with the iPhone performing the bulk of the processing. WiGig, a 60-gigahertz wireless networking system that could provide cableless connectivity, has also been tipped for the device, as well as 8K-resolution eyepieces, and the supposed codename "T288."

Headset development surfaced in a leaked safety report in 2017, which noted that "medical treatment beyond first aid" was required for a person testing a prototype device at one of Apple's offices. Specifically, the injury related to eye pain, suggesting the person was testing something vision-related, potentially a headset of some form.

Apple has also reportedly spoken to technology suppliers about acquiring components for AR headsets at CES in 2019, with incognito Apple employees visiting stands of suppliers of waveguide hardware.

There have also been acquisitions of companies that are closely related to AR and headset production. In 2017 Apple picked up eye tracking firm SensoMotoric Instruments, while in August 2018 it bought Akonia Holographics, a startup focused on the development of specialized lenses for AR headsets.

Malcolm Owen

9 Comments

A lot of VR related patents described in an article ostensibly about AR.

Between Munster's and Ming-Chi Kuo's predictions on what Apple will deliver, I'd put my money on the latter. Munster doesn't appear to understand the technology and its limitations if he thinks Apple will release $1,300 glasses which presumably will cost that much because they'll be chock-full of tech. But there's no room for a lot of technology in glasses that are to be worn all day. I think that was one of the traps Google Glass fell into: it tried to fit a complete system into the temples & hinge of the glasses. The resulting glasses were still too heavy - and its batteries only lasted for a couple of hours at most.

Kuo, like me, seems to think that most of the processing will happen on the phone (where ARKit and ARKit-enabled apps already reside). The glasses themselves will simply replicate the sensors/camera the iPhone already uses for AR, provide a display for AR images sent to it by the phone, and use minimal CPU, memory, and networking (e.g. Apple's own W2 chip). It should then be possible for small batteries to power these AR glasses all day.

I am super excited about this!!

By 2021, gamers subscribing to Arcade likely will be among first movers to AR glasses. Cook and crew in either world seen as visionary.

Everyone wearing such glasses will be able to live in what appears to be a beautiful, well-kept mansion (even if they actually live in a cockroach infested dump).