The new Mac Pro is a long-anticipated development for Apple's high-end pro users, but it sure looks as if the company also created the machine for its own strategic benefit: specifically, to help make its Metal API a dominant standard for GPU-intensive software. That could have big implications for Macs, iOS devices, and Apple GPUs going forward, and history offers some insight into why this matters.

Over the last decade, GPU maker Nvidia has established its CUDA platform as the most popular way to build intensive compute workflows in parallel computing, 3D and CGI rendering, and machine learning; the company has claimed an 80% share of the inference processing market. CUDA is also widely adopted in video game engines and in professional film, music, and image editing software. This strategy has paid off because running CUDA software requires an Nvidia GPU.

That raises the question of how Apple can expect to launch its new Mac Pro into the professional space without any apparent support for Nvidia's latest GPUs. It's like Apple launching the original Macintosh without support for DOS software, the iPod without any way to play back Windows Media files, the iPhone without Java, or the iPad without Adobe Flash. It seems boldly insane to pundits, but it's also exactly the kind of high-stakes gamble that has regularly worked out well for Apple and its customer base.

Bear with me through a brief history of how we got to the current situation in GPU-accelerated computing tasks, and take a look at where the industry is headed as we begin a new decade of technology in the 2020s.

The software of GPUs

Long ago, the first Macs of the 1980s handled graphics operations in software running on their CPU. While Apple has long been considered a hardware company, the Mac's truly differentiating features (its graphical user environment powered by QuickDraw, and later its pioneering video capabilities from QuickTime) were the result of the company's sophisticated software.

That software was so good, and its role so valuable and important, that Microsoft and Intel worked to steal Apple's code representing proprietary video acceleration techniques to deliver a functional Video for Windows in the early 1990s. That launched a dispute that wasn't resolved until 1997, when Microsoft publicly agreed to invest in Apple and continue writing Office for the Mac.

Despite that deal, and Apple's first-mover position in bringing Xerox PARC's research-grade graphical computing to mainstream audiences, Apple overwhelmingly lost its fight to stop Microsoft from appropriating its work across the 1990s. Apple was also unable to keep up in the development of high-end, state-of-the-art graphics, in part because its executives had pushed out Steve Jobs, who then took a who's who of Apple's talent with him in 1986 to target the high end of graphical computing at NeXT, Inc.

The graphical computing market itself was also changing rapidly, as graphics hardware accelerators quickly escalated in sophistication. Two companies founded in 1985, ATI and VideoLogic, began selling specialized video hardware, initially for other PC makers and later directly to consumers, to enhance graphics performance and capabilities.

By 1991 ATI was selling dedicated GPU cards that worked independently from the CPU. By enhancing the gameplay of titles like 1993's "Doom," ATI's dedicated video hardware began driving a huge business that attracted new competition. In 1993, CPU designers from AMD and Sun founded Nvidia, and the following year former employees of SGI created 3dfx.

Apple was not only competing against NeXT and Microsoft as a graphical desktop computing platform; the Macintosh was also being outgunned in pro markets, where high-end UNIX workstation vendors including Silicon Graphics and Sun were quickly shifting to far more powerful new RISC CPUs while also developing their own specialized, high-end hardware to drive the graphics supercomputers used to render CGI and perform simulations and research.

Graphics capabilities were exploding while component prices were rapidly falling. In 1991, Apple replaced its ultrafast, high-end Mac IIfx, barely a year old and with a starting price well above $17,000 in today's money, with the much faster and more powerful Quadra 900, priced closer to a current-day $13,000. Apple was also publicly working to introduce PowerPC, a new RISC CPU that would be even faster. Imagine being a graphics pro at the time, trying to survive while hardware values were collapsing into obsolescence so quickly.

On the high end, SGI was selling its $250,000 Onyx workstations outfitted with the company's RealityEngine graphics architecture, composed of a series of boards containing a dozen RISC CPUs and scores of huge custom ASICs. All this hardware was required to perform graphics operations that today appear comical (below).

The work to design and build integrated graphics hardware and software was incredibly expensive. It was also being performed in parallel by several companies competing over relatively small pockets of demand. The industry was begging for some sort of collaboration.

SGI adapted its RealityEngine graphics architecture into a single chip GPU and began licensing its internal Graphics Language to others as OpenGL, a common platform intended to make it easier to write code that could run across the graphics hardware on different computing systems. This helped create a competitive market for GPUs, specialized processors optimized to perform simple, parallel tasks at high speeds, which makes them ideal for handling the repetitive pixel operations required in 2D and 3D graphics. SGI's OpenGL was given to an Architecture Review Board in 1992, with members eventually including Apple, ATI, Dell, IBM, Intel, Microsoft, Nvidia, SGI, Sun and 3dfx.

While OpenGL was aimed at high-end workstation graphics, Apple delivered its own, simplified QuickDraw 3D API for 3D graphics on a Mac in 1995. Microsoft similarly acquired RenderMorphics in early 1995 and shipped it as Direct3D, aimed at making DOS PC gaming dependent upon Windows 95. Mac sales were low enough that QD3D hardly mattered, but Microsoft's Direct3D began challenging OpenGL's objective of having an open graphics library that worked across vendors.

Contention between OpenGL partners and Microsoft resulted in the 1997 Fahrenheit project to merge the two, to make OpenGL work on Windows NT workstations, similar to how IBM was working with Microsoft to merge its OS/2 platform with Windows. But Microsoft backed out of both and focused on Direct3D and Windows NT in a bid to tie all graphics-intensive software exclusively to its Windows platform, leaving OpenGL and OS/2 for dead.

That occurred just as Apple was acquiring NeXT. When the returning Steve Jobs reviewed Apple's assets and prospects in 1998, he determined that Apple should drop QD3D and adopt OpenGL, creating significant new support for the open API and allowing Apple to focus its efforts on other technologies while benefitting from the existing work invested in OpenGL and its broad support across existing GPU architectures. Rather than reinventing OpenGL, Apple developed a sophisticated new display server and graphics compositor for its next-generation Macs.

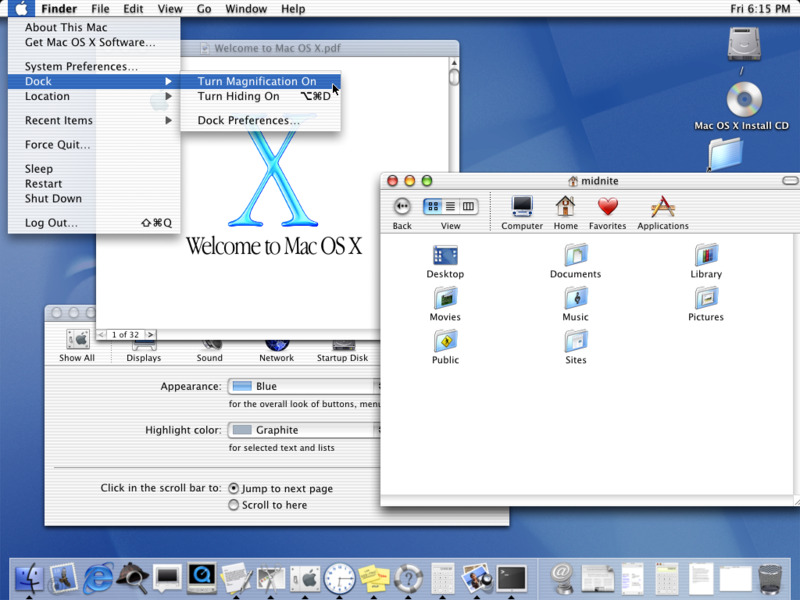

Mac OS X shipped in 2001 with that new Quartz Compositor software drawing its windows using techniques pioneered in video games, resulting in a fluid new Aqua appearance (above) with slick animations, live video transforms, shadows, and alpha translucency that made it clearly distinct from the flat, simple bitmapped graphics still used by Windows XP.

In 2005, as Microsoft struggled to catch up with its own compositing engine in Vista, Apple released Quartz 2D Extreme, which took another page from video games to hardware-accelerate its graphics using OpenGL commands that could run directly on a GPU.

When Apple released the iPhone in 2007, its buttery smooth animation was the direct result of Apple's extensive work in leveraging a GPU to deliver a satisfying graphical experience with responsive multitouch input. Years later, Google was still struggling to make its jerky, stuttering Android as smooth and graphically responsive as iOS, a task complicated in part because its cheapskate partners didn't want to spend much money on GPU hardware, which Apple had made a priority.

Understanding and appreciating graphics was key to Apple's successful launch of the Mac in the 1980s, and it fueled Apple's rise from the ashes in the 2000s in a world utterly dominated by Microsoft. It then set the stage for the most successful consumer electronics product launch ever, and later helped prevent Google and the rest of the consumer electronics industry from collectively taking away Apple's iOS crown and the trillion-dollar business it conferred. Graphics are a really big deal.

Making the GPU do more than graphics

Adopting OpenGL twenty years ago enabled Apple to develop higher-level graphics software that could run on any major GPU. Apple subsequently used GPUs from ATI/AMD, Intel, and Nvidia on the Mac, and Imagination Technologies' PowerVR GPUs in its mobile devices.

In 2007, Nvidia released CUDA to make it easier to run general-purpose computations on GPUs, a practice known as GPGPU. GPUs are explicitly designed for graphics operations but have characteristics that also make them ideal for plowing through huge sets of non-pixel data. CUDA was intended to make GPGPU easier for researchers and developers who weren't already experts in low-level graphics but could benefit from delegating their parallel computing tasks to a GPU, where those tasks could run faster than on a CPU. Nvidia was, of course, seeking to expand the addressable market for its GPU hardware.
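To make the GPGPU idea concrete, here is a minimal Python sketch of the data-parallel model that CUDA popularized: a small "kernel" is written for a single element, and a dispatcher runs it once per index. The operation is SAXPY (a * x + y), a classic linear-algebra routine. The names `saxpy_kernel` and `dispatch` are hypothetical illustrations, not CUDA or Metal APIs, and this loop runs sequentially; on a GPU, each index would be handled by its own hardware thread.

```python
from typing import Callable, List


def saxpy_kernel(i: int, a: float, x: List[float], y: List[float],
                 out: List[float]) -> None:
    """Work for one 'thread': compute a single element of a * x + y."""
    out[i] = a * x[i] + y[i]


def dispatch(kernel: Callable[..., None], n: int, *args) -> None:
    """Run the kernel once per index.

    Each invocation touches only its own element, so a GPU could run
    them all at once; this sketch simply runs them in order.
    """
    for i in range(n):
        kernel(i, *args)


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)
dispatch(saxpy_kernel, len(x), 2.0, x, y, out)
print(out)  # [12.0, 24.0, 36.0, 48.0]
```

The key property is that no invocation depends on another's result, which is exactly what lets a GPU's many simple cores outrun a CPU on large arrays.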

AMD launched its own similarly proprietary "Close To Metal" project for its GPUs, kicking off a GPGPU platform struggle similar to what had occurred before in graphics. In 2009, Apple released OpenCL, an open API for GPGPU that wasn't tied to a specific GPU architecture, and gave it to the Khronos Group, the standards body that maintains OpenGL. AMD abandoned CTM to adopt OpenCL, but Nvidia continued to pursue CUDA. Like Microsoft a decade earlier, Nvidia realized that getting software developers to write and optimize code for its own proprietary GPU API would cultivate an exclusive market for its platform, in this case, Nvidia's GPU hardware.

That same year, Apple and Nvidia also had a larger falling out related to the defective Nvidia GPUs in MacBook Pros that resulted in extra support costs and bad optics for Apple. There was also an IP dispute between Intel and Nvidia that had forced Apple to change the architecture of its 2008 MacBooks.

Nvidia may also have held a grudge over having acquired PortalPlayer in 2006 as part of a strategy that was expected to make Nvidia a chip partner in Apple's increasingly successful mobile efforts. That strategy involved Nvidia's Tegra mobile chip, which Apple ultimately never used. Tegra chips were subsequently hyped into the stratosphere by fans of Microsoft's Zune and later Surface RT, and then by Android fans who were enamored with Nvidia's marketing boasts about Tegra's supposedly amazing mobile GPU. The chip never caught up with Apple's own mobile SoCs, in part because the vendors who used Tegra couldn't sell their hardware.

The "quiet hostility" between the once-close partners resulted in no new use of Nvidia GPUs in Macs. That included the new architecture of the 2013 Mac Pro, which only offered AMD graphics and had no PCIe slots for end users to install their own cards.

At the time it also looked possible that Apple might be losing any interest in the shrinking market for "pro" Mac hardware entirely. That same year, Apple had surprised the market with iPhone 5s and the world's first 64-bit mobile chip, its custom-designed A7 with a PowerVR GPU, which took the wind out of the sails of Nvidia's new, but still 32-bit, Tegra 4 and effectively destroyed the remains of Nvidia's mobile armada.

In 2013, AMD also launched Mantle, its new low-level graphics API targeted at video gaming, competing with OpenGL and Direct3D and once again setting off a platform struggle similar to those that had occurred before in graphics and GPGPU.

Apple debuts Metal

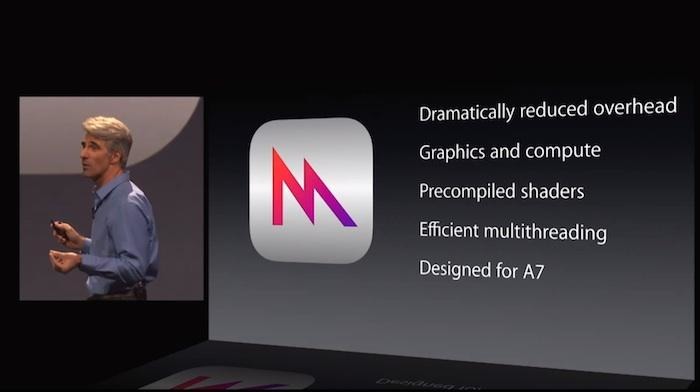

The next year, Apple surprised again with the release of Metal, a new graphics API specifically optimized to run "close to the metal" of the GPU in its new A7 and subsequent Apple-designed SoCs. At WWDC14, Apple GPU software engineer Jeremy Sandmel told developers, "we're incredibly excited" and said of Metal, "we believe it's literally going to be a game-changer for you, your applications and for iOS."

Metal was designed to strip away much of the overhead imposed by the cross-platform nature of OpenGL, particularly around the "state vector": all of the details associated with each draw call that the CPU prepares and hands to the GPU. Under OpenGL, this process involves a lot of expensive "bureaucracy." Because Metal is optimized to run specifically on Apple's GPUs, it doesn't have to deal with all of the differences between competing GPU architectures. At the same time, Apple was also free to add support for new GPU features without the external dependencies of working within a standards body.
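One way to picture that "bureaucracy" is a toy cost model. With mutable global state, a driver can't assume nothing has changed since the last draw, so in this simplified model it re-checks the state on every call; a Metal-style API instead validates an immutable pipeline object once, up front (Metal's real counterpart is `MTLRenderPipelineState`). Everything below, including the field names and the one-unit-per-field cost, is a hypothetical Python simplification, not how any actual driver is written.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical render state; real drivers track far more than four fields.
REQUIRED_FIELDS = ("shader", "blend_mode", "depth_test", "vertex_layout")


def validate(state: Dict[str, str]) -> int:
    """Toy driver check. Returns how many fields it had to inspect."""
    for field in REQUIRED_FIELDS:
        if field not in state:
            raise ValueError(f"missing render state: {field}")
    return len(REQUIRED_FIELDS)


def draw_with_mutable_state(draws: int, state: Dict[str, str]) -> int:
    """Mutable state: the toy driver re-validates on every draw call."""
    work = 0
    for _ in range(draws):
        work += validate(state)
    return work


@dataclass(frozen=True)
class PipelineState:
    """Immutable, pre-validated bundle of render state (toy stand-in)."""
    fields: Tuple[Tuple[str, str], ...]


def build_pipeline(state: Dict[str, str]) -> Tuple[PipelineState, int]:
    work = validate(state)  # validated once, when the object is built
    return PipelineState(tuple(sorted(state.items()))), work


def draw_with_pipeline(draws: int, state: Dict[str, str]) -> int:
    pipeline, work = build_pipeline(state)
    for _ in range(draws):
        pass  # a real draw call would just reference `pipeline` here
    return work


state = {"shader": "lit", "blend_mode": "opaque",
         "depth_test": "less", "vertex_layout": "pos_normal_uv"}
print(draw_with_mutable_state(1000, state))  # 4000
print(draw_with_pipeline(1000, state))       # 4
```

Making the bundle immutable is the design choice that matters: once it can't change, checking it once is enough, and the per-draw CPU cost drops to almost nothing.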

Apple also introduced its Metal Shading Language to write "shaders," the specialized programs designed to run rapidly on a GPU. Initially, a "GPU shader" was code that described how to apply the smooth shades of color needed to create realistic surfaces on a 3D model. In today's more general terms, a shader can be any sort of image or video processing, from calculating the geometries of an animated 3D model, to rendering individual pixels of a scene, or creating a motion blur effect on a frame of video. Shaders can also be used to package GPGPU computational tasks for rapid execution on the GPU. This allows Metal to replace both OpenGL and OpenCL, spanning graphics and GPGPU.
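To show what a shader does, here is the classic Lambertian diffuse-lighting calculation written as a per-pixel function: brightness depends on the angle between the surface normal and the light direction. Real shaders would be written in the Metal Shading Language (or GLSL or HLSL) and executed on the GPU; this plain-Python sketch, with hypothetical function names, mimics only the structure of one small function evaluated independently for every pixel.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def lambert_fragment(normal: Vec3, light_dir: Vec3, albedo: float) -> float:
    """Per-pixel 'shader': surfaces facing away from the light get zero."""
    return albedo * max(0.0, dot(normalize(normal), normalize(light_dir)))


def shade_image(normals: List[List[Vec3]], light_dir: Vec3,
                albedo: float) -> List[List[float]]:
    # The same small function runs independently for every pixel,
    # which is why this kind of work maps so well onto a GPU.
    return [[lambert_fragment(n, light_dir, albedo) for n in row]
            for row in normals]


normals = [[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)],
           [(0.0, 0.0, -1.0), (1.0, 0.0, 1.0)]]
img = shade_image(normals, light_dir=(0.0, 0.0, 1.0), albedo=0.8)
print(img)
```

A pixel whose surface faces the light head-on gets the full albedo, one tilted 45 degrees gets about 71% of it, and one facing sideways or away goes dark.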

OpenGL compiles shader source code on the CPU into GPU machine code at runtime, before the shader can execute. This enables it to support a wide variety of GPUs, each of which needs the required shaders compiled specifically for its chip architecture. Because Metal only runs on the GPUs that Apple uses, it can identify work that can be precompiled in advance so that it executes without delay at runtime. That results in both a huge jump in GPU utilization and a reduction in CPU overhead, freeing up the system to do other things.
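The benefit of moving compilation out of the rendering loop can be modeled with a toy cache. This Python sketch, using hypothetical names such as `ShaderCache` and `family-a`, counts how many expensive compiles land in the middle of drawing frames when shaders are compiled on first use versus ahead of time. It is a simplification: in practice Xcode compiles Metal shader source into a library at build time, and the system may still perform a cheaper device-specific step later.

```python
from typing import Dict, List, Tuple


class ShaderCache:
    """Toy cache of compiled 'binaries', keyed by (shader, GPU family)."""

    def __init__(self) -> None:
        self.binaries: Dict[Tuple[str, str], str] = {}
        self.compile_count = 0

    def get(self, shader: str, gpu_family: str) -> str:
        key = (shader, gpu_family)
        if key not in self.binaries:
            self.compile_count += 1  # stands in for the expensive compile
            self.binaries[key] = f"<{gpu_family} code for {shader}>"
        return self.binaries[key]


def compiles_during_frames(cache: ShaderCache, shaders: List[str],
                           gpu_family: str, frames: int) -> int:
    """Count compiles that happen while frames are being drawn."""
    before = cache.compile_count
    for _ in range(frames):
        for shader in shaders:
            cache.get(shader, gpu_family)
    return cache.compile_count - before


shaders = ["lighting", "shadow", "blur"]

# Compile on first use: the first frame pays for every shader.
lazy = ShaderCache()
lazy_stalls = compiles_during_frames(lazy, shaders, "family-a", frames=60)

# Compile ahead of time (at build or load), so no frame has to wait.
eager = ShaderCache()
for shader in shaders:
    eager.get(shader, "family-a")
eager_stalls = compiles_during_frames(eager, shaders, "family-a", frames=60)

print(lazy_stalls, eager_stalls)  # 3 0
```

The cache key includes the GPU family because each architecture needs its own machine code; a platform that only has to target a small, known set of families is the case where compiling everything ahead of time is practical.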

In 2015 Apple released Metal for macOS, with support for Mac GPUs dating back to 2012: Intel HD and Iris GPUs; AMD Graphics Core Next GPUs; and the Nvidia Kepler GPUs that Apple had used in its Macs. Last year's macOS Mojave and the current release of Catalina now require a Metal-supported GPU. This more directly positioned Metal as Apple's officially sanctioned way to build video games, with the company leveraging its substantial weight in iOS to influence development decisions on the Mac.

Just two years after crushing Nvidia's Tegra mobile aspirations, Apple was now dropping Metal into its Mac platform where Nvidia still had some relevance. That same year Apple also recruited away Nvidia's director of deep learning software Jonathan Cohen, who remained at Apple for nearly three years before returning to Nvidia.

AMD effectively scuttled its internal Mantle development the same year, donating it to the Khronos Group, OpenGL's standards body, as the basis for Vulkan starting in 2015. Vulkan offers a modern graphics API similar in approach to Apple's Metal and Microsoft's Direct3D 12, giving former OpenGL users a path forward on Android, Linux, and Tizen.

In 2017 Apple introduced Metal 2, a further expansion into Nvidia's CUDA territory, with a new shader debugger and GPU dependency viewer for more efficient profiling and debugging in Xcode; support for accelerating computationally intensive machine learning tasks such as training neural networks; lower CPU workloads via GPU-driven pipelines, where the GPU can construct its own rendering commands and schedule them with little to no CPU interaction; and specialized support for virtual reality rendering and external GPUs.

This year, Apple incrementally advanced new Metal 2 features in macOS Catalina in anticipation of its new hardware, enabling professional content-creation apps to use Metal Peer Groups to rapidly share data between multiple GPUs in the new Mac Pro without transferring it through main memory. Metal Layer API enhancements also provide access to the High Dynamic Range capabilities of the Mac Pro's new Pro Display XDR.
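Why skipping main memory matters can be shown with a bookkeeping sketch. Without a direct link, data moving from one GPU to another is first copied out to system memory and then copied back in, crossing the PCIe bus twice; GPUs in the same peer group can copy directly over their shared link. This Python model is a hypothetical simplification that only counts copies and bytes; it does not use Metal's API (Metal reports peer-group membership through `MTLDevice` properties such as `peerGroupID`), and the frame size is an assumed example payload.

```python
from typing import Dict


def via_system_memory(nbytes: int) -> Dict[str, int]:
    """GPU A -> main memory -> GPU B: the data crosses the PCIe bus twice."""
    return {"copies": 2, "pcie_bytes": 2 * nbytes, "peer_link_bytes": 0}


def via_peer_link(nbytes: int) -> Dict[str, int]:
    """GPUs in the same peer group copy directly over their shared link."""
    return {"copies": 1, "pcie_bytes": 0, "peer_link_bytes": nbytes}


# Hypothetical payload: one 3840x2160 frame at 8 bytes per pixel (RGBA16F).
frame_bytes = 3840 * 2160 * 8
print(via_system_memory(frame_bytes))
print(via_peer_link(frame_bytes))
```

Halving the number of copies, and keeping them off the shared PCIe bus, is what makes splitting one job across several GPUs worthwhile rather than a wash.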

Like Microsoft since the mid-1990s and Nvidia over the last decade, Apple realized that getting software developers to write and optimize code for its own proprietary graphics API would cultivate an exclusive market for its platforms, in this case, Apple's Mac, iOS, and other hardware.

Looks like Apple wants to own its graphics technology

The release of Metal 2 emphasized that Apple was looking to displace Nvidia's CUDA API, particularly in the areas of machine learning, artificial intelligence, research, and other GPGPU. Of course, Nvidia has long been working to own GPGPU, particularly in deep learning, the massive processing requirements inherent in self-driving vehicles, and other emerging technologies. By tying these types of applications to its CUDA APIs, Nvidia hopes to keep demand strong for its GPU hardware.

Nvidia's newer Maxwell, Pascal, and Turing-architecture GPUs are not supported under Mojave and Catalina, the two most recent macOS versions, which now require Metal graphics. It isn't clear whether the issue is Nvidia refusing to support Apple's Metal or Apple refusing to officially approve Nvidia's drivers. It appears to be both. Apple pretty clearly has a vested interest in expanding industry support for its Metal API, the same as Nvidia with its CUDA platform.

Currently, Apple has thoroughly won this skirmish in mobile devices, where Nvidia barely has any remaining relevance after the stinging defeat of Tegra. Apple effectively owns the market for premium mobile phones, tablets, and wearables, despite vibrant and unrestricted competitive efforts from Samsung, Huawei, Google, and others. That's almost immaterial because Nvidia's CUDA doesn't have any relevance on mobile devices anyway.

In consumer markets, Apple has also established Metal through partnerships with video game developers and engine makers including Aspyr; Blizzard Entertainment, for its WoW and SC2 engines; Feral Interactive; Unity; and Epic Games, for its Unreal Engine. A variety of other graphics-oriented Mac software developers have also embraced Metal, including Adobe, Autodesk, Pixelmator, and Serif, the maker of Affinity Photo.

Moving to Metal is attractive for developers because it delivers higher performance than OpenGL and provides access to Apple's huge installed base of affluent iOS users and its significant base of 100 million higher-value Mac users. At WWDC17, Apple cited 148,000 applications that make use of Metal directly and another 1.7 million that benefit from higher-level frameworks that have been optimized for Metal. There are more users on Android, but those users don't pay for software the way Apple's platform buyers do.

Apple has deprecated, but not yet removed, macOS support for OpenGL. There is increasingly little reason to keep maintaining it, because even OpenGL's standards body is moving to a more modern, optimized replacement in the form of Vulkan. Vulkan code can even be hosted on top of Metal using MoltenVK. The other major alternatives are DirectX, used by Microsoft in Windows and Xbox, and CUDA, which is limited to Nvidia hardware.

Apple's late arrival to the pro graphics party is pure Metal

Uptake of Apple's Metal has been weakest, and Nvidia's CUDA strongest, in higher-end markets for research, artificial intelligence, machine learning, deep learning, and other professional work. For a decade now, Nvidia has invested in efforts to create scientific modeling, medical imaging, and other specialized software and workflows dependent upon CUDA, and therefore upon its GPU hardware.

Apple hadn't introduced a new Mac Pro since it first debuted Metal, making the new Mac Pro its first-ever built exclusively for Metal, and the first Metal Mac with PCIe slots apart from the 2010 Mac Pro models, which were supported in Mojave if upgraded with a Metal GPU card. Those aging machines are no longer supported in Catalina.

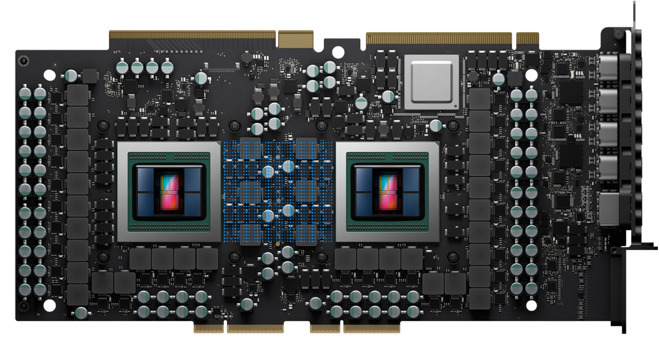

Across the last several years, Apple has been working with a variety of pro app developers, as well as AMD, to develop pro-tier Metal support. Apple's prominent focus on the graphics capabilities of Mac Pro, including the mention of AMD's Radeon Pro Vega II Duo as "the world's most powerful graphics card," pairing two GPUs together on one card connected by Infinity Fabric Link, wasn't just boasting. It was signaling potential applications of the new machine.

When Apple detailed that two of those MPX mega boards could be installed in tandem, providing "56 teraflops of graphics performance" and a combined 128GB of super-fast, dedicated GPU RAM, it was a nebulous claim to make about graphics but a powerful statement about how much raw processing power a Mac Pro could provide in the area of GPGPU capacity.
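Apple's combined figures can be broken down with simple arithmetic. Assuming, for illustration, that the totals split evenly across identical GPUs, two MPX boards each carrying a two-GPU Vega II Duo give four GPUs in all:

$$
2\ \text{cards} \times 2\ \tfrac{\text{GPUs}}{\text{card}} = 4\ \text{GPUs},\qquad
\frac{56\ \text{TFLOPS}}{4} = 14\ \text{TFLOPS per GPU},\qquad
\frac{128\ \text{GB}}{4} = 32\ \text{GB per GPU}
$$

These per-GPU numbers are derived from Apple's totals rather than quoted separately, but they show that the headline figure comes from stacking several large GPUs, which is exactly the kind of capacity GPGPU work scales across.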

Apple has also been making a strong case for machine learning on the Mac. Its new Create ML lets developers create and train models (such as object detectors, image and sound classifiers, word embeddings and transfer learning for text classification, and recommendation engines) using libraries of sample data right on their machine, without needing to rely on a model training server. ML training is one example of a non-graphical use of GPU hardware: crunching through computationally complex training sessions. It also has direct relevance to Mac app developers, building on Apple's massive installed base of users and the ecosystem of coders it sustains.
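Why training suits a GPU is easier to see in the smallest possible training loop. This Python sketch fits a line to synthetic data by batch gradient descent; it is not how Create ML works internally (Create ML handles model choice and training behind the scenes), and the data, learning rate, and epoch count are illustrative assumptions. The point is the shape of the work: the same simple arithmetic is repeated over every sample, thousands of times.

```python
from typing import List, Tuple


def train_linear(xs: List[float], ys: List[float], lr: float = 0.1,
                 epochs: int = 5000) -> Tuple[float, float]:
    """Fit y = w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Per-sample errors are independent of each other, so on a GPU
        # this step would be spread across many threads at once.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [2.0 * x + 1.0 for x in xs]  # synthetic data drawn from y = 2x + 1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))   # 2.0 1.0
```

Real models have millions of parameters and samples instead of two and five, which is why this loop moves from the CPU to GPU hardware.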

Comments from Metal developers

Along with ML, Apple has various other aspirations in what is now Nvidia's CUDA territory. In the immediate term, it has largely focused on the more easily attainable goal of getting more high-end, creative professional software to embrace Metal. It started these efforts well before it publicly unveiled the new Mac Pro. In June, it offered a list of beaming messages of support from Adobe, Avid, and others, indicating significant outreach by Apple to bring more software to the Mac, leveraging the efficiency of Metal and the processing capacity of the new Mac Pro.

Sebastien Deguy, Adobe's vice president of 3D and Immersive, stated that "we're incredibly excited about the new Mac Pro, which represents a strong commitment from Apple towards creatives working in 3D. We've already started porting the Substance line of tools, as well as Dimension, to Apple's new graphic API Metal to fully take advantage of the immense power the new Mac Pro hardware offers and empower 3D creatives in unprecedented ways."

Jules Urbach, the CEO and founder of OTOY, stated he was "incredibly excited about the all-new Mac Pro and how it will empower our users," adding that his company's Octane X "has been rewritten from the ground up in Metal for Mac Pro, and is the culmination of a long and deep collaboration with Apple's world-class engineering team.

"Mac Pro is like nothing we've seen before in a desktop system. Octane X will be leveraging this unprecedented performance to take interactive and production GPU rendering for film, TV, motion graphics and AR/VR to a whole new level. Octane X is truly a labor of love, and we can't wait to get it into the hands of our Mac customers later this year."

Note that Mac Pro not only took Apple years of engineering but also involved a "long and deep collaboration" with pro software developers like OTOY, with Metal at the center of that work. Metal is pretty clearly a big priority for Apple.

Blackmagic Design CEO Grant Petty similarly called his company's DaVinci Resolve not only "the world's most advanced color correction and online editing software for high-end film and television work" but also "the first professional software to adopt Metal."

He added that "with the new Mac Pro and Afterburner, we're seeing full-quality 8K performance in real-time with color correction and effects, something we could never dream of doing before. DaVinci Resolve running on the new Mac Pro is easily the fastest way to edit, grade and finish movies and TV shows."

Maxon CEO David McGavran similarly stated that his company is "excited to develop Redshift for Metal," adding that "we're working with Apple to bring an optimized version to the Mac Pro for the first time by the end of the year. We're also actively developing Metal support for Cinema 4D, which will provide our Mac users with accelerated workflows for the most complex content creation. The new Mac Pro graphics architecture is incredibly powerful and is the best system to run Cinema 4D." That's a lot of Metal work.

Francois Quereuil, Avid's director of Product Management, stated that "Avid's Pro Tools team is blown away by the unprecedented processing power of the new Mac Pro, and thanks to its internal expansion capabilities, up to six Pro Tools HDX cards can be installed within the system - a first for Avid's flagship audio workstation. We're now able to deliver never-before-seen performance and capabilities for audio production in a single system and deliver a platform that professional users in music and post have been eagerly awaiting."

Pixar's vice president of Software Research and Development, Guido Quaroni, stated, "we are thrilled to announce full Metal support in Hydra in an upcoming release of USD toward the end of the year. Together with this new release, the new Mac Pro will dramatically accelerate the most demanding 3D graphics workflows thanks to an excellent combination of memory, bandwidth, and computational performance. This new machine clearly shows Apple is delivering on the needs of professionals at high-end production facilities like Pixar."

Amy Bunszel, the senior vice president, Autodesk Design and Creation Products, stated "Autodesk is fully embracing the all-new Mac Pro and we are already working on optimized updates to AutoCAD, Maya, Fusion, and Flame. This level of innovation, combined with next-generation graphics APIs, such as Metal, bring extremely high graphics performance and visual fidelity to our Design, Manufacturing and Creation products and enable us to bring greater value to our customers."

Jarred Land, the president of Red Digital Cinema, said "Apple's new hardware will bring a mind-blowing level of performance to Metal-accelerated, proxy-free R3D workflows in Final Cut Pro X that editors truly have never seen before. We are very excited to bring a Metal-optimized version of R3D in September."

Foundry's chief product and customer officer Jody Madden said, "with the all-new Mac Pro, Apple delivers incredible performance for media and entertainment professionals, and we can't wait to see what our customers create with the immense power and flexibility that Mac Pro brings to artists. HDR is quickly becoming the standard for capturing and delivering high-quality content, and the Pro Display XDR will enable Nuke and Nuke Studio artists to work closer to the final image on their desktop, improving their speed and giving them the freedom to focus on the quality of their work. We look forward to updating our products to take advantage of what Mac Pro offers."

Bill Putnam Jr., the CEO of Universal Audio, stated, "the new Mac Pro is a breakthrough in recording and mixing performance. Thunderbolt 3 and the numerous PCIe slots for installing UAD plug-in co-processors pair perfectly with our Apollo X series of audio interfaces. Combined with the sheer processing power of the Mac Pro, our most demanding users will be able to track and mix the largest sessions effortlessly."

Matt Workman, the developer of Cine Tracer, said "thanks to the unbelievable power of the new Mac Pro, users of Cine Tracer will be able to work in 4K and higher resolution in real-time when visualizing their projects. And with twice as many lights to work within the same scene, combined with Unreal Engine's real-time graphics technology, artists can now load scenes that were previously too large or graphically taxing."

Kim Libreri, the CTO of Epic Games, said, "Epic's Unreal Engine on the new Mac Pro takes advantage of its incredible graphics performance to deliver amazing visual quality, and will enable workflows that were never possible before on a Mac. We can't wait to see how the new Mac Pro enhances our customers' limitless creativity in cinematic production, visualization, games and more."

Unity's vice president of Platforms, Ralph Hauwert, stated, "we're so excited for Unity creators to tap into the incredible power of the all-new Mac Pro. Our powerful and accessible real-time technology, combined with Mac Pro's massive CPU power and Metal-enabled high-end graphics performance, along with the gorgeous new Pro Display XDR, will give creators everything they need to create the next smash-hit game, augmented reality experience or award-winning animated feature."

Simonas Bastys, the lead developer at Pixelmator, stated, "the new Mac Pro is insanely fast — it's by far the fastest image editor we've ever experienced or seen. With the incredible Pro Display XDR, all-new photo editing workflows are now a reality. When editing RAW shots, users can choose to view extended dynamic detail in images, invisible on other displays, for a phenomenal viewing experience like we never imagined."

Cristin Barghiel, the vice president of Product Development at SideFX, stated, "with the new Mac Pro's incredible compute performance and amazing graphics architecture, Houdini users will be able to work faster and more efficiently, unleashing a whole new level of creativity."

Serif's managing director Ashley Hewson said, "Affinity Photo users demand the highest levels of performance, and the new, insanely powerful Mac Pro, coupled with the new discrete, multi-GPU support in Photo 1.7 allows our users to work in real-time on massive, deep-color projects. Thanks to our extensive Metal adoption, every stage of the editing process is accelerated. And as Photo scales linearly with multiple GPUs, users will see up to four-time performance gains over the iMac Pro and 20 times over typical PC hardware. It's the fastest system we've ever run on. Our Metal support also means incredible HDR support for the new Pro Display XDR."

Building on Metal into the future

Simply having a super-powerful Mac Pro model with vast expansion capability won't necessarily sell millions of the new machines. The market for high-end workstations remains relatively small. But it will allow Apple to find fresh interest in new industries and help bring more software, particularly high-end, specialized creative pro titles, to the Mac, optimized for Metal. That will benefit users of any Mac. Additional developer interest and experience in coding for Metal will also benefit iOS, Apple TV and even Apple Watch development.

It remains to be seen if Apple will keep actively investing in its Mac Pro and deliver regular upgrades that keep it up to date. However, the Apple of today is far better positioned to do this than the Apple of 2010, back when it was struggling with Xserve and desperately trying to keep Google's new Android consortium from destroying the iPhone. As I've noted since at least 2016, Apple effectively lacks any real consumer competition. It's now graduating into the high end of computing to take on new bosses.

Rather than expanding into the territory of larger and more entrenched rivals, as Apple was forced to do with Macs, iPod, iPhone, and iPad over the past two decades, the company is now setting its sights on smaller rivals with less capital and influence: Spotify, Netflix, and Nvidia. It has to, because Apple is now vastly larger and more influential than ever, and its rivals are all now relatively smaller and weaker.

One can be righteously indignant that Apple isn't subsidizing everyone else with support for their platforms, whether CUDA, Vulkan, or even Android. But such emotions won't have any bearing on the final outcome of who wins and who loses in the market for developing and commercializing the graphics technology of the future. Apple supporters in the 1990s didn't have any impact on how things turned out, no matter how well-reasoned their ideological arguments were as they typed them into the Internet.

What remains to be seen is how well Apple as a company implements its strategies, and how effectively it delivers hardware and software products that solve the needs of its customers. Big companies in the lead have fallen behind before by growing complacent.

Since 2012, Apple has proven it can incrementally iterate in software on an annual cycle with both iOS and macOS, and deploy new software rapidly across a unified installed base of users. And this year, Apple has dramatically expanded into Services, with both Apple Arcade and Apple TV+ acting as content factories that can directly benefit from this parallel push into professional production tools, generating creative work that in turn drives new and more advanced software that enriches its ecosystem.

Apple appears to be entering the 2020s as if it were an Atari, a Disney, a Pixar, a Microsoft, an Intel, an HP, a Canon, a Sony, and a Nokia all rolled into one. It should be an interesting decade ahead.

Daniel Eran Dilger

58 Comments

So, does anyone know what the connectors on the top of the Pro Vega II Duo card are for? The connectors are on the bottom of the image.

Apple’s recent FCPX release with the new Metal engine can improve performance by 20% to 40%. Yowsers. I don’t know what to think. Were prior releases of the GPU compute engine in FCP really bad? Or are the recent Metal API improvements really good?

An interesting “what if” is whether Nvidia could have delivered an x86-compatible Tegra SoC to OEMs 6 to 7 years ago. Using a VLIW architecture probably would have doomed it, but the signs are out there now that Nvidia is being pincered, and it should have soldiered on with a Tegra/x86 chip. AMD is going gangbusters on both CPU wins and GPU wins: Xbox, PlayStation. It is taking market share from Intel.

This comes to mind every time I read a Metal story.

Excellent article. I am so glad at least somebody knows and writes about the history of Apple accurately.

Although it was only mentioned in passing, I am pleased you mentioned the costs of those powerful Macs, such as the "Mac IIfx--with a starting price well above $17,000 in today's money--with the much faster and more powerful Quadra 900, priced closer to a current-day $13,000." It helps put the cost of the new Mac Pro in perspective, and hopefully helps quash the totally silly meme that Apple has priced itself out of the pro market, a meme spread by those who can't afford or justify one but secretly want one.

Game development on a Mac Pro huh...? Doubt it. That is a PC development world. Games do get ported to Mac OS sometimes, and gamers consistently complain that the performance is inferior on Mac OS (even when run on the same hardware as Windows).

More importantly: two of the major game *engines* (Unreal and Unity, not id Tech) are ported, but not necessarily the development tools. The development is still primarily Windows-based. Crytek even seemed to have abandoned the Mac porting feature for CryEngine, shortly after announcing that CryEngine (originally tied to DirectX on Windows) would be massively cross-platform.

Am I holding old info? Have these engines’ development environments and toolchains actually been ported to Mac OS?

Even if developing games on a Mac Pro would be fast enough to justify studios buying Mac Pros for development (all the positive wow statements mean nothing for Mac Pro sales), the game itself would have to be cut down massively to run on *any other Apple product* (the ones consumers can actually afford to buy).

The same thing happens to PC games, as we see when developers show off BS at E3 and then ship far inferior-looking versions of the same games... but GPU performance for desktop gaming is consistently higher on Windows than on Mac OS, and only the non-anorexic Macs were ever competitive at all (while still running Windows).

so...

Is Apple actually going to start courting gaming on Macs? How will they do that?